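Using RAID on Linux

I. Basic Concepts of RAID

RAID stands for Redundant Array of Inexpensive Disks (today more often expanded as Redundant Array of Independent Disks). It combines multiple independent physical disks in different ways to form a single virtual disk.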

II. RAID Levels

Common levels: RAID0, RAID1, RAID5

RAID0: RAID0 distributes data evenly across all disks in the array in stripes.

RAID0 characteristics:

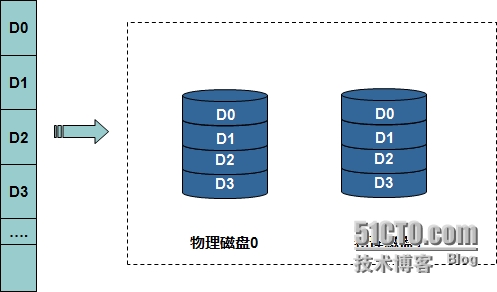
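The striping idea can be illustrated with a small sketch. It assumes plain round-robin placement over equally sized disks, and the helper name `raid0_locate` is made up for this example; a real md array also depends on the chunk size and layout.

```shell
# Map a logical chunk number to (disk index, stripe row) in an n-disk RAID0.
# Assumption: simple round-robin striping over equally sized member disks.
raid0_locate() {
    chunk=$1    # logical chunk number, starting at 0
    ndisks=$2   # number of member disks
    echo "disk=$(( chunk % ndisks )) stripe=$(( chunk / ndisks ))"
}

# Lay out the first six chunks across a hypothetical 3-disk array.
for c in 0 1 2 3 4 5; do
    printf 'chunk %d -> %s\n' "$c" "$(raid0_locate "$c" 3)"
done
```

Consecutive chunks land on different disks, which is why sequential reads and writes can be served by all members in parallel.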

RAID1: RAID1 uses mirroring for redundancy: it keeps multiple copies of the virtual disk's data, one on each member disk.

RAID1 characteristics:

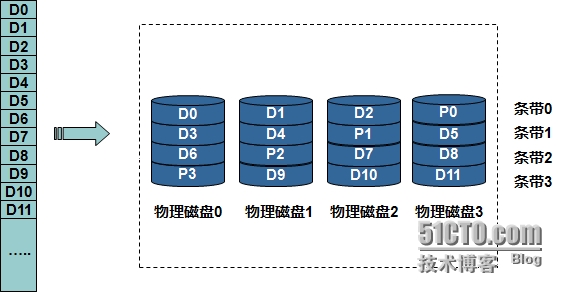

RAID5: RAID5 uses an independent-access array scheme, with the parity information distributed evenly across all disks in the array.
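To see why parity allows recovery, here is a minimal sketch of XOR parity on a single stripe. The two byte values are arbitrary illustrations, and each "chunk" is reduced to one byte for readability.

```shell
# Two data chunks in one stripe, represented here as single bytes.
d1=$(( 0xA5 ))
d2=$(( 0x3C ))

# The parity chunk is the bitwise XOR of the data chunks.
parity=$(( d1 ^ d2 ))

# If the disk holding d1 fails, XOR-ing the surviving chunks rebuilds it.
rebuilt=$(( parity ^ d2 ))

printf 'd1=0x%02X d2=0x%02X parity=0x%02X rebuilt=0x%02X\n' \
    "$d1" "$d2" "$parity" "$rebuilt"
```

The rebuilt value equals the original `d1`, which is the property RAID5 relies on when it reconstructs a failed member onto a spare.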

RAID5 characteristics:

Combining different RAID levels:

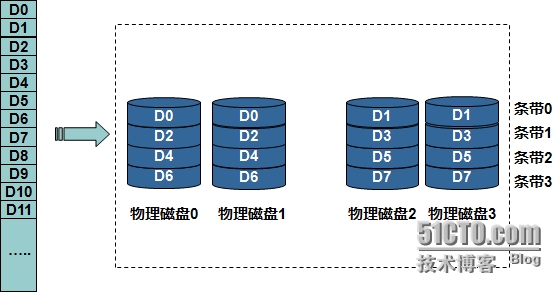

RAID10: RAID10 combines RAID1 and RAID0: mirror first, then stripe.

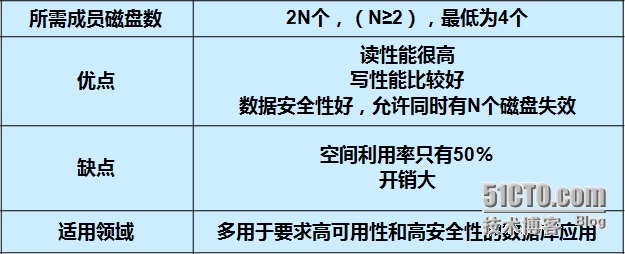

RAID10 characteristics:

RAID50:

RAID50 characteristics:

Comparison of common RAID levels
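One axis of comparison, usable capacity, can be sketched in a few lines. This assumes equally sized disks, a RAID1 that mirrors every member, and a RAID10 built from two-way mirrors; the function name `raid_usable` and the 4 x 1000 GB example are illustrative only.

```shell
# Usable capacity for N equal disks of SIZE units each.
# Assumptions: RAID1 mirrors all members; RAID10 uses two-way mirrors.
raid_usable() {
    level=$1; n=$2; size=$3
    case "$level" in
        0)  echo $(( n * size )) ;;          # striping only, no redundancy
        1)  echo "$size" ;;                  # every disk holds the same data
        5)  echo $(( (n - 1) * size )) ;;    # one disk's worth of parity
        10) echo $(( n / 2 * size )) ;;      # half the disks are mirrors
        *)  echo "unsupported level: $level" >&2; return 1 ;;
    esac
}

for lvl in 0 1 5 10; do
    printf 'RAID%-2s with 4 x 1000 GB disks: %s GB usable\n' \
        "$lvl" "$(raid_usable "$lvl" 4 1000)"
done
```

Capacity is only part of the picture; fault tolerance and read/write performance differ between levels as well.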

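III. RAID Implementations and Operating States

Software RAID

→ All RAID functions are performed by the host CPU; there is no separate controller processor or I/O chip.

Hardware RAID

→ A dedicated RAID controller processor and I/O chip handle the RAID work, so no host CPU resources are consumed.

IV. Implementing Software RAID

To implement software RAID, we use the mdadm command.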

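mdadm: builds a RAID array out of any block devices

mdadm is a mode-based command:

1. Create mode: -C

2. Manage mode

3. Monitor mode: -F

4. Grow mode: -G

5. Assemble mode: -A

-D|--detail: show detailed information about a RAID device;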

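Manage mode:

--add: add a block device

--remove: remove a block device

-f|--fail|--set-faulty: mark a block device as faulty (to simulate a failure)

-S: mdadm -S /dev/md# stops the RAID array

mdadm -D --scan >> /etc/mdadm.conf: appends the created RAID array to the configuration file so that it can be assembled later.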

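When creating the filesystem, you can use: mke2fs -j -E stride=16 -b 4096 /dev/md# to improve performance, where stride = chunk size / filesystem block size (here a 64K chunk divided by a 4K block gives 16).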
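The stride arithmetic can be worked through as follows. This is a sketch that assumes a 64K chunk (the value the array in this article reports), a 4K block size, and a 3-disk RAID5 with two data chunks per stripe. The `stripe_width` extended option is an addition not used in the article, and may not be available in older e2fsprogs releases. The command is printed, not run, and `/dev/mdX` is a placeholder.

```shell
chunk_kib=64       # md chunk size in KiB
block_bytes=4096   # filesystem block size passed to mke2fs -b
data_disks=2       # assumption: 3-disk RAID5, so 2 data chunks per stripe

# stride = chunk size / block size, expressed in filesystem blocks
stride=$(( chunk_kib * 1024 / block_bytes ))
# stripe width = stride * number of data-bearing disks
stripe_width=$(( stride * data_disks ))

# Print the command rather than run it; /dev/mdX is a placeholder device.
echo "mke2fs -j -b ${block_bytes} -E stride=${stride},stripe_width=${stripe_width} /dev/mdX"
```

If the array is created with a different chunk size via `-c`, the stride should be recomputed to match.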

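Create mode:

mdadm -C <RAID device> -l <RAID level> -n <number of active devices> -a yes|no -c <chunk size> -x <number of spare devices> <member devices>

-l: the RAID level

-n: the number of active devices; the member devices themselves are listed on the command line

-a yes|no: whether to create the device file automatically

-c: the chunk size

-x: the number of spare devices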
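As a small sanity check before running a create command, the sketch below assembles the command line and verifies that the number of devices equals active plus spare. The helper `build_create` is hypothetical and only prints the command; it does not invoke mdadm.

```shell
# Print (do not run) an mdadm create command after checking the device count.
# Usage: build_create ARRAY LEVEL ACTIVE SPARES DEVICE...
build_create() {
    array=$1; level=$2; active=$3; spares=$4
    shift 4
    if [ "$#" -ne $(( active + spares )) ]; then
        echo "expected $(( active + spares )) devices, got $#" >&2
        return 1
    fi
    echo "mdadm -C $array -a yes -l $level -n $active -x $spares $*"
}

# Device names mirror the RAID5 walkthrough in this article.
build_create /dev/md2 5 3 1 /dev/sdb1 /dev/sdb2 /dev/sdb3 /dev/sdb4
```

When the devices are listed this way, mdadm treats the last `-x` devices on the command line as spares.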

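Walkthrough (using RAID5 as an example):

First, prepare three or more block devices or partitions. They must be on different physical disks; otherwise the array is pointless.

Only the basic procedure is demonstrated here, so all partitions sit on a single disk, /dev/sdb:

Create a 2 GB RAID5 array.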

[root@localhost ~]# fdisk /dev/sdb

The number of cylinders for this disk is set to 2610.

There is nothing wrong with that, but this is larger than 1024,

and could in certain setups cause problems with:

1) software that runs at boot time (e.g., old versions of LILO)

2) booting and partitioning software from other OSs

(e.g., DOS FDISK, OS/2 FDISK)

Command (m for help): p

Disk /dev/sdb: 21.4 GB, 21474836480 bytes

255 heads, 63 sectors/track, 2610 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdb1 1 123 987966 fd Linux raid autodetect

/dev/sdb2 124 246 987997+ fd Linux raid autodetect

/dev/sdb3 247 369 987997+ fd Linux raid autodetect

/dev/sdb4 370 492 987997+ fd Linux raid autodetect

Command (m for help): q

[root@localhost ~]# partprobe /dev/sdb

[root@localhost ~]# cat /proc/partitions

major minor #blocks name

8 0 20971520 sda

8 1 305203 sda1

8 2 19615365 sda2

8 3 1044225 sda3

8 16 20971520 sdb

8 17 987966 sdb1

8 18 987997 sdb2

8 19 987997 sdb3

8 20 987997 sdb4

[root@localhost ~]#

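// The four required partitions are ready (one of them is a spare), each about 1 GB, with their type set to fd (Linux raid autodetect). RAID5 has a space utilization of (n-1)/n, so three active 1 GB members yield about 2 GB of usable space.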
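The (n-1)/n rule can be checked against the numbers mdadm reports for this array. The sketch below uses the Used Dev Size of 987840 KiB from the `mdadm -D` output later in this walkthrough; the variable names are illustrative.

```shell
# Values in KiB; dev_size is the "Used Dev Size" that mdadm -D reports.
active=3           # active members (the spare holds no data)
dev_size=987840

# One member's worth of space goes to parity.
array_size=$(( (active - 1) * dev_size ))

echo "expected array size: ${array_size} KiB"
echo "utilization: $(( (active - 1) * 100 / active ))%"
```

The computed size matches the Array Size of 1975680 KiB that `mdadm -D` reports.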

Steps:

[root@localhost ~]# mdadm -C /dev/md2 -l 5 -a yes -n 3 /dev/sdb{1,2,3} -x 1 /dev/sdb4

mdadm: Unknown keyword /dev/md3:

mdadm: Unknown keyword Preferred

mdadm: Unknown keyword Working

mdadm: /dev/sdb1 appears to be part of a raid array:

level=raid0 devices=2 ctime=Wed Feb 25 23:54:13 2015

mdadm: /dev/sdb2 appears to be part of a raid array:

level=raid0 devices=2 ctime=Wed Feb 25 23:54:13 2015

Continue creating array? y

mdadm: array /dev/md2 started.

[root@localhost ~]# mdadm -D /dev/md2

mdadm: Unknown keyword /dev/md3:

mdadm: Unknown keyword Preferred

mdadm: Unknown keyword Working

/dev/md2:

Version : 0.90

Creation Time : Sun Mar 1 21:56:37 2015

Raid Level : raid5

Array Size : 1975680 (1929.70 MiB 2023.10 MB)

Used Dev Size : 987840 (964.85 MiB 1011.55 MB)

Raid Devices : 3

Total Devices : 4

Preferred Minor : 2

Persistence : Superblock is persistent

Update Time : Sun Mar 1 21:56:50 2015

State : clean

Active Devices : 3

Working Devices : 4

Failed Devices : 0

Spare Devices : 1

Layout : left-symmetric

Chunk Size : 64K

UUID : 97957132:c644253c:b9eb8d83:a8bae7d1

Events : 0.4

Number Major Minor RaidDevice State

0 8 17 0 active sync /dev/sdb1

1 8 18 1 active sync /dev/sdb2

2 8 19 2 active sync /dev/sdb3

3 8 20 - spare /dev/sdb4

[root@localhost ~]# mke2fs -j /dev/md2 // format /dev/md2 as an ext3 filesystem

mke2fs 1.39 (29-May-2006)

Filesystem label=

OS type: Linux

Block size=4096 (log=2)

Fragment size=4096 (log=2)

247296 inodes, 493920 blocks

24696 blocks (5.00%) reserved for the super user

First data block=0

Maximum filesystem blocks=507510784

16 block groups

32768 blocks per group, 32768 fragments per group

15456 inodes per group

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912

Writing inode tables: done

Creating journal (8192 blocks): done

Writing superblocks and filesystem accounting information: done

This filesystem will be automatically checked every 37 mounts or

180 days, whichever comes first. Use tune2fs -c or -i to override.

[root@localhost ~]# mount /dev/md2 /mnt/ // mount it

[root@localhost ~]# ls -lh /mnt/ // mounted successfully

total 16K

drwx------ 2 root root 16K Mar 1 21:59 lost+found

[root@localhost mnt]# mdadm /dev/md2 -f /dev/sdb1 // simulate a failure of /dev/sdb1; the spare /dev/sdb4 takes over automatically.

mdadm: Unknown keyword /dev/md3:

mdadm: Unknown keyword Preferred

mdadm: Unknown keyword Working

mdadm: set /dev/sdb1 faulty in /dev/md2

[root@localhost mnt]# mdadm -D /dev/md2

mdadm: Unknown keyword /dev/md3:

mdadm: Unknown keyword Preferred

mdadm: Unknown keyword Working

/dev/md2:

Version : 0.90

Creation Time : Sun Mar 1 21:56:37 2015

Raid Level : raid5

Array Size : 1975680 (1929.70 MiB 2023.10 MB)

Used Dev Size : 987840 (964.85 MiB 1011.55 MB)

Raid Devices : 3

Total Devices : 4

Preferred Minor : 2

Persistence : Superblock is persistent

Update Time : Sun Mar 1 22:06:40 2015

State : clean, degraded, recovering

Active Devices : 2

Working Devices : 3

Failed Devices : 1

Spare Devices : 1

Layout : left-symmetric

Chunk Size : 64K

Rebuild Status : 31% complete

UUID : 97957132:c644253c:b9eb8d83:a8bae7d1

Events : 0.6

Number Major Minor RaidDevice State

3 8 20 0 spare rebuilding /dev/sdb4

1 8 18 1 active sync /dev/sdb2

2 8 19 2 active sync /dev/sdb3

4 8 17 - faulty spare /dev/sdb1
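For scripting, the state can be pulled out of this kind of output with a small filter. This is a sketch: the helper `md_field` is hypothetical, and the sample text is copied from the degraded-array output above so the snippet runs without an actual array. On a live system one would pipe `mdadm -D /dev/md2` into it.

```shell
# Extract the value of a "Key : value" field from mdadm -D style text on stdin.
md_field() {
    sed -n "s/^ *$1 *: *//p" | head -n 1
}

# Sample lines copied from the degraded array output shown in this article.
sample='State : clean, degraded, recovering
Active Devices : 2
Rebuild Status : 31% complete'

state=$(printf '%s\n' "$sample" | md_field 'State')
case "$state" in
    *degraded*)
        echo "array degraded: $(printf '%s\n' "$sample" | md_field 'Rebuild Status')" ;;
    *)
        echo "array healthy: $state" ;;
esac
```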

[root@localhost mnt]# mdadm -D --scan >/etc/mdadm.conf // write the configuration file

[root@localhost /]# umount /dev/md2

[root@localhost /]# mdadm -S /dev/md2

mdadm: stopped /dev/md2

[root@localhost /]# mdadm -A /dev/md2

mdadm: /dev/md2 has been started with 3 drives.

[root@localhost /]#

End of tutorial.