Deploying a highly available MySQL cluster with read/write splitting


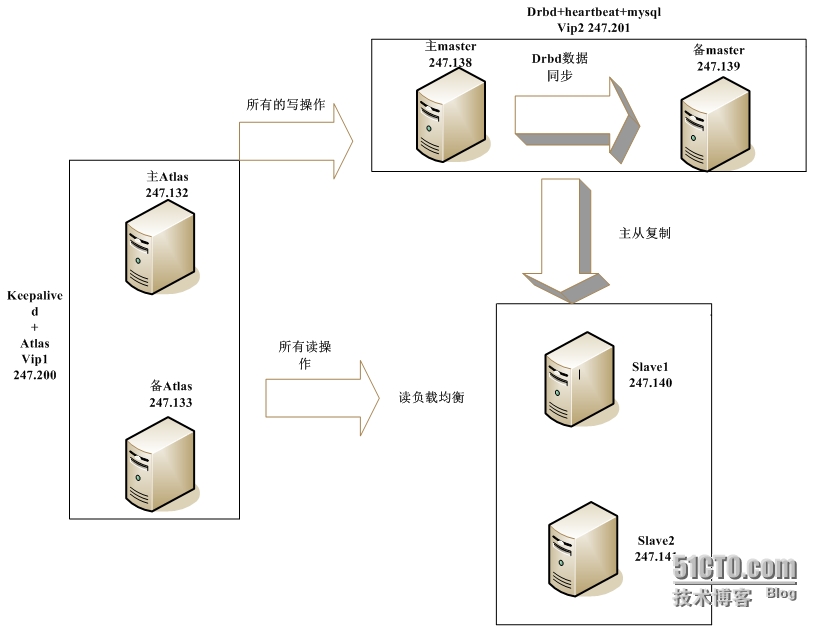

Architecture diagram:

Deploying the cluster:

Note:

##Atlas requires MySQL 5.1 or later; MySQL 5.6 is recommended here
##MySQL 5.6 download: http://pan.baidu.com/s/1bnrzpZh

Install DRBD on the primary master and the standby master:

http://732233048.blog.51cto.com/9323668/1665979

Install heartbeat and MySQL on the primary master and the standby master:

http://732233048.blog.51cto.com/9323668/1670068

Install MySQL on slave1 and slave2:

##MySQL 5.6 is built with cmake
yum -y install make gcc gcc-c++ cmake bison-devel ncurses-devel kernel-devel readline-devel openssl-devel openssl zlib zlib-devel pcre-devel perl perl-devel    #install dependencies
cd /usr/local/src/
tar -zxf mysql-5.6.22.tar.gz
cd mysql-5.6.22
mkdir -p /data/mysql/data    #data directory
cmake -DCMAKE_INSTALL_PREFIX=/usr/local/mysql -DMYSQL_DATADIR=/data/mysql/data -DSYSCONFDIR=/usr/local/mysql \
      -DWITH_MYISAM_STORAGE_ENGINE=1 -DWITH_INNOBASE_STORAGE_ENGINE=1 -DWITH_MEMORY_STORAGE_ENGINE=1 -DWITH_PARTITION_STORAGE_ENGINE=1 \
      -DMYSQL_UNIX_ADDR=/var/lib/mysql/mysql.sock -DDEFAULT_CHARSET=utf8 -DDEFAULT_COLLATION=utf8_general_ci \
      -DEXTRA_CHARSETS:STRING=utf8,gbk -DWITH_DEBUG=0
make    ##this takes a long time
make install

groupadd mysql
useradd -s /sbin/nologin -g mysql mysql
/usr/local/mysql/scripts/mysql_install_db --basedir=/usr/local/mysql --datadir=/data/mysql/data --defaults-file=/usr/local/mysql/my.cnf --user=mysql
chown -R mysql.mysql /data/mysql

##Edit the configuration file:
mv /usr/local/mysql/my.cnf /usr/local/mysql/my.cnf.old
vi /usr/local/mysql/my.cnf
[mysqld]
basedir = /usr/local/mysql
datadir = /data/mysql/data
port = 3306
socket = /var/lib/mysql/mysql.sock
pid-file = /var/lib/mysql/mysql.pid
default_storage_engine = InnoDB
expire_logs_days = 14
max_binlog_size = 1G
binlog_cache_size = 10M
max_binlog_cache_size = 20M
slow_query_log
long_query_time = 2
slow_query_log_file = /data/mysql/logs/slowquery.log
open_files_limit = 65535
innodb = FORCE
innodb_buffer_pool_size = 4G
innodb_log_file_size = 1G
query_cache_size = 0
thread_cache_size = 64
table_definition_cache = 512
table_open_cache = 512
max_connections = 1000
sort_buffer_size = 10M
max_allowed_packet = 6M
sql_mode=NO_ENGINE_SUBSTITUTION,STRICT_TRANS_TABLES
[mysqld_safe]
log-error = /data/mysql/logs/error.log
[client]
socket = /var/lib/mysql/mysql.sock
port = 3306
##The log directory and the socket/pid directory used above must exist and be writable by mysql:
mkdir -p /data/mysql/logs /var/lib/mysql
chown -R mysql.mysql /data/mysql /var/lib/mysql
##innodb_buffer_pool_size caches InnoDB indexes and data, and buffers inserted data. The default is 128M.
##On a dedicated MySQL server, 70%-80% of system memory works best.
##If the machine has little memory, lower this value (if it is set too large, mysqld fails to start).
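The 70%-80% rule can be turned into a concrete number. The small helper below reads MemTotal from /proc/meminfo-style text and takes a percentage of it. It is a sketch: the function names are hypothetical, and the 75% default is simply the midpoint of the range above, not a MySQL default.

```bash
#!/bin/bash
# Hypothetical helpers for sizing innodb_buffer_pool_size on a dedicated MySQL host.

# read_memtotal_kb: print the MemTotal value (in kB) from /proc/meminfo-style text on stdin.
read_memtotal_kb() {
    awk '/^MemTotal:/ { print $2 }'
}

# suggest_buffer_pool_mb MEMTOTAL_KB [PERCENT]
# Print PERCENT (default 75, the midpoint of 70%-80%) of MEMTOTAL_KB, in MB.
suggest_buffer_pool_mb() {
    local mem_kb=$1
    local pct=${2:-75}
    # Integer arithmetic: convert kB to MB first, then take the percentage.
    echo $(( mem_kb / 1024 * pct / 100 ))
}
```

For example, `suggest_buffer_pool_mb "$(read_memtotal_kb < /proc/meminfo)"` prints a size in MB that could be written as `innodb_buffer_pool_size = <value>M`. On a host that also runs other services, a lower percentage is safer.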

##Start MySQL:
cp -a /usr/local/mysql/support-files/mysql.server /etc/init.d/mysqld
chkconfig --add mysqld
chkconfig mysqld on
/etc/init.d/mysqld start
netstat -tlnp | grep mysql    ##check that it is running
vi /etc/profile    ##update PATH
##append at the end: export PATH=$PATH:/usr/local/mysql/bin
source /etc/profile

##Set the MySQL root password:
mysqladmin -u root password "123456"

Configure master-slave replication for slave1 and slave2:

##First, confirm which master's DRBD resource is in the Primary role##

##Primary master:
[root@dbm138 ~]# cat /proc/drbd | grep ro
version: 8.3.16 (api:88/proto:86-97)
 0: cs:Connected ro:Primary/Secondary ds:UpToDate/UpToDate C r-----
##138 is Primary

##Standby master:
[root@dbm139 ~]# cat /proc/drbd | grep ro
version: 8.3.16 (api:88/proto:86-97)
 0: cs:Connected ro:Secondary/Primary ds:UpToDate/UpToDate C r-----
##139 is Secondary

##So the DRBD resource on the primary master (138) is currently Primary##
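Checking the role by eye works, but a failover or monitoring script needs it as a value. Below is a minimal sketch that assumes the DRBD 8.3 /proc/drbd format shown above, where the part of `ro:` before the slash is the local node's role and the part after it is the peer's. The function name is hypothetical.

```bash
#!/bin/bash
# drbd_local_role: read /proc/drbd text on stdin and print the local node's role.
# In "ro:Primary/Secondary" the part before "/" is this node, the part after is the peer.
# Only the first resource is reported (this setup has a single resource, 0).
drbd_local_role() {
    sed -n 's/.*ro:\([A-Za-z]*\)\/.*/\1/p' | head -n 1
}
```

With the state shown above, `drbd_local_role < /proc/drbd` should print `Primary` on dbm138 and `Secondary` on dbm139.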

##Primary master:
##Enable the binary log:
vi /usr/local/mysql/my.cnf
##add under [mysqld]:
log-bin = /data/mysql/binlog/mysql-binlog    ##keep the binary logs in a directory of their own
mkdir /data/mysql/binlog/    ##create the log directory
chown -R mysql.mysql /data/mysql
/etc/init.d/mysqld restart    ##reload does not seem to be enough; use restart

##slave1:
vi /usr/local/mysql/my.cnf
##add under [mysqld]:
server-id = 2
/etc/init.d/mysqld restart    ##must be restart

##slave2:
vi /usr/local/mysql/my.cnf
##add under [mysqld]:
server-id = 3
/etc/init.d/mysqld restart    ##must be restart
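Replication requires every server to have a distinct, non-zero server-id. In this setup the master's is 1 (it appears later as `Master_Server_Id: 1`) and the slaves use 2 and 3. The hypothetical helper below checks a list of `host server_id` pairs for zeros and duplicates. Such a list could be gathered with `mysql -h HOST -B -N -e 'SELECT @@server_id'`.

```bash
#!/bin/bash
# check_server_ids: read "host server_id" pairs on stdin, report zero or duplicate
# server-ids, and return 0 only if every id is non-zero and unique.
check_server_ids() {
    awk '
        $2 == 0 { print "server-id is 0 on " $1; bad = 1 }
        ($2 in seen) { print "duplicate server-id " $2 " on " seen[$2] " and " $1; bad = 1 }
        { seen[$2] = $1 }
        END { exit bad }
    '
}
```

For example, `printf 'master 1\nslave1 2\nslave2 3\n' | check_server_ids` prints nothing and succeeds.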

##Primary master:
##Create a replication account (grant) for each slave:
mysql -uroot -p123456
grant replication slave on *.* to 'slave'@'192.168.247.140' identified by '123456';
grant replication slave on *.* to 'slave'@'192.168.247.141' identified by '123456';
flush privileges;

##Primary master:
##Back up the data:
mysql -uroot -p123456
flush tables with read lock;    #lock the tables (read-only)
show master status;    #the current binlog file and position; write them down
+---------------------+----------+--------------+------------------+-------------------+
| File                | Position | Binlog_Do_DB | Binlog_Ignore_DB | Executed_Gtid_Set |
+---------------------+----------+--------------+------------------+-------------------+
| mysql-binlog.000010 |      625 |              |                  |                   |
+---------------------+----------+--------------+------------------+-------------------+
1 row in set (0.03 sec)
##In a second terminal (keep the first session open, since the lock is released when it closes):
mysqldump -u root -p123456 --all-databases > /tmp/mysqldump.sql
##Back in the first terminal:
unlock tables;    #release the lock
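Copying the file name and position by hand is error-prone. The sketch below builds the `change master to` statement used on the slaves from the master's status line. It assumes the status is fetched in batch mode (`mysql -B -N -e 'show master status'`), which prints tab-separated columns without a header. The function name is hypothetical, and master_port is fixed at 3306 as in this setup.

```bash
#!/bin/bash
# make_change_master MASTER_HOST MASTER_USER MASTER_PASSWORD
# Reads one line of `mysql -B -N -e 'show master status'` output on stdin
# (tab-separated: File, Position, ...) and prints the matching
# CHANGE MASTER TO statement for the slaves. master_port is fixed at 3306.
make_change_master() {
    local host=$1 user=$2 pass=$3 file pos
    # Only the first two tab-separated columns are needed; the rest go to "_".
    IFS=$'\t' read -r file pos _ || return 1
    [ -n "$file" ] && [ -n "$pos" ] || return 1
    printf "CHANGE MASTER TO master_host='%s',master_user='%s',master_password='%s',master_log_file='%s',master_log_pos=%s,master_port=3306;\n" \
        "$host" "$user" "$pass" "$file" "$pos"
}
```

For example, `mysql -uroot -p123456 -B -N -e 'show master status' | make_change_master 192.168.247.201 slave 123456`. Run it while the read lock is still held in the other session, so that the position matches the dump.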

##Primary master:
##Copy the dump to every slave:
scp /tmp/mysqldump.sql 192.168.247.140:/tmp/
scp /tmp/mysqldump.sql 192.168.247.141:/tmp/

##slave1 and slave2:
##Import the data:
mysql -uroot -p123456 < /tmp/mysqldump.sql
##Start replication:
mysql -uroot -p123456
##Note: master_host is the masters' VIP, 192.168.247.201##
change master to master_host='192.168.247.201',master_user='slave',master_password='123456',master_log_file='mysql-binlog.000010',master_log_pos=625,master_port=3306;
start slave;    ##start the slave threads
show slave status\G    ##check the status: Slave_IO_Running and Slave_SQL_Running must both be Yes
*************************** 1. row ***************************
Slave_IO_State: Waiting for master to send event
Master_Host: 192.168.247.201
Master_User: slave
Master_Port: 3306
Connect_Retry: 60
Master_Log_File: mysql-binlog.000010
Read_Master_Log_Pos: 625
Relay_Log_File: mysql-relay-bin.000002
Relay_Log_Pos: 286
Relay_Master_Log_File: mysql-binlog.000010
Slave_IO_Running: Yes
Slave_SQL_Running: Yes
Replicate_Do_DB:
Replicate_Ignore_DB:
Replicate_Do_Table:
Replicate_Ignore_Table:
Replicate_Wild_Do_Table:
Replicate_Wild_Ignore_Table:
Last_Errno: 0
Last_Error:
Skip_Counter: 0
Exec_Master_Log_Pos: 625
Relay_Log_Space: 459
Until_Condition: None
Until_Log_File:
Until_Log_Pos: 0
Master_SSL_Allowed: No
Master_SSL_CA_File:
Master_SSL_CA_Path:
Master_SSL_Cert:
Master_SSL_Cipher:
Master_SSL_Key:
Seconds_Behind_Master: 0
Master_SSL_Verify_Server_Cert: No
Last_IO_Errno: 0
Last_IO_Error:
Last_SQL_Errno: 0
Last_SQL_Error:
Replicate_Ignore_Server_Ids:
Master_Server_Id: 1
Master_UUID: 95e83a45-668a-11e5-aa2d-000c299d90cb
Master_Info_File: /data/mysql/data/master.info
SQL_Delay: 0
SQL_Remaining_Delay: NULL
Slave_SQL_Running_State: Slave has read all relay log; waiting for the slave I/O thread to update it
Master_Retry_Count: 86400
Master_Bind:
Last_IO_Error_Timestamp:
Last_SQL_Error_Timestamp:
Master_SSL_Crl:
Master_SSL_Crlpath:
Retrieved_Gtid_Set:
Executed_Gtid_Set:
Auto_Position: 0
1 row in set (0.00 sec)
##Typing "show slave status\G;" also prints "ERROR: No query specified" after the result: \G already ends the statement, so the extra ";" sends an empty query. The message is harmless.
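The "two Yes" check can be scripted for monitoring. The sketch below assumes the `\G` output format shown above, and the function name is hypothetical.

```bash
#!/bin/bash
# slave_threads_ok: read `show slave status\G` output on stdin, print the two
# thread states, and return 0 only if Slave_IO_Running and Slave_SQL_Running are both "Yes".
slave_threads_ok() {
    awk -F': ' '
        # Anchored patterns so that Slave_SQL_Running_State is not matched.
        $1 ~ /^ *Slave_IO_Running$/  { io  = $2 }
        $1 ~ /^ *Slave_SQL_Running$/ { sql = $2 }
        END {
            print "Slave_IO_Running=" io ", Slave_SQL_Running=" sql
            exit !(io == "Yes" && sql == "Yes")
        }
    '
}
```

For example: `mysql -uroot -p123456 -e 'show slave status\G' | slave_threads_ok`.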

Replication tests:

Test 1: switch the DRBD roles on the master side (failover) and check that the slaves can still connect

##The primary master is currently Primary
##Primary master:
[root@dbm138 ~]# /etc/init.d/heartbeat restart    ##this fails over to the standby master

##slave1:
[root@localhost ~]# mysql -h 192.168.247.201 -u slave -p123456
Warning: Using a password on the command line interface can be insecure.
Welcome to the MySQL monitor. Commands end with ; or \g.
Your MySQL connection id is 4
Server version: 5.6.22-log Source distribution
Copyright (c) 2000, 2014, Oracle and/or its affiliates. All rights reserved.
Oracle is a registered trademark of Oracle Corporation and/or its affiliates. Other names may be trademarks of their respective owners.
Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.
mysql>    ##still reachable

Test 2: write on the master and check that the change replicates to the slaves

##The standby master is currently Primary
##Standby master:
[root@dbm139 ~]# mysql -p123456
Warning: Using a password on the command line interface can be insecure.
Welcome to the MySQL monitor. Commands end with ; or \g.
Your MySQL connection id is 5
Server version: 5.6.22-log Source distribution
Copyright (c) 2000, 2014, Oracle and/or its affiliates. All rights reserved.
Oracle is a registered trademark of Oracle Corporation and/or its affiliates. Other names may be trademarks of their respective owners.
Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.
mysql> create database ku1;    ##create ku1
Query OK, 1 row affected (0.05 sec)
mysql> show databases;
+--------------------+
| Database           |
+--------------------+
| information_schema |
| ku1                |
| mysql              |
| performance_schema |
| test               |
+--------------------+
5 rows in set (0.07 sec)

##slave1:
mysql -p123456
mysql> show databases;
+--------------------+
| Database           |
+--------------------+
| information_schema |
| ku1                |
| mysql              |
| performance_schema |
| test               |
+--------------------+
5 rows in set (0.06 sec)

##slave2:
mysql -p123456
mysql> show databases;
+--------------------+
| Database           |
+--------------------+
| information_schema |
| ku1                |
| mysql              |
| performance_schema |
| test               |
+--------------------+
5 rows in set (0.06 sec)

##Replication works##

Test 3: fail over the masters again, then write

##The standby master is currently Primary
##Standby master:
/etc/init.d/heartbeat restart    ##fail over again, back to the primary master

##The primary master is currently Primary
##Primary master:
[root@dbm138 ~]# mysql -p123456
Warning: Using a password on the command line interface can be insecure.
Welcome to the MySQL monitor. Commands end with ; or \g.
Your MySQL connection id is 3
Server version: 5.6.22-log Source distribution
Copyright (c) 2000, 2014, Oracle and/or its affiliates. All rights reserved.
Oracle is a registered trademark of Oracle Corporation and/or its affiliates. Other names may be trademarks of their respective owners.
Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.
mysql> show databases;    ##check that ku1 exists
+--------------------+
| Database           |
+--------------------+
| information_schema |
| ku1                |
| mysql              |
| performance_schema |
| test               |
+--------------------+
5 rows in set (0.09 sec)
mysql> create database ku2;    ##create ku2
Query OK, 1 row affected (0.06 sec)
mysql> show databases;
+--------------------+
| Database           |
+--------------------+
| information_schema |
| ku1                |
| ku2                |
| mysql              |
| performance_schema |
| test               |
+--------------------+
6 rows in set (0.00 sec)

##slave1:
mysql> show databases;
+--------------------+
| Database           |
+--------------------+
| information_schema |
| ku1                |
| ku2                |
| mysql              |
| performance_schema |
| test               |
+--------------------+
6 rows in set (0.00 sec)

##slave2:
mysql> show databases;
+--------------------+
| Database           |
+--------------------+
| information_schema |
| ku1                |
| ku2                |
| mysql              |
| performance_schema |
| test               |
+--------------------+
6 rows in set (0.00 sec)

##After the failover the VIP is still reachable and the data still replicates
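Comparing `show databases` output by eye on each slave does not scale, so the lists can be diffed instead. Below is a minimal sketch with a hypothetical helper name. It assumes each list was saved with `mysql -h HOST -u USER -pPASS -B -N -e 'show databases' > FILE`.

```bash
#!/bin/bash
# missing_databases MASTER_LIST SLAVE_LIST
# Print the databases listed in MASTER_LIST but absent from SLAVE_LIST
# (one name per line in each file). Empty output means nothing is missing.
missing_databases() {
    # comm needs sorted input; -23 keeps only lines unique to the first file.
    comm -23 <(sort "$1") <(sort "$2")
}
```

Empty output for every slave means all of the master's databases have arrived. It does not compare table contents.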

Install Atlas:

Reference: https://github.com/Qihoo360/Atlas/blob/master/README_ZH.md

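Atlas is a MySQL-protocol data middleware project developed and maintained by the infrastructure team of Qihoo 360's Web Platform Department. It is based on MySQL-Proxy 0.8.2, released by the official MySQL team, with a large number of bugs fixed and many features added. Atlas is widely used inside 360: many MySQL services have been connected to the Atlas platform, which carries billions of read and write requests per day.
Main features:
a. Read/write splitting
b. Load balancing across slaves
c. IP filtering
d. SQL statement whitelist/blacklist
e. Automatic table sharding
f. Automatic removal of failed DB servers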

First: on every database server, create an account (grant) for the Atlas hosts

Atlas uses this account and password to connect to all the database servers

##The primary master is currently Primary
##Primary master:
mysql> GRANT ALL PRIVILEGES ON *.* TO 'Atlas'@'192.168.247.132' identified by 'mysql';
Query OK, 0 rows affected (0.00 sec)
mysql> GRANT ALL PRIVILEGES ON *.* TO 'Atlas'@'192.168.247.133' identified by 'mysql';
Query OK, 0 rows affected (0.00 sec)
mysql> GRANT ALL PRIVILEGES ON *.* TO 'Atlas'@'192.168.247.200' identified by 'mysql';
Query OK, 0 rows affected (0.00 sec)
##Atlas normally opens backend connections from its host's own address (132 or 133) rather than from the VIP 200, so the real-IP grants are the ones actually used; the VIP grant is kept as a precaution

##slave1:
mysql> GRANT ALL PRIVILEGES ON *.* TO 'Atlas'@'192.168.247.132' identified by 'mysql';
Query OK, 0 rows affected (0.00 sec)
mysql> GRANT ALL PRIVILEGES ON *.* TO 'Atlas'@'192.168.247.133' identified by 'mysql';
Query OK, 0 rows affected (0.00 sec)
mysql> GRANT ALL PRIVILEGES ON *.* TO 'Atlas'@'192.168.247.200' identified by 'mysql';
Query OK, 0 rows affected (0.00 sec)

##slave2:
mysql> GRANT ALL PRIVILEGES ON *.* TO 'Atlas'@'192.168.247.132' identified by 'mysql';
Query OK, 0 rows affected (0.00 sec)
mysql> GRANT ALL PRIVILEGES ON *.* TO 'Atlas'@'192.168.247.133' identified by 'mysql';
Query OK, 0 rows affected (0.00 sec)
mysql> GRANT ALL PRIVILEGES ON *.* TO 'Atlas'@'192.168.247.200' identified by 'mysql';
Query OK, 0 rows affected (0.00 sec)
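The same three grants are repeated on every database server. The sketch below is a hypothetical helper that prints them for any list of hosts, so they can be piped into the mysql client on each server.

```bash
#!/bin/bash
# atlas_grants USER PASSWORD HOST...
# Print one GRANT ALL statement per HOST for USER identified by PASSWORD.
atlas_grants() {
    local user=$1 pass=$2 host
    shift 2
    for host in "$@"; do
        printf "GRANT ALL PRIVILEGES ON *.* TO '%s'@'%s' IDENTIFIED BY '%s';\n" "$user" "$host" "$pass"
    done
}
```

For example, on each server: `atlas_grants Atlas mysql 192.168.247.132 192.168.247.133 192.168.247.200 | mysql -uroot -p123456`.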

Install Atlas (Atlas master and Atlas backup):

Download: https://github.com/Qihoo360/Atlas/releases

cd /usr/local/src/
rpm -ivh Atlas-2.2.1.el6.x86_64.rpm
[root@localhost src]# rpm -ql Atlas | grep conf
/usr/local/mysql-proxy/conf/test.cnf
/usr/local/mysql-proxy/lib/mysql-proxy/lua/proxy/auto-config.lua
##Atlas configuration file: /usr/local/mysql-proxy/conf/test.cnf

##Encrypt the Atlas password "mysql" (the account granted on all the databases above):
/usr/local/mysql-proxy/bin/encrypt mysql
TWbz0dlu35U=    ##the encrypted password
##Edit the configuration file:
mv /usr/local/mysql-proxy/conf/test.cnf /usr/local/mysql-proxy/conf/test.cnf.old
vi /usr/local/mysql-proxy/conf/test.cnf
##Annotated version, for reference:
[mysql-proxy]
plugins = admin,proxy    #default plugins, leave unchanged
admin-username=admin    #Atlas admin user
admin-password=admin    #Atlas admin password
admin-lua-script = /usr/local/mysql-proxy/lib/mysql-proxy/lua/admin.lua
proxy-backend-addresses = 192.168.247.201:3306    #IP and port of the master; here the VIP 201
proxy-read-only-backend-addresses = 192.168.247.140:3306,192.168.247.141:3306    #IPs and ports of the slaves
#proxy-read-only-backend-addresses = 192.168.247.140:3306@1,192.168.247.141:3306@2    ##with weights 1 and 2
pwds = Atlas:TWbz0dlu35U=, root:08xYdWX+7MBR/g==    #Atlas users and their encrypted passwords; separate multiple entries with commas
daemon = true    #how Atlas runs; set to true in production
keepalive = true    #when true, Atlas starts two processes, a monitor and a worker, and the monitor restarts the worker if it exits unexpectedly; when false only the worker runs. Use false for development and debugging, true in production
event-threads = 4    #number of worker threads; strongly affects Atlas performance; use 2 to 4 times the number of CPU cores
log-level = message    #log level: one of message, warning, critical, error, debug
log-path = /usr/local/mysql-proxy/log    #log directory
instance = test    #instance name
proxy-address = 0.0.0.0:3306    #IP and port of Atlas's working interface, which clients connect to
admin-address = 0.0.0.0:2345    #IP and port of Atlas's admin interface
charset = utf8    #default character set
##Note: do not put Chinese comments in the Atlas configuration file, or Atlas will have problems starting##
##The file actually used:
[mysql-proxy]
plugins = admin,proxy
admin-username=admin
admin-password=admin
admin-lua-script = /usr/local/mysql-proxy/lib/mysql-proxy/lua/admin.lua
proxy-backend-addresses = 192.168.247.201:3306
proxy-read-only-backend-addresses = 192.168.247.140:3306,192.168.247.141:3306
pwds = Atlas:TWbz0dlu35U=
daemon = true
keepalive = true
event-threads = 4
log-level = message
log-path = /usr/local/mysql-proxy/log
instance = test
proxy-address = 0.0.0.0:3306
admin-address = 0.0.0.0:2345
charset = utf8
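Because of the warning about Chinese comments, it can help to scan the final test.cnf for non-ASCII bytes before starting Atlas. The sketch below runs grep in the C locale, where any byte outside printable ASCII and whitespace counts as suspect. The function name is hypothetical.

```bash
#!/bin/bash
# atlas_conf_ascii_check FILE
# Print (with line numbers) every line of FILE containing bytes that are neither
# printable ASCII nor whitespace, e.g. UTF-8 Chinese comments.
# Returns 0 if the file is clean, 1 if such lines exist, 2 if FILE is unreadable.
atlas_conf_ascii_check() {
    [ -r "$1" ] || return 2
    # In the C locale every byte is a character, so bytes >= 0x80 fall outside
    # [:print:] and [:space:] and are matched by the negated class.
    if LC_ALL=C grep -n '[^[:print:][:space:]]' "$1"; then
        return 1
    fi
    return 0
}
```

For example: `atlas_conf_ascii_check /usr/local/mysql-proxy/conf/test.cnf && /usr/local/mysql-proxy/bin/mysql-proxyd test start`.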

Start Atlas (Atlas master and Atlas backup):

[root@localhost src]# /usr/local/mysql-proxy/bin/mysql-proxyd test start
OK: MySQL-Proxy of test is started
[root@localhost src]# netstat -tlnp | grep 3306
tcp 0 0 0.0.0.0:3306 0.0.0.0:* LISTEN 1594/mysql-proxy
[root@localhost src]# netstat -tlnp | grep 2345
tcp 0 0 0.0.0.0:2345 0.0.0.0:* LISTEN 1594/mysql-proxy
##Stop Atlas: /usr/local/mysql-proxy/bin/mysql-proxyd test stop
##Restart Atlas: /usr/local/mysql-proxy/bin/mysql-proxyd test restart
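The `netstat | grep` checks above can be made exact, so that a port such as 33060 does not match 3306 by accident. The sketch below assumes the column layout of net-tools `netstat -tlnp` shown above: local address in column 4 and state in column 6. The function name is hypothetical.

```bash
#!/bin/bash
# is_listening PORT: read `netstat -tlnp` output on stdin and return 0 if some
# socket is in LISTEN state on exactly PORT (on any local address), else 1.
is_listening() {
    awk -v port="$1" '
        $6 == "LISTEN" {
            # Local address is "IP:PORT"; take the part after the last colon
            # so IPv6 addresses such as ":::3306" work too.
            n = split($4, a, ":")
            if (a[n] == port) found = 1
        }
        END { exit !found }
    '
}
```

For example: `netstat -tlnp | is_listening 3306 && netstat -tlnp | is_listening 2345 && echo "Atlas is listening"`.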

##Create an init script:

vi /etc/init.d/atlas

#!/bin/sh
#
# atlas: Atlas Daemon
#
# chkconfig: - 90 25
# description: Atlas Daemon
#
ATLAS="/usr/local/mysql-proxy/bin/mysql-proxyd"
[ -f "$ATLAS" ] || exit 1
start()
{
    echo -n $"Starting atlas: "
    "$ATLAS" test start
    echo
}
stop()
{
    echo -n $"Shutting down atlas: "
    "$ATLAS" test stop
    echo
}
# See how we were called.
case "$1" in
    start)
        start
        ;;
    stop)
        stop
        ;;
    restart)
        stop
        sleep 3
        start
        ;;
    *)
        echo $"Usage: $0 {start|stop|restart}"
        exit 1
esac
exit 0

chmod +x /etc/init.d/atlas

chkconfig atlas on

Manage Atlas (Atlas master):

##Any Atlas node will do; the Atlas master is used here##

##Use any database server as the client, e.g. slave1
##slave1:
mysql -h192.168.247.132 -P2345 -uadmin -padmin    ##log in to the Atlas master's admin interface (IP 132, admin port 2345, user admin)
mysql> select * from help;    ##show the help
+----------------------------+---------------------------------------------------------+
| command                    | description                                             |
+----------------------------+---------------------------------------------------------+
| SELECT * FROM help         | shows this help                                         |
| SELECT * FROM backends     | lists the backends and their state                      |
| SET OFFLINE $backend_id    | offline backend server, $backend_id is backend_ndx's id |
| SET ONLINE $backend_id     | online backend server, ...                              |
| ADD MASTER $backend        | example: "add master 127.0.0.1:3306", ...               |
| ADD SLAVE $backend         | example: "add slave 127.0.0.1:3306", ...                |
| REMOVE BACKEND $backend_id | example: "remove backend 1", ...                        |
| SELECT * FROM clients      | lists the clients                                       |
| ADD CLIENT $client         | example: "add client 192.168.1.2", ...                  |
| REMOVE CLIENT $client      | example: "remove client 192.168.1.2", ...               |
| SELECT * FROM pwds         | lists the pwds                                          |
| ADD PWD $pwd               | example: "add pwd user:raw_password", ...               |
| ADD ENPWD $pwd             | example: "add enpwd user:encrypted_password", ...       |
| REMOVE PWD $pwd            | example: "remove pwd user", ...                         |
| SAVE CONFIG                | save the backends to config file                        |
| SELECT VERSION             | display the version of Atlas                            |
+----------------------------+---------------------------------------------------------+
16 rows in set (0.00 sec)
mysql> SELECT * FROM backends;    ##list the read and write backends (IP and port)
+-------------+----------------------+-------+------+
| backend_ndx | address              | state | type |
+-------------+----------------------+-------+------+
| 1           | 192.168.247.201:3306 | up    | rw   |
| 2           | 192.168.247.140:3306 | up    | ro   |
| 3           | 192.168.247.141:3306 | up    | ro   |
+-------------+----------------------+-------+------+
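For routine checks, the backends table can be scanned for entries that are not `up`. The sketch below parses the bordered table format shown above and treats every state other than `up` as a problem. The function name is hypothetical.

```bash
#!/bin/bash
# backends_down: read the bordered table printed by Atlas's admin command
# `SELECT * FROM backends` on stdin, print "ndx address state" for every backend
# whose state is not "up", and return 1 if there is at least one such backend.
backends_down() {
    awk -F'|' '
        NF >= 5 {
            ndx = $2; addr = $3; state = $4
            # Strip the padding spaces around each cell.
            gsub(/ /, "", ndx); gsub(/ /, "", addr); gsub(/ /, "", state)
            # Data rows have a numeric backend_ndx; this skips the header row.
            if (ndx ~ /^[0-9]+$/ && state != "up") {
                print ndx, addr, state
                bad = 1
            }
        }
        END { exit bad }
    '
}
```

For example: `mysql -h192.168.247.132 -P2345 -uadmin -padmin -t -e 'SELECT * FROM backends' | backends_down`. The `-t` option makes the client keep the bordered table format even when its output is piped.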

Use Atlas as a proxy to work with all the databases:

##Again the Atlas master and slave1 are used for the test##
##slave1:
mysql -h192.168.247.132 -P3306 -uAtlas -pmysql    ##Atlas master IP 132, proxy port 3306, user Atlas
mysql> SHOW VARIABLES LIKE 'server_id';
+---------------+-------+
| Variable_name | Value |
+---------------+-------+
| server_id     | 3     |
+---------------+-------+
1 row in set (0.01 sec)
mysql> SHOW VARIABLES LIKE 'server_id';
+---------------+-------+
| Variable_name | Value |
+---------------+-------+
| server_id     | 2     |
+---------------+-------+
1 row in set (0.01 sec)
mysql> SHOW VARIABLES LIKE 'server_id';
+---------------+-------+
| Variable_name | Value |
+---------------+-------+
| server_id     | 3     |
+---------------+-------+
1 row in set (0.00 sec)
mysql> SHOW VARIABLES LIKE 'server_id';
+---------------+-------+
| Variable_name | Value |
+---------------+-------+
| server_id     | 2     |
+---------------+-------+
1 row in set (0.00 sec)
##Repeating SHOW VARIABLES LIKE 'server_id'; is answered by the read-only databases in turn (round-robin), so each one's server-id comes back
mysql> create database ku3;
Query OK, 1 row affected (0.01 sec)
mysql> show databases;
+--------------------+
| Database           |
+--------------------+
| information_schema |
| ku1                |
| ku2                |
| ku3                |
| mysql              |
| performance_schema |
| test               |
+--------------------+
7 rows in set (0.00 sec)
##Writes work too
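To see the read load balancing over more than four queries, the `server_id` answers can be counted. The sketch below assumes the query is sent several times over one connection in batch mode (`-B -N`), which prints `server_id<TAB>N` per query. The helper name is hypothetical. The exact distribution depends on Atlas's balancing and on any weights configured.

```bash
#!/bin/bash
# tally_ids: read lines whose last field is a server_id (e.g. "server_id<TAB>3")
# on stdin and print "server_id: count" for each distinct id, ordered by id.
tally_ids() {
    awk '{ print $NF }' | sort -n | uniq -c | awk '{ print $2 ": " $1 }'
}

# Usage (sends the same query 6 times over one Atlas connection):
#   printf "SHOW VARIABLES LIKE 'server_id';\n%.0s" 1 2 3 4 5 6 |
#       mysql -h192.168.247.132 -P3306 -uAtlas -pmysql -B -N | tally_ids
```

With two equally weighted slaves, roughly equal counts for ids 2 and 3 would be expected.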

Install keepalived to make Atlas highly available:

Reference: http://blog.csdn.net/jibcy/article/details/7826158

http://bbs.nanjimao.com/thread-855-1-1.ht

(Atlas master, Atlas backup)

##Install dependencies:
yum -y install make gcc gcc-c++ bison-devel ncurses-devel kernel-devel readline-devel pcre-devel openssl-devel openssl zlib zlib-devel perl perl-devel
##Install keepalived:
cd /usr/local/src/
wget http://www.keepalived.org/software/keepalived-1.2.15.tar.gz
tar -zxf keepalived-1.2.15.tar.gz
cd keepalived-1.2.15
./configure --prefix=/usr/local/keepalived
make
make install
##Copy the files into place:
cp -a /usr/local/keepalived/etc/rc.d/init.d/keepalived /etc/init.d/
cp -a /usr/local/keepalived/etc/sysconfig/keepalived /etc/sysconfig/
mkdir /etc/keepalived/
cp -a /usr/local/keepalived/etc/keepalived/keepalived.conf /etc/keepalived/
cp -a /usr/local/keepalived/sbin/keepalived /usr/sbin/
##Note: /etc/sysconfig/keepalived and /etc/keepalived/keepalived.conf must be at exactly these paths,
##because the init script /etc/init.d/keepalived reads /etc/sysconfig/keepalived, and keepalived loads /etc/keepalived/keepalived.conf by default

(Atlas master)

##Edit the configuration:

mv /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.old

vi /etc/keepalived/keepalived.conf

! Configuration File for keepalived
global_defs {
    notification_email {
        732233048@qq.com
    }
    notification_email_from root@localhost
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id Atlas_ha
}
vrrp_instance VI_1 {
    # keepalived keywords are upper-case (lower-case "master" is ignored, which is why the log
    # below shows "Want State = BACKUP"); nopreempt only works with an initial state of BACKUP
    state BACKUP
    interface eth0
    virtual_router_id 51
    priority 150
    advert_int 1
    nopreempt
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.247.200
    }
}
virtual_server 192.168.247.200 3306 {
    delay_loop 6
    #lb_algo wrr
    #lb_kind DR
    #persistence_timeout 50
    protocol TCP
    real_server 192.168.247.132 3306 {
        #weight 3
        notify_down /etc/keepalived/keepalived_monitor.sh
        TCP_CHECK {
            connect_timeout 10
            nb_get_retry 3
            delay_before_retry 3
            connect_port 3306
        }
    }
}

(Atlas backup)

##Edit the configuration:

mv /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.old

vi /etc/keepalived/keepalived.conf

! Configuration File for keepalived
global_defs {
    notification_email {
        732233048@qq.com
    }
    notification_email_from root@localhost
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id Atlas_ha
}
vrrp_instance VI_1 {
    # keepalived keywords are upper-case
    state BACKUP
    interface eth0
    virtual_router_id 51
    priority 100
    advert_int 1
    #nopreempt
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.247.200
    }
}
virtual_server 192.168.247.200 3306 {
    delay_loop 6
    #lb_algo wrr
    #lb_kind DR
    #persistence_timeout 50
    protocol TCP
    real_server 192.168.247.133 3306 {
        #weight 3
        notify_down /etc/keepalived/keepalived_monitor.sh
        TCP_CHECK {
            connect_timeout 10
            nb_get_retry 3
            delay_before_retry 3
            connect_port 3306
        }
    }
}
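The two configurations differ only in the real_server address, the priority and nopreempt. Below is a rough model of how VRRP picks the master at startup: the highest priority wins, and on a tie the higher primary IP address wins. It deliberately ignores timers, the current master and nopreempt, so it only describes a cold start. With both nodes up, 132 (priority 150) takes the VIP. Because nopreempt is set on 132, 132 does not take the VIP back from 133 after it recovers. The function name is hypothetical.

```bash
#!/bin/bash
# vrrp_winner: read "name priority ip" lines on stdin and print the node that a
# simplified VRRP election would make master: highest priority first, and on a
# tie the higher primary IP address. Timers, preemption and the current master
# are not modelled.
vrrp_winner() {
    awk '
        {
            p = $2 + 0
            split($3, o, ".")
            # Convert the dotted IPv4 address to one number for comparison.
            ip = ((o[1] * 256 + o[2]) * 256 + o[3]) * 256 + o[4]
            if (NR == 1 || p > bestp || (p == bestp && ip > bestip)) {
                best = $1; bestp = p; bestip = ip
            }
        }
        END { print best }
    '
}
```

For example, `printf 'atlas_master 150 192.168.247.132\natlas_backup 100 192.168.247.133\n' | vrrp_winner` prints `atlas_master`.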

(Atlas master, Atlas backup)

##Create the /etc/keepalived/keepalived_monitor.sh script:
vi /etc/keepalived/keepalived_monitor.sh
#!/bin/bash
# check Atlas server status: if nothing listens on 0.0.0.0:3306, try to start Atlas;
# if it still does not listen, stop keepalived so that the VIP moves to the other node
Atlas_status=$(netstat -tlnp | grep -F '0.0.0.0:3306' | grep -c LISTEN)
if [ "$Atlas_status" -eq 0 ]; then
    /etc/init.d/atlas start
    sleep 1
    Atlas_status=$(netstat -tlnp | grep -F '0.0.0.0:3306' | grep -c LISTEN)
    if [ "$Atlas_status" -eq 0 ]; then
        /etc/init.d/keepalived stop
    fi
fi

chmod 755 /etc/keepalived/keepalived_monitor.sh

(Atlas master, Atlas backup)

##Send keepalived's log to its own file##
Reference: http://chenwenming.blog.51cto.com/327092/745316
Note: from CentOS 6.3 on, syslog is called rsyslog; its configuration file is /etc/rsyslog.conf by default

##Edit /etc/sysconfig/keepalived:
vi /etc/sysconfig/keepalived
# Options for keepalived. See `keepalived --help' output and keepalived(8) and
# keepalived.conf(5) man pages for a list of all options. Here are the most
# common ones :
#
# --vrrp -P Only run with VRRP subsystem.
# --check -C Only run with Health-checker subsystem.
# --dont-release-vrrp -V Dont remove VRRP VIPs & VROUTEs on daemon stop.
# --dont-release-ipvs -I Dont remove IPVS topology on daemon stop.
# --dump-conf -d Dump the configuration data.
# --log-detail -D Detailed log messages.
# --log-facility -S 0-7 Set local syslog facility (default=LOG_DAEMON)
#
#KEEPALIVED_OPTIONS="-D"
##add this line at the end (-S 0 sends keepalived's messages to syslog facility local0):
KEEPALIVED_OPTIONS="-D -d -S 0"
##Edit /etc/rsyslog.conf:
vi /etc/rsyslog.conf
##add this line at the end, so that facility local0 is written to its own file:
local0.* /var/log/keepalived.log
/etc/init.d/rsyslog restart
Shutting down system logger: [ OK ]
Starting system logger: [ OK ]

(Atlas master)

##Start keepalived:
/etc/init.d/keepalived start

Sep 29 10:58:12 localhost Keepalived[3544]: Starting Keepalived v1.2.15 (09/29,2015)
Sep 29 10:58:12 localhost Keepalived[3545]: Starting Healthcheck child process, pid=3547
Sep 29 10:58:12 localhost Keepalived[3545]: Starting VRRP child process, pid=3548
Sep 29 10:58:12 localhost Keepalived_vrrp[3548]: Netlink reflector reports IP 192.168.247.132 added
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: Initializing ipvs 2.6
Sep 29 10:58:12 localhost Keepalived_vrrp[3548]: Netlink reflector reports IP fe80::20c:29ff:fe5c:722c added
Sep 29 10:58:12 localhost Keepalived_vrrp[3548]: Registering Kernel netlink reflector
Sep 29 10:58:12 localhost Keepalived_vrrp[3548]: Registering Kernel netlink command channel
Sep 29 10:58:12 localhost Keepalived_vrrp[3548]: Registering gratuitous ARP shared channel
Sep 29 10:58:12 localhost Keepalived_vrrp[3548]: Opening file '/etc/keepalived/keepalived.conf'.
Sep 29 10:58:12 localhost Keepalived_vrrp[3548]: Configuration is using : 63286 Bytes
Sep 29 10:58:12 localhost Keepalived_vrrp[3548]: ------< Global definitions >------
Sep 29 10:58:12 localhost Keepalived_vrrp[3548]: Router ID = Atlas_ha
Sep 29 10:58:12 localhost Keepalived_vrrp[3548]: Smtp server = 127.0.0.1
Sep 29 10:58:12 localhost Keepalived_vrrp[3548]: Smtp server connection timeout = 30
Sep 29 10:58:12 localhost Keepalived_vrrp[3548]: Email notification from = root@localhost
Sep 29 10:58:12 localhost Keepalived_vrrp[3548]: Email notification = 732233048@qq.com
Sep 29 10:58:12 localhost Keepalived_vrrp[3548]: VRRP IPv4 mcast group = 224.0.0.18
Sep 29 10:58:12 localhost Keepalived_vrrp[3548]: VRRP IPv6 mcast group = 224.0.0.18
Sep 29 10:58:12 localhost Keepalived_vrrp[3548]: ------< VRRP Topology >------
Sep 29 10:58:12 localhost Keepalived_vrrp[3548]: VRRP Instance = VI_1
Sep 29 10:58:12 localhost Keepalived_vrrp[3548]: Want State = BACKUP
Sep 29 10:58:12 localhost Keepalived_vrrp[3548]: Runing on device = eth0
Sep 29 10:58:12 localhost Keepalived_vrrp[3548]: Gratuitous ARP repeat = 5
Sep 29 10:58:12 localhost Keepalived_vrrp[3548]: Gratuitous ARP refresh repeat = 1
Sep 29 10:58:12 localhost Keepalived_vrrp[3548]: Virtual Router ID = 51
Sep 29 10:58:12 localhost Keepalived_vrrp[3548]: Priority = 150
Sep 29 10:58:12 localhost Keepalived_vrrp[3548]: Advert interval = 1sec
Sep 29 10:58:12 localhost Keepalived_vrrp[3548]: Preempt disabled
Sep 29 10:58:12 localhost Keepalived_vrrp[3548]: Authentication type = SIMPLE_PASSWORD
Sep 29 10:58:12 localhost Keepalived_vrrp[3548]: Password = 1111
Sep 29 10:58:12 localhost Keepalived_vrrp[3548]: Virtual IP = 1
Sep 29 10:58:12 localhost Keepalived_vrrp[3548]: 192.168.247.200/32 dev eth0 scope global
Sep 29 10:58:12 localhost Keepalived_vrrp[3548]: Using LinkWatch kernel netlink reflector...
Sep 29 10:58:12 localhost Keepalived_vrrp[3548]: VRRP_Instance(VI_1) Entering BACKUP STATE
Sep 29 10:58:12 localhost Keepalived_vrrp[3548]: VRRP sockpool: [ifindex(2), proto(112), unicast(0), fd(10,11)]
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: Netlink reflector reports IP 192.168.247.132 added
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: Netlink reflector reports IP fe80::20c:29ff:fe5c:722c added
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: Registering Kernel netlink reflector
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: Registering Kernel netlink command channel
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: Opening file '/etc/keepalived/keepalived.conf'.
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: Configuration is using : 11723 Bytes
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: IPVS: Scheduler or persistence engine not found
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: IPVS: No such process
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: ------< Global definitions >------
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: Router ID = Atlas_ha
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: Smtp server = 127.0.0.1
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: Smtp server connection timeout = 30
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: Email notification from = root@localhost
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: Email notification = 732233048@qq.com
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: VRRP IPv4 mcast group = 224.0.0.18
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: VRRP IPv6 mcast group = 224.0.0.18
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: ------< SSL definitions >------
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: Using autogen SSL context
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: ------< LVS Topology >------
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: System is compiled with LVS v1.2.1
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: VIP = 192.168.247.200, VPORT = 3306
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: delay_loop = 6, lb_algo =
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: protocol = TCP
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: alpha is OFF, omega is OFF
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: quorum = 1, hysteresis = 0
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: lb_kind = NAT
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: RIP = 192.168.247.132, RPORT = 3306, WEIGHT = 1
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: -> Notify script DOWN = /etc/keepalived/keepalived_monitor.sh
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: ------< Health checkers >------
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: [192.168.247.132]:3306
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: Keepalive method = TCP_CHECK
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: Connection dest = [192.168.247.132]:3306
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: Connection timeout = 10
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: Using LinkWatch kernel netlink reflector...
Sep 29 10:58:12 localhost Keepalived_healthcheckers[3547]: Activating healthchecker for service [192.168.247.132]:3306
Sep 29 10:58:15 localhost Keepalived_vrrp[3548]: VRRP_Instance(VI_1) Transition to MASTER STATE
Sep 29 10:58:16 localhost Keepalived_vrrp[3548]: VRRP_Instance(VI_1) Entering MASTER STATE
Sep 29 10:58:16 localhost Keepalived_vrrp[3548]: VRRP_Instance(VI_1) setting protocol VIPs.
Sep 29 10:58:16 localhost Keepalived_vrrp[3548]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.247.200
Sep 29 10:58:16 localhost Keepalived_healthcheckers[3547]: Netlink reflector reports IP 192.168.247.200 added
Sep 29 10:58:21 localhost Keepalived_vrrp[3548]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.247.200
##It enters the MASTER state
##binds the VIP 200
##health-checks 132
##Enable start at boot:
chkconfig keepalived on

(Atlas backup)

##Start keepalived:
/etc/init.d/keepalived start

Sep 29 11:01:55 localhost Keepalived[3274]: Starting Keepalived v1.2.15 (09/29,2015)
Sep 29 11:01:55 localhost Keepalived[3275]: Starting Healthcheck child process, pid=3277
Sep 29 11:01:55 localhost Keepalived[3275]: Starting VRRP child process, pid=3278
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: Initializing ipvs 2.6
Sep 29 11:01:55 localhost Keepalived_vrrp[3278]: Netlink reflector reports IP 192.168.247.133 added
Sep 29 11:01:55 localhost Keepalived_vrrp[3278]: Netlink reflector reports IP fe80::20c:29ff:fe01:3824 added
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: Netlink reflector reports IP 192.168.247.133 added
Sep 29 11:01:55 localhost Keepalived_vrrp[3278]: Registering Kernel netlink reflector
Sep 29 11:01:55 localhost Keepalived_vrrp[3278]: Registering Kernel netlink command channel
Sep 29 11:01:55 localhost Keepalived_vrrp[3278]: Registering gratuitous ARP shared channel
Sep 29 11:01:55 localhost Keepalived_vrrp[3278]: Opening file '/etc/keepalived/keepalived.conf'.
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: Netlink reflector reports IP fe80::20c:29ff:fe01:3824 added
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: Registering Kernel netlink reflector
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: Registering Kernel netlink command channel
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: Opening file '/etc/keepalived/keepalived.conf'.
Sep 29 11:01:55 localhost Keepalived_vrrp[3278]: Configuration is using : 63266 Bytes
Sep 29 11:01:55 localhost Keepalived_vrrp[3278]: ------< Global definitions >------
Sep 29 11:01:55 localhost Keepalived_vrrp[3278]: Router ID = Atlas_ha
Sep 29 11:01:55 localhost Keepalived_vrrp[3278]: Smtp server = 127.0.0.1
Sep 29 11:01:55 localhost Keepalived_vrrp[3278]: Smtp server connection timeout = 30
Sep 29 11:01:55 localhost Keepalived_vrrp[3278]: Email notification from = root@localhost
Sep 29 11:01:55 localhost Keepalived_vrrp[3278]: Email notification = 732233048@qq.com
Sep 29 11:01:55 localhost Keepalived_vrrp[3278]: VRRP IPv4 mcast group = 224.0.0.18
Sep 29 11:01:55 localhost Keepalived_vrrp[3278]: VRRP IPv6 mcast group = 224.0.0.18
Sep 29 11:01:55 localhost Keepalived_vrrp[3278]: ------< VRRP Topology >------
Sep 29 11:01:55 localhost Keepalived_vrrp[3278]: VRRP Instance = VI_1
Sep 29 11:01:55 localhost Keepalived_vrrp[3278]: Want State = BACKUP
Sep 29 11:01:55 localhost Keepalived_vrrp[3278]: Runing on device = eth0
Sep 29 11:01:55 localhost Keepalived_vrrp[3278]: Gratuitous ARP repeat = 5
Sep 29 11:01:55 localhost Keepalived_vrrp[3278]: Gratuitous ARP refresh repeat = 1
Sep 29 11:01:55 localhost Keepalived_vrrp[3278]: Virtual Router ID = 51
Sep 29 11:01:55 localhost Keepalived_vrrp[3278]: Priority = 100
Sep 29 11:01:55 localhost Keepalived_vrrp[3278]: Advert interval = 1sec
Sep 29 11:01:55 localhost Keepalived_vrrp[3278]: Authentication type = SIMPLE_PASSWORD
Sep 29 11:01:55 localhost Keepalived_vrrp[3278]: Password = 1111
Sep 29 11:01:55 localhost Keepalived_vrrp[3278]: Virtual IP = 1
Sep 29 11:01:55 localhost Keepalived_vrrp[3278]: 192.168.247.200/32 dev eth0 scope global
Sep 29 11:01:55 localhost Keepalived_vrrp[3278]: Using LinkWatch kernel netlink reflector...
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: Configuration is using : 11703 Bytes
Sep 29 11:01:55 localhost Keepalived_vrrp[3278]: VRRP_Instance(VI_1) Entering BACKUP STATE
Sep 29 11:01:55 localhost Keepalived_vrrp[3278]: VRRP sockpool: [ifindex(2), proto(112), unicast(0), fd(10,11)]
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: IPVS: Scheduler or persistence engine not found
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: IPVS: No such process
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: ------< Global definitions >------
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: Router ID = Atlas_ha
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: Smtp server = 127.0.0.1
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: Smtp server connection timeout = 30
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: Email notification from = root@localhost
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: Email notification = 732233048@qq.com
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: VRRP IPv4 mcast group = 224.0.0.18
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: VRRP IPv6 mcast group = 224.0.0.18
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: ------< SSL definitions >------
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: Using autogen SSL context
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: ------< LVS Topology >------
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: System is compiled with LVS v1.2.1
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: VIP = 192.168.247.200, VPORT = 3306
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: delay_loop = 6, lb_algo =
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: protocol = TCP
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: alpha is OFF, omega is OFF
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: quorum = 1, hysteresis = 0
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: lb_kind = NAT
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: RIP = 192.168.247.133, RPORT = 3306, WEIGHT = 1
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: -> Notify script DOWN = /etc/keepalived/keepalived_monitor.sh
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: ------< Health checkers >------
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: [192.168.247.133]:3306
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: Keepalive method = TCP_CHECK
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: Connection dest = [192.168.247.133]:3306
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: Connection timeout = 10
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: Using LinkWatch kernel netlink reflector...
Sep 29 11:01:55 localhost Keepalived_healthcheckers[3277]: Activating healthchecker for service [192.168.247.133]:3306
## The node enters BACKUP state
## The health checker monitors IP 133 (port 3306)
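The health checker above uses TCP_CHECK with a 10-second connection timeout. That check only verifies that a TCP connection to [192.168.247.133]:3306 can be opened; it does not speak the MySQL protocol. To reproduce the same check by hand, here is a minimal sketch. `tcp_check` is a hypothetical helper name, and the sketch assumes that bash (for its `/dev/tcp` redirection) and the coreutils `timeout` command are installed.

```sh
#!/bin/sh
# Manual stand-in for keepalived's TCP_CHECK (hypothetical helper):
# succeed if a TCP connection to HOST:PORT opens within SECS seconds
# (default 10, matching "Connection timeout = 10" in the log).
# Requires bash for /dev/tcp and coreutils `timeout`.
tcp_check() {
  local host="$1" port="$2" secs="${3:-10}"
  # bash opens the socket on fd 3; `timeout` kills it if the connect hangs.
  timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null
}

# Example: check the backup Atlas node the way the health checker does.
# tcp_check 192.168.247.133 3306 && echo up || echo down
```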

Check which machine the VIP is bound to:

(Atlas master)

# ip addr
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:5c:72:2c brd ff:ff:ff:ff:ff:ff
    inet 192.168.247.132/24 brd 192.168.247.255 scope global eth0
    inet 192.168.247.200/32 scope global eth0
    inet6 fe80::20c:29ff:fe5c:722c/64 scope link
       valid_lft forever preferred_lft forever
## VIP 200 is bound on the Atlas master
## Start keepalived automatically at boot:
chkconfig keepalived on

(Atlas backup)

# ip addr
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:01:38:24 brd ff:ff:ff:ff:ff:ff
    inet 192.168.247.133/24 brd 192.168.247.255 scope global eth0
    inet6 fe80::20c:29ff:fe01:3824/64 scope link
       valid_lft forever preferred_lft forever
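You can script this check instead of reading the full `ip addr` output by eye. The sketch below uses a hypothetical helper, `vip_bound`. It reads `ip addr` output on stdin and succeeds only when the VIP appears as an `inet` address. The default VIP 192.168.247.200 is taken from the output above.

```sh
#!/bin/sh
# Hypothetical helper: succeed if the `ip addr` text on stdin carries VIP
# as an inet address. Matching "inet <vip>/" as a fixed string avoids
# false hits such as 192.168.247.20 vs 192.168.247.200.
vip_bound() {
  local vip="${1:-192.168.247.200}"
  grep -Fq "inet $vip/"
}

# Example, run on either Atlas node:
# ip addr show dev eth0 | vip_bound 192.168.247.200 && echo "VIP is on this node"
```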

Failover tests between the Atlas master and the Atlas backup:

Test 1: stop the Atlas service on the Atlas master

## Atlas master:
/etc/init.d/atlas stop
## Check the log:
Sep 29 11:06:42 localhost Keepalived_healthcheckers[3547]: TCP connection to [192.168.247.132]:3306 failed !!!
Sep 29 11:06:42 localhost Keepalived_healthcheckers[3547]: Removing service [192.168.247.132]:3306 from VS [192.168.247.200]:3306
Sep 29 11:06:42 localhost Keepalived_healthcheckers[3547]: IPVS: Service not defined
Sep 29 11:06:42 localhost Keepalived_healthcheckers[3547]: Executing [/etc/keepalived/keepalived_monitor.sh] for service [192.168.247.132]:3306 in VS [192.168.247.200]:3306
Sep 29 11:06:42 localhost Keepalived_healthcheckers[3547]: Lost quorum 1-0=1 > 0 for VS [192.168.247.200]:3306
Sep 29 11:06:42 localhost Keepalived_healthcheckers[3547]: Remote SMTP server [127.0.0.1]:25 connected.
Sep 29 11:06:43 localhost Keepalived_healthcheckers[3547]: SMTP alert successfully sent.
Sep 29 11:06:48 localhost Keepalived_healthcheckers[3547]: TCP connection to [192.168.247.132]:3306 success.
Sep 29 11:06:48 localhost Keepalived_healthcheckers[3547]: Adding service [192.168.247.132]:3306 to VS [192.168.247.200]:3306
Sep 29 11:06:48 localhost Keepalived_healthcheckers[3547]: IPVS: Service not defined
Sep 29 11:06:48 localhost Keepalived_healthcheckers[3547]: Gained quorum 1+0=1 <= 1 for VS [192.168.247.200]:3306
Sep 29 11:06:48 localhost Keepalived_healthcheckers[3547]: Remote SMTP server [127.0.0.1]:25 connected.
Sep 29 11:06:48 localhost Keepalived_healthcheckers[3547]: SMTP alert successfully sent.
## After the Atlas service is stopped, keepalived removes it from the cluster and runs the DOWN notify script, which starts Atlas again; once port 3306 answers, the service is added back to the cluster
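The log shows keepalived executing /etc/keepalived/keepalived_monitor.sh as the DOWN notify script. That script is not reproduced in this section, so the following is only a hypothetical sketch of the behavior observed in these tests:

- It tries to start Atlas.
- If port 3306 still does not answer, it stops keepalived so that the VIP moves to the peer.

The init-script paths, the localhost probe address, the 3-second wait and the function names are all assumptions.

```sh
#!/bin/sh
# HYPOTHETICAL sketch of a notify_down script such as keepalived_monitor.sh.
# Every path and timing below is an assumption; override via the variables.
ATLAS_INIT=${ATLAS_INIT:-/etc/init.d/atlas}
KEEPALIVED_INIT=${KEEPALIVED_INIT:-/etc/init.d/keepalived}
ATLAS_HOST=${ATLAS_HOST:-127.0.0.1}   # assumes Atlas also listens on localhost
ATLAS_PORT=${ATLAS_PORT:-3306}
RESTART_WAIT=${RESTART_WAIT:-3}

# True if something accepts TCP connections on the Atlas port (needs bash for /dev/tcp).
atlas_listening() {
  timeout 3 bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$ATLAS_HOST" "$ATLAS_PORT" 2>/dev/null
}

# Try to bring Atlas back; if it still does not listen, stop keepalived so
# this node gives up the VIP and the peer becomes MASTER.
recover_or_release() {
  "$ATLAS_INIT" start
  sleep "$RESTART_WAIT"
  if atlas_listening; then
    return 0
  fi
  "$KEEPALIVED_INIT" stop
  return 1
}

# In the real notify script, the last line would simply be:
# recover_or_release
```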

Test 2: stop the Atlas service on the Atlas backup

## Same result as Test 1: after the Atlas service is stopped, keepalived removes it from the cluster, then starts it again and adds it back

Test 3: stop the keepalived service on the Atlas master

## Atlas master:
/etc/init.d/keepalived stop
## Check the log:
Sep 29 11:10:34 localhost Keepalived[3545]: Stopping Keepalived v1.2.15 (09/29,2015)
Sep 29 11:10:34 localhost Keepalived_vrrp[3548]: VRRP_Instance(VI_1) sending 0 priority
Sep 29 11:10:34 localhost Keepalived_vrrp[3548]: VRRP_Instance(VI_1) removing protocol VIPs.
Sep 29 11:10:34 localhost Keepalived_healthcheckers[3547]: Netlink reflector reports IP 192.168.247.200 removed
Sep 29 11:10:34 localhost Keepalived_healthcheckers[3547]: Removing service [192.168.247.132]:3306 from VS [192.168.247.200]:3306
Sep 29 11:10:34 localhost Keepalived_healthcheckers[3547]: IPVS: Service not defined
Sep 29 11:10:34 localhost Keepalived_healthcheckers[3547]: IPVS: No such service
## The virtual IP is removed from the master

## Check the log on the Atlas backup:
Sep 29 11:10:35 localhost Keepalived_vrrp[3278]: VRRP_Instance(VI_1) Transition to MASTER STATE
Sep 29 11:10:36 localhost Keepalived_vrrp[3278]: VRRP_Instance(VI_1) Entering MASTER STATE
Sep 29 11:10:36 localhost Keepalived_vrrp[3278]: VRRP_Instance(VI_1) setting protocol VIPs.
Sep 29 11:10:36 localhost Keepalived_healthcheckers[3277]: Netlink reflector reports IP 192.168.247.200 added
Sep 29 11:10:36 localhost Keepalived_vrrp[3278]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.247.200
Sep 29 11:10:41 localhost Keepalived_vrrp[3278]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.247.200
## The backup switches to MASTER state
## and binds VIP 200
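When you repeat these tests, it helps to pull only the VRRP state changes out of syslog. Here is a minimal sketch using a hypothetical helper, `vrrp_transitions`. It assumes keepalived writes one entry per line to syslog, in the format of the excerpts above. It keeps only the "Entering ... STATE" lines, so the intermediate "Transition to MASTER STATE" line is skipped.

```sh
#!/bin/sh
# Hypothetical helper: print "<timestamp> <STATE>" for every
# "VRRP_Instance(...) Entering <STATE> STATE" line read from stdin.
vrrp_transitions() {
  grep -E 'VRRP_Instance\([^)]*\) Entering (MASTER|BACKUP|FAULT) STATE' |
    sed -E 's/^([A-Z][a-z]{2} +[0-9]+ [0-9:]{8}) .*Entering ([A-Z]+) STATE.*/\1 \2/'
}

# Usage (the log path is an assumption):
# vrrp_transitions < /var/log/messages
```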

Test 4: restart keepalived on the Atlas master

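/etc/init.d/keepalived start
## Result: the Atlas master does not become MASTER again; it stays in BACKUP state, because the configuration file sets the nopreempt parameter (no preemption). (Note: set this parameter only on the higher-priority node.)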
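For reference, here is a sketch of how this nopreempt setup could look in the vrrp_instance section of keepalived.conf. It is written as a small generator so that the difference between the two nodes is explicit. This is not necessarily the author's exact configuration:

- VI_1, eth0, virtual_router_id 51, advert_int 1, the password 1111 and VIP 192.168.247.200 come from the backup's startup log above.
- Priority 150 for the Atlas master is an assumption; the log only shows 100 for the backup.
- keepalived's documentation states that nopreempt only works when the initial state is BACKUP, so both nodes use `state BACKUP`.

```sh
#!/bin/sh
# HYPOTHETICAL generator for the vrrp_instance block of keepalived.conf.
# Usage: vrrp_instance_conf PRIORITY [nopreempt]
vrrp_instance_conf() {
  local priority="$1" preempt_line=""
  # Only the higher-priority node (the Atlas master) gets nopreempt.
  if [ "${2:-}" = nopreempt ]; then
    preempt_line="    nopreempt"
  fi
  # Both nodes start as BACKUP, which nopreempt requires.
  cat <<EOF
vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    virtual_router_id 51
    priority $priority
    advert_int 1
$preempt_line
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.247.200
    }
}
EOF
}

# Atlas master (priority 150 is an assumed value):
# vrrp_instance_conf 150 nopreempt
# Atlas backup (priority 100, as in its log):
# vrrp_instance_conf 100
```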

Test 5: stop the keepalived service on the Atlas backup

## Precondition: the Atlas backup is the node currently serving traffic
## Failover works normally

Test 6: stop the Atlas service on the Atlas master and keep it from starting successfully again

## keepalived on the Atlas master is stopped, and the normal master/backup failover takes place

Test managing the databases through VIP 200:

## At this point one of the two Atlas nodes serves traffic and the other stands idle ##

## Pick any database server, e.g. slave2. It is only used as a client to log in, so any server will do.
## You can also log in with Navicat on Windows: host 192.168.247.200, port 3306, user Atlas.
## slave2:
mysql -h192.168.247.200 -P3306 -uAtlas -pmysql    ## must use VIP 200, port 3306 and the Atlas account
mysql> show databases;    ## list databases
+--------------------+
| Database           |
+--------------------+
| information_schema |
| ku1                |
| ku2                |
| ku3                |
| ku4                |
| mysql              |
| performance_schema |
| test               |
+--------------------+
8 rows in set (0.01 sec)

mysql> SHOW VARIABLES LIKE 'server_id';    ## reads are spread round-robin, so server_id alternates between the read databases: read load balancing works
+---------------+-------+
| Variable_name | Value |
+---------------+-------+
| server_id     | 2     |
+---------------+-------+
1 row in set (0.01 sec)

mysql> SHOW VARIABLES LIKE 'server_id';
+---------------+-------+
| Variable_name | Value |
+---------------+-------+
| server_id     | 3     |
+---------------+-------+
1 row in set (0.01 sec)

mysql> SHOW VARIABLES LIKE 'server_id';
+---------------+-------+
| Variable_name | Value |
+---------------+-------+
| server_id     | 2     |
+---------------+-------+
1 row in set (0.01 sec)

mysql> SHOW VARIABLES LIKE 'server_id';
+---------------+-------+
| Variable_name | Value |
+---------------+-------+
| server_id     | 3     |
+---------------+-------+
1 row in set (0.00 sec)

mysql> create database ku5;    ## create ku5
Query OK, 1 row affected (0.01 sec)

mysql> show databases;
+--------------------+
| Database           |
+--------------------+
| information_schema |
| ku1                |
| ku2                |
| ku3                |
| ku4                |
| ku5                |
| mysql              |
| performance_schema |
| test               |
+--------------------+
9 rows in set (0.00 sec)

## Check on any other database server that the data has been replicated, e.g. slave1:
mysql -p123456
mysql> show databases;    ## replication works
+--------------------+
| Database           |
+--------------------+
| information_schema |
| ku1                |
| ku2                |
| ku3                |
| ku4                |
| ku5                |
| mysql              |
| performance_schema |
| test               |
+--------------------+
9 rows in set (0.01 sec)
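The manual round-robin check above can be scripted. The sketch below assumes the mysql command-line client is installed on the client host. It sends N `SHOW VARIABLES LIKE 'server_id'` statements over a single connection, as in the interactive session, and counts which server_id answered each one. If both slaves are healthy and weighted equally in Atlas, the counts should come out roughly even. The helper name and the `MYSQL`, `VIP`, `VIP_PORT`, `DB_USER` and `DB_PASS` overrides are hypothetical; the defaults mirror the session above.

```sh
#!/bin/sh
# Hypothetical helper: N reads over ONE connection through the Atlas VIP,
# then a "<count> <server_id>" tally of which backend answered.
check_read_balance() {
  local n="${1:-10}" i=0
  while [ "$i" -lt "$n" ]; do
    echo "SHOW VARIABLES LIKE 'server_id';"
    i=$((i + 1))
  done |
    "${MYSQL:-mysql}" -h"${VIP:-192.168.247.200}" -P"${VIP_PORT:-3306}" \
      -u"${DB_USER:-Atlas}" -p"${DB_PASS:-mysql}" -N -B |
    awk '{print $2}' | sort | uniq -c
}

# Example: check_read_balance 20
```

`-N -B` (skip column names, batch mode) makes each answer a single tab-separated `server_id<TAB>N` line, which keeps the parsing trivial.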

## Test: fail Atlas over between master and backup, and check that access through VIP 200 still works ##
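To run that check while a failover is actually happening, a probe loop can query the VIP once per second and log OK or FAIL with a timestamp. This makes the length of any interruption visible. This is a sketch only. It assumes the mysql client on the test host and the Atlas account from the session above. `probe_vip` and its override variables are hypothetical, and each probe opens a fresh connection so that it tests real reachability of the VIP.

```sh
#!/bin/sh
# Hypothetical helper: probe the Atlas VIP COUNT times, every INTERVAL
# seconds, printing "HH:MM:SS OK" or "HH:MM:SS FAIL".
probe_vip() {
  local count="${1:-60}" interval="${2:-1}" i=0
  while [ "$i" -lt "$count" ]; do
    if "${MYSQL:-mysql}" -h"${VIP:-192.168.247.200}" -P"${VIP_PORT:-3306}" \
        -u"${DB_USER:-Atlas}" -p"${DB_PASS:-mysql}" --connect-timeout=2 \
        -N -B -e 'SELECT 1' >/dev/null 2>&1; then
      echo "$(date '+%H:%M:%S') OK"
    else
      echo "$(date '+%H:%M:%S') FAIL"
    fi
    i=$((i + 1))
    sleep "$interval"
  done
}
```

For example, run `probe_vip 120` on slave2. Then run `/etc/init.d/keepalived stop` on whichever Atlas node currently holds VIP 200, and look for FAIL lines around the switchover.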