HA Clusters, Part 3: High Availability for httpd with corosync + pacemaker (Using crm)

I. Basic Concepts

1. Cluster architecture

HA Cluster:

Messaging and Infrastructure Layer | Heartbeat Layer: cluster messaging layer

Membership Layer: cluster membership layer

CCM: voting system

Resource Allocation Layer

CRM:

DC: LRM, PE, TE, CIB

Other nodes: LRM, CIB

Resource Layer: resource agents

RA

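2. OpenAIS: the Open Application Interface Specification

It provides a cluster model covering the cluster framework, membership management, messaging, and cluster monitoring, but it has no cluster resource management.

Components include AMF, CLM, CKPT, EVT, and others; the set of components differs slightly from branch to branch.

Branches: picacho, whitetank, wilson

corosync (the cluster engine)

It started out as a subcomponent of openais.

openais later split into two projects:

corosync and wilson (the AIS interface standard)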

CentOS 5:

cman + rgmanager (RHCS, shipped with the system)

CentOS 6:

cman + rgmanager

corosync + pacemaker

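3. Command-line management tools:

crmsh: SUSE; shipped with CentOS up to 6.4

pcs: Red Hat; shipped with CentOS 6.5 and later

II. Example: Building HA httpd

1. Install corosync + pacemaker

Note: the prerequisites for an HA cluster are: time is synchronized; the nodes communicate using the host names reported by the hostname command; the root users on the nodes can authenticate to each other with SSH keys; and you have considered whether a quorum device is needed.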
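The host-name prerequisite can be checked before anything is installed. The sketch below is a hypothetical helper (`missing_from_hosts` is not part of corosync or pacemaker) and assumes node names are resolved through a file in the usual /etc/hosts format. It prints every node name that has no entry in that file.

```shell
#!/bin/sh
# Hypothetical helper, not part of corosync or pacemaker.
# Usage: missing_from_hosts HOSTS_FILE NODE...
# Prints each NODE that does not appear as a name or alias in HOSTS_FILE.
missing_from_hosts() {
    hosts_file=$1
    shift
    for node in "$@"; do
        awk -v n="$node" '
            { sub(/#.*/, "") }    # drop comments before splitting into fields
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }   # exit status 0 only when the name was seen
        ' "$hosts_file" || printf '%s\n' "$node"
    done
}
```

For example, `missing_from_hosts /etc/hosts BAIYU_173 BAIYU_175` prints nothing when both names have an entry.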

yum install corosync pacemaker -y
[root@BAIYU_175 ~]# rpm -ql corosync
/etc/corosync
/etc/corosync/corosync.conf.example
/etc/corosync/corosync.conf.example.udpu
/etc/corosync/service.d
/etc/corosync/uidgid.d
/etc/dbus-1/system.d/corosync-signals.conf
/etc/rc.d/init.d/corosync
/etc/rc.d/init.d/corosync-notifyd
/etc/sysconfig/corosync-notifyd
/usr/bin/corosync-blackbox
/usr/libexec/lcrso
/usr/libexec/lcrso/coroparse.lcrso
/usr/libexec/lcrso/objdb.lcrso
/usr/libexec/lcrso/quorum_testquorum.lcrso
/usr/libexec/lcrso/quorum_votequorum.lcrso
/usr/libexec/lcrso/service_cfg.lcrso
/usr/libexec/lcrso/service_confdb.lcrso
/usr/libexec/lcrso/service_cpg.lcrso
/usr/libexec/lcrso/service_evs.lcrso
/usr/libexec/lcrso/service_pload.lcrso
/usr/libexec/lcrso/vsf_quorum.lcrso
/usr/libexec/lcrso/vsf_ykd.lcrso
/usr/sbin/corosync
/usr/sbin/corosync-cfgtool
/usr/sbin/corosync-cpgtool
/usr/sbin/corosync-fplay
/usr/sbin/corosync-keygen
/usr/sbin/corosync-notifyd
/usr/sbin/corosync-objctl
/usr/sbin/corosync-pload
/usr/sbin/corosync-quorumtool
/usr/share/doc/corosync-1.4.7
/usr/share/doc/corosync-1.4.7/LICENSE
/usr/share/doc/corosync-1.4.7/SECURITY
/usr/share/man/man5/corosync.conf.5.gz
/usr/share/man/man8/confdb_keys.8.gz
/usr/share/man/man8/corosync-blackbox.8.gz
/usr/share/man/man8/corosync-cfgtool.8.gz
/usr/share/man/man8/corosync-cpgtool.8.gz
/usr/share/man/man8/corosync-fplay.8.gz
/usr/share/man/man8/corosync-keygen.8.gz
/usr/share/man/man8/corosync-notifyd.8.gz
/usr/share/man/man8/corosync-objctl.8.gz
/usr/share/man/man8/corosync-pload.8.gz
/usr/share/man/man8/corosync-quorumtool.8.gz
/usr/share/man/man8/corosync.8.gz
/usr/share/man/man8/corosync_overview.8.gz
/usr/share/snmp/mibs/COROSYNC-MIB.txt
/var/lib/corosync
/var/log/cluster

2. Configure corosync

[root@BAIYU_173 ~]# cd /etc/corosync/
[root@BAIYU_173 corosync]# ls
corosync.conf.example corosync.conf.example.udpu service.d uidgid.d
[root@BAIYU_173 corosync]# cp corosync.conf.example corosync.conf

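1) The main configuration file /etc/corosync/corosync.conf explained:

compatibility: whitetank # stay backward compatible with the older OpenAIS 0.8x (whitetank) releases

secauth: off # cluster authentication is off; turning it on is recommended, with the key generated by corosync-keygen

threads: 0 # number of worker threads; 0 means no extra threads are used

ringnumber: 0 # ring number of this interface (relevant for redundant rings); the default is fine

bindnetaddr: 192.168.1.0 # network address to bind to; use your own network address, here 192.168.100.0

mcastaddr: 239.255.1.1 # multicast address; changed to 225.25.25.25 here

mcastport: 5405 # UDP port used for multicast

to_logfile: yes

to_syslog: yes # logging to one destination is enough, so this is changed to off

Add the following to /etc/corosync/corosync.conf: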

amf {

mode: disabled

}

View the modified /etc/corosync/corosync.conf:

[root@BAIYU_173 corosync]# grep -v '#' corosync.conf|grep -v '^$'

compatibility: whitetank

totem {

version: 2

secauth: on

threads: 0

interface {

ringnumber: 0

bindnetaddr: 192.168.100.0

mcastaddr: 225.25.25.25

mcastport: 5405

ttl: 1

}

}

logging {

fileline: off

to_logfile: yes

logfile: /var/log/cluster/corosync.log

to_syslog: off

debug: off

timestamp: on

logger_subsys {

subsys: AMF

debug: off

}

}

amf {

mode: disabled

}
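For repeatable setups, the same file can be generated from a script. The following is a minimal sketch that reproduces the configuration listed above; `write_corosync_conf` is a hypothetical helper name, and the bind network and multicast address are passed as arguments so the values from this walkthrough are not hard-coded.

```shell
#!/bin/sh
# Sketch: write a corosync.conf equivalent to the one shown above.
# write_corosync_conf is a hypothetical helper name.
# Usage: write_corosync_conf DEST_FILE BIND_NETWORK MCAST_ADDR
write_corosync_conf() {
    cat > "$1" <<EOF
compatibility: whitetank
totem {
    version: 2
    secauth: on
    threads: 0
    interface {
        ringnumber: 0
        bindnetaddr: $2
        mcastaddr: $3
        mcastport: 5405
        ttl: 1
    }
}
logging {
    fileline: off
    to_logfile: yes
    logfile: /var/log/cluster/corosync.log
    to_syslog: off
    debug: off
    timestamp: on
    logger_subsys {
        subsys: AMF
        debug: off
    }
}
amf {
    mode: disabled
}
EOF
}
```

Writing to a scratch path first, for example `write_corosync_conf /tmp/corosync.conf 192.168.100.0 225.25.25.25`, allows the result to be reviewed before it replaces the live file.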

2) Create a file named pacemaker in /etc/corosync/service.d:

[root@BAIYU_173 service.d]# cat pacemaker

service {

name: pacemaker

ver: 1

}

[root@BAIYU_173 corosync]# corosync-keygen # generate the key used for cluster authentication
Corosync Cluster Engine Authentication key generator.
Gathering 1024 bits for key from /dev/random.
Press keys on your keyboard to generate entropy.
Writing corosync key to /etc/corosync/authkey.
[root@BAIYU_173 corosync]# ls
authkey corosync.conf.example corosync.conf.orig uidgid.d
corosync.conf corosync.conf.example.udpu service.d

3) Copy the key and the corosync configuration file to the other cluster node:

[root@BAIYU_173 corosync]# scp -p authkey corosync.conf 192.168.100.175:/etc/corosync
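With more than two nodes the copy step is easier to review as a generated list of commands. The sketch below only prints the commands; `plan_corosync_copy` is a hypothetical helper name. It also lists the service.d/pacemaker file created in step 2), on the assumption that the other nodes need it as well, which the original copy command does not cover.

```shell
#!/bin/sh
# Sketch: print (not run) the copy commands for each remaining node.
# plan_corosync_copy is a hypothetical helper name.
# Usage: plan_corosync_copy NODE...
plan_corosync_copy() {
    for node in "$@"; do
        printf 'scp -p /etc/corosync/authkey /etc/corosync/corosync.conf %s:/etc/corosync/\n' "$node"
        # Assumption: the other node also needs the pacemaker service file.
        printf 'scp -p /etc/corosync/service.d/pacemaker %s:/etc/corosync/service.d/\n' "$node"
    done
}
```

After checking the output of `plan_corosync_copy 192.168.100.175`, it can be piped to `sh` to perform the copy.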

3. Start corosync and verify:

[root@BAIYU_173 corosync]# service corosync start # pacemaker depends on corosync, so corosync must be started first
Starting Corosync Cluster Engine (corosync): [ OK ]
[root@BAIYU_173 corosync]# netstat -nlptu
Active Internet connections (only servers)
udp 0 0 0.0.0.0:53243 0.0.0.0:* 1372/rpc.statd
[root@BAIYU_173 ~]# service pacemaker start # if this fails, restart corosync and then start pacemaker again
Starting Pacemaker Cluster Manager [ OK ]
[root@BAIYU_173 ~]# netstat -nlptu
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name
tcp 0 0 0.0.0.0:111 0.0.0.0:* LISTEN 1348/rpcbind
tcp 0 0 0.0.0.0:57269 0.0.0.0:* LISTEN 1372/rpc.statd
tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 1551/sshd
tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 1642/master
udp 0 0 192.168.100.173:5404 0.0.0.0:* 2330/corosync
udp 0 0 192.168.100.173:5405 0.0.0.0:* 2330/corosync
udp 0 0 225.25.25.25:5405 0.0.0.0:* 2330/corosync
udp 0 0 0.0.0.0:671 0.0.0.0:* 1348/rpcbind
udp 0 0 127.0.0.1:703 0.0.0.0:* 1372/rpc.statd
udp 0 0 0.0.0.0:111 0.0.0.0:* 1348/rpcbind
udp 0 0 0.0.0.0:53243 0.0.0.0:* 1372/rpc.statd

1) Verify that the corosync engine started correctly:

[root@BAIYU_173 corosync]# grep -e 'Corosync Cluster Engine' -e 'configuration file' /var/log/cluster/corosync.log

Oct 24 17:55:00 corosync [MAIN ] Corosync Cluster Engine ('1.4.7'): started and ready to provide service.

Oct 24 17:55:00 corosync [MAIN ] Successfully read main configuration file '/etc/corosync/corosync.conf'.

2) Verify that the initial membership notifications were sent:

[root@BAIYU_173 corosync]# grep TOTEM /var/log/cluster/corosync.log
Oct 24 17:55:00 corosync [TOTEM ] Initializing transport (UDP/IP Multicast).
Oct 24 17:55:00 corosync [TOTEM ] Initializing transmit/receive security: libtomcrypt SOBER128/SHA1HMAC (mode 0).
Oct 24 17:55:00 corosync [TOTEM ] The network interface [192.168.100.173] is now up.
Oct 24 17:55:00 corosync [TOTEM ] Process pause detected for 613 ms, flushing membership messages.
Oct 24 17:55:00 corosync [TOTEM ] A processor joined or left the membership and a new membership was formed.
Oct 24 17:55:23 corosync [TOTEM ] A processor failed, forming new configuration.
Oct 24 17:55:23 corosync [TOTEM ] A processor joined or left the membership and a new membership was formed.
Oct 24 17:55:27 corosync [TOTEM ] A processor failed, forming new configuration.
Oct 24 17:55:27 corosync [TOTEM ] A processor joined or left the membership and a new membership was formed.

3) Check whether any errors occurred during startup:

The error messages below mean that pacemaker will soon stop being supported as a corosync plugin, so cman is the recommended cluster infrastructure service. They can be safely ignored here.

[root@BAIYU_173 corosync]# grep ERROR: /var/log/cluster/corosync.log | grep -v unpack_resources
Oct 24 17:55:00 corosync [pcmk ] ERROR: process_ais_conf: You have configured a cluster using the Pacemaker plugin for Corosync. The plugin is not supported in this environment and will be removed very soon.
Oct 24 17:55:00 corosync [pcmk ] ERROR: process_ais_conf: Please see Chapter 8 of 'Clusters from Scratch' (http://www.clusterlabs.org/doc) for details on using Pacemaker with CMAN
Oct 24 17:55:02 corosync [pcmk ] ERROR: pcmk_wait_dispatch: Child process mgmtd exited (pid=25335, rc=100) # can be ignored

4) Check whether pacemaker started correctly:

[root@BAIYU_173 corosync]# grep pcmk_startup /var/log/cluster/corosync.log
Oct 24 17:55:00 corosync [pcmk ] info: pcmk_startup: CRM: Initialized
Oct 24 17:55:00 corosync [pcmk ] Logging: Initialized pcmk_startup
Oct 24 17:55:00 corosync [pcmk ] info: pcmk_startup: Maximum core file size is: 18446744073709551615
Oct 24 17:55:00 corosync [pcmk ] info: pcmk_startup: Service: 9
Oct 24 17:55:00 corosync [pcmk ] info: pcmk_startup: Local hostname: BAIYU_173
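The log checks in this section can be bundled into one function that is run after every start. This is a sketch under the assumption that the log lives at the path configured earlier; `check_corosync_log` is a hypothetical helper name, and the patterns are the same strings grepped for above.

```shell
#!/bin/sh
# Sketch: run the log checks from this section against one log file.
# check_corosync_log is a hypothetical helper name.
# Usage: check_corosync_log [LOG_FILE]
check_corosync_log() {
    log=${1:-/var/log/cluster/corosync.log}
    status=0
    grep -q 'Corosync Cluster Engine' "$log" || { echo "engine start not logged"; status=1; }
    grep -q 'TOTEM' "$log" || { echo "no TOTEM messages"; status=1; }
    grep -q 'pcmk_startup' "$log" || { echo "pacemaker startup not logged"; status=1; }
    # Show ERROR lines other than the unpack_resources ones, as in step 3).
    grep 'ERROR:' "$log" | grep -v unpack_resources || true
    return "$status"
}
```

The function returns a non-zero status when one of the expected markers is missing; ERROR lines are printed for review but do not change the return status, since some of them are harmless here.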

4. Install crmsh:

crmsh is the command-line tool used to define resources.

It only needs to be installed on one node. Configuration changes are sent to the DC, which then synchronizes them to the other nodes; the DC is elected automatically when the cluster starts.

[root@BAIYU_173 ~]# cd /etc/yum.repos.d/
[root@BAIYU_173 ~]# wget http://download.opensuse.org/repositories/network:/ha-clustering:/Stable/CentOS_CentOS-6/network:ha-clustering:Stable.repo
[root@BAIYU_173 ~]# yum install crmsh
[root@BAIYU_173 ~]# crm
crm(live)# status # show cluster status; an error here most likely means pacemaker has stopped or the earlier configuration is wrong
Last updated: Sun Oct 25 22:41:42 2015
Last change: Sun Oct 25 22:11:26 2015
Stack: classic openais (with plugin)
Current DC: BAIYU_175 - partition with quorum
Version: 1.1.11-97629de
2 Nodes configured, 2 expected votes
0 Resources configured
Online: [ BAIYU_173 BAIYU_175 ]
crm(live)# configure
crm(live)configure# show # show the cluster configuration
node BAIYU_173
node BAIYU_175
property cib-bootstrap-options: \
    dc-version=1.1.11-97629de \
    cluster-infrastructure="classic openais (with plugin)" \
    expected-quorum-votes=2
crm(live)configure#

5. Using crm in detail

Common top-level crm subcommands:

cib            manage shadow CIBs                           # CIB sandbox
resource       resources management                         # manage resources; all resource state is controlled under this subcommand
configure      CRM cluster configuration                    # edit the cluster configuration; all resources are defined here
node           nodes management                             # manage cluster nodes
options        user preferences                             # user preferences
history        CRM cluster history
site           Geo-cluster support
ra             resource agents information center           # resource agents (everything related to resource agents lives here)
status         show cluster status                          # show the current cluster status
help,?         show help (help topics for list of topics)   # list the commands available at the current level
end,cd,up      go back one level                            # go back one level, towards crm(live)#
quit,bye,exit  exit the program                             # leave the crm(live) interactive shell

Common configure subcommands:

All resource definitions are made under this subcommand.

node              define a cluster node                     # define a cluster node
primitive         define a resource                         # define a resource
monitor           add monitor operation to a primitive      # add a monitor operation to a resource (timeout, action on failure, and so on)
group             define a group                            # define a group (combines several resources)
clone             define a clone                            # define a clone (total number of clones, and how many may run per node)
ms                define a master-slave resource            # define a master/slave resource (only one node runs the master; the slaves stand by)
rsc_template      define a resource template                # define a resource template
location          a location preference                     # define a location preference (which node a resource runs on by default; the node with the higher score wins)
colocation        colocate resources                        # colocation constraint (how strongly resources should run together)
order             order resources                           # the order in which resources start
rsc_ticket        resources ticket dependency
property          set a cluster property                    # set cluster-wide properties
rsc_defaults      set resource defaults                     # set resource defaults (such as stickiness)
fencing_topology  node fencing order                        # fencing order
role              define role access rights                 # define access rights for a role
user              define user access rights                 # define access rights for a user
op_defaults       set resource operations defaults          # set defaults for resource operations
schema            set or display current CIB RNG schema
show              display CIB objects                       # display CIB objects
edit              edit CIB objects                          # edit CIB objects (opens a vim-style editor)
filter            filter CIB objects                        # filter CIB objects
delete            delete CIB objects                        # delete CIB objects
default-timeouts  set timeouts for operations to minimums from the meta-data
rename            rename a CIB object                       # rename a CIB object
modgroup          modify group                              # change a resource group
refresh           refresh from CIB                          # re-read the CIB
erase             erase the CIB                             # erase the CIB
ptest             show cluster actions if changes were committed
rsctest           test resources as currently configured
cib               CIB shadow management
cibstatus         CIB status management and editing
template          edit and import a configuration from a template
commit            commit the changes to the CIB             # write the changes to the CIB
verify            verify the CIB with crm_verify            # check the CIB syntax
upgrade           upgrade the CIB to version 1.0
save              save the CIB to a file                    # export the current CIB to a file (saved in the directory you were in before starting crm)
load              import the CIB from a file                # load the CIB from a file
graph             generate a directed graph
xml               raw xml
help              show help (help topics for list of topics) # show help
end               go back one level                         # back to the top level (crm(live)#)
quit              exit the program                          # leave the crm interactive shell

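Common global property settings:

configure

property

stonith-enabled=true|false: true is the default; set it to false when there is no STONITH device

no-quorum-policy=stop: stop all resources (default)

ignore: ignore the loss of quorum and keep running; used for a simple two-node cluster

freeze: resources already running in this partition keep running, but nothing new is started or recovered

suicide: fence every node in the partition that lost quorum

default-resource-stickiness=#|-#|inf|-inf: how strongly a resource sticks to the node it is currently on

symmetric-cluster=true|false: whether the cluster is symmetric; true means a resource may run on any node in the cluster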

resource subcommands

All resource state is controlled here.

status       show status of resources                    # show resource status
start        start a resource                            # start a resource
stop         stop a resource                             # stop a resource
restart      restart a resource                          # restart a resource
promote      promote a master-slave resource             # promote a master/slave resource
demote       demote a master-slave resource              # demote a master/slave resource
manage       put a resource into managed mode
unmanage     put a resource into unmanaged mode
migrate      migrate a resource to another node          # move a resource to another node
unmigrate    unmigrate a resource to another node
param        manage a parameter of a resource            # manage resource parameters
secret       manage sensitive parameters                 # manage sensitive parameters
meta         manage a meta attribute                     # manage meta attributes
utilization  manage a utilization attribute
failcount    manage failcounts                           # manage failure counters
cleanup      cleanup resource status                     # clean up resource status
refresh      refresh CIB from the LRM status             # refresh the CIB (cluster information base) from the LRM (local resource manager)
reprobe      probe for resources not started by the CRM  # probe for resources that were not started by the CRM
trace        start RA tracing                            # enable resource agent (RA) tracing
untrace      stop RA tracing                             # disable resource agent (RA) tracing
help         show help (help topics for list of topics)  # show help
end          go back one level                           # back to the top level (crm(live)#)
quit         exit the program                            # leave the interactive shell

node subcommands

Node management and status commands.

status       show nodes status as XML                    # show node status as XML
show         show node                                   # show node status in plain text
standby      put node into standby                       # put a node into standby, simulating it going offline (a node name given after standby must be the name the cluster knows the node by)
online       set node online                             # bring the node back online
maintenance  put node into maintenance mode
ready        put node into ready mode
fence        fence node                                  # fence the node
clearstate   Clear node state                            # clear the node state information
delete       delete node                                 # delete a node
attribute    manage attributes
utilization  manage utilization attributes
status-attr  manage status attributes
help         show help (help topics for list of topics)
end          go back one level
quit         exit the program

ra subcommands

Everything about resource agent classes is found here.

classes      list classes and providers                  # list resource agent classes and providers
list         list RA for a class (and provider)          # list the resource agents available in a class
meta         show meta data for a RA                     # show the parameters a resource agent accepts (for example: meta ocf:heartbeat:IPaddr2)
providers    show providers for a RA and a class
help         show help (help topics for list of topics)
end          go back one level
quit         exit the program

6. Define resources

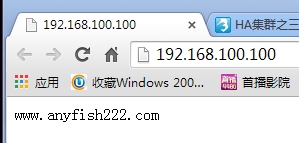

webip: 192.168.100.100

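So how is a resource configured here? The details differ slightly from heartbeat, but the concepts are essentially the same. Let's configure a web resource.

1) For a two-node corosync + pacemaker cluster, set two global properties: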

stonith-enabled=false

no-quorum-policy=ignore

Pacemaker enables STONITH by default, but there is no STONITH device in this setup. If resources are configured as-is, resource failover cannot complete without such a device, so STONITH has to be disabled. This is done through the cluster-wide stonith-enabled property; global properties apply to every node.

Note: in a two-node cluster, when one node fails the surviving node no longer has quorum, so resources do not fail over; by default they are stopped.

The available no-quorum policies are:

stop: stop the resources (default)

ignore: ignore the loss of quorum and keep running

freeze: resources already running in this partition keep running, but nothing new is started or recovered

suicide: fence every node in the partition that lost quorum

a. Disable stonith-enabled

[root@BAIYU_173 ~]# crm configure # enter crm configure mode to set up resources and other options
crm(live)configure# property # after typing property, press Tab twice to complete and list the options
crm(live)configure# help property # use help when unsure of the usage
Set a cluster property
Set the cluster (crm_config) options.
Usage:
property [$id=<set_id>] [rule ...] <option>=<value> [<option>=<value> ...]
Example:
property stonith-enabled=true
property rule date spec years=2014 stonith-enabled=false
crm(live)configure# property stonith-enabled=false # disable stonith-enabled
crm(live)configure# verify # check that the setting is valid
crm(live)configure# commit # commit once the check passes
crm(live)configure# show # show the complete current cluster configuration
node BAIYU_173
node BAIYU_175 # the two nodes
primitive webip IPaddr \
    params ip=192.168.100.100 nic=eth0 cidr_netmask=24
property cib-bootstrap-options: \
    dc-version=1.1.11-97629de \ # DC version
    cluster-infrastructure="classic openais (with plugin)" \ # cluster infrastructure: OpenAIS, plugin based
    expected-quorum-votes=2 \ # expected number of votes
    stonith-enabled=false # STONITH disabled

b. Ignore loss of quorum

crm(live)configure# property no-quorum-policy=ignore
crm(live)configure# verify
crm(live)configure# commit
crm(live)configure# show
node BAIYU_173 \
    attributes standby=off
node BAIYU_175
primitive webip IPaddr \
    params ip=192.168.100.100 nic=eth0 cidr_netmask=24
property cib-bootstrap-options: \
    dc-version=1.1.11-97629de \
    cluster-infrastructure="classic openais (with plugin)" \
    expected-quorum-votes=2 \
    stonith-enabled=false \
    no-quorum-policy=ignore
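Both properties can also be set from a script without entering the interactive shell. The sketch below relies on the `property <option>=<value> [<option>=<value> ...]` usage printed by `help property` above; the `CRM` variable is an assumption added here so the command line can be previewed (for example with `CRM=echo`) on a machine without a cluster.

```shell
#!/bin/sh
# Sketch: set both two-node properties in one non-interactive call.
# CRM is a hypothetical override for the crm binary, used for previewing.
set_two_node_properties() {
    "${CRM:-crm}" configure property stonith-enabled=false no-quorum-policy=ignore
}
```

When crm is given a complete command on its command line, the change is expected to be committed at the end of that call; `crm configure show` confirms the result.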

primitive syntax:

primitive <rsc_id> class:provider:ra params param1=value1 param2=value2 op op1 param1=value1 op op2 param1=value1

c. Add resources

crm(live)configure# primitive webip ocf:heartbeat:IPaddr params ip=192.168.100.100 nic=eth0 cidr_netmask=24
crm(live)configure# verify # check for syntax errors
crm(live)configure# commit # commit to the CIB
crm(live)# status
Last updated: Mon Oct 26 16:24:53 2015
Last change: Mon Oct 26 16:24:21 2015
Stack: classic openais (with plugin)
Current DC: BAIYU_175 - partition with quorum
Version: 1.1.11-97629de
2 Nodes configured, 2 expected votes
1 Resources configured
Online: [ BAIYU_173 BAIYU_175 ]
webip (ocf::heartbeat:IPaddr): Started BAIYU_173 # webip is now running on BAIYU_173
crm(live)#
[root@BAIYU_173 ~]# ip addr # note: ifconfig does not show this address
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:6a:dc:8d brd ff:ff:ff:ff:ff:ff
    inet 192.168.100.173/24 brd 192.168.100.255 scope global eth0
    inet 192.168.100.100/24 brd 192.168.100.255 scope global secondary eth0 # webip

crm(live)configure# primitive webserver lsb:httpd
crm(live)configure# verify
crm(live)configure# commit
crm(live)configure# cd
crm(live)# status
Last updated: Mon Oct 26 17:52:46 2015
Last change: Mon Oct 26 17:52:34 2015
Stack: classic openais (with plugin)
Current DC: BAIYU_175 - partition with quorum
Version: 1.1.11-97629de
2 Nodes configured, 2 expected votes
2 Resources configured
Online: [ BAIYU_173 BAIYU_175 ]
webip (ocf::heartbeat:IPaddr): Started BAIYU_175
webserver (lsb:httpd): Started BAIYU_173

As shown above, the two resources are spread across the cluster nodes by default.

There are two ways to keep resources on the same node: define a group, or define constraints:

1) Create a group and add the resources in the order in which they should start

crm(live)configure# group webservice webip webserver # define a group; its resources run on the same node and start in order
crm(live)configure# cd
crm(live)# status
Last updated: Mon Oct 26 18:09:16 2015
Last change: Mon Oct 26 18:07:19 2015
Stack: classic openais (with plugin)
Current DC: BAIYU_175 - partition with quorum
Version: 1.1.11-97629de
2 Nodes configured, 2 expected votes
2 Resources configured
Online: [ BAIYU_173 BAIYU_175 ]
Resource Group: webservice
    webip (ocf::heartbeat:IPaddr): Started BAIYU_173
    webserver (lsb:httpd): Started BAIYU_173
crm(live)configure# show
node BAIYU_173 \
    attributes standby=off
node BAIYU_175 \
    attributes standby=off
primitive webip IPaddr \
    params ip=192.168.100.100 nic=eth0 cidr_netmask=24
primitive webserver lsb:httpd
group webservice webip webserver
property cib-bootstrap-options: \
    dc-version=1.1.11-97629de \
    cluster-infrastructure="classic openais (with plugin)" \
    expected-quorum-votes=2 \
    stonith-enabled=false \
    no-quorum-policy=ignore

crm(live)configure# cd ../resource
crm(live)resource# status webservice # a running resource cannot be deleted; stop it first
resource webservice is running on: BAIYU_173
crm(live)resource# stop webservice
crm(live)resource# status webservice
resource webservice is NOT running
crm(live)resource# cd ../configure
crm(live)configure# delete webservice # delete the group
crm(live)configure# verify
crm(live)configure# commit
crm(live)configure# show
node BAIYU_173 \
    attributes standby=off
node BAIYU_175 \
    attributes standby=off
primitive webip IPaddr \
    params ip=192.168.100.100 nic=eth0 cidr_netmask=24
primitive webserver lsb:httpd
property cib-bootstrap-options: \
    dc-version=1.1.11-97629de \
    cluster-infrastructure="classic openais (with plugin)" \
    expected-quorum-votes=2 \
    stonith-enabled=false \
    no-quorum-policy=ignore
crm(live)# status
Last updated: Mon Oct 26 18:47:24 2015
Last change: Mon Oct 26 18:46:41 2015
Stack: classic openais (with plugin)
Current DC: BAIYU_175 - partition with quorum
Version: 1.1.11-97629de
2 Nodes configured, 2 expected votes
2 Resources configured
Online: [ BAIYU_173 BAIYU_175 ] # the resources are spread across the nodes again
webip (ocf::heartbeat:IPaddr): Started BAIYU_173
webserver (lsb:httpd): Started BAIYU_175

2) Use colocation and order to define colocation and ordering constraints:

crm(live)configure# colocation webserver_with_webip inf: webserver webip
crm(live)configure# verify
crm(live)configure# commit
crm(live)configure# crm status
ERROR: configure.crm: No such command
crm(live)configure# order webip_before_webserver Mandatory: webip webserver
crm(live)configure# verify
crm(live)configure# commit
crm(live)configure# show
node BAIYU_173 \
    attributes standby=off
node BAIYU_175 \
    attributes standby=off
primitive webip IPaddr \
    params ip=192.168.100.100 nic=eth0 cidr_netmask=24
primitive webserver lsb:httpd
colocation webserver_with_webip inf: webserver webip
order webip_before_webserver Mandatory: webip webserver
property cib-bootstrap-options: \
    dc-version=1.1.11-97629de \
    cluster-infrastructure="classic openais (with plugin)" \
    expected-quorum-votes=2 \
    stonith-enabled=false \
    no-quorum-policy=ignore
crm(live)configure# cd
crm(live)# status
Last updated: Mon Oct 26 18:58:09 2015
Last change: Mon Oct 26 18:57:56 2015
Stack: classic openais (with plugin)
Current DC: BAIYU_175 - partition with quorum
Version: 1.1.11-97629de
2 Nodes configured, 2 expected votes
2 Resources configured
Online: [ BAIYU_173 BAIYU_175 ]
webip (ocf::heartbeat:IPaddr): Started BAIYU_173
webserver (lsb:httpd): Started BAIYU_173

Define a location constraint:

crm(live)configure# location webip_on_BAIYU_175 webip rule 50: #uname eq BAIYU_175
crm(live)configure# show
node BAIYU_173 \
    attributes standby=off
node BAIYU_175 \
    attributes standby=off
primitive webip IPaddr \
    params ip=192.168.100.100 nic=eth0 cidr_netmask=24
primitive webserver lsb:httpd
location webip_on_BAIYU_175 webip \
    rule 50: #uname eq BAIYU_175
colocation webserver_with_webip inf: webserver webip
order webip_before_webserver Mandatory: webip webserver
property cib-bootstrap-options: \
    dc-version=1.1.11-97629de \
    cluster-infrastructure="classic openais (with plugin)" \
    expected-quorum-votes=2 \
    stonith-enabled=false \
    no-quorum-policy=ignore
crm(live)configure# verify
crm(live)configure# commit
crm(live)# status
Last updated: Mon Oct 26 19:27:28 2015
Last change: Mon Oct 26 19:27:10 2015
Stack: classic openais (with plugin)
Current DC: BAIYU_175 - partition with quorum
Version: 1.1.11-97629de
2 Nodes configured, 2 expected votes
2 Resources configured
Online: [ BAIYU_173 BAIYU_175 ]
webip (ocf::heartbeat:IPaddr): Started BAIYU_175
webserver (lsb:httpd): Started BAIYU_175

Define how strongly resources stick to their current node:

crm(live)configure# property default-resource-stickiness=50
crm(live)configure# verify
crm(live)configure# commit

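Note: with this setting, when the resources are running on BAIYU_173, the two resources together have a stickiness of 50 + 50 = 100, which is greater than the location score of 50, so they do not move to BAIYU_175 automatically.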
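The arithmetic in the note can be written out as a small calculation. This is a simplified model that follows the note's own reasoning (number of resources times stickiness, compared with one location score); Pacemaker's real placement calculation considers more than this, and `stickiness_decision` is a hypothetical helper name.

```shell
#!/bin/sh
# Simplified model of the comparison in the note above (hypothetical helper).
# total stickiness = number of resources * default-resource-stickiness
# Usage: stickiness_decision RESOURCES STICKINESS LOCATION_SCORE
# Prints "stay" when the total exceeds the location score, else "may-move".
stickiness_decision() {
    resources=$1
    stickiness=$2
    location_score=$3
    total=$((resources * stickiness))
    if [ "$total" -gt "$location_score" ]; then
        echo stay
    else
        echo may-move
    fi
}
```

With the values used here, `stickiness_decision 2 50 50` compares 100 against 50.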

By default the cluster only monitors nodes. If a resource is stopped unexpectedly, the cluster does not notice and does not recover or move it, so resource monitoring has to be configured:

First delete the resources defined earlier:

crm(live)# cd ../resource
crm(live)resource# stop webip
crm(live)resource# stop webserver
crm(live)resource# cd ../configure
crm(live)configure# edit # keep only the global cluster properties
node BAIYU_173 \
    attributes standby=off
node BAIYU_175 \
    attributes standby=off
property cib-bootstrap-options: \
    dc-version=1.1.11-97629de \
    cluster-infrastructure="classic openais (with plugin)" \
    expected-quorum-votes=2 \
    stonith-enabled=false \
    no-quorum-policy=ignore \
    default-resource-stickiness=50
#vim:set syntax=pcmk
crm(live)configure# verify
crm(live)configure# commit
crm(live)configure# show
node BAIYU_173 \
    attributes standby=off
node BAIYU_175 \
    attributes standby=off
property cib-bootstrap-options: \
    dc-version=1.1.11-97629de \
    cluster-infrastructure="classic openais (with plugin)" \
    expected-quorum-votes=2 \
    stonith-enabled=false \
    no-quorum-policy=ignore \
    default-resource-stickiness=50

Redefine the resources with monitor operations:

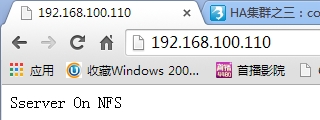

crm(live)configure# primitive webip ocf:heartbeat:IPaddr params ip=192.168.100.110 nic=eth0 cidr_netmask=24 op monitor interval=10s timeout=20s # op adds an operation; monitor is the monitoring operation; interval is the time between checks; timeout is the time allowed for each check
crm(live)configure# verify
crm(live)configure# commit
crm(live)configure# primitive webserver lsb:httpd op monitor interval=10s timeout=20s
crm(live)configure# group webservice webip webserver
crm(live)configure# verify
crm(live)configure# commit
crm(live)configure# show
node BAIYU_173 \
    attributes standby=off
node BAIYU_175 \
    attributes standby=off
primitive webip IPaddr \
    params ip=192.168.100.110 nic=eth0 cidr_netmask=24 \
    op monitor interval=10s timeout=20s
primitive webserver lsb:httpd \
    op monitor interval=10s timeout=20s
group webservice webip webserver
property cib-bootstrap-options: \
    dc-version=1.1.11-97629de \
    cluster-infrastructure="classic openais (with plugin)" \
    expected-quorum-votes=2 \
    stonith-enabled=false \
    no-quorum-policy=ignore \
    default-resource-stickiness=50
[root@BAIYU_173 ~]# crm
crm(live)# status
Last updated: Mon Oct 26 20:04:41 2015
Last change: Mon Oct 26 20:02:24 2015
Stack: classic openais (with plugin)
Current DC: BAIYU_175 - partition with quorum
Version: 1.1.11-97629de
2 Nodes configured, 2 expected votes
2 Resources configured
Online: [ BAIYU_173 BAIYU_175 ]
Resource Group: webservice
    webip (ocf::heartbeat:IPaddr): Started BAIYU_173
    webserver (lsb:httpd): Started BAIYU_173
crm(live)#
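The same definitions can be kept in a file instead of being typed interactively. The sketch below prints them in crm configure syntax, following the primitive syntax given earlier; `emit_web_resources` is a hypothetical helper name, and loading the output with `crm configure load update FILE` is an assumption about the installed crmsh version that should be checked with `crm configure help load`.

```shell
#!/bin/sh
# Sketch: print the monitored resource definitions in crm configure syntax.
# emit_web_resources is a hypothetical helper name.
# Usage: emit_web_resources VIP NIC
emit_web_resources() {
    # In an unquoted here-document, \\ yields one literal backslash,
    # which crm reads as a line continuation.
    cat <<EOF
primitive webip ocf:heartbeat:IPaddr \\
    params ip=$1 nic=$2 cidr_netmask=24 \\
    op monitor interval=10s timeout=20s
primitive webserver lsb:httpd \\
    op monitor interval=10s timeout=20s
group webservice webip webserver
EOF
}
```

For example, `emit_web_resources 192.168.100.110 eth0 > /tmp/web.crm` produces a file that can be reviewed and version-controlled before it is loaded.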

Verify:

[root@BAIYU_173 ~]# netstat -nlpt|grep 80
tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 7186/httpd
[root@BAIYU_173 ~]# killall httpd
[root@BAIYU_173 ~]# netstat -nlpt|grep 80
[root@BAIYU_173 ~]# netstat -nlpt|grep 80
tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 8906/httpd

Note: if the resource cannot be started again on this node, it is moved to another node.

Add NFS:

[root@BAIYU_173 ~]# crm
crm(live)# resource
crm(live)resource# stop webservice
crm(live)resource# show
Resource Group: webservice
    webip (ocf::heartbeat:IPaddr): Stopped
    webserver (lsb:httpd): Stopped
crm(live)# ra info ocf:heartbeat:Filesystem # show the defaults and required parameters of this resource agent

crm(live)configure# primitive webstore ocf:heartbeat:Filesystem params device="192.168.100.10:/data/myweb" directory="/var/www/html" fstype="nfs" op monitor interval=20s timeout=40s op start timeout=60s op stop timeout=60s
crm(live)configure# edit # manually add webstore to the group defined earlier
crm(live)configure# verify
crm(live)configure# commit

crm(live)configure# show
node BAIYU_173 \
    attributes standby=off
node BAIYU_175 \
    attributes standby=off
primitive webip IPaddr \
    params ip=192.168.100.110 nic=eth0 cidr_netmask=24 \
    op monitor interval=10s timeout=20s
primitive webserver lsb:httpd \
    op monitor interval=10s timeout=20s
primitive webstore Filesystem \
    params device="192.168.100.10:/data/myweb" directory="/var/www/html" fstype=nfs \
    op monitor interval=20s timeout=40s \
    op start timeout=60s interval=0 \
    op stop timeout=60s interval=0
group webservice webip webserver webstore \
    meta target-role=Stopped
property cib-bootstrap-options: \
    dc-version=1.1.11-97629de \
    cluster-infrastructure="classic openais (with plugin)" \
    expected-quorum-votes=2 \

crm(live)# resource start webservice
crm(live)# resource show
Resource Group: webservice
    webip (ocf::heartbeat:IPaddr): Started
    webserver (lsb:httpd): Started
    webstore (ocf::heartbeat:Filesystem): Started
crm(live)# status
Last updated: Mon Oct 26 20:42:32 2015
Last change: Mon Oct 26 20:42:00 2015
Stack: classic openais (with plugin)
Current DC: BAIYU_175 - partition with quorum
Version: 1.1.11-97629de
2 Nodes configured, 2 expected votes
3 Resources configured
Online: [ BAIYU_173 BAIYU_175 ]
Resource Group: webservice
    webip (ocf::heartbeat:IPaddr): Started BAIYU_173
    webserver (lsb:httpd): Started BAIYU_173
    webstore (ocf::heartbeat:Filesystem): Started BAIYU_173

Failed actions: webserver_monitor_10000 on BAIYU_173 'not running' (7): call=40, status=complete, last-rc-change='Mon Oct 26 20:07:46 2015', queued=0ms, exec=0ms

Clear the earlier failure records:

crm(live)# resource cleanup webservice # clear the failure records for these resources
Cleaning up webip on BAIYU_173
Cleaning up webip on BAIYU_175
Cleaning up webserver on BAIYU_173
Cleaning up webserver on BAIYU_175
Cleaning up webstore on BAIYU_173
Cleaning up webstore on BAIYU_175
Waiting for 6 replies from the CRMd...... OK

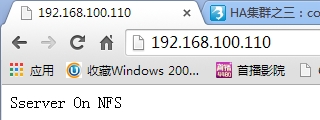

Put BAIYU_173 into standby; the resources move to BAIYU_175:

crm(live)# node standby
crm(live)# status
Last updated: Mon Oct 26 20:53:39 2015
Last change: Mon Oct 26 20:53:35 2015
Stack: classic openais (with plugin)
Current DC: BAIYU_175 - partition with quorum
Version: 1.1.11-97629de
2 Nodes configured, 2 expected votes
3 Resources configured
Node BAIYU_173: standby
Online: [ BAIYU_175 ]
Resource Group: webservice
    webip (ocf::heartbeat:IPaddr): Started BAIYU_175
    webserver (lsb:httpd): Started BAIYU_175
    webstore (ocf::heartbeat:Filesystem): Started BAIYU_175
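When a failover test like this is scripted, the node that currently runs a resource can be read from the status text. The sketch below assumes the status line layout shown above (resource name first, then `Started` and the node name at the end of the line); `resource_node` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: read `crm status` text on stdin and print the node on which the
# named resource is reported as Started. resource_node is a hypothetical helper.
# Usage: crm status | resource_node RESOURCE
resource_node() {
    awk -v rsc="$1" 'NF >= 3 && $1 == rsc && $(NF - 1) == "Started" { print $NF }'
}
```

Nothing is printed when the resource is stopped or not listed, so an empty result can be treated as "not running anywhere".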

The complete HA httpd configuration is now finished.

Blog exercise: implement HA MariaDB with corosync + pacemaker