Centos6.5+mysql5.6+cluster7.4安装配置方案

Mysql节点介绍

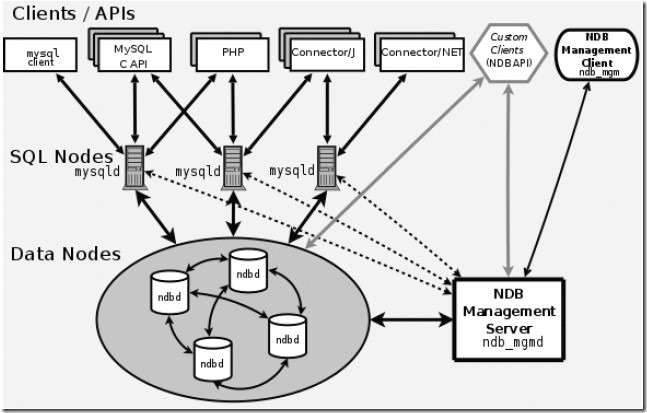

mysql 自4.1.x版本开始推出mysql cluster功能。cluster简单地说,就是一组"节点"的组合。这里的节点是一个逻辑概念,一台计算机上可以存放一个节点,也可以存放多个节点。这些节点的功能各不相同,有的用来存储数据(数据结点),有的用来存放表结构(sql结点),有的用来对其他节点进行管理(管理节点)。这些节点组合在一起,可以为应用提供具有高可用性,高性能和可缩放性的cluster数据管理。

mysql 使用 ndb 存储引擎来对数据节点的数据进行存储,以前版本的ndb数据引擎只支持基于内存的数据表,从5.1版本开始支持基于磁盘的数据表。

理论上,mysql cluster通过数据的分布式存储和可扩展的系统架构,可以满足更大规模的应用;而且通过冗余策略,可以大大地提高系统的可靠性和数据的有效性。

mysql 按照节点类型可以分为3部分。

.管理节点。

顾名思义,管理节点用来对其他节点进行管理。实际操作中,是通过一个叫作config.ini的配置文件进行维护而起到管理作用。该文件可以用来配置有多少需要维护的副本,需要在每个数据结点上为数据和索引分配多少内在,数据节点的位置,在每个节点上保存数据的磁盘位置,sql节点的位置信息。管理节点只能有一个,配置要求不高。

.sql节点

sql 节点可以理解为应用和数据节点之间的一个桥梁。应用不能直接访问数据节点,只能先访问sql节点,然后sql节点再去访问数据结点来返回数据。Cluster中可以有多个sql节点,通过每个sql节点查询到的数据都是一致的,通常来说,sql节点越多,分配到每个sql节点的负载就越小,系统的整体性能就越好。

.数据节点

用来存放Cluster里面的数据,可以有多个数据节点。每个数据结点可以有多个镜像节点。任何一个数据节点发生故障,只要它的镜像节点正常,Cluster就可以正常运行。

这三种逻辑上不同节点物理上可以存放在不同的服务器上,也可以存放在相同的服务器上。

通过架构中的各个节点的介绍,可以总结一下mysql cluster的访问过程,前台应用一定的负载均衡算法将对数据的访问分散到不同的sql节点上去,然后sql节点对数据节点进行数据访问并从数据节点返回结果,最后sql结点将收到的结果返给前台应用,而管理节点并不参与访问过程,它只用来对sql节点和数据节点进行配置管理。

一、准备

1、准备服务器

计划建立有5个节点的MySQL CLuster体系,需要用到5台服务器。

节点配置说明

节点

对应的IP和端口

管理节点(1个)

10.0.0.200:1186

SQL节点 (2个)

A:10.0.0.14:3306

B:10.0.0.16:3306

数据节点 (2个)

A:10.0.0.11

B:10.0.0.12

二、Centos6.5挂载多路径存储磁盘

1、新建2个Volume

2、配置multipath

[root@tsm-db1 mysql-5.6.25]# vim /etc/multipath.conf

# This is a basic configuration file with some examples, for device mapper

# multipath.

# For a complete list of the default configuration values, see

# /usr/share/doc/device-mapper-multipath-0.4.9/multipath.conf.defaults

# For a list of configuration options with descriptions, see

# /usr/share/doc/device-mapper-multipath-0.4.9/multipath.conf.annotated

#

# REMEMBER: After updating multipath.conf, you must run

#

# service multipathd reload

#

# for the changes to take effect in multipathd

## By default, devices with vendor = "IBM" and product = "S/390.*" are

## blacklisted. To enable mulitpathing on these devies, uncomment the

## following lines.

#blacklist_exceptions {

# device {

# vendor "IBM"

# product "S/390.*"

# }

#}

## Use user friendly names, instead of using WWIDs as names.

defaults {

find_multipaths yes

user_friendly_names yes

udev_dir /dev

path_grouping_policy multibus

failback immediate

no_path_retry fail

max_fds max

queue_without_daemon no

flush_on_last_del yes

}

##

## Here is an example of how to configure some standard options.

##

#

#defaults {

# udev_dir /dev

# polling_interval 10

# path_selector "round-robin 0"

# path_grouping_policy multibus

# getuid_callout "/lib/udev/scsi_id --whitelisted --device=/dev/%n"

# prio alua

# path_checker readsector0

# rr_min_io 100

# max_fds 8192

# rr_weight priorities

# failback immediate

# no_path_retry fail

# user_friendly_names yes

#}

##

## The wwid line in the following blacklist section is shown as an example

## of how to blacklist devices by wwid. The 2 devnode lines are the

## compiled in default blacklist. If you want to blacklist entire types

## of devices, such as all scsi devices, you should use a devnode line.

## However, if you want to blacklist specific devices, you should use

## a wwid line. Since there is no guarantee that a specific device will

## not change names on reboot (from /dev/sda to /dev/sdb for example)

## devnode lines are not recommended for blacklisting specific devices.

##

#blacklist {

# wwid 26353900f02796769

# devnode "^(ram|raw|loop|fd|md|dm-|sr|scd|st)[0-9]*"

# devnode "^hd[a-z]"

#}

#multipaths {

# multipath {

# wwid 360050763008107a86000000000000005

# alias mpathg

# uid 301

# gid 201

# }

#

#}

#

#devices {

# device {

# vendor "COMPAQ "

# product "HSV110 (C)COMPAQ"

# path_grouping_policy multibus

# getuid_callout "/lib/udev/scsi_id --whitelisted --device=/dev/%n"

# path_checker readsector0

# path_selector "round-robin 0"

# hardware_handler "0"

# failback 15

# rr_weight priorities

# no_path_retry queue

# }

# device {

# vendor "COMPAQ "

# product "MSA1000 "

# path_grouping_policy multibus

# }

#}

devices {

device {

vendor "IBM" #厂商名称

product "V5000" #产品型号

path_grouping_policy multibus #默认的路径组策略

# getuid_callout "/sbin/scsi_id -g -u -s /block/%n" #获得唯一设备号使用的默认程序

getuid_callout "/lib/udev/scsi_id --whitelisted --device=/dev/%n"

path_checker readsector0 #决定路径状态的方法

path_selector "round-robin 0" #选择那条路径进行下一个IO操作的方法

failback immediate #故障恢复的模式

no_path_retry queue #在disable queue之前系统尝试使用失效路径的次数的数值

rr_min_io 100 #在当前的用户组中,在切换到另外一条路径之前的IO请求的数目

}

}

blacklist {

devnode "^sda"

}

3.对多路径磁盘分区

分区:

格式化、添加标签

添加自动挂载

三、安装mysql-cluster

1、安装各节点程序

SQL nodes:

cd /usr/local

tar -zxvf /data/packages/mysql-cluster-gpl-7.4.6-linux-glibc2.5-x86_64.tar.gz

mv mysql-cluster-gpl-7.4.6-linux-glibc2.5-x86_64 mysql

scripts/mysql_install_db --user=mysql

chown -R root .

chown -R mysql data

chgrp -R mysql .

ln -s /usr/local/mysql/support-files/mysql.server /etc/init.d/mysqld

chkconfig --add mysqld

如下操作在SQL节点A操作:

复制程序到管理节点

rsync -avz /usr/local/mysql/bin/ndb_mgm* 10.0.0.200:/usr/local/mysql/bin/

复制程序到数据节点A

rsync -avz /usr/local/mysql/bin/ndbd 10.0.0.11:/usr/local/mysql/bin/

rsync -avz /usr/local/mysql/bin/ndbmtd 10.0.0.11:/usr/local/mysql/bin/

复制程序到数据节点B

rsync -avz /usr/local/mysql/bin/ndbd 10.0.0.12:/usr/local/mysql/bin/

rsync -avz /usr/local/mysql/bin/ndbmtd 10.0.0.12:/usr/local/mysql/bin/

复制程序到SQL节点B

rsync -avz /usr/local/mysql/ 10.0.0.16:/usr/local/mysql/

Data nodes

Management nodes.

ln -s /usr/local/mysql/bin/ndb_mgm* /usr/local/sbin/

2、管理节点配置

mkdir -p /var/lib/mysql-cluster/

vim /var/lib/mysql-cluster/config.ini #添加如下内容

[ndbd default]

# Options affecting ndbd processes on all data nodes:

NoOfReplicas=2 # Number of replicas

DataMemory=80M # How much memory to allocate for data storage

IndexMemory=18M # How much memory to allocate for index storage

# For DataMemory and IndexMemory, we have used the

# default values. Since the "world" database takes up

# only about 500KB, this should be more than enough for

# this example Cluster setup.

[tcp default]

# TCP/IP options:

portnumber=2202 # This the default; however, you can use any

# port that is free for all the hosts in the cluster

# Note: It is recommended that you do not specify the port

# number at all and simply allow the default value to be used

# instead

[ndb_mgmd]

# Management process options:

hostname=10.0.0.200 # Hostname or IP address of MGM node

datadir=/var/lib/mysql-cluster # Directory for MGM node log files

[ndbd]

# Options for data node "A":

# (one [ndbd] section per data node)

hostname=10.0.0.11 # Hostname or IP address

datadir=/usr/local/mysql/data # Directory for this data node's data files

[ndbd]

# Options for data node "B":

hostname=10.0.0.12 # Hostname or IP address

datadir=/usr/local/mysql/data # Directory for this data node's data files

[mysqld]

# SQL node "A" options:

hostname=10.0.0.14 # Hostname or IP address

# (additional mysqld connections can be

# specified for this node for various

# purposes such as running ndb_restore)

[mysqld]

# SQL node "B" options:

hostname=10.0.0.16 # Hostname or IP address

# (additional mysqld connections can be

# specified for this node for various

# purposes such as running ndb_restore)

启动节点管理

/usr/local/mysql/bin/ndb_mgmd -f /var/lib/mysql-cluster/config.ini

注:

命令行中的ndb_mgmd是mysql cluster的管理服务器程序,后面的-f参数表示启动参数配置文件。

如果在启动后又需添加数据节点,修改配置文件后,启动时必须加上--initial参数,否则添加的节点不会作用在mysql cluster中。.

/usr/local/mysql/bin/ndb_mgmd -f /var/lib/mysql-cluster/config.ini --initial

关闭节点管理

# ndb_mgm

-- NDB Cluster -- Management Client --

ndb_mgm> shutdown

Connected to Management Server at: localhost:1186

1 NDB Cluster node(s) have shutdown.

Disconnecting to allow management server to shutdown.

ndb_mgm>quit

3、数据节点配置

vim /etc/my.cnf

[mysqld]

# Options for mysqld process:

ndbcluster # run NDB storage engine

[mysql_cluster]

# Options for MySQL Cluster processes:

ndb-connectstring=10.0.0.200 # location of management server

######

#server-id=16 #每个服务器的id不一样

#datadir=/usr/local/mysql/data

#socket=/tmp/mysql.sock

#user=mysql

# Disabling symbolic-links is recommended to prevent assorted security risks

#symbolic-links=0

#log-bin = mysql-bin

#max_connections=1000

#[mysqld_safe]

#pid-file = /usr/local/mysql/data/mysql.pid

#log-error = /usr/local/mysql/data/mysql.err

注:

安装后第一次启动数据节点时要加上--initial参数,其它时候不要加,除非是在备份、恢复或配置变化后重启时

# /usr/local/mysql/bin/ndbd --initial

正常启动

# /usr/local/mysql/bin/ndbd

4、配置SQL节点

vim /etc/my.cnf

[mysqld]

# Options for mysqld process:

ndbcluster # run NDB storage engine

#

#server-id=14 #每个服务器的id不一样,注释后,自动分配

datadir=/usr/local/mysql/data

socket=/tmp/mysql.sock

user=mysql

[mysql_cluster]

# Options for MySQL Cluster processes:

ndb-connectstring=10.0.0.200 # location of management server

# Disabling symbolic-links is recommended to prevent assorted security risks

symbolic-links=0

log-bin = mysql-bin

max_connections=1000

[mysqld_safe]

pid-file = /usr/local/mysql/data/mysql.pid

log-error = /usr/local/mysql/data/mysql.err

正常启动

/etc/init.d/mysqld start

四、连接与测试(这里只做简单测试)

集群启停顺序

mysql集群的启动顺序为:管理节点->数据节点->SQL节点

mysql集群的关闭顺序为,管理节点->数据节点->SQL节点

连接集群方法

jdbc:mysql://[host:port],[host:port].../[database][?propertyName1][=propertyValue1][&propertyName2][=propertyValue2]...

例如:

jdbc:mysql://host1:3306,host2:3307,host3:3308/test?user=root&password=root&autoReconnect=true&failOverReadOnly=false

测试

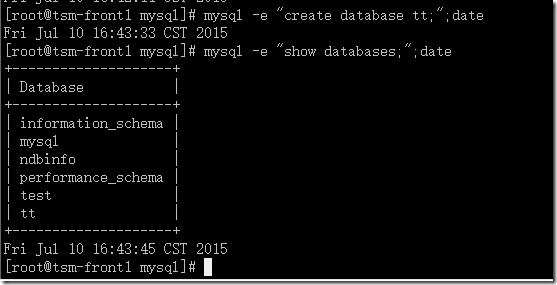

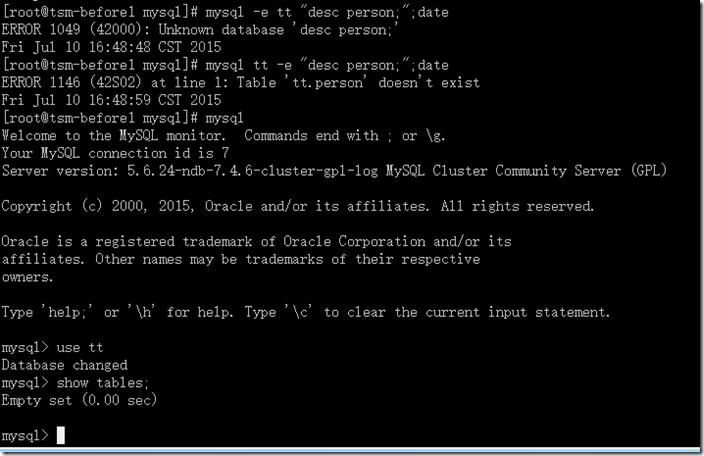

1、SQL A节点创建数据库、SQL B节点查看是否存在

SQL A节点:

SQL B节点:

SQL A节点创建数据库tt

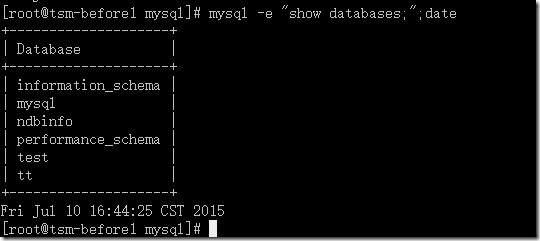

SQL B节点查看数据库tt

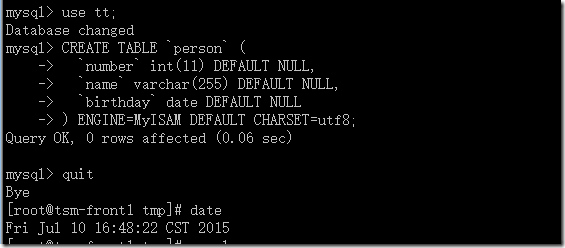

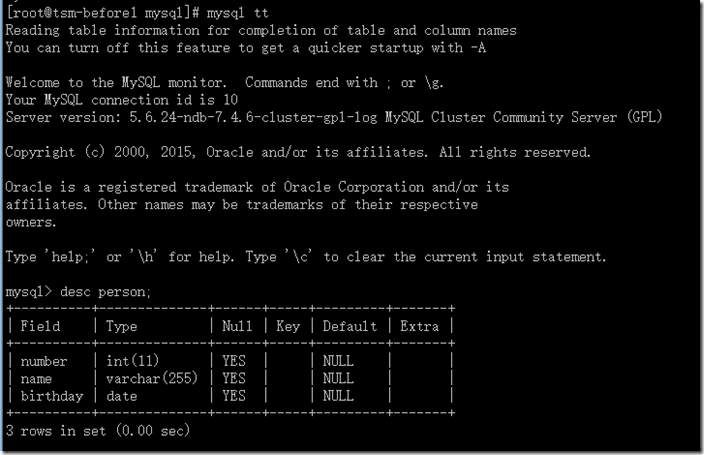

2、SQL A节点创建MyISAM表、SQL B节点查看是否存在

SQL A节点创建表

SQL B节点查看表

再到SQL A节点查看表,及删除测试表

3、SQL A节点创建innodb表、SQL B节点查看是否存在

SQL B节点查看表

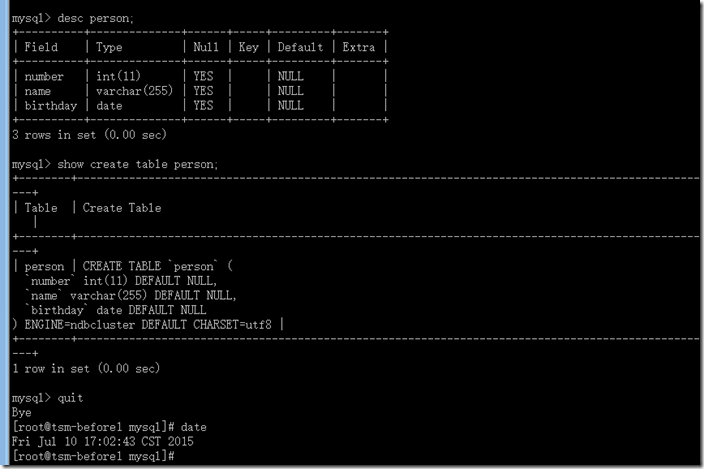

4、SQL A节点创建NDBCLUSTER表、SQL B节点查看是否存在

SQL A节点创建表

SQL B节点查看表

结论:只有ENGINE=NDBCLUSTER的表,才会存在于mysql集群里。

本文出自 “ygqygq2” 博客,谢绝转载!