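HBase and ZooKeeper Installation and Deployment (Part 2)

I. HBase Basics

1. Concepts

HBase is a distributed column-oriented database built on top of HDFS, used for real-time random access to very large datasets. More precisely, it is a column-family-oriented store: tuning and storage are specified at the column-family level, so it is best if all members of a column family share the same general access pattern and size characteristics.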

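2. Regions

HBase automatically partitions tables horizontally into regions.

Each region comprises a subset of a table's rows. A region is identified by the table it belongs to, its first row (inclusive), and its last row (exclusive).

Regions are the smallest unit of data distribution across an HBase cluster. In this way, a table too big for a single server can be spread over a cluster of servers, with each node hosting a subset of the table's regions.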
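To make the "first row inclusive, last row exclusive" rule concrete, here is a minimal sketch of finding the region that holds a given row key. The region boundaries and the node assignments are invented for illustration, and an empty string is assumed to mean "open-ended"; this is not HBase client code.

```python
from bisect import bisect_right

# Hypothetical regions of one table, sorted by start row.
# Each tuple is (start_row inclusive, end_row exclusive, hosting node);
# an empty string marks the open end at either side of the table.
REGIONS = [
    ("", "g", "vm7"),
    ("g", "p", "vm8"),
    ("p", "", "vm7"),
]


def find_region(row_key, regions=REGIONS):
    """Return the region whose [start_row, end_row) range contains row_key."""
    starts = [start for start, _end, _node in regions]
    # bisect_right puts a key equal to a start row into the region that starts there.
    index = bisect_right(starts, row_key) - 1
    return regions[index]
```

A row key equal to a region's start row belongs to that region, while a key equal to its end row already belongs to the next one.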

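3. Implementation

HBase master: bootstraps a fresh install, assigns regions to registered regionservers, and recovers from regionserver failures. The master is lightly loaded.

Regionserver: carries zero or more regions and serves client read and write requests. It also manages region splits, informing the HBase master about the new daughter regions so that the master can take the parent region offline and replace it with the daughters.

HBase depends on ZooKeeper. By default it manages a ZooKeeper instance as the authority on cluster state. HBase keeps vital information there, such as the location of the root catalog table and the address of the current cluster master.

Region assignment is mediated via ZooKeeper. Keeping the assignment transaction state in ZooKeeper means that, if a participating server crashes mid-assignment, recovery can pick up the assignment where the crashed server left off.

When bootstrapping a connection to an HBase cluster, a client must be given at least the location of the ZooKeeper ensemble. From there the client can navigate the ZooKeeper hierarchy to learn cluster attributes such as server locations.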

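4. Filesystem interface implementations

HBase persists data through the Hadoop filesystem API.

There are several implementations of the filesystem interface: one for the local filesystem, and others for the KFS filesystem, Amazon S3, and HDFS. Most people run HBase on HDFS, and so does my company at present.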

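5. HBase in operation

HBase internally keeps special catalog tables named -ROOT- and .META., which maintain the list, state, and location of all regions currently on the cluster.

-ROOT- table: holds the list of .META. table regions.

.META. table: holds the list of all user-space regions.

Entries in these tables are keyed by region name.

A region name is made up of the table name, the region's start row, and its creation timestamp, followed by a hash (MD5) of those three parts.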
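The composition of a region name can be sketched as below. The exact separators (commas between the parts, dots around the hash) are my assumption about the 0.94-era layout, and `region_name` is a made-up helper, not an HBase API.

```python
import hashlib


def region_name(table, start_row, created_ms):
    """Build '<table>,<start row>,<creation timestamp>.<md5 of that prefix>.'

    The separators are an assumption for illustration only.
    """
    prefix = f"{table},{start_row},{created_ms}"
    digest = hashlib.md5(prefix.encode("utf-8")).hexdigest()
    return f"{prefix}.{digest}."
```

Because the hash is derived from the other three parts, two regions of the same table with the same start row still get different names if they were created at different times.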

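6. Interacting with regionservers

A client connected to the ZooKeeper cluster first looks up the location of -ROOT-. Through -ROOT- it finds the location of the .META. region whose scope covers the requested row. It then looks in that .META. region to find the user-space region and the node hosting it. From then on the client talks directly to the regionserver hosting that region.

7. Each row operation may cost up to three round trips to remote nodes; caching reduces this cost

Clients cache what they learn while traversing -ROOT- and .META.: the locations as well as the start and end rows of user-space regions. Later they can work out where a region lives without consulting the .META. table. When a fault occurs, that is, when a region has moved, the client consults .META. again for the new location. If the .META. region has itself moved, the client goes back to -ROOT-.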
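The caching behaviour can be illustrated with a toy locator. Everything here is hypothetical: `catalog_lookup` stands in for the -ROOT- and .META. traversal, and the class only counts how often that slow path is taken.

```python
class CachingLocator:
    """Toy client-side cache in front of a slow catalog lookup."""

    def __init__(self, catalog_lookup):
        self._lookup = catalog_lookup   # hypothetical: row -> (start, end, server)
        self._cache = []                # cached (start, end, server) tuples
        self.remote_lookups = 0         # how many times the catalog was consulted

    @staticmethod
    def _covers(region, row):
        start, end, _server = region
        return start <= row and (end == "" or row < end)

    def locate(self, row):
        """Return the server for row, consulting the catalog only on a cache miss."""
        for region in self._cache:
            if self._covers(region, row):
                return region[2]
        self.remote_lookups += 1
        region = self._lookup(row)
        self._cache.append(region)
        return region[2]

    def invalidate(self, row):
        """Forget the cached region covering row, e.g. after a 'region moved' fault."""
        self._cache = [r for r in self._cache if not self._covers(r, row)]
```

After the first lookup, every row in the same range is answered from the cache; only an invalidation forces another trip to the catalog.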

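8. Regionserver writes

Writes arriving at a regionserver are first appended to the commit log and then added to the in-memory memstore. When the memstore fills, its content is flushed to the filesystem.

9. Regionserver failure recovery

The commit log is kept on HDFS, so it remains available even when a regionserver crashes. The master splits the dead regionserver's commit log by region. After reassignment, and before they open for business, the regions that lived on the dead regionserver pick up their file of not-yet-persisted edits produced by the split. These edits are replayed to bring each region back to the state it had just before the failure.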

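10. Regionserver reads

On a read, the region's memstore is consulted first. If the required versions are found there, the query completes. Otherwise the flush files are consulted in order, from newest to oldest, until versions satisfying the query are found or all flush files have been processed.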
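The write and read paths described in items 8 and 10 can be modelled in a few lines. This is a toy under simplifying assumptions (one value per row, a memstore limit counted in rows rather than bytes, no versions), not how HBase stores data.

```python
class ToyRegion:
    """Toy model of the write and read paths: commit log, memstore, flush files."""

    def __init__(self, memstore_limit=2):
        self.commit_log = []        # every edit is appended here first
        self.memstore = {}          # newest edits, held in memory
        self.flush_files = []       # immutable snapshots, oldest first
        self.memstore_limit = memstore_limit

    def put(self, row, value):
        self.commit_log.append((row, value))   # 1. append to the commit log
        self.memstore[row] = value             # 2. add to the memstore
        if len(self.memstore) >= self.memstore_limit:
            # 3. memstore is full: flush its content as a new file
            self.flush_files.append(dict(self.memstore))
            self.memstore.clear()

    def get(self, row):
        if row in self.memstore:               # the memstore is checked first
            return self.memstore[row]
        for flush_file in reversed(self.flush_files):   # then newest to oldest
            if row in flush_file:
                return flush_file[row]
        return None
```

Reading newest to oldest is what lets a later write shadow an earlier one without rewriting the older flush file.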

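11. Regionserver background processes

A background process compacts flush files once their number reaches a threshold.

A separate process monitors flush file sizes and splits the region once a file grows beyond the configured maximum.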

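II. ZooKeeper Basics

1. Concepts

ZooKeeper is Hadoop's distributed coordination service.

2. Characteristics of ZooKeeper

It is simple

It is expressive

The ZooKeeper primitives are a rich set of building blocks that can be used to build a large class of coordination data structures and protocols, such as distributed queues, distributed locks, and leader election among a group of peers.

It is highly available

ZooKeeper runs on a collection of machines and is designed to be highly available, so applications can depend on it. It helps a system avoid single points of failure, so it can be used to build reliable applications.

It facilitates loosely coupled interactions

ZooKeeper interactions support participants that do not need to know about one another.

It is a library

ZooKeeper provides an open source, shared repository of implementations of common coordination patterns.

For write-dominant workloads, ZooKeeper's benchmarked throughput exceeds 10,000 operations per second; for read-dominant workloads it is several times higher.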

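3. Group membership in ZooKeeper

ZooKeeper can be viewed as a filesystem with high-availability characteristics. It has no files and directories; instead it uses a unified concept of a node, called a znode, which acts both as a container of data (like a file) and as a container of other znodes (like a directory). To model a group, create a parent znode named after the group, then create a child znode under it for each group member (server), named after that member.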

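4. Data model

ZooKeeper maintains a hierarchical tree of znodes. A znode stores data and has an associated ACL. ZooKeeper is designed for coordination, which typically uses small data files, not for high-volume data storage; the amount of data a single znode can hold is limited to 1 MB.

Data access in ZooKeeper is atomic.

A client reading a znode's data either receives all of it or the read fails; it never receives only part of the data. Likewise, a write replaces all the data stored in a znode. ZooKeeper guarantees that a write either succeeds or fails; there is no partial write in which only some of the client's data is stored. ZooKeeper does not support an append operation. These characteristics contrast with HDFS, which is designed for high-volume data storage with streaming data access and supports appends.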

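5. Znode types

Znodes come in two types: ephemeral and persistent. The type is set at creation time and cannot be changed later. An ephemeral znode is deleted by ZooKeeper when the session of the client that created it ends. A persistent znode is not tied to a client session and is deleted only when a client (not necessarily the one that created it) explicitly deletes it. An ephemeral znode may not have children, not even ephemeral ones.

6. Sequence numbers

A sequential znode is one whose name includes a sequence number assigned by ZooKeeper. If the sequential flag is set when a znode is created, the value of a monotonically increasing counter (maintained by the parent znode) is appended to its name, for example /a/b-3.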
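The rules for ephemeral and sequential znodes can be tried out on a small in-memory stand-in. `ToyZNodeTree` is a made-up class, not a ZooKeeper client, and it appends the bare counter value as in the /a/b-3 example above (as far as I know, real ZooKeeper zero-pads the counter to ten digits).

```python
class ToyZNodeTree:
    """In-memory stand-in for a znode tree; not a real ZooKeeper client."""

    def __init__(self):
        self.nodes = {"/": None}   # path -> owning session, None means persistent
        self.counters = {}         # parent path -> next sequence number

    def create(self, path, session=None, ephemeral=False, sequential=False):
        parent = path.rsplit("/", 1)[0] or "/"
        if parent not in self.nodes:
            raise KeyError(f"parent {parent} does not exist")
        if self.nodes[parent] is not None:
            raise ValueError("ephemeral znodes cannot have children")
        if ephemeral and session is None:
            raise ValueError("an ephemeral znode needs an owning session")
        if sequential:
            # The counter belongs to the parent and only ever increases.
            number = self.counters.get(parent, 0)
            self.counters[parent] = number + 1
            path = f"{path}{number}"
        if path in self.nodes:
            raise ValueError(f"{path} already exists")
        self.nodes[path] = session if ephemeral else None
        return path

    def close_session(self, session):
        """Drop every ephemeral znode owned by the closing session."""
        if session is None:
            return
        self.nodes = {p: owner for p, owner in self.nodes.items() if owner != session}
```

With a group znode as the parent and one ephemeral child per member, closing a member's session removes it from the group automatically, which is the group membership pattern described in item 3.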

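7. Watches

Watches let clients be notified when a znode changes in some way. Watches are set by operations on the ZooKeeper service and are triggered by other operations on the service.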
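A small sketch of the idea: a read operation registers a watch, and a later write operation triggers it. The class and the event name string are illustrative only; the one-shot behaviour (a watch fires once and must be set again) reflects how watches work in ZooKeeper 3.4.

```python
class WatchedNode:
    """Toy znode whose reads can register one-shot watches."""

    def __init__(self, data=b""):
        self.data = data
        self._watchers = []

    def get_data(self, watcher=None):
        """Read the data; optionally leave a watch that fires on the next change."""
        if watcher is not None:
            self._watchers.append(watcher)
        return self.data

    def set_data(self, data):
        """Replace the data and fire every pending watch exactly once."""
        self.data = data
        pending, self._watchers = self._watchers, []
        for watcher in pending:
            watcher("NodeDataChanged")
```

Because the watch list is cleared before firing, a client that wants continued notifications has to read again with a new watch.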

II. Basic Environment Preparation (see the previous part)

1. Machines

IP address    Hostname  Role
10.1.2.208    vm13      master
10.1.2.197    vm7       slave
10.1.2.198    vm8       slave

2. System version

CentOS release 6.5

3. Time synchronization

4. Disable the firewall

5. Create the hadoop user and the hadoop group

6. Edit hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
10.1.2.214 master
10.1.2.215 slave-one
10.1.2.216 slave-two
10.1.2.208 vm13
10.1.2.197 vm7
10.1.2.198 vm8

7. Raise the file handle limit

8. Prepare the JVM environment

III. HBase Installation and Configuration

1. Software

hbase-0.94.16.tar.gz
jdk1.7.0_25.tar.gz

2. Unpack the archives

[hadoop@vm13 local]$ ls -ld /usr/local/hbase-0.94.16/
drwxr-xr-x. 11 hadoop hadoop 4096 Jan 13 13:11 /usr/local/hbase-0.94.16/

3. Edit the configuration files

3.1 hbase-env.sh

# JDK installation directory
export JAVA_HOME=/usr/local/jdk1.7.0_25
# Hadoop configuration directory, if needed
export HBASE_CLASSPATH=/usr/local/hadoop-1.0.4/conf
# Heap size to use, in MB
export HBASE_HEAPSIZE=2000
export HBASE_OPTS="-XX:ThreadStackSize=2048 -XX:+UseConcMarkSweepGC"
# Extra ssh options; port 22 is the default
export HBASE_SSH_OPTS="-o ConnectTimeout=1 -o SendEnv=HBASE_CONF_DIR -p 22"
# For now let HBase manage its bundled ZooKeeper (enabled by default); HBase needs ZooKeeper in order to start
export HBASE_MANAGES_ZK=true

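3.2 hbase-site.xml

hbase.rootdir: the directory where HBase stores its data

hbase.cluster.distributed: enables HBase distributed mode

hbase.master: the HBase master node

hbase.zookeeper.quorum: the nodes of the ZooKeeper ensemble; an odd number of nodes is recommended, because a majority quorum gains no extra fault tolerance from an even count

hbase.zookeeper.property.dataDir: ZooKeeper's data directory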

<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
/**
 * Copyright 2010 The Apache Software Foundation
 *
 * Licensed to the Apache Software Foundation (ASF) under one
 * or more contributor license agreements. See the NOTICE file
 * distributed with this work for additional information
 * regarding copyright ownership. The ASF licenses this file
 * to you under the Apache License, Version 2.0 (the
 * "License"); you may not use this file except in compliance
 * with the License. You may obtain a copy of the License at
 *
 * http://www.apache.org/licenses/LICENSE-2.0
 *
 * Unless required by applicable law or agreed to in writing, software
 * distributed under the License is distributed on an "AS IS" BASIS,
 * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
 * See the License for the specific language governing permissions and
 * limitations under the License.
 */
-->
<configuration>
  <property>
    <name>hbase.rootdir</name>
    <value>hdfs://master:9000/hbase</value>
    <description>The directory shared by RegionServers.</description>
  </property>
  <property>
    <name>hbase.cluster.distributed</name>
    <value>true</value>
    <description>
      The mode the cluster will be in. Possible values are
      false: standalone and pseudo-distributed setups with managed Zookeeper
      true: fully-distributed with unmanaged Zookeeper Quorum (see hbase-env.sh)
    </description>
  </property>
  <property>
    <name>hbase.zookeeper.quorum</name>
    <!--<value>dcnamenode1,dchadoop1,dchadoop3,dchbase1,dchbase2</value>-->
    <value>vm13,vm7,vm8</value>
  </property>
  <property>
    <name>hbase.zookeeper.property.dataDir</name>
    <value>/data0/zookeeper</value>
  </property>
  <property>
    <name>hbase.regionserver.handler.count</name>
    <value>32</value>
    <description>Default : 10. Count of RPC Listener instances spun up on RegionServers.
      Same property is used by the Master for count of master handlers.
    </description>
  </property>
  <!--memStore flush policy : no blocking writes-->
  <property>
    <name>hbase.hregion.memstore.flush.size</name>
    <value>134217728</value>
    <description>Default : 134217728(128MB) Memstore will be flushed to disk if size of the memstore
      exceeds this number of bytes. Value is checked by a thread that runs
      every hbase.server.thread.wakefrequency.
    </description>
  </property>
  <property>
    <name>hbase.regionserver.maxlogs</name>
    <value>64</value>
    <description>Default : 32.</description>
  </property>
  <!--
  <property>
    <name>hbase.regionserver.hlog.blocksize</name>
    <value>67108864</value>
    <description>Default is hdfs block size, e.g. 64MB/128MB</description>
  </property>
  -->
  <property>
    <name>hbase.regionserver.optionalcacheflushinterval</name>
    <value>7200000</value>
    <description>Default : 3600000(1 hour). Maximum amount of time an edit lives in memory before being
      automatically flushed. Default 1 hour. Set it to 0 to disable automatic flushing.
    </description>
  </property>
  <!--memStore flush policy : blocking writes-->
  <property>
    <name>hbase.hregion.memstore.block.multiplier</name>
    <value>3</value>
    <description>Default : 2. Block updates if memstore has hbase.hregion.block.memstore
      time hbase.hregion.flush.size bytes. Useful preventing runaway memstore during spikes
      in update traffic. Without an upper-bound, memstore fills such that when it flushes the
      resultant flush files take a long time to compact or split, or worse, we OOME.
    </description>
  </property>
  <property>
    <name>hbase.regionserver.global.memstore.lowerLimit</name>
    <value>0.35</value>
    <description>Default : 0.35. When memstores are being forced to flush to make room in memory,
      keep flushing until we hit this mark. Defaults to 35% of heap. This value equal to
      hbase.regionserver.global.memstore.upperLimit causes the minimum possible flushing to
      occur when updates are blocked due to memstore limiting.
    </description>
  </property>
  <property>
    <name>hbase.regionserver.global.memstore.upperLimit</name>
    <value>0.4</value>
    <description>Default : 0.4. Maximum size of all memstores in a region server before new
      updates are blocked and flushes are forced. Defaults to 40% of heap
    </description>
  </property>
  <property>
    <name>hbase.hstore.blockingStoreFiles</name>
    <value>256</value>
    <description>Default : 7. If more than this number of StoreFiles in any one Store
      (one StoreFile is written per flush of MemStore) then updates are blocked for this
      HRegion until a compaction is completed, or until hbase.hstore.blockingWaitTime has
      been exceeded.
    </description>
  </property>
  <!--split policy-->
  <property>
    <name>hbase.hregion.max.filesize</name>
    <value>4294967296</value>
    <description>Default : 10737418240(10G). Maximum HStoreFile size. If any one of a column
      families' HStoreFiles has grown to exceed this value, the hosting HRegion is split in two.
    </description>
  </property>
  <!--compact policy-->
  <property>
    <name>hbase.hregion.majorcompaction</name>
    <value>0</value>
    <description>Default : 1 day. The time (in miliseconds) between 'major' compactions of all
      HStoreFiles in a region. Default: 1 day. Set to 0 to disable automated major compactions.
    </description>
  </property>
  <property>
    <name>hbase.hstore.compactionThreshold</name>
    <value>5</value>
    <description>Default : 3. If more than this number of HStoreFiles in any one HStore
      (one HStoreFile is written per flush of memstore) then a compaction is run to rewrite all
      HStoreFiles files as one. Larger numbers put off compaction but when it runs, it takes
      longer to complete.
    </description>
  </property>
</configuration>
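To see what some of these values add up to, the following sketch parses a cut-down copy of the file and derives a few thresholds. `SAMPLE` repeats only four of the properties above, `load_properties` is a made-up helper, and the heap figure is the `HBASE_HEAPSIZE=2000` (MB) from hbase-env.sh; treat the results as rough arithmetic rather than exact runtime behaviour.

```python
import xml.etree.ElementTree as ET

# A cut-down copy of hbase-site.xml with only the properties used below.
SAMPLE = """<configuration>
  <property><name>hbase.zookeeper.quorum</name><value>vm13,vm7,vm8</value></property>
  <property><name>hbase.hregion.memstore.flush.size</name><value>134217728</value></property>
  <property><name>hbase.hregion.memstore.block.multiplier</name><value>3</value></property>
  <property><name>hbase.regionserver.global.memstore.upperLimit</name><value>0.4</value></property>
</configuration>"""


def load_properties(xml_text):
    """Return the name -> value pairs of a Hadoop-style configuration file."""
    root = ET.fromstring(xml_text)
    return {p.findtext("name"): p.findtext("value") for p in root.iter("property")}


props = load_properties(SAMPLE)
quorum = props["hbase.zookeeper.quorum"].split(",")
flush_bytes = int(props["hbase.hregion.memstore.flush.size"])
# Updates to a region block once its memstore reaches flush size x multiplier.
block_bytes = flush_bytes * int(props["hbase.hregion.memstore.block.multiplier"])
heap_mb = 2000  # HBASE_HEAPSIZE from hbase-env.sh, in MB
global_limit_mb = heap_mb * float(props["hbase.regionserver.global.memstore.upperLimit"])

print(len(quorum), "quorum members, odd:", len(quorum) % 2 == 1)
print("per-region updates block at", block_bytes // 2**20, "MB")
print("all memstores together capped near", round(global_limit_mb), "MB")
```

With these settings a single region's memstore blocks updates at 384 MB, and all memstores on one regionserver together are limited to roughly 800 MB of the 2000 MB heap.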

3.3 regionservers

vm7
vm8

3.4 Copy the whole modified /usr/local/hbase-0.94.16 directory to vm7 and vm8, making sure its owner and group are set to hadoop

3.5 Create /data0 beforehand, with owner and group hadoop

3.6 Start HBase

[hadoop@vm13 conf]$ start-hbase.sh

3.7 Verify that the processes started

[hadoop@vm13 conf]$ jps
8146 Jps
7988 HMaster

[hadoop@vm7 conf]$ jps
4798 Jps
4571 HQuorumPeer
4656 HRegionServer

[hadoop@vm8 conf]$ jps
4022 HQuorumPeer
4251 Jps
4111 HRegionServer

IV. ZooKeeper

1. Software

zookeeper-3.4.5.tar.gz

2. Unpack the archive

[hadoop@vm13 local]$ pwd
/usr/local
[hadoop@vm13 local]$ ls -l
drwxr-xr-x. 10 hadoop hadoop 4096 Apr 22 2014 zookeeper

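3. Configuration files

3.1 log4j.properties

3.1.1 Configure the root logger

Syntax:

log4j.rootLogger = [ level ] , appenderName1, appenderName2, …

level: the logging priority, one of OFF, FATAL, ERROR, WARN, INFO, DEBUG, ALL, or a level you define yourself. Log4j recommends using only four levels, which from highest to lowest priority are ERROR, WARN, INFO, and DEBUG. The level set here switches the corresponding log output in the application on or off; for example, with INFO set here, no DEBUG-level messages in the application are printed.

appenderName: where the log output goes. Several destinations can be specified at once.

Example: log4j.rootLogger=info,A1,B2,C3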

3.1.2 Configure the log output destinations

Syntax:

log4j.appender.appenderName = fully.qualified.name.of.appender.class

"fully.qualified.name.of.appender.class" can be one of the following five destinations:

1. org.apache.log4j.ConsoleAppender (console)

2. org.apache.log4j.FileAppender (file)

3. org.apache.log4j.DailyRollingFileAppender (a new log file every day)

4. org.apache.log4j.RollingFileAppender (a new file whenever the file reaches a given size)

5. org.apache.log4j.WriterAppender (sends the log output as a stream to any given destination)

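1. ConsoleAppender options

Threshold=WARN: the minimum level of log messages to output.

ImmediateFlush=true: defaults to true, meaning every message is written out immediately.

Target=System.err: selects the console stream; the default is System.out.

2. FileAppender options

Threshold=WARN: the minimum level of log messages to output.

ImmediateFlush=true: defaults to true, meaning every message is written out immediately.

File=mylog.txt: write messages to the file mylog.txt.

Append=false: the default is true, which appends messages to the file; false overwrites the file's existing content.

3. DailyRollingFileAppender options

Threshold=WARN: the minimum level of log messages to output.

ImmediateFlush=true: defaults to true, meaning every message is written out immediately.

File=mylog.txt: write messages to the file mylog.txt.

Append=false: the default is true, which appends messages to the file; false overwrites the file's existing content.

DatePattern='.'yyyy-ww: roll the file once a week, that is, produce a new file every week. Rolling can also be monthly, weekly, daily, twice daily, hourly, or every minute, using the following patterns:

1) '.'yyyy-MM: every month

2) '.'yyyy-ww: every week

3) '.'yyyy-MM-dd: every day

4) '.'yyyy-MM-dd-a: twice a day

5) '.'yyyy-MM-dd-HH: every hour

6) '.'yyyy-MM-dd-HH-mm: every minute

4. RollingFileAppender options

Threshold=WARN: the minimum level of log messages to output.

ImmediateFlush=true: defaults to true, meaning every message is written out immediately.

File=mylog.txt: write messages to the file mylog.txt.

Append=false: the default is true, which appends messages to the file; false overwrites the file's existing content.

MaxFileSize=100KB: the suffix can be KB, MB, or GB. When the log file reaches this size it is rolled automatically, that is, its existing content is moved to mylog.txt.1.

MaxBackupIndex=2: the maximum number of rolled files to keep.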

3.1.3 Configure the log message format

Syntax:

1). log4j.appender.appenderName.layout = fully.qualified.name.of.layout.class

"fully.qualified.name.of.layout.class" can be one of the following four layouts:

1. org.apache.log4j.HTMLLayout (lays the output out as an HTML table),

2. org.apache.log4j.PatternLayout (lets you specify the layout pattern flexibly),

3. org.apache.log4j.SimpleLayout (contains the level and the message string),

4. org.apache.log4j.TTCCLayout (contains the time, thread, category, and similar information)

1. HTMLLayout options

LocationInfo=true: defaults to false; outputs the Java file name and line number

Title=my app file: the default is Log4J Log Messages.

2. PatternLayout options

ConversionPattern=%m%n: specifies how each message is formatted.

3. XMLLayout options

LocationInfo=true: defaults to false; outputs the Java file name and line number

2). log4j.appender.A1.layout.ConversionPattern=%-4r %-5p %d{yyyy-MM-dd HH:mm:ssS} %c %m%n

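The symbols used in the log message format have the following meanings:

-X: left-align the output of item X;

%p: the priority of the log message, that is, DEBUG, INFO, WARN, ERROR, or FATAL

%d: the date or time of the log event. The default format is ISO8601; a format can also be given after it, for example %d{yyyy MMM dd HH:mm:ss,SSS}, which produces output like: 2002 Oct 18 22:10:28,921

%r: the number of milliseconds elapsed from application start to the output of this log message

%c: the category the log message belongs to, usually the fully qualified name of the class

%t: the name of the thread that produced the log event

%l: the location where the log event occurred, equivalent to the combination %C.%M(%F:%L): the class name, the method, the source file, and the line number. Example: Testlog4.main(TestLog4.java:10)

%x: the NDC (nested diagnostic context) associated with the current thread, used mainly in multi-client, multi-threaded applications such as Java servlets.

%%: a literal "%" character

%F: the name of the file in which the log message was produced

%L: the line number in the code

%m: the message given in the code, that is, the actual log text

%n: a line separator, "\r\n" on Windows and "\n" on Unix, so that each log message ends with a line break

Modifiers can be placed between the % and the pattern character to control the minimum width, the maximum width, and the alignment of the text. For example:

1) %20c: outputs the category name with a minimum width of 20; a name shorter than 20 characters is right-aligned by default.

2) %-20c: outputs the category name with a minimum width of 20; for a name shorter than 20 characters, the "-" sign requests left alignment.

3) %.30c: outputs the category name with a maximum width of 30; a name longer than 30 characters has its excess characters cut off from the left, while a shorter name is not padded with spaces.

4) %20.30c: a category name shorter than 20 characters is padded with spaces and right-aligned; a name longer than 30 characters has its excess characters cut off from the left.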
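The four modifier cases can be reproduced with a few lines of plain Python. `apply_modifier` is a made-up function that only imitates the padding and truncation rules listed above; it does not call log4j.

```python
def apply_modifier(text, min_width=None, max_width=None, left_align=False):
    """Imitate log4j's %[-][min][.max]c handling of a category name."""
    if max_width is not None and len(text) > max_width:
        text = text[-max_width:]          # excess characters are cut from the left
    if min_width is not None and len(text) < min_width:
        # pad with spaces; right-aligned unless "-" was given
        text = text.ljust(min_width) if left_align else text.rjust(min_width)
    return text


name = "org.apache.zookeeper.server.quorum.QuorumPeerMain"
print(repr(apply_modifier("root", min_width=20)))                    # like %20c
print(repr(apply_modifier("root", min_width=20, left_align=True)))   # like %-20c
print(repr(apply_modifier(name, max_width=30)))                      # like %.30c
print(repr(apply_modifier(name, min_width=20, max_width=30)))        # like %20.30c
```

Cutting from the left keeps the end of the category name, which for a class name is usually the most informative part.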

# Define some default values that can be overridden by system properties

zookeeper.root.logger=INFO,ROLLINGFILE

zookeeper.console.threshold=INFO

# zookeeper.log.dir=.

zookeeper.log.dir=/data0/zookeeper/logs

zookeeper.log.file=zookeeper.log

zookeeper.log.threshold=INFO

# zookeeper.tracelog.dir=.

zookeeper.tracelog.dir=/data0/zookeeper/logs

zookeeper.tracelog.file=zookeeper_trace.log

#

# ZooKeeper Logging Configuration

#

# Format is "<default threshold> (, <appender>)+

# DEFAULT: console appender only

log4j.rootLogger=${zookeeper.root.logger}

# Example with rolling log file

#log4j.rootLogger=DEBUG, CONSOLE, ROLLINGFILE

# Example with rolling log file and tracing

#log4j.rootLogger=TRACE, CONSOLE, ROLLINGFILE, TRACEFILE

#

# Log INFO level and above messages to the console

#

log4j.appender.CONSOLE=org.apache.log4j.ConsoleAppender

log4j.appender.CONSOLE.Threshold=${zookeeper.console.threshold}

log4j.appender.CONSOLE.layout=org.apache.log4j.PatternLayout

log4j.appender.CONSOLE.layout.ConversionPattern=%d{ISO8601} [myid:%X{myid}] - %-5p [%t:%C{1}@%L] - %m%n

#

# Add ROLLINGFILE to rootLogger to get log file output

# Log DEBUG level and above messages to a log file

log4j.appender.ROLLINGFILE=org.apache.log4j.RollingFileAppender

log4j.appender.ROLLINGFILE.Threshold=${zookeeper.log.threshold}

log4j.appender.ROLLINGFILE.File=${zookeeper.log.dir}/${zookeeper.log.file}

# Max log file size of 10MB

log4j.appender.ROLLINGFILE.MaxFileSize=10MB

# uncomment the next line to limit number of backup files

#log4j.appender.ROLLINGFILE.MaxBackupIndex=10

log4j.appender.ROLLINGFILE.layout=org.apache.log4j.PatternLayout

log4j.appender.ROLLINGFILE.layout.ConversionPattern=%d{ISO8601} [myid:%X{myid}] - %-5p [%t:%C{1}@%L] - %m%n

#

# Add TRACEFILE to rootLogger to get log file output

# Log DEBUG level and above messages to a log file

log4j.appender.TRACEFILE=org.apache.log4j.FileAppender

log4j.appender.TRACEFILE.Threshold=TRACE

log4j.appender.TRACEFILE.File=${zookeeper.tracelog.dir}/${zookeeper.tracelog.file}

log4j.appender.TRACEFILE.layout=org.apache.log4j.PatternLayout

### Notice we are including log4j's NDC here (%x)

log4j.appender.TRACEFILE.layout.ConversionPattern=%d{ISO8601} [myid:%X{myid}] - %-5p [%t:%C{1}@%L][%x] - %m%n

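3.2 zoo.cfg

Each server in a ZooKeeper ensemble has a numeric ID that is unique within the ensemble and must fall between 1 and 255. The ID is set in a plain-text file named myid, stored in the directory given by the dataDir parameter.

Each server is added with a line of the following form:

server.n=hostname:port:port

n is the server ID. There are two port settings: the first is the port followers use to connect to the leader, and the second is used for leader election. For example, with server.2=10.1.2.198:12888:13888 a server listens on three ports: 2181 for client connections; 12888 for follower connections, if it is the leader; and 13888 for connections from other servers during the leader election phase. When a ZooKeeper server starts, it reads the myid file to determine its server ID, then reads the configuration file to determine which ports to listen on and to discover the network addresses of the other servers in the ensemble.

initLimit: the amount of time allowed for followers to connect to and sync with the leader. If a majority of followers fail to sync within this period, the leader renounces its leadership status and another leader election takes place. If this happens often (the logs will show it), the setting is too low.

syncLimit: the amount of time allowed for a follower to sync with the leader. If a follower fails to sync within this period, it restarts itself, and the clients attached to it connect to another follower.

tickTime: the fundamental unit of time in ZooKeeper, in milliseconds. initLimit and syncLimit are expressed as multiples of it.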

# The number of milliseconds of each tick
tickTime=2000
# The number of ticks that the initial
# synchronization phase can take
initLimit=10
# The number of ticks that can pass between
# sending a request and getting an acknowledgement
syncLimit=5
# the directory where the snapshot is stored.
# do not use /tmp for storage, /tmp here is just
# example sakes.
dataDir=/data0/zookeeper/data
# the port at which the clients will connect
clientPort=2181
server.1=vm13:12888:13888
server.2=vm7:12888:13888
server.3=vm8:12888:13888
#
# Be sure to read the maintenance section of the
# administrator guide before turning on autopurge.
#
# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance
#
# The number of snapshots to retain in dataDir
#autopurge.snapRetainCount=3
# Purge task interval in hours
# Set to "0" to disable auto purge feature
#autopurge.purgeInterval=1
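As a quick sanity check of such a file, the sketch below parses the server.n lines and converts the tick-based limits into milliseconds. `parse_zoo_cfg` is a made-up helper and `CFG` is a trimmed copy of the settings above; the real ZooKeeper parser does more validation than this.

```python
def parse_zoo_cfg(text):
    """Split zoo.cfg text into plain settings and server.n entries."""
    settings, servers = {}, {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = (part.strip() for part in line.split("=", 1))
        if key.startswith("server."):
            server_id = int(key.split(".", 1)[1])
            if not 1 <= server_id <= 255:
                raise ValueError(f"server ID {server_id} is outside 1..255")
            host, peer_port, election_port = value.split(":")
            servers[server_id] = (host, int(peer_port), int(election_port))
        else:
            settings[key] = value
    return settings, servers


CFG = """\
tickTime=2000
initLimit=10
syncLimit=5
dataDir=/data0/zookeeper/data
clientPort=2181
server.1=vm13:12888:13888
server.2=vm7:12888:13888
server.3=vm8:12888:13888
"""

settings, servers = parse_zoo_cfg(CFG)
tick_ms = int(settings["tickTime"])
init_ms = tick_ms * int(settings["initLimit"])   # time allowed for the initial sync
sync_ms = tick_ms * int(settings["syncLimit"])   # time allowed for a follower to sync
print(servers)
print("initLimit =", init_ms, "ms; syncLimit =", sync_ms, "ms")
```

With tickTime=2000, initLimit=10 gives followers 20 seconds for the initial sync and syncLimit=5 gives them 10 seconds afterwards.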

3.3 zookeeper-env.sh

ZOO_LOG_DIR=/data0/zookeeper/logs
ZOO_LOG4J_PROP=INFO,ROLLINGFILE

V. Startup

1. Edit hbase-env.sh so that HBase no longer manages its bundled ZooKeeper

export HBASE_MANAGES_ZK=false

2. Start ZooKeeper and check the log

[hadoop@vm13 data]$ zkServer.sh start
JMX enabled by default
Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg
Starting zookeeper ... STARTED
[hadoop@vm13 data]$ tail /data0/zookeeper/logs/zookeeper.log -n 30
org.apache.zookeeper.server.quorum.QuorumPeerConfig$ConfigException: Error processing /usr/local/zookeeper/bin/../conf/zoo.cfg
    at org.apache.zookeeper.server.quorum.QuorumPeerConfig.parse(QuorumPeerConfig.java:121)
    at org.apache.zookeeper.server.quorum.QuorumPeerMain.initializeAndRun(QuorumPeerMain.java:101)
    at org.apache.zookeeper.server.quorum.QuorumPeerMain.main(QuorumPeerMain.java:78)
Caused by: java.lang.IllegalArgumentException: serverid null is not a number
    at org.apache.zookeeper.server.quorum.QuorumPeerConfig.parseProperties(QuorumPeerConfig.java:358)
    at org.apache.zookeeper.server.quorum.QuorumPeerConfig.parse(QuorumPeerConfig.java:117)
    ... 2 more
2016-01-14 13:19:27,482 [myid:] - INFO [main:QuorumPeerConfig@101] - Reading configuration from: /usr/local/zookeeper/bin/../conf/zoo.cfg
2016-01-14 13:19:27,488 [myid:] - INFO [main:QuorumPeerConfig@334] - Defaulting to majority quorums
2016-01-14 13:19:27,489 [myid:] - ERROR [main:QuorumPeerMain@85] - Invalid config, exiting abnormally
org.apache.zookeeper.server.quorum.QuorumPeerConfig$ConfigException: Error processing /usr/local/zookeeper/bin/../conf/zoo.cfg
    at org.apache.zookeeper.server.quorum.QuorumPeerConfig.parse(QuorumPeerConfig.java:121)
    at org.apache.zookeeper.server.quorum.QuorumPeerMain.initializeAndRun(QuorumPeerMain.java:101)
    at org.apache.zookeeper.server.quorum.QuorumPeerMain.main(QuorumPeerMain.java:78)
Caused by: java.lang.IllegalArgumentException: serverid null is not a number
    at org.apache.zookeeper.server.quorum.QuorumPeerConfig.parseProperties(QuorumPeerConfig.java:358)
    at org.apache.zookeeper.server.quorum.QuorumPeerConfig.parse(QuorumPeerConfig.java:117)
    ... 2 more
2016-01-14 13:19:36,661 [myid:] - INFO [main:QuorumPeerConfig@101] - Reading configuration from: /usr/local/zookeeper/bin/../conf/zoo.cfg
2016-01-14 13:19:36,666 [myid:] - INFO [main:QuorumPeerConfig@334] - Defaulting to majority quorums
2016-01-14 13:19:36,668 [myid:] - ERROR [main:QuorumPeerMain@85] - Invalid config, exiting abnormally
org.apache.zookeeper.server.quorum.QuorumPeerConfig$ConfigException: Error processing /usr/local/zookeeper/bin/../conf/zoo.cfg
    at org.apache.zookeeper.server.quorum.QuorumPeerConfig.parse(QuorumPeerConfig.java:121)
    at org.apache.zookeeper.server.quorum.QuorumPeerMain.initializeAndRun(QuorumPeerMain.java:101)
    at org.apache.zookeeper.server.quorum.QuorumPeerMain.main(QuorumPeerMain.java:78)
Caused by: java.lang.IllegalArgumentException: serverid null is not a number
    at org.apache.zookeeper.server.quorum.QuorumPeerConfig.parseProperties(QuorumPeerConfig.java:358)
    at org.apache.zookeeper.server.quorum.QuorumPeerConfig.parse(QuorumPeerConfig.java:117)
    ... 2 more

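The log shows that ZooKeeper's startup entry point is org.apache.zookeeper.server.quorum.QuorumPeerMain.

The main method of this class begins the startup sequence by parsing the configuration, namely zoo.cfg and myid. The error "serverid null is not a number" indicates that the server could not obtain a numeric ID from the myid file, so the server IDs have to be created.

3. Create the server IDs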

[hadoop@vm13 data]$ echo 1 > myid

[hadoop@vm7 zookeeper]$ echo 2 > /data0/zookeeper/data/myid

[hadoop@vm8 zookeeper]$ echo 3 > /data0/zookeeper/data/myid

4. Start again

The ZooKeeper service must be started separately on each of the three machines

[hadoop@vm13 data]$ zkServer.sh start
JMX enabled by default
Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg
Starting zookeeper ... STARTED
[hadoop@vm13 data]$ tail /data0/zookeeper/logs/zookeeper.log -n 30
    at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:182)
    at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)
    at java.net.Socket.connect(Socket.java:579)
    at org.apache.zookeeper.server.quorum.QuorumCnxManager.connectOne(QuorumCnxManager.java:354)
    at org.apache.zookeeper.server.quorum.QuorumCnxManager.toSend(QuorumCnxManager.java:327)
    at org.apache.zookeeper.server.quorum.FastLeaderElection$Messenger$WorkerSender.process(FastLeaderElection.java:393)
    at org.apache.zookeeper.server.quorum.FastLeaderElection$Messenger$WorkerSender.run(FastLeaderElection.java:365)
    at java.lang.Thread.run(Thread.java:724)
2016-01-14 13:29:27,154 [myid:0] - INFO [QuorumPeer[myid=0]/0:0:0:0:0:0:0:0:12181:QuorumPeer@738] - FOLLOWING
2016-01-14 13:29:27,177 [myid:0] - INFO [QuorumPeer[myid=0]/0:0:0:0:0:0:0:0:12181:Learner@85] - TCP NoDelay set to: true
2016-01-14 13:29:27,184 [myid:0] - INFO [QuorumPeer[myid=0]/0:0:0:0:0:0:0:0:12181:Environment@100] - Server environment:zookeeper.version=3.4.5-1392090, built on 09/30/2012 17:52 GMT
2016-01-14 13:29:27,184 [myid:0] - INFO [QuorumPeer[myid=0]/0:0:0:0:0:0:0:0:12181:Environment@100] - Server environment:host.name=vm13
2016-01-14 13:29:27,184 [myid:0] - INFO [QuorumPeer[myid=0]/0:0:0:0:0:0:0:0:12181:Environment@100] - Server environment:java.version=1.7.0_25
2016-01-14 13:29:27,184 [myid:0] - INFO [QuorumPeer[myid=0]/0:0:0:0:0:0:0:0:12181:Environment@100] - Server environment:java.vendor=Oracle Corporation
2016-01-14 13:29:27,185 [myid:0] - INFO [QuorumPeer[myid=0]/0:0:0:0:0:0:0:0:12181:Environment@100] - Server environment:java.home=/usr/local/jdk1.7.0_25/jre
2016-01-14 13:29:27,185 [myid:0] - INFO [QuorumPeer[myid=0]/0:0:0:0:0:0:0:0:12181:Environment@100] - Server environment:java.class.path=/usr/local/zookeeper/bin/../build/classes:/usr/local/zookeeper/bin/../build/lib/*.jar:/usr/local/zookeeper/bin/../lib/slf4j-log4j12-1.6.1.jar:/usr/local/zookeeper/bin/../lib/slf4j-api-1.6.1.jar:/usr/local/zookeeper/bin/../lib/netty-3.2.2.Final.jar:/usr/local/zookeeper/bin/../lib/log4j-1.2.15.jar:/usr/local/zookeeper/bin/../lib/jline-0.9.94.jar:/usr/local/zookeeper/bin/../zookeeper-3.4.5.jar:/usr/local/zookeeper/bin/../src/java/lib/*.jar:/usr/local/zookeeper/bin/../conf:
2016-01-14 13:29:27,185 [myid:0] - INFO [QuorumPeer[myid=0]/0:0:0:0:0:0:0:0:12181:Environment@100] - Server environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib
2016-01-14 13:29:27,185 [myid:0] - INFO [QuorumPeer[myid=0]/0:0:0:0:0:0:0:0:12181:Environment@100] - Server environment:java.io.tmpdir=/tmp
2016-01-14 13:29:27,186 [myid:0] - INFO [QuorumPeer[myid=0]/0:0:0:0:0:0:0:0:12181:Environment@100] - Server environment:java.compiler=<NA>
2016-01-14 13:29:27,186 [myid:0] - INFO [QuorumPeer[myid=0]/0:0:0:0:0:0:0:0:12181:Environment@100] - Server environment:os.name=Linux
2016-01-14 13:29:27,186 [myid:0] - INFO [QuorumPeer[myid=0]/0:0:0:0:0:0:0:0:12181:Environment@100] - Server environment:os.arch=amd64
2016-01-14 13:29:27,189 [myid:0] - INFO [QuorumPeer[myid=0]/0:0:0:0:0:0:0:0:12181:Environment@100] - Server environment:os.version=2.6.32-431.el6.x86_64
2016-01-14 13:29:27,189 [myid:0] - INFO [QuorumPeer[myid=0]/0:0:0:0:0:0:0:0:12181:Environment@100] - Server environment:user.name=hadoop
2016-01-14 13:29:27,190 [myid:0] - INFO [QuorumPeer[myid=0]/0:0:0:0:0:0:0:0:12181:Environment@100] - Server environment:user.home=/home/hadoop
2016-01-14 13:29:27,190 [myid:0] - INFO [QuorumPeer[myid=0]/0:0:0:0:0:0:0:0:12181:Environment@100] - Server environment:user.dir=/data0/zookeeper/data
2016-01-14 13:29:27,191 [myid:0] - INFO [QuorumPeer[myid=0]/0:0:0:0:0:0:0:0:12181:ZooKeeperServer@162] - Created server with tickTime 2000 minSessionTimeout 4000 maxSessionTimeout 40000 datadir /data0/zookeeper/data/version-2 snapdir /data0/zookeeper/data/version-2
2016-01-14 13:29:27,194 [myid:0] - INFO [QuorumPeer[myid=0]/0:0:0:0:0:0:0:0:12181:Follower@63] - FOLLOWING - LEADER ELECTION TOOK - 283
2016-01-14 13:29:27,223 [myid:0] - INFO [QuorumPeer[myid=0]/0:0:0:0:0:0:0:0:12181:Learner@322] - Getting a diff from the leader 0x100000002
2016-01-14 13:29:27,228 [myid:0] - INFO [QuorumPeer[myid=0]/0:0:0:0:0:0:0:0:12181:FileTxnSnapLog@240] - Snapshotting: 0x100000002 to /data0/zookeeper/data/version-2/snapshot.100000002
2016-01-14 13:29:27,230 [myid:0] - INFO [QuorumPeer[myid=0]/0:0:0:0:0:0:0:0:12181:FileTxnSnapLog@240] - Snapshotting: 0x100000002 to /data0/zookeeper/data/version-2/snapshot.100000002

5. Verify

Use nc (telnet also works) to send the ruok command (Are you OK?) to the listening port to check that ZooKeeper is running

[hadoop@vm13 hbase]$ echo "ruok" | nc localhost 2181
imok
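The same check can be scripted without nc. The sketch below sends the four-letter command over a plain TCP socket and returns whatever the server replies; `four_letter_word` is a made-up helper, and the host and port passed to it are assumptions that must match your own clientPort.

```python
import socket


def four_letter_word(host, port, command=b"ruok", timeout=2.0):
    """Send a four-letter command to a ZooKeeper server and return its reply as text."""
    with socket.create_connection((host, port), timeout=timeout) as conn:
        conn.sendall(command)
        conn.shutdown(socket.SHUT_WR)   # signal that nothing more will be sent
        chunks = []
        while True:
            data = conn.recv(4096)
            if not data:                # the server closes the connection after replying
                break
            chunks.append(data)
    return b"".join(chunks).decode("ascii", "replace")
```

On the setup above, `four_letter_word("localhost", 2181)` should return `imok` when the server is healthy; a refused connection raises an exception instead, which is itself a useful signal.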

Process check

[hadoop@vm13 data]$ jps
9134 Jps
9110 QuorumPeerMain
[hadoop@vm7 zookeeper]$ jps
5312 Jps
5287 QuorumPeerMain
[hadoop@vm8 zookeeper]$ jps
4797 Jps
4732 QuorumPeerMain

6. Start HBase and check the log

[hadoop@vm13 data]$ start-hbase.sh start
starting master, logging to /usr/local/hbase-0.94.16//logs/hbase-hadoop-master-vm13.out
vm7: starting regionserver, logging to /usr/local/hbase-0.94.16//logs/hbase-hadoop-regionserver-vm7.out
vm8: starting regionserver, logging to /usr/local/hbase-0.94.16//logs/hbase-hadoop-regionserver-vm8.out

[hadoop@vm13 hbase-0.94.16]$ tail /usr/local/hbase-0.94.16/logs/hbase-hadoop-master-vm13.log -n 30
    at org.apache.hadoop.hbase.master.HMasterCommandLine.startMaster(HMasterCommandLine.java:152)
    at org.apache.hadoop.hbase.master.HMasterCommandLine.run(HMasterCommandLine.java:104)
    at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:65)
    at org.apache.hadoop.hbase.util.ServerCommandLine.doMain(ServerCommandLine.java:76)
    at org.apache.hadoop.hbase.master.HMaster.main(HMaster.java:2120)
Caused by: org.apache.zookeeper.KeeperException$ConnectionLossException: KeeperErrorCode = ConnectionLoss for /hbase
    at org.apache.zookeeper.KeeperException.create(KeeperException.java:99)
    at org.apache.zookeeper.KeeperException.create(KeeperException.java:51)
    at org.apache.zookeeper.ZooKeeper.exists(ZooKeeper.java:1041)
    at org.apache.zookeeper.ZooKeeper.exists(ZooKeeper.java:1069)
    at org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper.exists(RecoverableZooKeeper.java:199)
    at org.apache.hadoop.hbase.zookeeper.ZKUtil.createAndFailSilent(ZKUtil.java:1109)
    at org.apache.hadoop.hbase.zookeeper.ZKUtil.createAndFailSilent(ZKUtil.java:1099)
    at org.apache.hadoop.hbase.zookeeper.ZKUtil.createAndFailSilent(ZKUtil.java:1083)
    at org.apache.hadoop.hbase.zookeeper.ZooKeeperWatcher.createBaseZNodes(ZooKeeperWatcher.java:162)
    at org.apache.hadoop.hbase.zookeeper.ZooKeeperWatcher.<init>(ZooKeeperWatcher.java:155)
    at org.apache.hadoop.hbase.master.HMaster.<init>(HMaster.java:347)
    at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
    at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:57)
    at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
    at java.lang.reflect.Constructor.newInstance(Constructor.java:526)
    at org.apache.hadoop.hbase.master.HMaster.constructMaster(HMaster.java:2101)
    ... 5 more
2016-01-14 13:32:33,200 INFO org.apache.zookeeper.ClientCnxn: Opening socket connection to server vm8/10.1.2.198:2181. Will not attempt to authenticate using SASL (unknown error)
2016-01-14 13:32:33,201 WARN org.apache.zookeeper.ClientCnxn: Session 0x0 for server null, unexpected error, closing socket connection and attempting reconnect
java.net.ConnectException: Connection refused
    at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)
    at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:708)
    at org.apache.zookeeper.ClientCnxnSocketNIO.doTransport(ClientCnxnSocketNIO.java:350)
    at org.apache.zookeeper.ClientCnxn$SendThread.run(ClientCnxn.java:1068)

The log shows: org.apache.zookeeper.KeeperException$ConnectionLossException: KeeperErrorCode = ConnectionLoss for /hbase. This is because the earlier run, in which HBase managed its bundled ZooKeeper, had already created the /hbase directory on HDFS, so go back to the master of the Hadoop cluster and check:

[root@master hadoop1.0]# hadoop fs -ls /
Found 2 items
drwxr-xr-x - hadoop supergroup 0 2016-01-11 11:40 /hadoop
drwxr-xr-x - hadoop supergroup 0 2016-01-14 10:42 /hbase

Delete /hbase

[hadoop@master hadoop1.0]$ hadoop fs -rmr /hbase
Moved to trash: hdfs://master:9000/hbase

Also, if ZooKeeper does not listen on the default port 2181 but on, say, 12181, the following must be added to hbase-site.xml

<property>
  <name>hbase.zookeeper.property.clientPort</name>
  <value>12181</value>
  <description>
    Property from ZooKeeper's config zoo.cfg. The port at which the clients will connect.
  </description>
</property>

Start again

[hadoop@vm13 conf]$ start-hbase.sh
starting master, logging to /usr/local/hbase-0.94.16//logs/hbase-hadoop-master-vm13.out
vm8: starting regionserver, logging to /usr/local/hbase-0.94.16//logs/hbase-hadoop-regionserver-vm8.out
vm7: starting regionserver, logging to /usr/local/hbase-0.94.16//logs/hbase-hadoop-regionserver-vm7.out

Check the log

[hadoop@vm13 conf]$ tail ../logs/hbase-hadoop-master-vm13.log -n 50

2016-01-14 16:34:19,901 DEBUG org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation: Looked up root region location, connection=org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation@4c627d22; serverName=vm7,60020,1452760452808

2016-01-14 16:34:19,904 INFO org.apache.hadoop.hbase.catalog.CatalogTracker: Failed verification of .META.,,1 at address=vm7,60020,1452760130913; org.apache.hadoop.hbase.NotServingRegionException: org.apache.hadoop.hbase.NotServingRegionException: Region is not online: .META.,,1

2016-01-14 16:34:19,957 DEBUG org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation: Looked up root region location, connection=org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation@4c627d22; serverName=vm7,60020,1452760452808

2016-01-14 16:34:19,958 DEBUG org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation: Looked up root region location, connection=org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation@4c627d22; serverName=vm7,60020,1452760452808

2016-01-14 16:34:19,967 INFO org.apache.hadoop.hbase.catalog.CatalogTracker: Failed verification of .META.,,1 at address=vm7,60020,1452760130913; org.apache.hadoop.hbase.NotServingRegionException: org.apache.hadoop.hbase.NotServingRegionException: Region is not online: .META.,,1

2016-01-14 16:34:19,973 DEBUG org.apache.hadoop.hbase.master.AssignmentManager: Handling transition=RS_ZK_REGION_OPENING, server=vm8,60020,1452760452737, region=1028785192/.META.

2016-01-14 16:34:20,019 DEBUG org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation: Looked up root region location, connection=org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation@4c627d22; serverName=vm7,60020,1452760452808

2016-01-14 16:34:20,021 DEBUG org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation: Looked up root region location, connection=org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation@4c627d22; serverName=vm7,60020,1452760452808

2016-01-14 16:34:20,025 INFO org.apache.hadoop.hbase.catalog.CatalogTracker: Failed verification of .META.,,1 at address=vm7,60020,1452760130913; org.apache.hadoop.hbase.NotServingRegionException: org.apache.hadoop.hbase.NotServingRegionException: Region is not online: .META.,,1

2016-01-14 16:34:20,030 DEBUG org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation: Looked up root region location, connection=org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation@4c627d22; serverName=vm7,60020,1452760452808

2016-01-14 16:34:20,032 DEBUG org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation: Looked up root region location, connection=org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation@4c627d22; serverName=vm7,60020,1452760452808

2016-01-14 16:34:20,038 INFO org.apache.hadoop.hbase.catalog.CatalogTracker: Failed verification of .META.,,1 at address=vm7,60020,1452760130913; org.apache.hadoop.hbase.NotServingRegionException: org.apache.hadoop.hbase.NotServingRegionException: Region is not online: .META.,,1

2016-01-14 16:34:20,093 DEBUG org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation: Looked up root region location, connection=org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation@4c627d22; serverName=vm7,60020,1452760452808

2016-01-14 16:34:20,094 DEBUG org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation: Looked up root region location, connection=org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation@4c627d22; serverName=vm7,60020,1452760452808

2016-01-14 16:34:20,098 INFO org.apache.hadoop.hbase.catalog.CatalogTracker: Failed verification of .META.,,1 at address=vm7,60020,1452760130913; org.apache.hadoop.hbase.NotServingRegionException: org.apache.hadoop.hbase.NotServingRegionException: Region is not online: .META.,,1

2016-01-14 16:34:20,133 DEBUG org.apache.hadoop.hbase.master.AssignmentManager: Handling transition=RS_ZK_REGION_OPENED, server=vm8,60020,1452760452737, region=1028785192/.META.

2016-01-14 16:34:20,136 INFO org.apache.hadoop.hbase.master.handler.OpenedRegionHandler: Handling OPENED event for .META.,,1.1028785192 from vm8,60020,1452760452737; deleting unassigned node

2016-01-14 16:34:20,136 DEBUG org.apache.hadoop.hbase.zookeeper.ZKAssign: master:60000-0x1523f403d6e0003 Deleting existing unassigned node for 1028785192 that is in expected state RS_ZK_REGION_OPENED

2016-01-14 16:34:20,140 DEBUG org.apache.hadoop.hbase.master.AssignmentManager: The znode of region .META.,,1.1028785192 has been deleted.

2016-01-14 16:34:20,140 INFO org.apache.hadoop.hbase.master.AssignmentManager: The master has opened the region .META.,,1.1028785192 that was online on vm8,60020,1452760452737

2016-01-14 16:34:20,140 DEBUG org.apache.hadoop.hbase.catalog.CatalogTracker: Current cached META location, null, is not valid, resetting

2016-01-14 16:34:20,142 DEBUG org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation: Looked up root region location, connection=org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation@4c627d22; serverName=vm7,60020,1452760452808

2016-01-14 16:34:20,144 DEBUG org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation: Looked up root region location, connection=org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation@4c627d22; serverName=vm7,60020,1452760452808

2016-01-14 16:34:20,145 DEBUG org.apache.hadoop.hbase.zookeeper.ZKAssign: master:60000-0x1523f403d6e0003 Successfully deleted unassigned node for region 1028785192 in expected state RS_ZK_REGION_OPENED

2016-01-14 16:34:20,151 DEBUG org.apache.hadoop.hbase.catalog.CatalogTracker: Set new cached META location: vm8,60020,1452760452737

2016-01-14 16:34:20,151 INFO org.apache.hadoop.hbase.master.HMaster: .META. assigned=1, rit=false, location=vm8,60020,1452760452737

2016-01-14 16:34:20,154 DEBUG org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation: Looked up root region location, connection=org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation@4c627d22; serverName=vm7,60020,1452760452808

2016-01-14 16:34:20,156 DEBUG org.apache.hadoop.hbase.client.ClientScanner: Creating scanner over -ROOT- starting at key ''

2016-01-14 16:34:20,156 DEBUG org.apache.hadoop.hbase.client.ClientScanner: Advancing internal scanner to startKey at ''

2016-01-14 16:34:20,159 DEBUG org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation: Looked up root region location, connection=org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation@4c627d22; serverName=vm7,60020,1452760452808

2016-01-14 16:34:20,166 DEBUG org.apache.hadoop.hbase.client.ClientScanner: Finished with scanning at {NAME => '-ROOT-,,0', STARTKEY => '', ENDKEY => '', ENCODED => 70236052,}

2016-01-14 16:34:20,166 INFO org.apache.hadoop.hbase.catalog.MetaMigrationRemovingHTD: Meta version=0; migrated=true

2016-01-14 16:34:20,166 INFO org.apache.hadoop.hbase.catalog.MetaMigrationRemovingHTD: ROOT/Meta already up-to date with new HRI.

2016-01-14 16:34:20,169 DEBUG org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation: Looked up root region location, connection=org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation@4c627d22; serverName=vm7,60020,1452760452808

2016-01-14 16:34:20,176 DEBUG org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation: Cached location for .META.,,1.1028785192 is vm8:60020

2016-01-14 16:34:20,177 DEBUG org.apache.hadoop.hbase.client.ClientScanner: Creating scanner over .META. starting at key ''

2016-01-14 16:34:20,177 DEBUG org.apache.hadoop.hbase.client.ClientScanner: Advancing internal scanner to startKey at ''

2016-01-14 16:34:20,210 DEBUG org.apache.hadoop.hbase.client.ClientScanner: Finished with scanning at {NAME => '.META.,,1', STARTKEY => '', ENDKEY => '', ENCODED => 1028785192,}

2016-01-14 16:34:20,212 INFO org.apache.hadoop.hbase.master.AssignmentManager: Clean cluster startup. Assigning userregions

2016-01-14 16:34:20,212 DEBUG org.apache.hadoop.hbase.zookeeper.ZKAssign: master:60000-0x1523f403d6e0003 Deleting any existing unassigned nodes

2016-01-14 16:34:20,215 DEBUG org.apache.hadoop.hbase.client.ClientScanner: Creating scanner over .META. starting at key ''

2016-01-14 16:34:20,215 DEBUG org.apache.hadoop.hbase.client.ClientScanner: Advancing internal scanner to startKey at ''

2016-01-14 16:34:20,218 DEBUG org.apache.hadoop.hbase.client.ClientScanner: Finished with scanning at {NAME => '.META.,,1', STARTKEY => '', ENDKEY => '', ENCODED => 1028785192,}

2016-01-14 16:34:20,221 DEBUG org.apache.hadoop.hbase.client.ClientScanner: Creating scanner over .META. starting at key ''

2016-01-14 16:34:20,221 DEBUG org.apache.hadoop.hbase.client.ClientScanner: Advancing internal scanner to startKey at ''

2016-01-14 16:34:20,224 DEBUG org.apache.hadoop.hbase.client.ClientScanner: Finished with scanning at {NAME => '.META.,,1', STARTKEY => '', ENDKEY => '', ENCODED => 1028785192,}

2016-01-14 16:34:20,239 INFO org.apache.hadoop.hbase.master.HMaster: Registered HMaster MXBean

2016-01-14 16:34:20,239 INFO org.apache.hadoop.hbase.master.HMaster: Master has completed initialization

2016-01-14 16:34:20,240 DEBUG org.apache.hadoop.hbase.client.MetaScanner: Scanning .META. starting at row= for max=2147483647 rows using org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation@4c627d22

2016-01-14 16:34:20,249 DEBUG org.apache.hadoop.hbase.master.CatalogJanitor: Scanned 0 catalog row(s) and gc'd 0 unreferenced parent region(s)
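The line that matters in this excerpt is "Master has completed initialization". The earlier "Region is not online: .META.,,1" messages stop once .META. is assigned to vm8. A minimal sketch for checking this without reading the log by eye, assuming the log path from the tail command above; the function name `master_initialized` is hypothetical and not part of HBase:

```shell
#!/bin/sh
# Hypothetical helper (not an HBase tool): succeeds when the given master
# log contains the initialization marker seen in the excerpt above.
master_initialized() {
    log_file="$1"
    grep -q "Master has completed initialization" "$log_file"
}

# Example usage (path assumed from the tail command above):
# master_initialized ../logs/hbase-hadoop-master-vm13.log && echo "master is up"
```

The marker string is copied from the log output shown here, so it may differ in other HBase versions.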

Run the jps command

[hadoop@vm13 conf]$ jps
13676 Jps
12927 QuorumPeerMain
13513 HMaster

[root@vm7 ~]# jps | grep -iv jps
1022 HRegionServer
944 QuorumPeerMain

[hadoop@vm8 conf]$ jps | grep -iv jps
7043 HRegionServer
6955 QuorumPeerMain
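The jps output above shows what to expect on each node: HMaster and QuorumPeerMain on vm13, HRegionServer and QuorumPeerMain on vm7 and vm8. The following is a rough sketch of how that check could be scripted; the helper name `has_daemon` is an assumption made for illustration, not an existing tool:

```shell
#!/bin/sh
# Hypothetical helper: reads `jps` output on stdin and succeeds if the
# given Java process name appears as the second field of any line.
has_daemon() {
    awk -v name="$1" '$2 == name { found = 1 } END { exit !found }'
}

# Example usage on the master node:
# jps | has_daemon HMaster && jps | has_daemon QuorumPeerMain && echo "master node OK"
# Example usage on a regionserver node:
# jps | has_daemon HRegionServer && echo "regionserver OK"
```

Matching on the second field avoids false positives from PIDs or from the Jps process itself.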

7. Connect to the server

[hadoop@vm13 conf]$ zkCli.sh -server localhost:2181
Connecting to localhost:2181
Welcome to ZooKeeper!
JLine support is enabled

WATCHER::

WatchedEvent state:SyncConnected type:None path:null
[zk: localhost:2181(CONNECTED) 2] h
ZooKeeper -server host:port cmd args
connect host:port
get path [watch]
ls path [watch]
set path data [version]
rmr path
delquota [-n|-b] path
quit
printwatches on|off
create [-s] [-e] path data acl
stat path [watch]
close
ls2 path [watch]
history
listquota path
setAcl path acl
getAcl path
sync path
redo cmdno
addauth scheme auth
delete path [version]
setquota -n|-b val path
[zk: localhost:2181(CONNECTED) 0] ls /
[hbase, zookeeper]

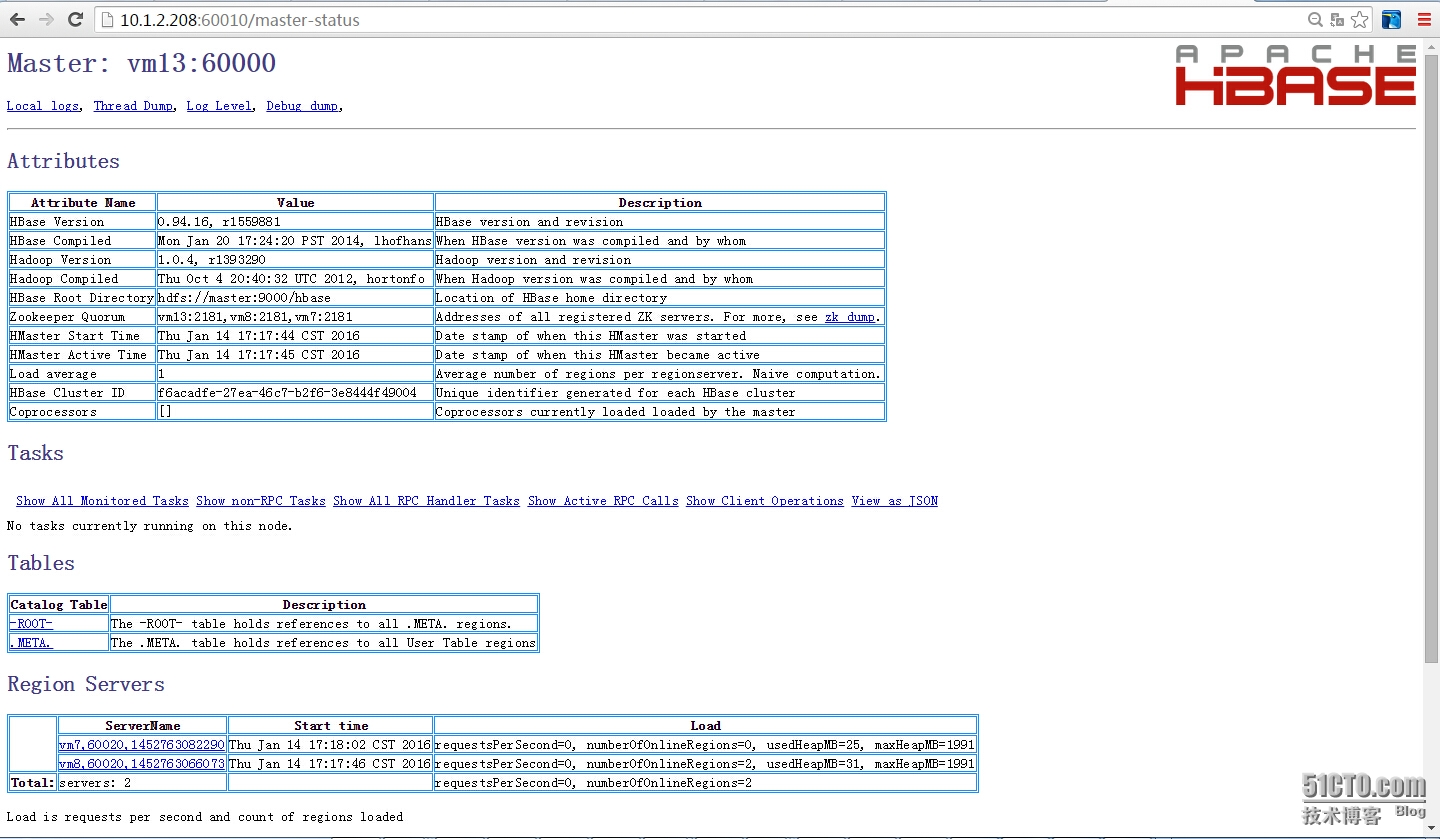
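The `ls /` result `[hbase, zookeeper]` confirms that HBase has registered its `/hbase` znode. The help text above lists the form `ZooKeeper -server host:port cmd args`, which suggests the same listing can be run non-interactively. The sketch below rests on that assumption, and `znode_listed` is a hypothetical helper that only parses the bracketed child list:

```shell
#!/bin/sh
# Hypothetical helper: reads zkCli output on stdin and succeeds if the
# bracketed child list (e.g. "[hbase, zookeeper]") contains the given name.
znode_listed() {
    grep '^\[.*\]$' |        # keep only lines that are a bracketed list
        sed 's/[][ ]//g' |   # drop the brackets and spaces
        tr ',' '\n' |        # one child name per line
        grep -qxF "$1"       # exact match on the requested name
}

# Example usage (assumes zkCli.sh accepts a command after -server host:port):
# zkCli.sh -server localhost:2181 ls / 2>/dev/null | znode_listed hbase && echo "/hbase exists"
```

The interactive prompt line is ignored because it does not end with a closing bracket.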

8.HBase Master web