MapReduce XML Processing: Custom OutputFormat and Custom RecordWriter

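This post follows directly from the previous one, "MapReduce XML Processing: Custom InputFormat and Custom RecordReader". The previous post covered InputFormat and RecordReader; this one covers customizing OutputFormat and RecordWriter.

The requirements for this test are as follows.

Input data: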

    <configuration>
      <property>
        <name>hadoop.kms.authentication.type</name>
        <value>simple</value>
      </property>
      <property>
        <name>hadoop.kms.authentication.kerberos.keytab</name>
        <value>${user.home}/kms.keytab</value>
      </property>
      <property>
        <name>hadoop.kms.authentication.kerberos.principal</name>
        <value>HTTP/localhost</value>
      </property>
      <property>
        <name>hadoop.kms.authentication.kerberos.name.rules</name>
        <value>DEFAULT</value>
      </property>
    </configuration>

Expected result: reverse the content of every value element in the XML file above, then write the records out in XML tag format, as follows:

    <name>hadoop.kms.authentication.kerberos.keytab</name>
    <value>batyek.smk/}emoh.resu{$</value>
    <name>hadoop.kms.authentication.kerberos.name.rules</name>
    <value>TLUAFED</value>
    <name>hadoop.kms.authentication.kerberos.principal</name>
    <value>tsohlacol/PTTH</value>
    <name>hadoop.kms.authentication.type</name>
    <value>elpmis</value>
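
The transformation described above can be sketched in plain Java, without Hadoop, as a quick way to check the expected output by hand. This is only an illustration under stated assumptions: the class and method names (`ReverseValuesSketch`, `reverse`, `format`, `render`) are hypothetical and are not part of the job in this post, and the `TreeMap` merely stands in for the sorted order in which keys reach the reducer.

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical helper, not part of the MapReduce job in this post.
final class ReverseValuesSketch {

    /** Reverses the characters of a value, as the reducer in this post does. */
    static String reverse(String value) {
        return new StringBuilder(value).reverse().toString();
    }

    /** Lays out one record as a name line followed by a value line. */
    static String format(String name, String value) {
        return "<name>" + name + "</name>\n"
             + "<value>" + reverse(value) + "</value>\n";
    }

    /** Renders all records in key order (assumption: stands in for the shuffle sort). */
    static String render(Map<String, String> properties) {
        StringBuilder out = new StringBuilder();
        for (Map.Entry<String, String> e : new TreeMap<>(properties).entrySet()) {
            out.append(format(e.getKey(), e.getValue()));
        }
        return out.toString();
    }

    public static void main(String[] args) {
        Map<String, String> props = new TreeMap<>();
        props.put("hadoop.kms.authentication.type", "simple");
        props.put("hadoop.kms.authentication.kerberos.keytab", "${user.home}/kms.keytab");
        System.out.print(render(props));
    }
}
```

Running `main` prints the keytab record before the type record, each value reversed, which matches the ordering of the expected output listed above.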

Because there is a lot of code, the InputFormat, RecordReader and mapper code from the previous post is not repeated here. To run the complete test, take that code from the previous post, "MapReduce XML Processing: Custom InputFormat and Custom RecordReader".

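Customization steps:

Extend the OutputFormat and RecordWriter classes, or one of their subclasses. Their roles are:

OutputFormat validates the properties of the data sink; RecordWriter writes the reduce output to the data sink.

First, extend the OutputFormat class. It declares the following: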

1.public abstract class OutputFormat<K, V>

K and V are the key and value types of the reduce output (the records handed to the OutputFormat).

2.public abstract RecordWriter<K, V> getRecordWriter(TaskAttemptContext context ) throws IOException, InterruptedException;

Creates a RecordWriter instance that writes the data to the destination.

3.public abstract void checkOutputSpecs(JobContext context ) throws IOException, InterruptedException;

Verifies that the output specification associated with the MapReduce job is valid.

4.public abstract OutputCommitter getOutputCommitter(TaskAttemptContext context ) throws IOException, InterruptedException;

Returns the associated OutputCommitter, which is responsible for committing the final output once the tasks have succeeded and the job completes.
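
To make the role of checkOutputSpecs more concrete, here is a plain-Java analogue that runs without Hadoop. It is a sketch under assumptions, not Hadoop's implementation: `OutputSpecCheckSketch`, `accepts` and `demo` are hypothetical names, and `java.nio.file` stands in for Hadoop's FileSystem. FileOutputFormat, which the custom class in this post extends, is generally understood to reject a job whose output directory already exists, and that would explain why the driver at the end of this post deletes the output path before submitting the job.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;

// Hypothetical stand-in for an output-spec check; uses the local file system.
final class OutputSpecCheckSketch {

    /** Fails fast when the output location is unset or already present. */
    static void checkOutputSpecs(Path outputDir) throws IOException {
        if (outputDir == null) {
            throw new IOException("Output directory not set");
        }
        if (Files.exists(outputDir)) {
            throw new IOException("Output directory " + outputDir + " already exists");
        }
    }

    /** Convenience wrapper: true when the check passes. */
    static boolean accepts(Path outputDir) {
        try {
            checkOutputSpecs(outputDir);
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    /** True if an existing directory is rejected and a missing one is accepted. */
    static boolean demo() {
        try {
            Path existing = Files.createTempDirectory("xml-out");
            try {
                return !accepts(existing) && accepts(existing.resolve("not-created"));
            } finally {
                Files.delete(existing); // clean up the temporary directory
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```

Checking before any task runs means a misconfigured job fails at submission time rather than after the map phase has already done its work.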

Next, extend the RecordWriter class. It declares the following:

1.public abstract class RecordWriter<K, V>

K and V are the key and value types of the reduce output (the records passed to write).

2.public abstract void write(K key, V value ) throws IOException, InterruptedException;

Writes one logical key/value pair to the sink.

3.public abstract void close(TaskAttemptContext context ) throws IOException, InterruptedException;

Releases any resources associated with the target data sink.
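
The write/close contract above can be illustrated with a small plain-Java analogue that needs no Hadoop on the classpath. Everything here is an assumption made for illustration: `SketchRecordWriter` is a hypothetical stand-in for Hadoop's RecordWriter (its `close` drops the TaskAttemptContext parameter), and `LineRecordWriterSketch` writes simple `key=value` lines rather than the XML format built later in this post.

```java
import java.io.ByteArrayOutputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

/** Hypothetical stand-in for Hadoop's RecordWriter; not the real class. */
abstract class SketchRecordWriter<K, V> {
    abstract void write(K key, V value) throws IOException;
    abstract void close() throws IOException;
}

/** Writes each pair as one "key=value" line to a caller-supplied stream. */
final class LineRecordWriterSketch extends SketchRecordWriter<String, String> {
    private final DataOutputStream out;

    LineRecordWriterSketch(DataOutputStream out) {
        this.out = out;
    }

    @Override
    void write(String key, String value) throws IOException {
        out.write((key + "=" + value + "\n").getBytes(StandardCharsets.UTF_8));
    }

    @Override
    void close() throws IOException {
        out.close(); // release the underlying sink
    }

    /** Plays the framework's role: one writer, several write calls, one close. */
    static String demo() {
        try {
            ByteArrayOutputStream sink = new ByteArrayOutputStream();
            SketchRecordWriter<String, String> writer =
                    new LineRecordWriterSketch(new DataOutputStream(sink));
            writer.write("a", "1");
            writer.write("b", "2");
            writer.close();
            return new String(sink.toByteArray(), StandardCharsets.UTF_8);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.print(demo());
    }
}
```

Passing the stream in through the constructor keeps the writer unaware of where the bytes end up, which is the same split of responsibilities the post uses: the OutputFormat opens the stream, and the RecordWriter only formats and writes records.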

Custom OutputFormat: this class extends FileOutputFormat, a subclass of OutputFormat.

    import java.io.DataOutputStream;
    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FSDataOutputStream;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.io.compress.CompressionCodec;
    import org.apache.hadoop.io.compress.GzipCodec;
    import org.apache.hadoop.mapreduce.RecordWriter;
    import org.apache.hadoop.mapreduce.TaskAttemptContext;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
    import org.apache.hadoop.util.ReflectionUtils;

    public class XMLOutputFormat extends FileOutputFormat<Text, Text> {

        @Override
        public RecordWriter<Text, Text> getRecordWriter(TaskAttemptContext job)
                throws IOException, InterruptedException {
            // Prepare to create the FSDataOutputStream: check whether output
            // compression is enabled and, if so, get the codec's file extension.
            Configuration conf = job.getConfiguration();
            boolean isCompressed = getCompressOutput(job);
            CompressionCodec codec = null;
            String extension = "";
            if (isCompressed) {
                Class<? extends CompressionCodec> codecClass =
                        getOutputCompressorClass(job, GzipCodec.class);
                codec = (CompressionCodec) ReflectionUtils.newInstance(codecClass, conf);
                extension = codec.getDefaultExtension();
            }
            Path file = getDefaultWorkFile(job, extension);
            FileSystem fs = file.getFileSystem(conf);

            // Create the RecordWriter and hand it the prepared output stream so
            // that it can write the custom-formatted key and value to the file.
            FSDataOutputStream fileOut = fs.create(file, false);
            if (!isCompressed) {
                return new XMLRecordWriter(fileOut);
            } else {
                // Wrap the file stream so that everything written is compressed.
                return new XMLRecordWriter(
                        new DataOutputStream(codec.createOutputStream(fileOut)));
            }
        }
    }

Custom RecordWriter:

    import java.io.DataOutputStream;
    import java.io.IOException;
    import java.nio.charset.StandardCharsets;

    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.RecordWriter;
    import org.apache.hadoop.mapreduce.TaskAttemptContext;

    public class XMLRecordWriter extends RecordWriter<Text, Text> {

        // Line separator, encoded as UTF-8.
        private static final byte[] newline = "\n".getBytes(StandardCharsets.UTF_8);

        protected DataOutputStream out;
        private Text key_ = new Text();
        private Text value_ = new Text();

        public XMLRecordWriter(DataOutputStream out) {
            this.out = out;
        }

        @Override
        public synchronized void close(TaskAttemptContext context)
                throws IOException, InterruptedException {
            out.close();
        }

        /**
         * Core method of the custom output. It is invoked for every
         * context.write call in the reducer and formats the given key and value.
         */
        @Override
        public synchronized void write(Text key, Text value)
                throws IOException, InterruptedException {
            boolean nullKey = key == null;
            boolean nullValue = value == null;

            // Write nothing when both the key and the value are null.
            if (nullKey && nullValue) {
                return;
            }

            // A non-null key is written as a <name> element.
            if (!nullKey) {
                // Text.set(String) encodes as UTF-8, independent of the platform charset.
                key_.set("<name>" + key.toString() + "</name>");
                writeObject(key_);
            }

            // Separate the key line from the value line when both are present.
            if (!(nullKey || nullValue)) {
                out.write(newline);
            }

            // A non-null value is written as a <value> element.
            if (!nullValue) {
                value_.set("<value>" + value.toString() + "</value>");
                writeObject(value_);
            }
            out.write(newline);
        }

        /**
         * Writes an object to the output stream (the file on HDFS).
         *
         * @param o the object to write
         * @throws IOException if writing to the stream fails
         */
        private void writeObject(Object o) throws IOException {
            if (o instanceof Text) {
                Text to = (Text) o;
                // Only the first getLength() bytes of the backing array are valid.
                out.write(to.getBytes(), 0, to.getLength());
            } else {
                out.write(o.toString().getBytes(StandardCharsets.UTF_8));
            }
        }
    }

Reduce phase:

    import java.io.IOException;

    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    public class XMLReducer extends Reducer<Text, Text, Text, Text> {

        private Text val_ = new Text();
        private StringBuilder sb = new StringBuilder();

        @Override
        protected void reduce(Text key, Iterable<Text> value, Context context)
                throws IOException, InterruptedException {
            for (Text val : value) {
                // The reversal could also be done in the RecordWriter instead.
                sb.append(val.toString());
                val_.set(sb.reverse().toString());
                context.write(key, val_);
                // Clear the buffer before handling the next value.
                sb.setLength(0);
            }
        }
    }

Driver (main function):

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class JobMain {

public static void main(String[] args) throws Exception{

Configuration configuration = new Configuration();

configuration.set("key.value.separator.in.input.line", " ");

configuration.set("xmlinput.start", "<property>");

configuration.set("xmlinput.end", "</property>");

Job job = new Job(configuration, "xmlread-job");

job.setJarByClass(JobMain.class);

job.setMapperClass(XMLMapper.class);

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(Text.class);

//设置输入格式处理类

job.setInputFormatClass(XMLInputFormat.class);

job.setNumReduceTasks(1);

job.setReducerClass(XMLReducer.class);

//设置输出格式处理类

job.setOutputFormatClass(XMLOutputFormat.class);

FileInputFormat.addInputPath(job, new Path(args[0]));

Path output = new Path(args[1]);

FileOutputFormat.setOutputPath(job, output);

output.getFileSystem(configuration).delete(output, true);

System.exit(job.waitForCompletion(true) ? 0: 1);

}

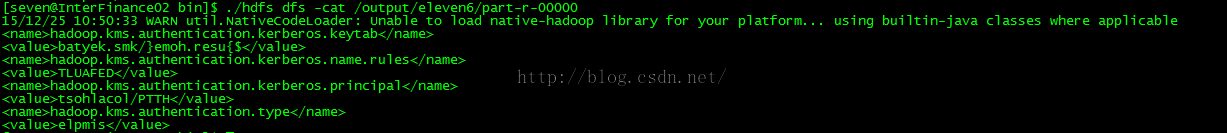

}运行结果:

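This example extends the previous post, "MapReduce XML Processing: Custom InputFormat and Custom RecordReader". The Mapper, InputFormat and RecordReader code is not shown here; to run the job and reproduce the result, take the map-side code from the previous post. The next post will cover how to implement joins in MapReduce.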