Testing process CPU affinity and vCPU pinning on Ubuntu

CPU isolation

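When the host boots, two logical CPUs are set aside for exclusive use by guests. Adding the isolcpus= parameter to the Linux kernel command line isolates CPUs: after boot, ordinary processes are by default not scheduled onto the isolated CPUs. The test below isolates cpu2 and cpu3 on a four-core Ubuntu system.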

    root@map-VirtualBox:~# grep "processor" /proc/cpuinfo
    processor : 0
    processor : 1
    processor : 2
    processor : 3
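The grep above prints one "processor" line per logical CPU. The count can also be printed directly. This is a small sketch: nproc comes from GNU coreutils, and the second call is there only to show how it differs from the first.

```shell
# Number of logical CPUs listed in /proc/cpuinfo (4 on the machine above).
grep -c '^processor' /proc/cpuinfo

# nproc --all prints the number of installed processors; plain nproc prints
# how many the calling process may use, which can be fewer (for example when
# its CPU affinity mask is restricted).
nproc --all
nproc
```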

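Ubuntu's boot entries are stored in /boot/grub/grub.cfg. The kernel command line can be edited at the GRUB menu during boot (the change lasts for that boot only), or changed permanently from within the system through /etc/default/grub.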

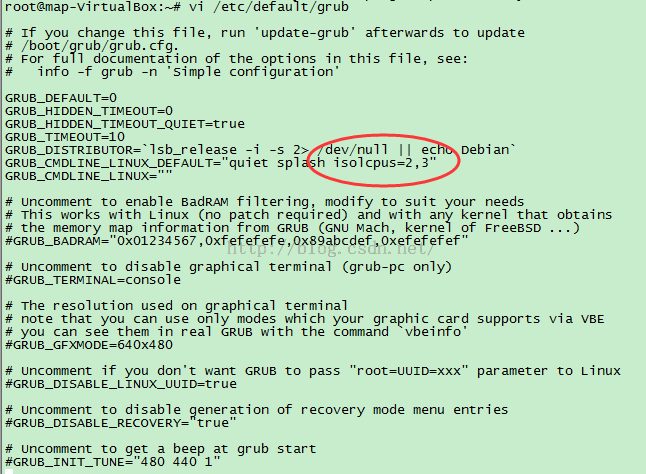

Edit /etc/default/grub and append isolcpus=2,3 after quiet splash in the GRUB_CMDLINE_LINUX_DEFAULT line.

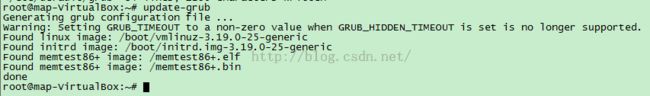
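The same edit can also be scripted. The sketch below makes several assumptions: GNU sed (for -i), and a line in the usual double-quoted form GRUB_CMDLINE_LINUX_DEFAULT="quiet splash". The name add_isolcpus is a hypothetical helper, not a system tool.

```shell
# Hypothetical helper: append isolcpus=<cpus> to GRUB_CMDLINE_LINUX_DEFAULT
# in a grub defaults file. Assumes GNU sed (-i) and a double-quoted value.
# Not idempotent: running it twice appends the parameter twice.
add_isolcpus() {
    file=$1   # e.g. /etc/default/grub
    cpus=$2   # e.g. 2,3
    # Capture the line up to (not including) its closing quote, then write it
    # back followed by " isolcpus=<cpus>" and the closing quote.
    sed -i "s/^\(GRUB_CMDLINE_LINUX_DEFAULT=\"[^\"]*\)\"/\1 isolcpus=${cpus}\"/" "$file"
}
```

As root, a possible sequence is cp /etc/default/grub /etc/default/grub.bak, then add_isolcpus /etc/default/grub 2,3, then update-grub. Keeping a backup makes it easy to undo a bad edit before regenerating grub.cfg.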

After saving the file, return to the terminal and run update-grub as root. It regenerates the bootloader configuration (/boot/grub/grub.cfg) from the file just edited (/etc/default/grub).

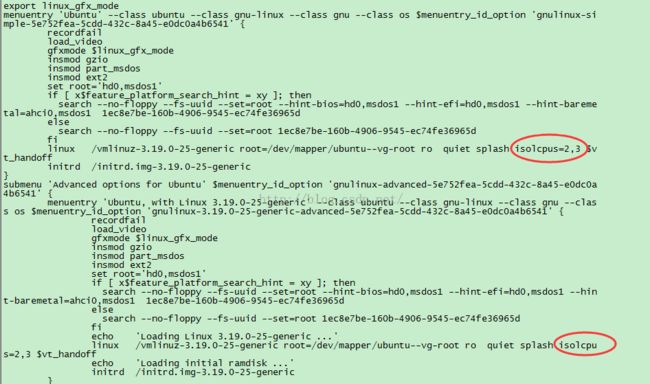

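update-grub lists each boot entry it generates and prints "done" when it has finished. Checking /boot/grub/grub.cfg confirms that the parameter has been added.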

Reboot the system.
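Before measuring anything, it helps to confirm that the rebooted kernel actually received the parameter. This is a minimal sketch. isolcpus_of is a hypothetical helper name, and /sys/devices/system/cpu/isolated is assumed to exist only on newer kernels, so its absence is not treated as an error.

```shell
# Print the isolcpus= value from a kernel command line.
# With no argument, the running kernel's command line (/proc/cmdline) is used.
isolcpus_of() {
    cmdline=${1:-$(cat /proc/cmdline)}
    for word in $cmdline; do          # split the command line on whitespace
        case $word in
            isolcpus=*) echo "${word#isolcpus=}"; return 0 ;;
        esac
    done
    return 1                          # parameter not present
}

# After the reboot above, this is expected to print 2,3.
isolcpus_of || echo "isolcpus is not set on this boot"
# Newer kernels also export the isolated set here (e.g. "2-3"), if present.
cat /sys/devices/system/cpu/isolated 2>/dev/null
```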

    root@map-VirtualBox:~# ps -eLo psr | grep 0 | wc -l
    280
    root@map-VirtualBox:~# ps -eLo psr | grep 1 | wc -l
    239
    root@map-VirtualBox:~# ps -eLo psr | grep 2 | wc -l
    5
    root@map-VirtualBox:~# ps -eLo psr | grep 3 | wc -l
    5
    root@map-VirtualBox:~# ps -eLo psr | grep 4 | wc -l
    0

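The output shows 280 threads running on cpu0 and 239 on cpu1, but only 5 each on cpu2 and cpu3. The machine has only four cores, so cpu4 does not exist and its count is 0. Counting with grep N works here only because every CPU number is a single digit. On a machine with cpu10 or higher, grep 0 would also match 10, 20 and so on.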
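A tally that keys on the whole PSR value avoids the substring problem and covers every CPU in one pass. This is a sketch; count_per_cpu is a hypothetical helper name.

```shell
# Tally threads per CPU in one pass. ps prints PSR right-aligned; awk's $1
# drops the padding and compares whole values, so "2" is never counted as "12".
count_per_cpu() {
    awk '{ n[$1]++ } END { for (c in n) print c, n[c] }' | sort -n
}

ps -eLo psr --no-headers | count_per_cpu
```

On the machine above, this should print one line per CPU (0 to 3), matching the counts from the four separate grep commands.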

If cpu2 and cpu3 are isolated, why do they still have threads?

List the threads running on cpu2 and cpu3:

    root@map-VirtualBox:~# ps -eLo ruser,pid,ppid,lwp,psr,args | awk '{if($5==2)print $0}'
    root 20 2 20 2 [watchdog/2]
    root 21 2 21 2 [migration/2]
    root 22 2 22 2 [ksoftirqd/2]
    root 23 2 23 2 [kworker/2:0]
    root 24 2 24 2 [kworker/2:0H]
    root@map-VirtualBox:~# ps -eLo ruser,pid,ppid,lwp,psr,args | awk '{if($5==3)print $0}'
    root 27 2 27 3 [watchdog/3]
    root 28 2 28 3 [migration/3]
    root 29 2 29 3 [ksoftirqd/3]
    root 30 2 30 3 [kworker/3:0]
    root 31 2 31 3 [kworker/3:0H]
    root@map-VirtualBox:~#

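The output lists the same threads on both cpu2 and cpu3:

- watchdog: the per-CPU lockup detector.
- migration: moves tasks between CPUs.
- ksoftirqd: handles softirqs (software interrupts) for that CPU.
- Two kworker threads: execute workqueue items. The H suffix marks the high-priority pool.

All of them have PPID 2, which means they were created by kthreadd. They are per-CPU kernel threads bound to their own CPU, and isolcpus does not remove them. No ordinary processes run on cpu2 or cpu3, which shows that the isolation is in effect.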

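Here is a brief explanation of the commands and options used above. ps reports the status of processes on the system:

- -e selects all processes.
- -L also lists threads (LWP, light-weight process).
- -o selects a user-defined output format with these columns:
  - psr: the processor the thread is currently assigned to.
  - lwp: the thread ID.
  - ruser: the real user running the process.
  - pid: the process ID.
  - ppid: the parent process ID.
  - args: the command with its arguments.

Piping ps into awk and testing the fifth column ($5, i.e. psr) prints only the threads running on cpu2 or on cpu3.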
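The visual check above (every thread on the isolated CPUs has PPID 2) can be turned into a filter. This is a heuristic sketch. It assumes kernel threads are exactly the children of kthreadd (PID 2). kthreadd itself and init have PPID 0, so either would be printed if it happened to run on the CPU being checked. non_kthread_rows_on_cpu is a hypothetical helper name.

```shell
# Read "ruser pid ppid lwp psr args" rows (the ps format used above, without
# the header) and print the rows that run on CPU $1 but whose parent is not
# kthreadd (PID 2). Empty output means only per-CPU kernel threads are there.
non_kthread_rows_on_cpu() {
    awk -v cpu="$1" '$5 == cpu && $3 != 2'
}

ps -eLo ruser,pid,ppid,lwp,psr,args --no-headers | non_kthread_rows_on_cpu 2
ps -eLo ruser,pid,ppid,lwp,psr,args --no-headers | non_kthread_rows_on_cpu 3
```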

Pinning a KVM virtual machine to CPUs

I tested this in a devstack-deployed environment by starting a virtual machine:

    stack@map-VirtualBox:~/devstack$ nova list
    +--------------------------------------+------+---------+------------+-------------+------------------+
    | ID                                   | Name | Status  | Task State | Power State | Networks         |
    +--------------------------------------+------+---------+------------+-------------+------------------+
    | 5e4aaac7-ab8d-45d0-92a1-1fd39aaa432e | b    | SHUTOFF | -          | Shutdown    | private=10.0.0.2 |
    +--------------------------------------+------+---------+------------+-------------+------------------+
    stack@map-VirtualBox:~/devstack$ nova start b
    Request to start server b has been accepted.
    stack@map-VirtualBox:~/devstack$ nova list
    +--------------------------------------+------+--------+------------+-------------+------------------+
    | ID                                   | Name | Status | Task State | Power State | Networks         |
    +--------------------------------------+------+--------+------------+-------------+------------------+
    | 5e4aaac7-ab8d-45d0-92a1-1fd39aaa432e | b    | ACTIVE | -          | Running     | private=10.0.0.2 |
    +--------------------------------------+------+--------+------------+-------------+------------------+
    stack@map-VirtualBox:~/devstack$ virsh list --all
     Id    Name                           State
    ----------------------------------------------------
     2     instance-00000003              running
    stack@map-VirtualBox:~/devstack$ ps -ef|grep instance-00000003
    libvirt+ 5006 1 85 15:06 ? 00:00:17 /usr/bin/qemu-system-x86_64 -name instance-00000003 -S -machine pc-i440fx-trusty,accel=tcg,usb=off -m 512 -realtime mlock=off -smp 1,sockets=1,cores=1,threads=1 -uuid 5e4aaac7-ab8d-45d0-92a1-1fd39aaa432e -smbios type=1,manufacturer=OpenStack Foundation,product=OpenStack Nova,version=13.0.0,serial=c0c968fd-2739-4ede-91ab-0f8aace8855c,uuid=5e4aaac7-ab8d-45d0-92a1-1fd39aaa432e,family=Virtual Machine -no-user-config -nodefaults -chardev socket,id=charmonitor,path=/var/lib/libvirt/qemu/instance-00000003.monitor,server,nowait -mon chardev=charmonitor,id=monitor,mode=control -rtc base=utc -no-shutdown -boot strict=on -kernel /opt/stack/data/nova/instances/5e4aaac7-ab8d-45d0-92a1-1fd39aaa432e/kernel -initrd /opt/stack/data/nova/instances/5e4aaac7-ab8d-45d0-92a1-1fd39aaa432e/ramdisk -append root=/dev/vda console=tty0 console=ttyS0 no_timer_check -device piix3-usb-uhci,id=usb,bus=pci.0,addr=0x1.0x2 -drive file=/opt/stack/data/nova/instances/5e4aaac7-ab8d-45d0-92a1-1fd39aaa432e/disk,if=none,id=drive-virtio-disk0,format=qcow2,cache=none -device virtio-blk-pci,scsi=off,bus=pci.0,addr=0x4,drive=drive-virtio-disk0,id=virtio-disk0,bootindex=1 -drive file=/opt/stack/data/nova/instances/5e4aaac7-ab8d-45d0-92a1-1fd39aaa432e/disk.config,if=none,id=drive-ide0-1-1,readonly=on,format=raw,cache=none -device ide-cd,bus=ide.1,unit=1,drive=drive-ide0-1-1,id=ide0-1-1 -netdev tap,fd=24,id=hostnet0 -device virtio-net-pci,netdev=hostnet0,id=net0,mac=fa:16:3e:c8:98:d8,bus=pci.0,addr=0x3 -chardev file,id=charserial0,path=/opt/stack/data/nova/instances/5e4aaac7-ab8d-45d0-92a1-1fd39aaa432e/console.log -device isa-serial,chardev=charserial0,id=serial0 -chardev pty,id=charserial1 -device isa-serial,chardev=charserial1,id=serial1 -vnc 127.0.0.1:0 -k en-us -device cirrus-vga,id=video0,bus=pci.0,addr=0x2 -device virtio-balloon-pci,id=balloon0,bus=pci.0,addr=0x5
    stack 5058 4280 0 15:06 pts/25 00:00:00 grep --color=auto instance-00000003
    stack@map-VirtualBox:~/devstack$

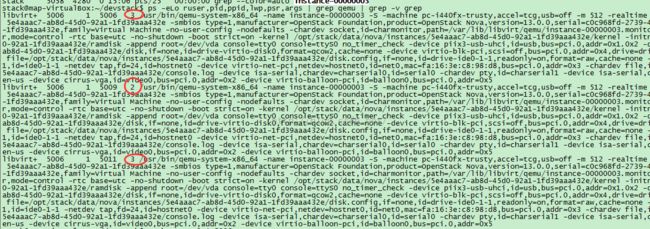

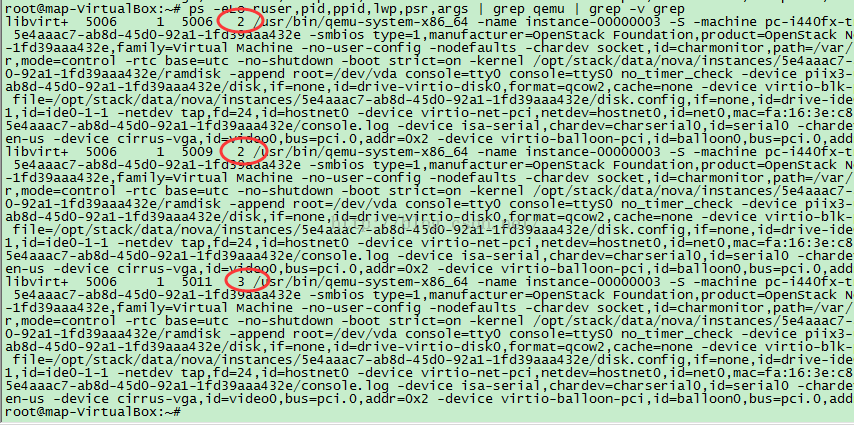

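To view the QEMU threads, including the vCPU thread, run ps -eLo ruser,pid,ppid,lwp,psr,args | grep qemu | grep -v grep

Run it several times and the value in the fifth column (psr) keeps changing. The scheduler may run each thread on any CPU its affinity mask allows, and it moves the threads between CPUs over time.

The QEMU command line above contains accel=tcg. Presumably this is because the host is itself a VirtualBox guest without hardware virtualization. As a result, QEMU emulates the guest in software (TCG) rather than using KVM. Pinning QEMU's threads with taskset works the same way in either case.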
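Instead of rerunning ps by hand, the CPU each thread last ran on can be sampled from /proc. This is a sketch. It relies on field 39 ("processor") of /proc/<pid>/task/<tid>/stat, which is the value ps reports as PSR. last_cpus and sample_last_cpus are hypothetical helper names.

```shell
# Print "<tid> <cpu>" for every thread of PID $1, where <cpu> is the CPU the
# thread last ran on (field 39 of its stat file).
last_cpus() {
    for task in /proc/"$1"/task/*; do
        # Strip "pid (comm) " first, since comm may contain spaces; the
        # remaining fields start at field 3, so field 39 becomes $37.
        cpu=$(sed 's/^.*) //' "$task/stat" | awk '{ print $37 }')
        printf '%s %s\n' "${task##*/}" "$cpu"
    done
}

# Take $2 samples (default 5), one second apart; "---" separates samples.
sample_last_cpus() {
    i=0
    while [ "$i" -lt "${2:-5}" ]; do
        last_cpus "$1"
        printf '%s\n' '---'
        i=$((i + 1))
        sleep 1
    done
}
```

For example, sample_last_cpus 5006 5 shows the QEMU threads hopping between CPUs before pinning. After pinning, the pinned threads stay on their CPU.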

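    stack@map-VirtualBox:~/devstack$ su -
    Password:
    root@map-VirtualBox:~# taskset -p 0x4 5006
    pid 5006's current affinity mask: f
    pid 5006's new affinity mask: 4
    root@map-VirtualBox:~# taskset -p 0x4 5009
    pid 5009's current affinity mask: f
    pid 5009's new affinity mask: 4
    root@map-VirtualBox:~# taskset -p 0x4 5011
    pid 5011's current affinity mask: f
    pid 5011's new affinity mask: 4
    root@map-VirtualBox:~# taskset -p 0x8 5011
    pid 5011's current affinity mask: 4
    pid 5011's new affinity mask: 8
    root@map-VirtualBox:~#

The masks are CPU bitmaps:

- f (binary 1111) allows cpu0 to cpu3.
- 0x4 (0100) allows only cpu2.
- 0x8 (1000) allows only cpu3.

The four commands do the following:

1. Pin PID 5006, the QEMU process that represents the whole virtual machine, to cpu2.
2. Pin thread 5009, presumably another thread of the same QEMU process, to cpu2.
3. Pin thread 5011, the QEMU thread of the virtual machine's first vCPU, to cpu2. With -smp 1 it is the only vCPU.
4. Move the same vCPU thread 5011 to cpu3.

taskset -p changes the affinity only of the thread whose ID it is given. For PID 5006, that is the QEMU main thread alone, not every thread of the process. taskset -a -p 0x4 5006 would apply the mask to all of the process's existing threads.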
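Hex masks are easy to get wrong by hand. The sketch below builds one from CPU numbers, setting bit N for CPU N. cpus_to_mask is a hypothetical helper name.

```shell
# Build a taskset-style hex affinity mask: bit N set means CPU N is allowed.
cpus_to_mask() {
    mask=0
    for cpu in "$@"; do
        mask=$(( mask | (1 << cpu) ))
    done
    printf '0x%x\n' "$mask"
}
```

For example, taskset -p "$(cpus_to_mask 2)" 5006 is the same as taskset -p 0x4 5006 above. taskset can also take a CPU list instead of a mask with -c, as in taskset -cp 3 5011.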

Check the QEMU threads again with ps -eLo ruser,pid,ppid,lwp,psr,args | grep qemu | grep -v grep

Run it several times: the value in the fifth column now stays fixed for the pinned threads.

The pinning succeeded!
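Besides watching psr, the allowed-CPU set of every thread can be read directly from the Cpus_allowed_list field of /proc/<pid>/task/<tid>/status. This is a sketch. show_thread_affinity is a hypothetical helper name.

```shell
# Print "<tid> <allowed CPUs>" for every thread of PID $1.
show_thread_affinity() {
    for task in /proc/"$1"/task/*; do
        allowed=$(awk '/^Cpus_allowed_list:/ { print $2 }' "$task/status")
        printf '%s %s\n' "${task##*/}" "$allowed"
    done
}
```

This assumes the thread IDs from the session above are still valid. In that case, show_thread_affinity 5006 should report 2 for threads 5006 and 5009 and 3 for thread 5011. Any QEMU thread that was not touched should still report 0-3.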