[OpenStack] OpenStack Notes

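Notice:

Reposting this blog is welcome, but please keep the original author's information!

Sina Weibo: @孔令贤HW

Blog: http://blog.csdn.net/lynn_kong

This content comes from my own study, research and summaries; any resemblance to other work is purely my honor!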

1. General

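Dynamically import a class from its dotted path (module path plus class name):

import sys

def import_class(import_str):
    # e.g. import_class('collections.OrderedDict')
    mod_str, _sep, class_str = import_str.rpartition('.')
    try:
        __import__(mod_str)
        return getattr(sys.modules[mod_str], class_str)
    except (ImportError, ValueError, AttributeError) as exc:
        raise ImportError('Class %s cannot be found (%s)' % (class_str, exc))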

Related components/projects:

yagi: consumes notifications from AMQP queues, provides an API, and offers subscriptions via PubSubHubbub

Billing: Dough https://github.com/lzyeval/dough

trystack.org billing https://github.com/trystack/dash_billing

nova-billing https://github.com/griddynamics/nova-billing

Host monitoring: Nagios, Zabbix and Munin

Climate (a component for resource reservations and capacity leasing): https://launchpad.net/climate

XLcloud: HPC on the cloud, http://xlcloud.org/bin/view/Main/

Savanna: this project aims to let users easily provision and manage Hadoop clusters on OpenStack, https://wiki.openstack.org/wiki/Savanna

Ironic: there will be a driver in Nova that talks to the Ironic API for bare metal. Blueprints and wiki pages about bare metal:

https://blueprints.launchpad.net/nova/+spec/baremetal-force-node (for Havana)

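Internationalization:

/nova/openstack/common/gettextutils.py

gzip compression of requests and responses can be used to improve performance:

| Header type | Name | Value |
|---|---|---|
| HTTP/1.1 request | Accept-Encoding | gzip |
| HTTP/1.1 response | Content-Encoding | gzip |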
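A minimal client-side sketch using only Python's standard library: the request advertises Accept-Encoding: gzip, and the body is decompressed only when the response carries Content-Encoding: gzip. The helper names build_request and decode_body are hypothetical, and nothing is sent over the network here.

```python
import gzip
import urllib.request

def build_request(url, token):
    # Hypothetical helper: ask the server to gzip its response body.
    return urllib.request.Request(url, headers={
        "Accept-Encoding": "gzip",
        "X-Auth-Token": token,
    })

def decode_body(headers, body):
    # Decompress only if the server actually answered with gzip.
    if headers.get("Content-Encoding", "").lower() == "gzip":
        return gzip.decompress(body)
    return body
```

With urllib, pass the response's headers (resp.headers) and raw bytes (resp.read()) to decode_body; servers are free to ignore Accept-Encoding, which is why the check on Content-Encoding matters.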

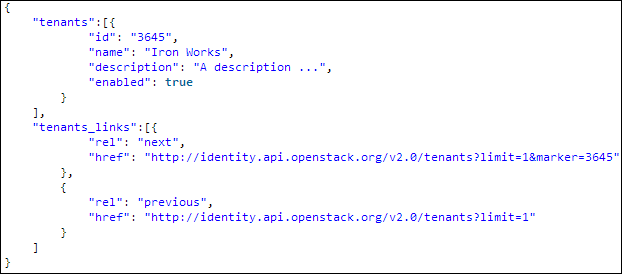

The API version can be selected either through the media type in the Accept header or through the URL path:

1. GET /tenants HTTP/1.1
Host: identity.api.openstack.org
Accept: application/vnd.openstack.identity+xml; version=1.1
X-Auth-Token: eaaafd18-0fed-4b3a-81b4-663c99ec1cbb

2. GET /v1.1/tenants HTTP/1.1
Host: identity.api.openstack.org
Accept: application/xml
X-Auth-Token: eaaafd18-0fed-4b3a-81b4-663c99ec1cbb
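The two requests above carry the same version in different places. Below is a hypothetical helper that shows how a server might extract it, checking the path first and then the Accept media-type parameter. It is an illustrative sketch, not code from Keystone.

```python
import re

def requested_version(path, accept):
    # Style 2: version embedded in the URL path, e.g. "/v1.1/tenants".
    match = re.match(r"^/v(\d+(?:\.\d+)*)/", path)
    if match:
        return match.group(1)
    # Style 1: version as a media-type parameter, e.g. "...+xml; version=1.1".
    for param in accept.split(";")[1:]:
        key, _, value = param.strip().partition("=")
        if key == "version":
            return value
    # Nothing requested: a real server would fall back to its default version.
    return None
```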

A plain GET on http://IP:PORT lists the supported API versions.

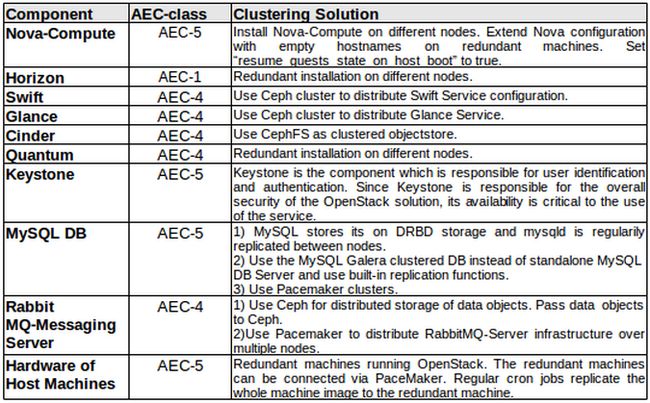

1.1 HA

1. Build OpenStack on top of Corosync and use the Pacemaker cluster resource manager to replicate OpenStack services across multiple redundant nodes.

2. For storage clustering, DRBD block storage can be used. DRBD is software that replicates block storage (hard disks etc.) across multiple nodes.

3. Object storage services can be clustered with Ceph. Ceph is a clustered storage solution that can cluster not only block devices but also data objects and file systems. Through its Swift-compatible RADOS Gateway, Ceph can also take the place of the Swift object store as a highly available backend.

4. OpenStack uses MySQL as the underlying database for its services. Instead of a standalone MySQL server, a MySQL Galera cluster can be used to make the database highly available too.

1.2 Database backup and restore

#!/bin/bash
# Fail the pipeline if mysqldump fails, not only if gzip does
set -o pipefail
backup_dir="/var/lib/backups/mysql"
filename="${backup_dir}/mysql-$(hostname)-$(date +%Y%m%d).sql.gz"
mkdir -p "$backup_dir"
# Dump all MySQL databases and compress the dump
/usr/bin/mysqldump --opt --all-databases | gzip > "$filename"
# Delete backups older than 7 days
find "$backup_dir" -type f -mtime +7 -delete
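The retention rule in the script (delete backups older than 7 days) can be sketched in Python as follows. expired_backups is a hypothetical helper. It only lists the files it would delete, so the cleanup is easy to check before running it for real.

```python
import os
import time

def expired_backups(backup_dir, max_age_days=7, now=None):
    # Files whose modification time is older than max_age_days.
    now = time.time() if now is None else now
    cutoff = now - max_age_days * 86400
    expired = []
    for name in sorted(os.listdir(backup_dir)):
        path = os.path.join(backup_dir, name)
        if os.path.isfile(path) and os.path.getmtime(path) < cutoff:
            expired.append(path)
    return expired
```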

2. Testing

To investigate failure probabilities and impacts, we need to test what happens to the OpenStack cloud when some components fail. One such test is the "Chaos Monkey" developed by Netflix: a service that identifies groups of systems in an IT environment and randomly terminates some of them. These random terminations show what happens when systems in a complex IT environment fail unexpectedly. The risk of component failures in an OpenStack deployment can be tested with such Chaos Monkey services. By running multiple tests against multiple OpenStack configurations, one can learn whether the current architecture reaches the required availability level.
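A Chaos-Monkey-style victim selection can be sketched as below. The grouping (for example, all instances of one tier), the probability and the function name pick_victims are assumptions made for illustration. Actually terminating the chosen instances (e.g. with nova delete) is left out.

```python
import random

def pick_victims(groups, probability, rng=None):
    # For each group, terminate at most one random member with the given
    # probability; groups maps a group name to a list of instance IDs.
    rng = rng or random.Random()
    victims = []
    for name in sorted(groups):
        members = groups[name]
        if members and rng.random() < probability:
            victims.append(rng.choice(members))
    return victims
```

Passing a seeded random.Random makes a test run reproducible, which helps when comparing several OpenStack configurations under the same failures.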

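3. Debugging

Debugging Quantum with pdb

1. Insert a breakpoint in the code:

import pdb; pdb.set_trace()

2. Stop the service

3. Start the service manually in the foreground: /usr/bin/quantum-server --config-file /etc/quantum/quantum.conf --log-file /var/log/quantum/server.log

For Nova, set osapi_compute_workers=1 so that requests are not handled by several concurrent worker processes.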

4. Logging

Nova (Folsom) defines the following format in nova/openstack/common/log.py:

'%(asctime)s %(levelname)s %(name)s [%(request_id)s] %(instance)s %(message)s'

I usually set the following in the configuration file:

logging_default_format_string = '%(asctime)s %(levelname)s [%(name)s %(lineno)d] [%(process)d] %(message)s'

logging_context_format_string = '%(asctime)s %(levelname)s [%(name)s %(lineno)d] [%(process)d] %(message)s'

Quantum (Folsom) configuration:

log_format='%(asctime)s %(levelname)s [%(name)s %(lineno)d] [%(process)d] %(message)s'
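To preview what the format string above produces, the sketch below renders a hand-built record with Python's standard logging.Formatter, which OpenStack's logging module builds on. The logger name and line number are made up.

```python
import logging

FMT = ("%(asctime)s %(levelname)s [%(name)s %(lineno)d] "
       "[%(process)d] %(message)s")

def render(name, lineno, message):
    # Build a LogRecord by hand (level INFO) and format it with FMT.
    record = logging.LogRecord(name, logging.INFO, "example.py", lineno,
                               message, None, None)
    return logging.Formatter(FMT).format(record)

print(render("nova.compute.manager", 42, "Instance spawned"))
```

The printed line has the form `<date time,ms> INFO [nova.compute.manager 42] [<pid>] Instance spawned`. The module name, line number and process ID make it easy to locate the source line and tell worker processes apart.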

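Change the default log file rotation policy (rotate at 10 MB, keep 10 old files):

import logging.handlers
# logpath and log_root come from the surrounding code in log.py
filelog = logging.handlers.RotatingFileHandler(logpath, maxBytes=10*1024*1024, backupCount=10)
log_root.addHandler(filelog)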
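Here is a self-contained demonstration of size-based rotation with the same standard-library handler. It uses a tiny maxBytes so the rotation is visible. The logger name and paths are hypothetical.

```python
import logging
import logging.handlers

def make_rotating_logger(logpath, max_bytes, backup_count):
    # Rotate when the file would exceed max_bytes, keeping backup_count
    # old files named logpath.1, logpath.2, ...
    logger = logging.getLogger("rotation-demo")
    logger.setLevel(logging.INFO)
    logger.propagate = False
    handler = logging.handlers.RotatingFileHandler(
        logpath, maxBytes=max_bytes, backupCount=backup_count)
    logger.addHandler(handler)
    return logger, handler
```

Files beyond backupCount are discarded on rollover, so disk usage is bounded by roughly (backupCount + 1) * maxBytes.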

To log from a module, first import the logging wrapper:

from nova.openstack.common import log as logging

LOG = logging.getLogger(__name__)

Dump all CONF option values to the log:

cfg.CONF.log_opt_values(LOG, logging.DEBUG)

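Using rsyslog:

With many nodes, logging in to each one to analyze logs becomes tedious. Fortunately, Ubuntu installs the rsyslog service by default, so all we need to do is configure it.

rsyslog client: set use_syslog=True in each component's configuration. Each component can also be given its own facility, e.g. syslog_log_facility=LOG_LOCAL0.

Then add the following line to /etc/rsyslog.d/client.conf:

*.* @192.168.1.10

This forwards all log messages to that server (a single @ means UDP).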
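The facility travels inside each syslog packet as the priority prefix <facility * 8 + severity>. This is what lets the server route local0.* messages separately. The sketch below uses Python's standard SysLogHandler to send one record directly to a UDP address and show the prefix. In the setup above, services would normally log to the local rsyslog daemon, which forwards to 192.168.1.10. The logger name is hypothetical.

```python
import logging
import logging.handlers
import socket

def syslog_logger(host, port):
    # Send records over UDP with facility LOCAL0 (16); an INFO record (6)
    # therefore arrives with the priority prefix <134>.
    logger = logging.getLogger("local0-demo")
    logger.setLevel(logging.INFO)
    logger.propagate = False
    handler = logging.handlers.SysLogHandler(
        address=(host, port),
        facility=logging.handlers.SysLogHandler.LOG_LOCAL0)
    logger.addHandler(handler)
    return logger, handler
```

On the server side, rsyslog matches such packets with its local0.* selectors.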

rsyslog server: configure /etc/rsyslog.d/server.conf on the server (an example that handles only Nova logs):

# Enable UDP

$ModLoad imudp

# Listen on 192.168.1.10 only

$UDPServerAddress 192.168.1.10

# Port 514

$UDPServerRun 514

# Create logging templates for nova

$template NovaFile,"/var/log/rsyslog/%HOSTNAME%/nova.log"

$template NovaAll,"/var/log/rsyslog/nova.log"

# Log everything else to syslog.log

$template DynFile,"/var/log/rsyslog/%HOSTNAME%/syslog.log"

*.* ?DynFile

# Log various openstack components to their own individual file

local0.* ?NovaFile

local0.* ?NovaAll

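& ~

As a result, logs sent from host1 are stored in /var/log/rsyslog/host1/nova.log, while /var/log/rsyslog/nova.log collects the logs of all nodes. The final "& ~" discards the local0 messages after they have been written, so no later rule processes them.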

5. Nova

5.1 Updates

See Launchpad.

5.2 Notifications

Configure Nova to emit notifications (published to the 'nova' exchange with routing key 'monitor.*'):

--notification_driver=nova.openstack.common.notifier.rabbit_notifier

--notification_topics=notifications,monitor

To receive notifications when an instance's vm_state changes, set notify_on_state_change=vm_state;

To receive notifications when either the vm_state or the task state changes, set notify_on_state_change=vm_and_task_state.

nova/openstack/common/notifier already contains several notifier implementations.

Rackspace developed StackTach, a tool for collecting Nova notifications.
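Consumers bind to the topic exchange with patterns such as monitor.*. Below is a minimal sketch of AMQP topic matching, where * matches exactly one dot-separated word and # matches zero or more. It assumes routing keys of the form <topic>.<priority>, such as monitor.info; that key format is our assumption about the notifier.

```python
def topic_matches(pattern, routing_key):
    # AMQP topic semantics: "*" matches exactly one word, "#" zero or more.
    p, k = pattern.split("."), routing_key.split(".")

    def match(i, j):
        if i == len(p):
            return j == len(k)
        if p[i] == "#":
            return any(match(i + 1, jj) for jj in range(j, len(k) + 1))
        if j == len(k):
            return False
        return p[i] in ("*", k[j]) and match(i + 1, j + 1)

    return match(0, 0)
```

A consumer bound with monitor.* therefore receives monitor.info or monitor.error but not the notifications.* messages. Binding with monitor.# would also accept a bare monitor key.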

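5.3 Instance creation

5.3.1 block-device-mapping

If no mapping is specified, the image is downloaded to create a local root disk, which is deleted when the instance is deleted;

If a mapping is specified, the command is:

nova boot --image <image_id> --flavor 2 --key-name mykey --block-device-mapping vda=<vol_id>:<type>:<size>:<delete-on-terminate> <instance_name>

In this case the image argument is ignored. type is either snap or empty, and size is best left empty. (2013.06.08) In Grizzly, --image <id> can be omitted.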
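Below is a hypothetical parser for the --block-device-mapping value that makes the field layout explicit. The returned keys are illustrative names, not novaclient's internal format.

```python
def parse_bdm(spec):
    # "vda=<vol_id>:<type>:<size>:<delete-on-terminate>"; trailing fields
    # may be empty, e.g. "vda=<vol_id>:::0".
    device, _, mapping = spec.partition("=")
    fields = mapping.split(":") + [""] * 3
    vol_id, vol_type, size, delete = fields[:4]
    return {
        "device_name": device,
        "id": vol_id,
        "is_snapshot": vol_type == "snap",    # "snap": id names a snapshot
        "size": int(size) if size else None,  # empty: no explicit size
        "delete_on_termination": delete.lower() in ("1", "true"),
    }
```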

An example:

nova boot kong2 --flavor 6 --nic port-id=93604ec4-010e-4fa5-a792-33901223313b --key-name mykey --block-device-mapping vda=b66e294e-b997-48c1-9208-817be475e95b:::0

root@controller231:~# nova show kong2

+-------------------------------------+----------------------------------------------------------+

| Property | Value |

+-------------------------------------+----------------------------------------------------------+

| status | ACTIVE |

| updated | 2013-06-26T10:01:29Z |

| OS-EXT-STS:task_state | None |

| OS-EXT-SRV-ATTR:host | controller231 |

| key_name | mykey |

| image | Attempt to boot from volume - no image supplied |

| hostId | 083729f2f8f664fffd4cffb8c3e76615d7abc1e11efc993528dd88b9 |

| OS-EXT-STS:vm_state | active |

| OS-EXT-SRV-ATTR:instance_name | instance-00000021 |

| OS-EXT-SRV-ATTR:hypervisor_hostname | controller231.openstack.org |

| flavor | kong_flavor (6) |

| id | 8989a10b-5a89-4f87-9b59-83578eabb997 |

| security_groups | [{u'name': u'default'}] |

| user_id | f882feb345064e7d9392440a0f397c25 |

| name | kong2 |

| created | 2013-06-26T10:00:51Z |

| tenant_id | 6fbe9263116a4b68818cf1edce16bc4f |

| OS-DCF:diskConfig | MANUAL |

| metadata | {} |

| accessIPv4 | |

| accessIPv6 | |

| testnet01 network | 10.1.1.6 |

| progress | 0 |

| OS-EXT-STS:power_state | 1 |

| OS-EXT-AZ:availability_zone | nova |

| config_drive | |

+-------------------------------------+----------------------------------------------------------+

The block_device_info structure then passed to the Nova virt driver:

{

'block_device_mapping': [{

'connection_info': {

u'driver_volume_type': u'iscsi',

'serial': u'b66e294e-b997-48c1-9208-817be475e95b',

u'data': {

u'target_discovered': False,

u'target_iqn': u'iqn.2010-10.org.openstack:volume-b66e294e-b997-48c1-9208-817be475e95b',

u'target_portal': u'192.168.82.231:3260',

u'volume_id': u'b66e294e-b997-48c1-9208-817be475e95b',

u'target_lun': 1,

u'auth_password': u'jcYpzNiA4ZQ4dyiC26fB',

u'auth_username': u'CQZto4sC4HKkx57U4WfX',

u'auth_method': u'CHAP'

}

},

'mount_device': u'vda',

'delete_on_termination': False

}],

'root_device_name': None,

'ephemerals': [],

'swap': None

}

In nova-compute this is handled by the _setup_block_device_mapping method of the ComputeManager object, which calls Cinder's initialize_connection() and attach(). The call path:

cinder-api --(RPC)--> cinder-volume on the host holding the volume --> driver

initialize_connection returns:

connection_info:

{

'driver_volume_type':'iscsi',

'data': {

'target_discovered': False,

'target_iqn': 'iqn.2010-10.org.openstack:volume-a242e1b2-3f3f-42af-84a3-f41c87e19c2b',

'target_portal': '182.168.61.24:3260',

'volume_id': 'a242e1b2-3f3f-42af-84a3-f41c87e19c2b'

}

}

attach: updates the status (in-use), mountpoint (vda), attach_status (attached) and instance_uuid columns of the volumes table.
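The attach bookkeeping amounts to a single UPDATE on the volume's row. The toy sketch below runs it against an in-memory SQLite table that has only the columns named above. The real Cinder schema has many more.

```python
import sqlite3

def create_toy_db():
    # In-memory table with only the columns the attach step touches.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE volumes (id TEXT PRIMARY KEY, status TEXT, "
                 "mountpoint TEXT, attach_status TEXT, instance_uuid TEXT)")
    return conn

def attach(conn, volume_id, instance_uuid, mountpoint):
    # Record the attachment: status, mountpoint, attach_status, instance_uuid.
    conn.execute("UPDATE volumes SET status = 'in-use', mountpoint = ?, "
                 "attach_status = 'attached', instance_uuid = ? WHERE id = ?",
                 (mountpoint, instance_uuid, volume_id))
    conn.commit()
```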

5.3.2 aggregate

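All aggregate APIs are admin-only. Aggregates were originally introduced to support Xen hypervisor resource pools. Related configuration:

Add AggregateInstanceExtraSpecsFilter to the scheduler_default_filters option.

Workflow: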

$ nova aggregate-create fast-io nova

+----+---------+-------------------+-------+----------+

| Id | Name | Availability Zone | Hosts | Metadata |

+----+---------+-------------------+-------+----------+

| 1 | fast-io | nova | | |

+----+---------+-------------------+-------+----------+

$ nova aggregate-set-metadata 1 ssd=true (if the value is None, the key is deleted)

+----+---------+-------------------+-------+-------------------+

| Id | Name | Availability Zone | Hosts | Metadata |

+----+---------+-------------------+-------+-------------------+

| 1 | fast-io | nova | [] | {u'ssd': u'true'} |

+----+---------+-------------------+-------+-------------------+

$ nova aggregate-add-host 1 node1 (the host must be in the same zone as the aggregate)

+----+---------+-------------------+------------+-------------------+

| Id | Name | Availability Zone | Hosts | Metadata |

+----+---------+-------------------+------------+-------------------+

| 1 | fast-io | nova | [u'node1'] | {u'ssd': u'true'} |

+----+---------+-------------------+------------+-------------------+

# nova-manage instance_type set_key --name=<flavor_name> --key=ssd --value=true

or:

# nova flavor-key 1 set ssd=true
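Conceptually, AggregateInstanceExtraSpecsFilter passes a host only if every extra spec on the flavor matches the metadata of an aggregate containing that host. The simplified sketch below uses plain equality; the real filter may also accept comparison operators in extra specs. host_passes is a hypothetical name.

```python
def host_passes(aggregate_metadata, extra_specs):
    # aggregate_metadata: metadata dicts of all aggregates containing the host.
    # Each flavor extra spec must equal the value set on at least one of them.
    for key, required in extra_specs.items():
        values = {md[key] for md in aggregate_metadata if key in md}
        if required not in values:
            return False
    return True
```

With the workflow above, node1 (in fast-io, metadata ssd=true) passes for flavor 1 (extra spec ssd=true), while hosts outside the aggregate are filtered out. Flavors without extra specs are unaffected.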

For using aggregates together with Xen, see the article 《在OpenStack使用XenServer资源池浅析》 (A brief analysis of using XenServer resource pools in OpenStack).

(2013.5.26) In Grizzly, a zone can be specified when creating an aggregate. The zone and the aggregate then mean the same thing, so ordinary users can make use of the aggregate by choosing the zone. Example:

root@controller60:~/controller# nova aggregate-create my_aggregate my_zone
+----+--------------+-------------------+-------+----------+
| Id | Name | Availability Zone | Hosts | Metadata |
+----+--------------+-------------------+-------+----------+
| 2 | my_aggregate | my_zone | | |
+----+--------------+-------------------+-------+----------+

root@controller60:~/controller# nova aggregate-details 2

+----+--------------+-------------------+-------+------------------------------------+

| Id | Name | Availability Zone | Hosts | Metadata |

+----+--------------+-------------------+-------+------------------------------------+

| 2 | my_aggregate | my_zone | [] | {u'availability_zone': u'my_zone'} |

+----+--------------+-------------------+-------+------------------------------------+

Here the zone is simply one entry (availability_zone) in the aggregate's metadata.

5.3.3 Booting on a specified host

Admin-only operation:

nova boot --image aee1d242-730f-431f-88c1-87630c0f07ba --flavor 1 --availability-zone nova:<host_name> testhost

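5.3.4 Injection

When an instance is created, key, password, network configuration, metadata and file contents can be injected into the instance image;

user data: the instance can retrieve user data from the metadata service or from the config drive, e.g. by running the following inside the instance: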

$ curl http://169.254.169.254/2009-04-04/user-data

This is some text

$ curl http://169.254.169.254/openstack/2012-08-10/user_data

This is some text

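User data together with cloud-init lets you configure how an instance behaves at boot. cloud-init is an open-source project by Canonical. It is preinstalled in Ubuntu images and works with both the Compute metadata service and the Compute config drive. cloud-init recognizes user data that starts with #! (a script to execute, similar to writing an /etc/rc.local script) or #cloud-config (which can work together with Puppet or Chef).

Config drive: a drive that carries data which the instance mounts and reads at boot. For example, when DHCP is unavailable, network configuration can be passed through the config drive. cloud-init reads the config drive automatically. If the image has no cloud-init, you need a custom script that mounts the config drive, reads the data and acts on it.

A more complex boot example:

nova boot --config-drive=true --image my-image-name --key-name mykey --flavor 1 --user-data ./my-user-data.txt myinstance --file /etc/network/interfaces=/home/myuser/instance-interfaces --file known_hosts=/home/myuser/.ssh/known_hosts --meta role=webservers --meta essential=false

All of the information specified above can be read from the config drive.

You can also set the option force_config_drive=true to always create a config drive.

Accessing the config drive inside the instance: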

# mkdir -p /mnt/config

# mount /dev/disk/by-label/config-2 /mnt/config

If the operating system does not use udev, the /dev/disk/by-label directory may not exist. In that case, use:

# blkid -t LABEL="config-2" -odevice

to find the device that holds the config drive, then run:

# mkdir -p /mnt/config

# mount /dev/vdb /mnt/config

Contents of the config drive (after running the boot command above):

ec2/2009-04-04/meta-data.json

ec2/2009-04-04/user-data

ec2/latest/meta-data.json

ec2/latest/user-data

openstack/2012-08-10/meta_data.json

openstack/2012-08-10/user_data

openstack/content

openstack/content/0000

openstack/content/0001

openstack/latest/meta_data.json

openstack/latest/user_data

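The ec2 entries will be removed in the future. Use the entry with the highest version number, e.g. openstack/2012-08-10/meta_data.json, whose content is identical to openstack/latest/meta_data.json.

openstack/2012-08-10/user_data (same content as openstack/latest/user_data) is created only when --user-data is given, and contains the content of the file passed in.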
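Without cloud-init, a custom script has to read the config drive itself. The minimal sketch below assumes the drive is already mounted at /mnt/config as shown above, and it follows the advice to pick the highest dated version under openstack/.

```python
import json
import os

def latest_openstack_dir(root):
    # Highest dated version under <root>/openstack; "latest" and "content"
    # are skipped. YYYY-MM-DD names sort correctly as plain strings.
    base = os.path.join(root, "openstack")
    versions = [name for name in os.listdir(base)
                if name not in ("latest", "content")
                and os.path.isdir(os.path.join(base, name))]
    return os.path.join(base, max(versions))

def read_config_drive(root="/mnt/config"):
    # Returns (meta_data dict, user_data text or None).
    version_dir = latest_openstack_dir(root)
    with open(os.path.join(version_dir, "meta_data.json")) as f:
        meta = json.load(f)
    user_data = None
    user_data_path = os.path.join(version_dir, "user_data")
    if os.path.exists(user_data_path):  # only created with --user-data
        with open(user_data_path) as f:
            user_data = f.read()
    return meta, user_data
```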

(2013.06.27) Grizzly offers a new way to retrieve injected keys, user data and similar content; see metadata在OpenStack中的使用(一) (Using metadata in OpenStack, part 1). (2013.07.23) For Ubuntu images, SSH key injection has some limitations:

"Ubuntu cloud images do not have any ssh HostKey generated inside them (/etc/ssh/ssh_host_{ecdsa,dsa,rsa}_key). The keys are generated by cloud-init after it finds a metadata service. Without a metadata service, they do not get generated. ssh will drop your connections immediately without HostKeys."

https://lists.launchpad.net/openstack/msg12202.html

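5.3.5 request_network (Grizzly)

1. If only a port is specified, the port's device_id (VM ID) and device_owner ('compute:zone') are updated; any security groups specified at the same time are ignored;

2. Otherwise, a port is created with the parameters device_id, device_owner, (fixed_ips), network_id, admin_state_up, security_groups, (mac_address, which lets the virtualization layer supply a usable MAC address)

If Quantum's security group implementation is used, the security group field in Nova's instance table is empty.

Structure of a VIF: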

{

id: XXX,

address: XXX(mac),

type: XXX(ovs or bridge or others),

ovs_interfaceid: XXX(portid or none),

devname: XXX('tap+portid'),

network: {

id: XXX,

bridge: XXX('br-int' or 'brq+netid'),

injected: XXX,

label: XXX,

tenant_id: XXX,

should_create_bridge: XXX(true or none),

subnets: [subnet: {

cidr: XXX,

gateway: {

address: XXX,

type: 'gateway'

},

dhcp_server: XXX,

dns: [dns: {

address: XXX,

type: 'dns'

}],

ips: [ip: {

address: XXX,

type: 'fixed',

floating_ips: {

address: XXX,

type: 'floating'

}

}],

routes: []

}]

}

}

5.3.6 Cinder

1. initialize_connection(volume, connector)

LVM: retrieves the volume's target_portal, target_iqn, target_lun and other information

2. attach(volume, instance_uuid, mountpoint)

5.3.7 Instance volumes and images

When the libvirt driver creates an instance, it first builds a disk_info structure, roughly as follows:

'disk_info':

{

'cdrom_bus':'ide',

'disk_bus':'virtio',

'mapping': {

'root': {

'bus': 'virtio/ide/xen/...',

'dev': 'vda1',

'type': 'disk'

},

'disk': { # present only for a local root disk; absent when booting from a backend volume

'bus': 'virtio',

'dev': 'vda1',

'type': 'disk'

},

'disk.local': { # present if the flavor has an ephemeral disk

'bus': 'virtio',

'dev': 'vda2',

'type': 'disk'

},

'disk.ephX': { # one per entry in the BDM ephemerals

'bus': 'virtio/ide/xen/...',

'dev': 'XXX',

'type': 'disk'

},

'disk.swap': { # taken from the BDM first, otherwise from the flavor

'bus': 'virtio/ide/xen/...',

'dev': 'XXX',

'type': 'disk'

},

'/dev/XXX': { # backend volume

'bus': 'virtio/ide/xen/...',

'dev': 'XXX',

'type': 'disk'

},

'disk.config': { # present if the instance uses a config drive

'bus': 'virtio',

'dev': 'XXX25',

'type': 'disk'

}

}

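}

In the generated XML, each disk has a source_path parameter. For a local root disk it takes the form "/var/lib/nova/instances/<uuid>/disk" (in Folsom, "/var/lib/nova/instances/<instance_name>/disk"), with source_type=file, driver_format=qcow2, source_device=disk, and target_dev set to the disk's device name;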

[root@Fedora17 ~]# ll /var/lib/nova/instances/d6bd399f-f374-45c6-840b-01f36181286d/
total 1672
-rw-rw----. 1 qemu qemu 56570 May 7 11:43 console.log
-rw-r--r--. 1 qemu qemu 1769472 May 7 11:45 disk
-rw-r--r--. 1 nova nova 1618 May 7 06:41 libvirt.xml

e2fsck -fp diskfile: force a check of the file system and repair it automatically

resize2fs diskfile: resize the file system

5.4 Quantum in Nova

OVS (with filtering): libvirt_vif_driver=nova.virt.libvirt.vif.LibvirtHybridOVSBridgeDriver

OVS (libvirt version < 0.9.11, without filtering):

libvirt_vif_driver=nova.virt.libvirt.vif.LibvirtOpenVswitchDriver

OVS (libvirt version >= 0.9.11, without filtering):

libvirt_vif_driver=nova.virt.libvirt.vif.LibvirtOpenVswitchVirtualPortDriver

Nova's LibvirtHybridOVSBridgeDriver (recommended as the default) creates an additional bridge and related interfaces for each tap interface.

The bridge exists to make Nova's security groups work. Security groups are implemented with iptables, but iptables rules are not applied to packets forwarded on OVS bridges. An extra bridge per VIF therefore gives iptables a place to apply its rules, so that security groups work.
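Under the hybrid driver, each VIF ends up with four devices: tap, then qbr (the Linux bridge where iptables rules apply), then the qvb/qvo veth pair, then br-int. The sketch below derives the names. It assumes Nova's convention of a three-letter prefix plus the port ID, truncated to 14 characters, as we recall it from the Grizzly-era vif driver; check this against your release.

```python
NIC_NAME_LEN = 14  # assumed; Linux itself allows at most 15 characters

def hybrid_vif_devices(port_id):
    # Assumed naming convention: 3-letter prefix + port ID, truncated.
    def name(prefix):
        return (prefix + port_id)[:NIC_NAME_LEN]
    return {
        "tap": name("tap"),               # instance's tap device, plugged into qbr
        "linux_bridge": name("qbr"),      # per-VIF bridge where iptables applies
        "veth_bridge_side": name("qvb"),  # veth end attached to qbr
        "veth_ovs_side": name("qvo"),     # veth end attached to br-int
    }
```

For the port 93604ec4-010e-4fa5-a792-33901223313b used in the boot example, this yields qbr93604ec4-01 and qvo93604ec4-01. Knowing the names helps when tracing a VIF with brctl show and ovs-vsctl show.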

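5.5 Security groups in Folsom

SecurityGroupAPI::trigger_members_refresh() in nova/compute/api.py

SecurityGroupAPI::trigger_handler() in nova/compute/api.py

1. Find the instances in the security groups whose rules grant access to the instance's security groups, and send a refresh_instance_security_rules message to the compute nodes hosting them;

2. Call the trigger_security_group_members_refresh method of the class named by the security_group_handler option;

3. On the compute node, the chain rules associated with the instance are rebuilt.

Adding/removing security group rules:

1. Add/delete the rule record in the DB;

2. Send a refresh_instance_security_rules RPC message to the compute node of every instance in the security group;

3. ComputeManager then calls the ComputeDriver's refresh_instance_security_rules(instance) method;

4. Inside the driver (KVM, for example), FirewallDriver's refresh_instance_security_rules is called, which rebuilds the chain rules associated with the instance;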
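The fan-out in step 2 can be sketched as grouping the affected instances by compute host. The instance dicts and the function name below are hypothetical stand-ins for Nova's DB objects and RPC calls.

```python
from collections import defaultdict

def refresh_messages(group_id, instances):
    # instances: dicts with "uuid", "host" and "security_groups".
    # Returns {host: [instance uuids]}: one refresh_instance_security_rules
    # message per affected instance, grouped by the compute host to send to.
    per_host = defaultdict(list)
    for inst in instances:
        if group_id in inst["security_groups"]:
            per_host[inst["host"]].append(inst["uuid"])
    return dict(per_host)
```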

5.6 Rescue mode

Rescue mode boots from a rescue image and mounts the original virtual machine's disk as a secondary block device. Steps:

1. The VM is marked as being in rescue mode

2. The VM is shut down

3. A new VM is created with an identical network configuration but a new root password

4. The new VM uses a rescue image as its primary filesystem, and the original VM's primary filesystem becomes its secondary filesystem

5.7 About resize

How each hypervisor driver handles a resize to a smaller disk:

libvirt: logs it cannot resize to smaller and just keeps the larger disk

xen: tries to copy contents to a smaller disk, fails if too large

hyperv: always errors out if new disk size is smaller

powervm: silently keeps the larger disk

vmware: (couldn't find where migration code handled resize)

The only mention in the API guide - http://docs.openstack.org/api/openstack-compute/2/content/Resize_Server-d1e3707.html - is "scaling the server up or down."

What is the *expected* behavior here? For metering reasons, my thought is that if the disk cannot be sized down, an error should be thrown, and the libvirt and powervm drivers should be modified accordingly. One issue with this approach is how we expose to the end user what happened; I don't believe the ERROR state carries any details. Auto-reverting the resize has the same drawback. I would like to explore standardizing the behavior in Havana, documenting it in the API guide, and syncing the hypervisors.

5.8 nova-scheduler

scheduler_max_attempts: defaults to 3;

scheduler_available_filters: the filters available in the system, not necessarily all in use; default ['nova.scheduler.filters.all_filters'];

scheduler_default_filters: the filters actually used, which must be a subset of the available filters; default ['RetryFilter','AvailabilityZoneFilter','RamFilter','ComputeFilter','ComputeCapabilitiesFilter','ImagePropertiesFilter'];

nova-scheduler consumes from a shared queue on the OpenStack topic exchange. It can therefore run as multiple processes, with the message queue itself balancing the load. For availability purposes, or for very large or high-schedule-frequency installations, you should consider running multiple nova-scheduler services. No special load balancing is required, as nova-scheduler communicates entirely through the message queue.
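The toy version of the filter scheduler below shows how filters prune the host list and how scheduler_max_attempts bounds retries. The HostState and Request types, the assumed RAM overcommit ratio of 1.5 and the "most free RAM" weigher are simplifications, not Nova's classes.

```python
from dataclasses import dataclass, field

@dataclass
class HostState:
    name: str
    total_ram_mb: int
    used_ram_mb: int
    service_up: bool = True

@dataclass
class Request:
    ram_mb: int
    tried_hosts: list = field(default_factory=list)  # hosts that already failed
    attempt: int = 1

RAM_ALLOCATION_RATIO = 1.5  # assumed overcommit ratio

def retry_filter(host, req):
    # Do not retry on a host where this request already failed.
    return host.name not in req.tried_hosts

def ram_filter(host, req):
    # Overcommit: treat the host as having total * ratio MB of RAM.
    usable = host.total_ram_mb * RAM_ALLOCATION_RATIO - host.used_ram_mb
    return usable >= req.ram_mb

def compute_filter(host, req):
    # Only hosts whose compute service is up.
    return host.service_up

FILTERS = [retry_filter, ram_filter, compute_filter]

def schedule(hosts, req, max_attempts=3):
    if req.attempt > max_attempts:
        raise RuntimeError("exceeded scheduler_max_attempts")
    candidates = [h for h in hosts if all(f(h, req) for f in FILTERS)]
    if not candidates:
        raise RuntimeError("no valid host found")
    # Toy weigher: prefer the host with the most free RAM.
    return max(candidates, key=lambda h: h.total_ram_mb - h.used_ram_mb).name
```

Because every scheduler process consumes the same shared queue, running several copies of this logic needs no coordination beyond the broker. Each request is delivered to exactly one of them.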

5.9 Attaching volumes

6. Cinder

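6.1 Grizzly updates

See Launchpad.

6.2 Notes

When a volume is created from a snapshot and the snapshot_same_host option is true, the RPC message is sent directly to the host that holds the snapshot's source volume; otherwise the request is handed to cinder-scheduler.

The scheduler_driver option sets the cinder-scheduler driver. scheduler_driver=cinder.scheduler.filter_scheduler.FilterScheduler, which is also the default.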

7. Quantum

7.1 Grizzly updates

See Launchpad.

7.2 dhcp-agent

Dnsmasq configuration directory: /var/lib/quantum/dhcp/

(Grizzly) The dhcp_agent_notification option controls whether RPC messages are sent to the DHCP agent.

7.3 l3-agent

If use_namespaces=True, the agent can handle multiple routers;

If use_namespaces=False, it can handle only one router (set by the router_id option);

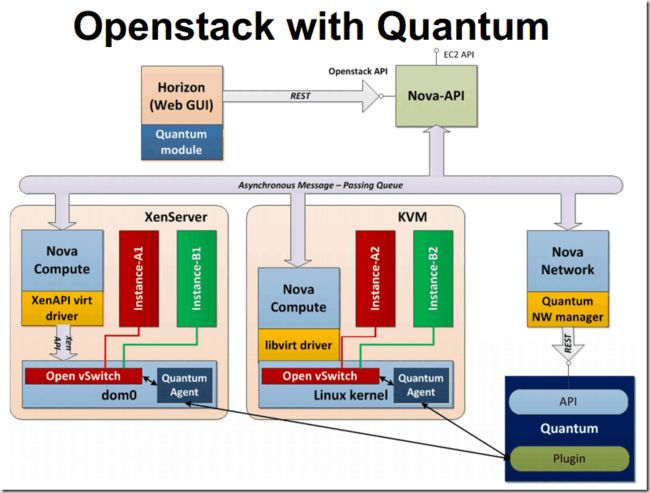

7.4 Quantum with Xen and KVM

7.5 Quota

[QUOTAS]quota_driver = quantum.extensions._quotav2_driver.DbQuotaDriver

In Folsom, only the OVS and LinuxBridge plugins support quotas.

Grizzly: [QUOTAS] quota_driver = quantum.db.quota_db.DbQuotaDriver

This option defaults to quantum.quota.ConfDriver, which reads quotas from the configuration file.

8. Keystone

8.1 PKI

#token_format = UUID

certfile = /home/boden/workspaces/openstack/keystone/tests/signing/signing_cert.pem

keyfile = /home/boden/workspaces/openstack/keystone/tests/signing/private_key.pem

ca_certs = /home/boden/workspaces/openstack/keystone/tests/signing/cacert.pem

#key_size = 1024

#valid_days = 3650

#ca_password = None

token_format = PKI

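Keystone holds the public and private keys; the public key can be retrieved through the API;

Services fetch the public key from Keystone at startup;

Keystone signs the token data (user information etc.) with its private key and returns the signed result to the user as the token; an MD5 hash of this token serves as the token's ID;

Services do not need to contact Keystone for every request; they validate the token locally, which improves performance.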

9. Glance

Container format: indicates whether the image file also contains metadata. The main values are ovf, ami (aki, ari) and bare.

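9.1 Image states

queued

The image ID has been reserved, but the image data has not yet been uploaded to Glance.

saving

The image data is being uploaded. This state is skipped when the image is registered via "POST /images" with the "x-image-meta-location" header, because the image data already has an available location.

active

The image is available.

killed

An error occurred while uploading the image, or the image is not readable.

deleted

The image information is retained, but the image is no longer available. It will be removed after some time.

pending_delete

Pending deletion: the image data has not been removed yet, so an image in this state can be recovered.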
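The state descriptions imply a small state machine. The sketch below uses a simplified transition table inferred from those descriptions; it is not taken from Glance's code, and killed and deleted are modelled as having no outgoing transitions.

```python
TRANSITIONS = {
    "queued": {"saving", "active"},     # active directly when a location is given
    "saving": {"active", "killed"},     # upload finished or failed
    "active": {"pending_delete", "deleted"},
    "pending_delete": {"active", "deleted"},  # still recoverable
    "killed": set(),
    "deleted": set(),
}

def transition(current, new):
    # Return the new state, or raise if the table does not allow the move.
    if new not in TRANSITIONS.get(current, set()):
        raise ValueError("illegal transition %s -> %s" % (current, new))
    return new
```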

Downloading an image in code: image_service.download(context, image_id, image_file)

9.2、制作可启动的镜像

方法一:kvm-img/qemu-img create -f raw xp.img 3G

kvm -m 1024 -cdrom winxp.iso -drive file=xp.img,if=virtio,boot=on -fda virtio-win-1.1.16.vfd -boot d -nographic -vnc :9

对于做好的镜像,查看/etc/sysconfig/network-scripts/ifcfg-eth0并删除HWADDR=行

方法二:

一个虚拟机只有系统卷,目前仅支持KVM和Xen,需要qemu-img 0.14以上版本,虚拟机使用qcow2类型的镜像,配置文件中:use_cow_images=true。执行(之前最好在虚拟机内执行sync):

nova image-create <虚拟机id> <image name>

然后用nova image-list可以查看

实际调用的是server对象的createImage

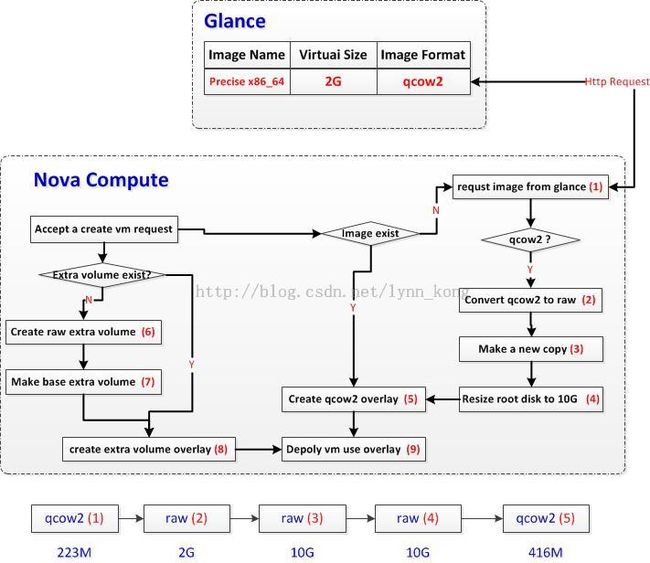

9.3、VM创建过程中镜像的变化过程

10. Performance

2. Set quotas sensibly according to the number of tenants

3. Use overprovisioning (overcommit): tune the scheduler filters so that hypervisors appear to have more resources than they physically do

4. Plan node capacity carefully: a more powerful node runs more VMs, which inevitably increases resource contention (disk, bandwidth) between them and slows responses

5. Choose a suitable hypervisor. New features are tested most thoroughly on KVM, and KVM is the best choice for Linux VMs; if you run only Windows, consider Hyper-V

6. Tune the hypervisor

7. Glance needs plenty of storage space, but performance is not critical. The large space requirement comes from many cloud users relying heavily on instance snapshots for cloning and backup

8. For Cinder, it is all about data accessibility and speed. The volume node needs to provide a high level of IOPS. Use two NICs with bonding/LACP for network reliability and speed, and optimize iSCSI.

9. Network. For larger deployments, we recommend dedicating a separate physical connection to each type of network traffic.