Practice Every Day_13

继续昨天的话题,还有一类搜索的方法。用QueryParser来分析用户输入的关键词,将关键词转为Query对象,使用QueryParser解析多个关键词。

具体使用方法如下:

QueryParser parser=new QueryParser(Version.Lucene_35,String field,Analyzer analyzer);

Query query=parser.parse(String keyword);

用法举例:

Query query=parser.parse("I football")//含有“I”或“football”的空格默认为or

Query query=parser.parse("I AND football")//既含有“I”又“football”的

//改变空格的默认操作符,以下改为and

parser.setDefaultOperator(Operator.AND);

//改变搜索域和搜索关键词

query=parser.parse("name:Mike");

//搜索name为j开头的

query=parser.parse("name:j*);

//开启第一个字符的通配符匹配,默认为关闭的

parser.setAllowLeadingWildcard(true);

//搜索email以“@itat.org”结尾的

query=parser.parse("email:*@itat.org");

//匹配name中没有mike但content中含有football的

query=parser.parse("-name:Mike+football");

//匹配一个区间(TO要大写)

query=parser.parse("id:[1TO3]");

//匹配I和football之间有一个单词距离的

query=parser.parse( "\"I football\"~1");

//模糊查询

query=parser.parse("name:make~");

说实话今天没学多少东西,只是改了改昨天精确搜索和模糊搜索的代码

下面是修改之后的代码:

Query query=parser.parse("I football")//含有“I”或“football”的空格默认为or

//j建立索引和搜索器

package MySearcher;

import java.io.File;

import java.io.FileReader;

import java.io.IOException;

import org.apache.lucene.analysis.Analyzer;

import org.apache.lucene.analysis.standard.StandardAnalyzer;

import org.apache.lucene.document.Document;

import org.apache.lucene.document.Field;

import org.apache.lucene.document.NumericField;

import org.apache.lucene.index.CorruptIndexException;

import org.apache.lucene.index.IndexReader;

import org.apache.lucene.index.IndexWriter;

import org.apache.lucene.index.IndexWriterConfig;

import org.apache.lucene.index.Term;

import org.apache.lucene.queryParser.ParseException;

import org.apache.lucene.queryParser.QueryParser;

import org.apache.lucene.search.FuzzyQuery;

import org.apache.lucene.search.IndexSearcher;

import org.apache.lucene.search.Query;

import org.apache.lucene.search.ScoreDoc;

import org.apache.lucene.search.TermQuery;

import org.apache.lucene.search.TopDocs;

import org.apache.lucene.store.Directory;

import org.apache.lucene.store.FSDirectory;

import org.apache.lucene.store.LockObtainFailedException;

import org.apache.lucene.util.Version;

public class IndexAndSearcher

{

private final static Analyzer analyzer=new StandardAnalyzer(Version.LUCENE_35);

private static Directory directory=null;

static

{

try

{

directory=FSDirectory.open(new File("f:Index_04"));

}

catch(IOException e)

{

e.printStackTrace();

}

}

public static Directory getDirectory()

{

return directory;

}

public static void index() throws LockObtainFailedException

{

IndexWriter writer=null;

try

{

writer=new IndexWriter(directory,new IndexWriterConfig(Version.LUCENE_35,new StandardAnalyzer(Version.LUCENE_35)));

File file=new File("e:/Lucene实例/example/");

Document doc=null;

for(File f:file.listFiles())

{

doc=new Document();

doc.add(new Field("content",new FileReader(f)));

doc.add(new Field("filename",f.getName(),Field.Store.YES,Field.Index.NOT_ANALYZED));

doc.add(new Field("path",f.getAbsolutePath(),Field.Store.YES,Field.Index.NOT_ANALYZED));

doc.add(new NumericField("date",Field.Store.YES,true).setLongValue(f.lastModified()));

doc.add(new NumericField("size",Field.Store.YES,true).setIntValue((int)(f.length()/1024)));

writer.addDocument(doc);

}

}

catch(CorruptIndexException e)

{

e.printStackTrace();

}

catch(LockObtainFailedException e)

{

e.printStackTrace();

}

catch(IOException e)

{

e.printStackTrace();

}

finally

{

try

{

if(writer!=null) writer.close();

}

catch(CorruptIndexException e)

{

e.printStackTrace();

}

catch(IOException e)

{

e.printStackTrace();

}

}

}

public static void check() throws IOException

{ //检查索引是否被正确建立(打印索引)

FSDirectory directory = FSDirectory.open(new File("f:Index_04"));

IndexReader reader = IndexReader.open(directory);

for(int i = 0;i<reader.numDocs();i++){

System.out.println(reader.document(i));

}

}

public static void searcher1(String f,String s,int num) throws IOException,CorruptIndexException

{

Directory directory=FSDirectory.open(new File("f:Index_04"));

IndexReader reader= IndexReader.open(directory);

IndexSearcher searcher=new IndexSearcher(reader);

Term t=new Term(f,s);

Query query=new TermQuery(t);

//QueryParser parser=new QueryParser(Version.LUCENE_35,f,analyzer);

//Query query=parser.parse(s);

TopDocs tds=searcher.search(query,num);

int total=tds.totalHits;

System.out.println("共搜索到"+total+"条结果");

ScoreDoc[] sds=tds.scoreDocs;

for(ScoreDoc sd:sds)

{

Document doc=searcher.doc(sd.doc);

System.out.println(doc.get("path")+doc.get("filename"));

}

searcher.close();

}

public static void searcher2(String f,String s,int num) throws CorruptIndexException, IOException, ParseException

{

Directory directory=FSDirectory.open(new File("f:Index_04"));

IndexReader reader= IndexReader.open(directory);

IndexSearcher searcher=new IndexSearcher(reader);

Term t=new Term(f,s);

FuzzyQuery query=new FuzzyQuery(t,0.1f);

TopDocs tds=searcher.search(query,num);

int total=tds.totalHits;

System.out.println("共搜索到"+total+"条结果");

ScoreDoc[] sds1=tds.scoreDocs;

for(ScoreDoc sd:sds1)

{

Document doc=searcher.doc(sd.doc);

System.out.println(doc.get("path")+doc.get("filename"));

}

}

}

//测试类

package MySearcher;

import java.io.IOException;

import org.apache.lucene.index.CorruptIndexException;

import org.apache.lucene.queryParser.ParseException;

public class Test {

public static void main(String[] args) throws IOException ,CorruptIndexException, ParseException

{

String f="content";

String s="private";

int num=10;

IndexAndSearcher.index();

// IndexAndSearcher.check();

System.out.println("精确搜索的结果为");

IndexAndSearcher.searcher1(f,s, num);

System.out.println("模糊搜索的结果为");

IndexAndSearcher.searcher2(f,s,num);

}

}

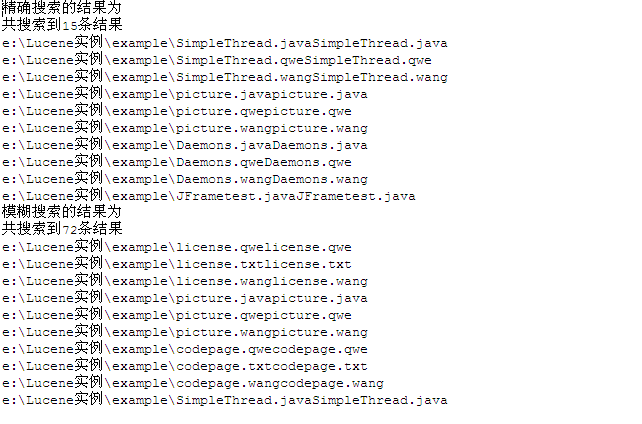

以下是搜索到的结果:

今天效率好低啊!My mood got dysphoric!