Memory Management (1): An Overview of Page Frame Management

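Abstract: Memory management covers two broad areas: the management of physical memory and the management of virtual memory. The former is "RAM management"; the latter is the management of process address spaces. The two are tied together by the page fault mechanism. Under RAM management we mainly cover page frame management and memory area management, which present two different techniques for handling physically contiguous memory, while "noncontiguous memory area management" presents a third technique, for noncontiguous memory areas. This series will cover memory zones, kernel mappings, the buddy system, slab, and memory pools. The series is based on Linux kernel 2.6.34; this installment explains the data structures and algorithms related to page frame management.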

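Dynamic physical memory (everything except the part reserved for hardware and for the kernel's static data) is divided into page frames for management. A page frame can be 4 KB, 2 MB, or 4 MB; this article uses 4 KB. Note that this installment is about how the kernel allocates pages for itself, so it concerns only the top 1 GB of the linear address space and not the 0-3 GB range. Most of the data structures and definitions below relate to that region.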

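1. Page descriptors

Data structure definitions:

page: a struct type; the page frame descriptor

mem_map: the array that holds all page frame descriptors

Function definitions:

virt_to_page(addr): macro that yields the address of the page descriptor for the linear address addr

pfn_to_page(pfn): macro that yields the address of the page descriptor for page frame number pfn (I am still unsure about how these two are implemented)

The kernel must keep track of the current state of every page frame. That state is stored in a page descriptor of type page, which is 32 bytes long (bytes, not bits); all page descriptors live in the mem_map array. The fields of the page descriptor are as follows: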

    struct page {
        unsigned long flags;            /* Atomic flags, some possibly
                                         * updated asynchronously */
        atomic_t _count;                /* Usage count, see below. */
        union {
            atomic_t _mapcount;         /* Count of ptes mapped in mms,
                                         * to show when page is mapped
                                         * & limit reverse map searches.
                                         */
            struct {                    /* SLUB */
                u16 inuse;
                u16 objects;
            };
        };
        union {
            struct {
                unsigned long private;  /* In the buddy system: the order
                                         * of a page block
                                         */
                struct address_space *mapping;  /* If low bit clear, points to
                                         * inode address_space, or NULL.
                                         * If page mapped as anonymous
                                         * memory, low bit is set, and
                                         * it points to anon_vma object:
                                         * see PAGE_MAPPING_ANON below.
                                         */
            };
    #if USE_SPLIT_PTLOCKS
            spinlock_t ptl;
    #endif
            struct kmem_cache *slab;    /* SLUB: Pointer to slab */
            struct page *first_page;    /* Compound tail pages */
        };
        union {
            pgoff_t index;              /* Our offset within mapping. */
            void *freelist;             /* SLUB: freelist req. slab lock */
        };
        struct list_head lru;           /* Pageout list, eg. active_list
                                         * protected by zone->lru_lock !
                                         */
        /*
         * On machines where all RAM is mapped into kernel address space,
         * we can simply calculate the virtual address. On machines with
         * highmem some memory is mapped into kernel virtual memory
         * dynamically, so we need a place to store that address.
         * Note that this field could be 16 bits on x86 ... ;)
         *
         * Architectures with slow multiplication can define
         * WANT_PAGE_VIRTUAL in asm/page.h
         */
    #if defined(WANT_PAGE_VIRTUAL)
        void *virtual;                  /* Kernel virtual address (NULL if
                                           not kmapped, ie. highmem) */
    #endif /* WANT_PAGE_VIRTUAL */
    #ifdef CONFIG_WANT_PAGE_DEBUG_FLAGS
        unsigned long debug_flags;      /* Use atomic bitops on this */
    #endif
    #ifdef CONFIG_KMEMCHECK
        /*
         * kmemcheck wants to track the status of each byte in a page; this
         * is a pointer to such a status block. NULL if not tracked.
         */
        void *shadow;
    #endif
    };

We focus on two fields:

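_count: the page's reference count, that is, the number of users of the page, as reported by page_count(). In older kernels such as 2.6.11 the counter starts at -1, page_count() returns _count+1, and _count >= 0 means the frame is not free. In 2.6.34 the bias is gone: page_count() returns _count itself, and a value of 0 means the frame is free.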

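flags: the status flags of the page frame, up to 32 of them. The PageXyz macros return the value of the corresponding flag, while SetPageXyz and ClearPageXyz set and clear that flag.

Summary: kernel programming borrows many object-oriented ideas: it defines data structures together with the operations on them.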

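The flag values and the related macros look like this. (This particular listing uses the names from an older, 2.4-era header; 2.6.34 defines the bits in enum pageflags in include/linux/page-flags.h, but the pattern is the same.)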

    /* Page flag bit values */
    #define PG_locked          0
    #define PG_error           1
    #define PG_referenced      2
    #define PG_uptodate        3
    #define PG_dirty           4
    #define PG_decr_after      5
    #define PG_active          6
    #define PG_inactive_dirty  7
    #define PG_slab            8
    #define PG_swap_cache      9
    #define PG_skip           10
    #define PG_inactive_clean 11
    #define PG_highmem        12
    /* bits 21-29 unused */
    #define PG_arch_1         30
    #define PG_reserved       31

    /* Make it prettier to test the above... */
    #define Page_Uptodate(page)     test_bit(PG_uptodate, &(page)->flags)
    #define SetPageUptodate(page)   set_bit(PG_uptodate, &(page)->flags)
    #define ClearPageUptodate(page) clear_bit(PG_uptodate, &(page)->flags)
    #define PageDirty(page)         test_bit(PG_dirty, &(page)->flags)
    #define SetPageDirty(page)      set_bit(PG_dirty, &(page)->flags)
    #define ClearPageDirty(page)    clear_bit(PG_dirty, &(page)->flags)
    #define PageLocked(page)        test_bit(PG_locked, &(page)->flags)
    #define LockPage(page)          set_bit(PG_locked, &(page)->flags)
    #define TryLockPage(page)       test_and_set_bit(PG_locked, &(page)->flags)

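There are two mappings: from a linear address to a page descriptor address, and from a page frame number to a page descriptor. They are expressed by virt_to_page(addr) and pfn_to_page(pfn) respectively. The relevant code is:

    #define virt_to_page(kaddr) pfn_to_page(__pa(kaddr) >> PAGE_SHIFT)
    #define pfn_to_kaddr(pfn)   __va((pfn) << PAGE_SHIFT)

Note that the second macro shown here is pfn_to_kaddr, which converts a page frame number to a kernel linear address. With the flat memory model, pfn_to_page(pfn) amounts to mem_map + (pfn - ARCH_PFN_OFFSET).

Note: page descriptors are used to manage each page frame. The structure is struct page, the corresponding variable is mem_map, and the conversion macros are virt_to_page and pfn_to_page.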

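2. Non-uniform memory access (NUMA)

Data structure definitions:

pg_data_t: the per-node descriptor of the node's memory as a whole

pgdat_list: points to the descriptor of the first node

In the NUMA memory model, every node has a descriptor of type pg_data_t. All node descriptors are kept in a list whose first element is pointed to by the pgdat_list variable. (That list belongs to older kernels such as 2.6.11; in 2.6.34 a node descriptor is normally reached through the NODE_DATA(nid) macro.)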

    typedef struct pglist_data {
        struct zone node_zones[MAX_NR_ZONES];   /* zone descriptors of this node; see struct zone below */
        struct zonelist node_zonelists[MAX_ZONELISTS];
        int nr_zones;                           /* number of zones in this node */
    #ifdef CONFIG_FLAT_NODE_MEM_MAP /* means !SPARSEMEM */
        struct page *node_mem_map;              /* page descriptor array of this node */
    #ifdef CONFIG_CGROUP_MEM_RES_CTLR
        struct page_cgroup *node_page_cgroup;
    #endif
    #endif
    #ifndef CONFIG_NO_BOOTMEM
        struct bootmem_data *bdata;             /* used during kernel initialization */
    #endif
    #ifdef CONFIG_MEMORY_HOTPLUG
        /*
         * Must be held any time you expect node_start_pfn, node_present_pages
         * or node_spanned_pages stay constant. Holding this will also
         * guarantee that any pfn_valid() stays that way.
         *
         * Nests above zone->lock and zone->size_seqlock.
         */
        spinlock_t node_size_lock;
    #endif
        unsigned long node_start_pfn;           /* index of the first page frame in this node */
        unsigned long node_present_pages;       /* total number of physical pages, not including holes */
        unsigned long node_spanned_pages;       /* total size of physical page
                                                   range, including holes */
        int node_id;                            /* node identifier */
        wait_queue_head_t kswapd_wait;
        struct task_struct *kswapd;
        int kswapd_max_order;                   /* log2 of the size of the free blocks kswapd should create */
    } pg_data_t;

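Note: all of the data structures above are used to manage physical memory.

3. Memory zones

Data structure definitions:

zone: a memory zone of RAM (a region managed by the kernel)

Function definitions:

page_zone(): takes the address of a page descriptor, reads the high-order bits of its flags field, and looks up the zone_table array to find the corresponding zone descriptor

Page frames look interchangeable, but hardware imposes constraints:

** ISA DMA processors can address only the first 16 MB of RAM

** On 32-bit machines with a large amount of RAM, the CPU cannot address all physical memory directly, because the linear address space is too small

To understand this we need the concept of high memory; see: High Memory.

On the 80x86 architecture, RAM is divided into three zones: ZONE_DMA, ZONE_NORMAL, and ZONE_HIGHMEM. Each zone has its own descriptor, with the following fields: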

    struct zone {
        /* Fields commonly accessed by the page allocator */

        /* zone watermarks, access with *_wmark_pages(zone) macros */
        unsigned long watermark[NR_WMARK];

        /*
         * When free pages are below this point, additional steps are taken
         * when reading the number of free pages to avoid per-cpu counter
         * drift allowing watermarks to be breached
         */
        unsigned long percpu_drift_mark;

        /*
         * We don't know if the memory that we're going to allocate will be freeable
         * or/and it will be released eventually, so to avoid totally wasting several
         * GB of ram we must reserve some of the lower zone memory (otherwise we risk
         * to run OOM on the lower zones despite there's tons of freeable ram
         * on the higher zones). This array is recalculated at runtime if the
         * sysctl_lowmem_reserve_ratio sysctl changes.
         */
        unsigned long lowmem_reserve[MAX_NR_ZONES];

    #ifdef CONFIG_NUMA
        int node;
        /*
         * zone reclaim becomes active if more unmapped pages exist.
         */
        unsigned long min_unmapped_pages;
        unsigned long min_slab_pages;
    #endif
        struct per_cpu_pageset __percpu *pageset;
        /*
         * free areas of different sizes
         */
        spinlock_t lock;
        int all_unreclaimable;          /* All pages pinned */
    #ifdef CONFIG_MEMORY_HOTPLUG
        /* see spanned/present_pages for more description */
        seqlock_t span_seqlock;
    #endif
        struct free_area free_area[MAX_ORDER];

    #ifndef CONFIG_SPARSEMEM
        /*
         * Flags for a pageblock_nr_pages block. See pageblock-flags.h.
         * In SPARSEMEM, this map is stored in struct mem_section
         */
        unsigned long *pageblock_flags;
    #endif /* CONFIG_SPARSEMEM */

        ZONE_PADDING(_pad1_)

        /* Fields commonly accessed by the page reclaim scanner */
        spinlock_t lru_lock;
        struct zone_lru {
            struct list_head list;
        } lru[NR_LRU_LISTS];

        struct zone_reclaim_stat reclaim_stat;

        unsigned long pages_scanned;    /* since last reclaim */
        unsigned long flags;            /* zone flags, see below */

        /* Zone statistics */
        atomic_long_t vm_stat[NR_VM_ZONE_STAT_ITEMS];

        /*
         * prev_priority holds the scanning priority for this zone. It is
         * defined as the scanning priority at which we achieved our reclaim
         * target at the previous try_to_free_pages() or balance_pgdat()
         * invocation.
         *
         * We use prev_priority as a measure of how much stress page reclaim is
         * under - it drives the swappiness decision: whether to unmap mapped
         * pages.
         *
         * Access to both this field is quite racy even on uniprocessor. But
         * it is expected to average out OK.
         */
        int prev_priority;

        /*
         * The target ratio of ACTIVE_ANON to INACTIVE_ANON pages on
         * this zone's LRU. Maintained by the pageout code.
         */
        unsigned int inactive_ratio;

        ZONE_PADDING(_pad2_)
        /* Rarely used or read-mostly fields */

        /*
         * wait_table -- the array holding the hash table
         * wait_table_hash_nr_entries -- the size of the hash table array
         * wait_table_bits -- wait_table_size == (1 << wait_table_bits)
         *
         * The purpose of all these is to keep track of the people
         * waiting for a page to become available and make them
         * runnable again when possible. The trouble is that this
         * consumes a lot of space, especially when so few things
         * wait on pages at a given time. So instead of using
         * per-page waitqueues, we use a waitqueue hash table.
         *
         * The bucket discipline is to sleep on the same queue when
         * colliding and wake all in that wait queue when removing.
         * When something wakes, it must check to be sure its page is
         * truly available, a la thundering herd. The cost of a
         * collision is great, but given the expected load of the
         * table, they should be so rare as to be outweighed by the
         * benefits from the saved space.
         */
        wait_queue_head_t *wait_table;
        unsigned long wait_table_hash_nr_entries;
        unsigned long wait_table_bits;

        /*
         * Discontig memory support fields.
         */
        struct pglist_data *zone_pgdat;
        /* zone_start_pfn == zone_start_paddr >> PAGE_SHIFT */
        unsigned long zone_start_pfn;

        /*
         * zone_start_pfn, spanned_pages and present_pages are all
         * protected by span_seqlock. It is a seqlock because it has
         * to be read outside of zone->lock, and it is done in the main
         * allocator path. But, it is written quite infrequently.
         *
         * The lock is declared along with zone->lock because it is
         * frequently read in proximity to zone->lock. It's good to
         * give them a chance of being in the same cacheline.
         */
        unsigned long spanned_pages;    /* total size, including holes */
        unsigned long present_pages;    /* amount of memory (excluding holes) */

        /*
         * rarely used fields:
         */
        const char *name;
    } ____cacheline_internodealigned_in_smp;

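Many of the structures in a zone exist for page frame reclaiming. Every page descriptor carries links to its memory node and to the zone within that node. To save space, these links are not stored as ordinary pointers; they are encoded as indices in the high-order bits of the flags field.

page_zone() takes the address of a page descriptor, reads the high-order bits of its flags field, and looks up the zone_table array to find the address of the corresponding zone descriptor. At boot, that array is initialized with the addresses of all zone descriptors of all memory nodes. (zone_table belongs to older kernels such as 2.6.11; 2.6.34 takes the node and zone indices from flags and uses them to index NODE_DATA(nid)->node_zones[].)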

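The zonelist data structure helps the kernel specify the preferred zones for a memory allocation request. It is an array of pointers to zone descriptors, ordered by preference.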

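4. The pool of reserved page frames

The amount of reserved memory, in kilobytes, is stored in the min_free_kbytes variable; it is taken from ZONE_DMA and ZONE_NORMAL in proportion to their sizes. The pages_min field of the zone descriptor stores the number of page frames reserved in that zone. (Note: pages_min exists in older kernels such as 2.6.11. In 2.6.34 the field is gone, and the per-zone minimum is kept in the watermark[] array of struct zone.)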

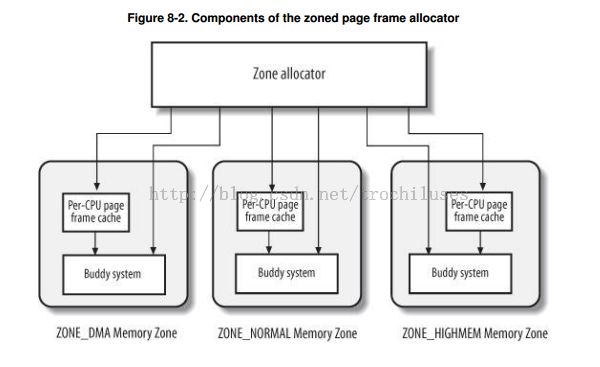

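5. The zoned page frame allocator

The zoned page frame allocator is the kernel subsystem that handles allocation requests for groups of contiguous page frames. It consists of the following parts:

1) Requesting and releasing page frames

Page frames are requested through six slightly different functions and macros. alloc_pages() and alloc_page() return the address of the page descriptor of the first allocated frame, or NULL on failure. The __get_free_pages() family returns the linear address of the first allocated page (in kernel space, linear addresses and page frames have a simple correspondence), or 0 on failure.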

    static inline struct page *
    alloc_pages(gfp_t gfp_mask, unsigned int order)
    {
        return alloc_pages_current(gfp_mask, order);
    }

    #define alloc_page(gfp_mask) alloc_pages(gfp_mask, 0)

    unsigned long __get_free_pages(gfp_t gfp_mask, unsigned int order)
    {
        struct page *page;

        /*
         * __get_free_pages() returns a 32-bit address, which cannot represent
         * a highmem page
         */
        VM_BUG_ON((gfp_mask & __GFP_HIGHMEM) != 0);

        page = alloc_pages(gfp_mask, order);
        if (!page)
            return 0;
        return (unsigned long) page_address(page);
    }

    #define __get_free_page(gfp_mask) \
            __get_free_pages((gfp_mask), 0)

    unsigned long get_zeroed_page(gfp_t gfp_mask)
    {
        return __get_free_pages(gfp_mask | __GFP_ZERO, 0);
    }

    #define __get_dma_pages(gfp_mask, order) \
            __get_free_pages((gfp_mask) | GFP_DMA, (order))

Here gfp_mask is a set of flags that specifies how to look for free page frames. The flags are:

    #define __GFP_DMA       ((__force gfp_t)0x01u)
    #define __GFP_HIGHMEM   ((__force gfp_t)0x02u)
    #define __GFP_DMA32     ((__force gfp_t)0x04u)
    #define __GFP_MOVABLE   ((__force gfp_t)0x08u)  /* Page is movable */
    #define GFP_ZONEMASK    (__GFP_DMA|__GFP_HIGHMEM|__GFP_DMA32|__GFP_MOVABLE)
    /*
     * Action modifiers - doesn't change the zoning
     *
     * __GFP_REPEAT: Try hard to allocate the memory, but the allocation attempt
     * _might_ fail. This depends upon the particular VM implementation.
     *
     * __GFP_NOFAIL: The VM implementation _must_ retry infinitely: the caller
     * cannot handle allocation failures. This modifier is deprecated and no new
     * users should be added.
     *
     * __GFP_NORETRY: The VM implementation must not retry indefinitely.
     *
     * __GFP_MOVABLE: Flag that this page will be movable by the page migration
     * mechanism or reclaimed
     */
    #define __GFP_WAIT      ((__force gfp_t)0x10u)  /* Can wait and reschedule? */
    #define __GFP_HIGH      ((__force gfp_t)0x20u)  /* Should access emergency pools? */
    #define __GFP_IO        ((__force gfp_t)0x40u)  /* Can start physical IO? */
    #define __GFP_FS        ((__force gfp_t)0x80u)  /* Can call down to low-level FS? */
    #define __GFP_COLD      ((__force gfp_t)0x100u) /* Cache-cold page required */
    #define __GFP_NOWARN    ((__force gfp_t)0x200u) /* Suppress page allocation failure warning */
    #define __GFP_REPEAT    ((__force gfp_t)0x400u) /* See above */
    #define __GFP_NOFAIL    ((__force gfp_t)0x800u) /* See above */
    #define __GFP_NORETRY   ((__force gfp_t)0x1000u)/* See above */
    #define __GFP_COMP      ((__force gfp_t)0x4000u)/* Add compound page metadata */
    #define __GFP_ZERO      ((__force gfp_t)0x8000u)/* Return zeroed page on success */
    #define __GFP_NOMEMALLOC ((__force gfp_t)0x10000u) /* Don't use emergency reserves */
    #define __GFP_HARDWALL  ((__force gfp_t)0x20000u) /* Enforce hardwall cpuset memory allocs */
    #define __GFP_THISNODE  ((__force gfp_t)0x40000u)/* No fallback, no policies */
    #define __GFP_RECLAIMABLE ((__force gfp_t)0x80000u) /* Page is reclaimable */

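In practice, Linux uses predefined combinations of these flag values; the names of those combinations are the arguments you pass to the six page frame functions. When looking for free page frames, the frames have to come from some zone. But which zone should be tried first? The node_zonelists field of the contig_page_data node descriptor is an array of lists of zone descriptors, and it settles this allocation order.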

Any of the following four functions and macros can release page frames.

    void __free_pages(struct page *page, unsigned int order)
    {
        if (put_page_testzero(page)) {
            if (order == 0)
                free_hot_cold_page(page, 0);
            else
                __free_pages_ok(page, order);
        }
    }

    void free_pages(unsigned long addr, unsigned int order)
    {
        if (addr != 0) {
            VM_BUG_ON(!virt_addr_valid((void *)addr));
            __free_pages(virt_to_page((void *)addr), order);
        }
    }

    #define __free_page(page) __free_pages((page), 0)
    #define free_page(addr) free_pages((addr),0)