Learning OpenCV by Example (20): Corner Detection Algorithms

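This installment covers algorithms for detecting corners and feature points: Harris corner detection, Shi-Tomasi corner detection, FAST corner detection, the scale-invariant SURF and SIFT detectors, and feature point description. These are classic algorithms, and the theory behind them is fairly involved. If you only want to use them to get something working, calling a few simple OpenCV functions is enough. If you want to learn the theory to support your own later research, or to understand what changing a function's parameters does, there is no way around working through the principles. I have spent quite a while on the theory myself and still do not understand all of it, so here I only list the blog posts I found most useful, together with code I compiled myself and the results it produced, as a record of the process and a starting point for further study.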

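1. Theory

Harris: OpenCV Study Notes (5): Harris Corner Detection

Shi-Tomasi: [OpenCV] Corner Detection: Harris Corners and Shi-Tomasi Corner Detection

FAST: OpenCV Study Notes (46): FAST Feature Point Detection (features2D)

SIFT: [OpenCV] SIFT Principles and Source Code Analysis

SIFT: Feature Point Detection Study, Part 1 (the SIFT algorithm)

SURF: Feature Point Detection Study, Part 2 (the SURF algorithm)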

2. Code

The full code is fairly long, so only excerpts are shown here. The complete source can be downloaded from the link below:

http://download.csdn.net/detail/chenjiazhou12/7129327

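① Harris corner detection

// Compute the corner response function and the local-maximum mask used for non-maximum suppression
void detect(const Mat &image){
    // OpenCV's built-in corner response function
    cornerHarris(image, cornerStrength, neighbourhood, aperture, k);
    // Find the minimum and maximum response values
    double minStrength;
    minMaxLoc(cornerStrength, &minStrength, &maxStrength);
    // Dilate with the default 3x3 kernel. After dilation a local maximum keeps its value,
    // while every other pixel is replaced by the maximum of its 3x3 neighborhood
    Mat dilated;
    dilate(cornerStrength, dilated, cv::Mat());
    // Compare with the original response image: only the pixels whose value is unchanged
    // are local maxima, and they are stored in localMax
    compare(cornerStrength, dilated, localMax, cv::CMP_EQ);
}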
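
To make the dilate-then-compare trick concrete, here is a minimal sketch in plain C++ without OpenCV. The `Image` struct and the functions `dilate3x3` and `localMaxMask` are hypothetical names of my own, and pixels outside the image are simply skipped, which is an assumption about border handling rather than a description of what `dilate` does internally.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a single-channel float image (row-major storage).
struct Image {
    int rows;
    int cols;
    std::vector<float> data;
    float at(int r, int c) const {
        return data[static_cast<std::size_t>(r) * cols + c];
    }
};

// 3x3 grayscale dilation: every output pixel becomes the maximum of its
// 3x3 neighborhood. Neighbors outside the image are ignored.
Image dilate3x3(const Image& src) {
    Image dst = src;
    for (int r = 0; r < src.rows; ++r) {
        for (int c = 0; c < src.cols; ++c) {
            float best = src.at(r, c);
            for (int dr = -1; dr <= 1; ++dr) {
                for (int dc = -1; dc <= 1; ++dc) {
                    const int rr = r + dr;
                    const int cc = c + dc;
                    if (rr >= 0 && rr < src.rows && cc >= 0 && cc < src.cols) {
                        best = std::max(best, src.at(rr, cc));
                    }
                }
            }
            dst.data[static_cast<std::size_t>(r) * src.cols + c] = best;
        }
    }
    return dst;
}

// Same idea as compare(strength, dilated, mask, CMP_EQ):
// 255 where the pixel survived dilation unchanged (a local maximum), else 0.
std::vector<unsigned char> localMaxMask(const Image& strength) {
    assert(strength.data.size() ==
           static_cast<std::size_t>(strength.rows) * static_cast<std::size_t>(strength.cols));
    const Image dilated = dilate3x3(strength);
    std::vector<unsigned char> mask(strength.data.size(), 0);
    for (std::size_t i = 0; i < mask.size(); ++i) {
        mask[i] = (strength.data[i] == dilated.data[i]) ? 255 : 0;
    }
    return mask;
}
```

Note that every pixel of a perfectly flat region also equals its own dilation, so this mask alone marks flat regions as maxima too. That is why the response is thresholded as well before corners are reported.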

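cornerHarris

Purpose: Harris corner detection

Signature:

void cornerHarris(InputArray src, OutputArray dst, int blockSize, int apertureSize, double k, int borderType=BORDER_DEFAULT )

src: input single-channel image, 8-bit or 32-bit floating point

dst: image that stores the Harris detector responses; 32-bit single-channel, same size as src

blockSize: neighborhood size (see the discussion of cornerEigenValsAndVecs())

apertureSize: aperture size of the Sobel operator used to compute the derivatives

k: free parameter of the Harris detector

borderType: pixel extrapolation method used at the image border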
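
The role of k is easiest to see in the standard Harris response, R = det(M) - k * trace(M)^2, where M = [a b; b c] is the 2x2 gradient covariance matrix summed over the blockSize neighborhood. The sketch below only evaluates that formula for one pixel; the function name `harrisResponse` is my own, and computing a, b and c from the image derivatives is assumed to have happened already.

```cpp
#include <cassert>

// Harris response for one pixel.
// a = sum(Ix*Ix), b = sum(Ix*Iy), c = sum(Iy*Iy) over the neighborhood.
// k is the free parameter of the detector (0.04 is a commonly used value).
double harrisResponse(double a, double b, double c, double k) {
    assert(a >= 0.0 && c >= 0.0);  // sums of squares cannot be negative
    const double det = a * c - b * b;
    const double trace = a + c;
    return det - k * trace * trace;
}
```

With k = 0.04, a point with strong gradients in both directions (a = c = 2, b = 0) gets a positive response, while an edge (a = 2, c = 0) gets a negative one. A larger k penalizes edge-like points more heavily.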

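compare

Purpose: element-wise comparison of two arrays, or of an array and a scalar

Signature:

void compare(InputArray src1, InputArray src2, OutputArray dst, int cmpop)

src1: first input array or scalar; if it is an array, it must be single-channel

src2: second input array or scalar; if it is an array, it must be single-channel

dst: output array with the same size as the input arrays and type CV_8UC1

cmpop: flag that specifies the relation to check between the elements

CMP_EQ: src1 is equal to src2

CMP_GT: src1 is greater than src2

CMP_GE: src1 is greater than or equal to src2

CMP_LT: src1 is less than src2

CMP_LE: src1 is less than or equal to src2

CMP_NE: src1 is not equal to src2

Where the comparison is true, the corresponding element of the output array is set to 255; otherwise it is set to 0.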

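// Build the corner map
Mat getCornerMap(double qualityLevel) {
    Mat cornerMap;
    // Derive the threshold from the maximum corner response
    thresholdvalue = qualityLevel * maxStrength;
    threshold(cornerStrength, cornerTh, thresholdvalue, 255, cv::THRESH_BINARY);
    // Convert to an 8-bit image
    cornerTh.convertTo(cornerMap, CV_8U);
    // AND with the local-maximum mask so that only thresholded local maxima remain,
    // which completes the non-maximum suppression
    bitwise_and(cornerMap, localMax, cornerMap);
    return cornerMap;
}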
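
The same threshold-then-AND logic can be written out by hand. This is only a sketch over flat arrays, not the code from the download: the function name `cornerMapFromStrength` is hypothetical, and `localMax` is assumed to already hold 255 at local maxima and 0 elsewhere.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Keep a pixel only if its response exceeds qualityLevel * max response
// (the THRESH_BINARY step) and it is also set in the local-maximum mask
// (the bitwise AND step).
std::vector<unsigned char> cornerMapFromStrength(const std::vector<float>& strength,
                                                 const std::vector<unsigned char>& localMax,
                                                 double qualityLevel) {
    assert(strength.size() == localMax.size());
    std::vector<unsigned char> out(strength.size(), 0);
    if (strength.empty()) {
        return out;
    }
    const float maxStrength = *std::max_element(strength.begin(), strength.end());
    const double thresh = qualityLevel * maxStrength;
    for (std::size_t i = 0; i < strength.size(); ++i) {
        const unsigned char above = (strength[i] > thresh) ? 255 : 0;
        out[i] = static_cast<unsigned char>(above & localMax[i]);
    }
    return out;
}
```

Because both inputs only ever contain 0 or 255, the bitwise AND behaves like a logical AND of the two conditions.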

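bitwise_and

Purpose: per-element bitwise AND of two arrays, or of an array and a scalar

Signature:

void bitwise_and(InputArray src1, InputArray src2, OutputArray dst, InputArray mask=noArray())

src1: first input array or scalar

src2: second input array or scalar

dst: output array with the same size and type as the input arrays

mask: optional operation mask, an 8-bit single-channel array that specifies which elements of the output array may be changed

The operation is:

dst(I) = src1(I) AND src2(I), if mask(I) != 0

For multi-channel arrays, each channel is processed independently.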

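② Shi-Tomasi detection

void goodFeaturesDetect()
{
    // Improved Harris corner detection (Shi-Tomasi)
    vector<Point> corners;
    goodFeaturesToTrack(image, corners,
        200,   // maximum number of corners to return
        0.01,  // quality level: the threshold is 0.01*max(min(e1,e2)),
               // where e1 and e2 are the eigenvalues of the Harris matrix
        10);   // minimum allowed distance between two corners
    harris().drawOnImage(image, corners); // mark the corners
    imshow(winname, image);
}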

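goodFeaturesToTrack

Purpose: finds the strong corners in an image

Signature:

void goodFeaturesToTrack(InputArray image, OutputArray corners, int maxCorners, double qualityLevel, double minDistance, InputArray mask=noArray(), int blockSize=3, bool useHarrisDetector=false, double k=0.04 )

image: input single-channel image, 8-bit or 32-bit floating point

corners: output vector of detected corners

maxCorners: maximum number of corners to return. If fewer corners are detected, all of them are returned; if more are detected, only the strongest are kept

qualityLevel: minimal accepted corner quality, given as a multiplier of the best quality measure in the image (the largest minimal eigenvalue, or the largest Harris response). Corners whose measure is below qualityLevel times the best value are rejected

minDistance: minimum Euclidean distance allowed between two returned corners

mask: optional region of interest. If it is empty, the whole image is searched. Otherwise it must be a single-channel 8-bit image of the same size as the input, and corners are computed only where the mask is non-zero

blockSize: neighborhood size (see the discussion of cornerEigenValsAndVecs())

useHarrisDetector: whether to use the Harris detector (see the discussion of cornerHarris() and cornerMinEigenVal())

k: free parameter of the Harris detector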
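
When useHarrisDetector is false, the quality measure is the smaller eigenvalue of the 2x2 gradient covariance matrix M = [a b; b c], which is the min(e1,e2) mentioned in the code comment above. For a symmetric 2x2 matrix it has a closed form, sketched below. The function name `minEigenvalue` is my own, not an OpenCV call.

```cpp
#include <cassert>
#include <cmath>

// Smaller eigenvalue of the symmetric matrix [a b; b c]:
// lambda_min = (a + c) / 2 - sqrt(((a - c) / 2)^2 + b^2)
double minEigenvalue(double a, double b, double c) {
    const double halfTrace = 0.5 * (a + c);
    const double halfDiff = 0.5 * (a - c);
    const double root = std::sqrt(halfDiff * halfDiff + b * b);
    return halfTrace - root;
}
```

A pixel then counts as a candidate corner when its value is at least qualityLevel times the largest such value found in the image. On an edge one eigenvalue is close to zero, so the minimum is small and the point is rejected.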

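1. The function computes the corner quality measure at every pixel of the source image using cornerMinEigenVal() or cornerHarris()

2. Non-maximum suppression is applied to the detected corners (only the local maxima in a 3×3 neighborhood are kept)

3. The detected corners are thresholded: those below the threshold are rejected

4. The remaining corners are sorted by quality in descending order

5. Every corner that lies closer than minDistance to a stronger corner is removed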
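
Steps 3 to 5 are plain bookkeeping and can be sketched without OpenCV. The code below assumes that steps 1 and 2 have already produced a list of candidate corners with a quality score. The `Corner` struct and the function `selectCorners` are hypothetical names, and the greedy pruning is my reading of the description above rather than a copy of the library code.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical candidate corner: position plus quality measure.
struct Corner {
    float x;
    float y;
    float score;
};

// maxCorners == 0 means "no limit" in this sketch.
std::vector<Corner> selectCorners(std::vector<Corner> candidates,
                                  double qualityLevel,
                                  double minDistance,
                                  std::size_t maxCorners) {
    assert(qualityLevel >= 0.0 && minDistance >= 0.0);
    if (candidates.empty()) {
        return {};
    }
    // Step 3: reject everything below qualityLevel * best score.
    float best = candidates.front().score;
    for (const Corner& c : candidates) {
        best = std::max(best, c.score);
    }
    const double thresh = qualityLevel * best;
    candidates.erase(std::remove_if(candidates.begin(), candidates.end(),
                                    [thresh](const Corner& c) { return c.score < thresh; }),
                     candidates.end());
    // Step 4: strongest corners first.
    std::sort(candidates.begin(), candidates.end(),
              [](const Corner& l, const Corner& r) { return l.score > r.score; });
    // Step 5: keep a corner only if no stronger kept corner is closer than minDistance.
    std::vector<Corner> kept;
    for (const Corner& c : candidates) {
        bool farEnough = true;
        for (const Corner& k : kept) {
            const double dx = c.x - k.x;
            const double dy = c.y - k.y;
            if (dx * dx + dy * dy < minDistance * minDistance) {
                farEnough = false;
                break;
            }
        }
        if (farEnough) {
            kept.push_back(c);
        }
        if (maxCorners > 0 && kept.size() >= maxCorners) {
            break;
        }
    }
    return kept;
}
```

Sorting before pruning is what makes the distance test favor strong corners: by the time a weak corner is examined, every stronger corner near it has already been kept.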

③ FAST detection

void fastDetect()
{
    // FAST corner detection
    vector<KeyPoint> keypoints;
    FastFeatureDetector fast(40, true);
    fast.detect(image, keypoints);
    drawKeypoints(image, keypoints, image, Scalar::all(255), DrawMatchesFlags::DRAW_OVER_OUTIMG);
    imshow(winname, image);
}

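FastFeatureDetector derives from the FeatureDetector class.

Constructor:

FastFeatureDetector( int threshold=1, bool nonmaxSuppression=true );

threshold: detection threshold on the intensity difference between the center pixel and the pixels on the circle around it

nonmaxSuppression: whether to apply non-maximum suppression to the detected corners

void FeatureDetector::detect(const Mat& image, vector<KeyPoint>& keypoints, const Mat& mask=Mat() ) const

void FeatureDetector::detect(const vector<Mat>& images, vector<vector<KeyPoint>>& keypoints, const vector<Mat>& masks=vector<Mat>() ) const

image: input image

images: input image set

keypoints: the detected keypoints. In the second overload, keypoints[i] is the set of keypoints detected in images[i]

mask: optional mask that specifies where to look for keypoints. It must be an 8-bit matrix with non-zero values in the region of interest

masks: one mask per input image; masks[i] corresponds to images[i]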
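
To show what the threshold parameter controls, here is a sketch of the FAST segment test on a single pixel. It assumes the common variant with a 16-pixel circle of radius 3 and a required arc of 9 contiguous pixels. The function `isFastCorner` is a hypothetical helper of mine, it is not how OpenCV implements the detector, and it leaves out the non-maximum suppression step.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Returns true if at least arcLength contiguous pixels on the radius-3 circle
// around (r, c) are all brighter than p + threshold or all darker than
// p - threshold, where p is the intensity of the center pixel.
bool isFastCorner(const std::vector<unsigned char>& img, int rows, int cols,
                  int r, int c, int threshold, int arcLength = 9) {
    // Offsets of the 16 circle pixels, in clockwise order starting at the top.
    static const int dx[16] = {0, 1, 2, 3, 3, 3, 2, 1, 0, -1, -2, -3, -3, -3, -2, -1};
    static const int dy[16] = {-3, -3, -2, -1, 0, 1, 2, 3, 3, 3, 2, 1, 0, -1, -2, -3};
    assert(img.size() == static_cast<std::size_t>(rows) * static_cast<std::size_t>(cols));
    assert(arcLength >= 1 && arcLength <= 16);
    // The circle must fit inside the image.
    if (r < 3 || c < 3 || r >= rows - 3 || c >= cols - 3) {
        return false;
    }
    const int p = img[static_cast<std::size_t>(r) * cols + c];
    int brighter = 0;
    int darker = 0;
    // Visit 16 + arcLength - 1 positions so that arcs crossing the start
    // of the circle are counted as contiguous.
    for (int i = 0; i < 16 + arcLength - 1; ++i) {
        const int rr = r + dy[i % 16];
        const int cc = c + dx[i % 16];
        const int v = img[static_cast<std::size_t>(rr) * cols + cc];
        brighter = (v > p + threshold) ? brighter + 1 : 0;
        darker = (v < p - threshold) ? darker + 1 : 0;
        if (brighter >= arcLength || darker >= arcLength) {
            return true;
        }
    }
    return false;
}
```

Raising the threshold, as in FastFeatureDetector fast(40, true) above, requires a stronger contrast between the center and the arc, so fewer pixels pass the test.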

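drawKeypoints

Purpose: draws keypoints

Signature:

void drawKeypoints(const Mat& image, const vector<KeyPoint>& keypoints, Mat& outImg, const Scalar& color=Scalar::all(-1), int flags=DrawMatchesFlags::DEFAULT )

image: source image

keypoints: keypoints detected in the source image

outImg: output image; its content depends on the value of flags

color: color of the keypoints

flags: flags that control how the features are drawn; the possible values are defined by DrawMatchesFlags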

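struct DrawMatchesFlags
{
    enum
    {
        DEFAULT = 0, // The output image matrix is created; the two source images,
                     // the matches and the single keypoints are drawn into it.
                     // For each keypoint only the center point is drawn,
                     // without its radius and orientation
        DRAW_OVER_OUTIMG = 1, // The output image is not created; the matches are drawn
                              // over the existing content of the output image
        NOT_DRAW_SINGLE_POINTS = 2, // Single (unmatched) keypoints are not drawn
        DRAW_RICH_KEYPOINTS = 4 // For each keypoint, its radius and orientation are drawn
    };
};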

④ SIFT detection

void siftDetect()
{
    vector<KeyPoint> keypoints;
    SiftFeatureDetector sift(0.03, 10);
    sift.detect(image, keypoints);
    drawKeypoints(image, keypoints, image, Scalar(255,255,255), DrawMatchesFlags::DRAW_RICH_KEYPOINTS);
    imshow(winname, image);
}

SiftFeatureDetector

Signature:

SiftFeatureDetector( double threshold, double edgeThreshold,
    int nOctaves=SIFT::CommonParams::DEFAULT_NOCTAVES,
    int nOctaveLayers=SIFT::CommonParams::DEFAULT_NOCTAVE_LAYERS,
    int firstOctave=SIFT::CommonParams::DEFAULT_FIRST_OCTAVE,
    int angleMode=SIFT::CommonParams::FIRST_ANGLE );

threshold: threshold used to filter out weak feature points. The larger the threshold, the fewer feature points are returned.

edgeThreshold: threshold used to filter out edge-like responses. The larger the edgeThreshold, the more feature points are kept.
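
Why a larger edgeThreshold keeps more points follows from the edge test in the SIFT paper, which bounds the ratio of principal curvatures: a candidate is kept when trace(H)^2 / det(H) < (r + 1)^2 / r, with H the 2x2 Hessian of the difference-of-Gaussians image at the candidate. The sketch below assumes edgeThreshold plays the role of r. The function name `passesEdgeTest` is my own.

```cpp
#include <cassert>

// dxx, dyy, dxy: second derivatives of the difference-of-Gaussians image.
// Returns true when the point is not edge-like for curvature ratio limit r.
bool passesEdgeTest(double dxx, double dyy, double dxy, double edgeThreshold) {
    assert(edgeThreshold > 0.0);
    const double trace = dxx + dyy;
    const double det = dxx * dyy - dxy * dxy;
    if (det <= 0.0) {
        return false;  // curvatures of opposite sign: not a stable extremum
    }
    const double r = edgeThreshold;
    // Same inequality as trace^2 / det < (r + 1)^2 / r, without the division.
    return trace * trace * r < (r + 1.0) * (r + 1.0) * det;
}
```

For a strongly elongated response such as dxx = 100, dyy = 1 the test fails at r = 10 but passes at r = 200, which matches the rule of thumb above.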

⑤ SURF detection

void surfDetect()
{
    vector<KeyPoint> keypoints_1, keypoints_2;
    Mat descriptors_1, descriptors_2;
    //-- Step 1: Detect the keypoints using SURF Detector
    SurfFeatureDetector surf(2500);
    surf.detect(image, keypoints_1);
    surf.detect(image2, keypoints_2);
    //-- Step 2: Calculate descriptors (feature vectors)
    SurfDescriptorExtractor extractor;
    extractor.compute(image, keypoints_1, descriptors_1);
    extractor.compute(image2, keypoints_2, descriptors_2);
    //-- Step 3: Matching descriptor vectors with a brute force matcher
    BruteForceMatcher< L2<float> > matcher;
    std::vector< DMatch > matches;
    matcher.match(descriptors_1, descriptors_2, matches);
    //-- Keep only the 25 matches with the smallest distance; the size check
    //-- avoids running past the end when fewer matches were found
    if (matches.size() > 25) {
        nth_element(matches.begin(), matches.begin()+24, matches.end());
        matches.erase(matches.begin()+25, matches.end());
    }
    //-- Draw matches
    Mat img_matches;
    drawMatches(image, keypoints_1, image2, keypoints_2, matches, img_matches, Scalar(255,255,255));
    drawKeypoints(image, keypoints_1, image, Scalar(255,255,255), DrawMatchesFlags::DRAW_RICH_KEYPOINTS);
    //-- Show detected matches
    imshow("Matches", img_matches);
    imshow(winname, image);
}

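SurfFeatureDetector

Constructor:

SurfFeatureDetector( double hessianThreshold = 400., int octaves = 3, int octaveLayers = 4 );

hessianThreshold: threshold on the Hessian response; only features whose response exceeds it are kept

octaves: number of pyramid octaves

octaveLayers: number of layers within each octave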
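
As a rough illustration of what hessianThreshold is compared against: SURF approximates the determinant of the Hessian from box-filter responses Dxx, Dyy and Dxy as Dxx*Dyy - (0.9*Dxy)^2. The sketch below assumes those three responses are already computed and normalized. Both function names are hypothetical, and the real detector also searches for maxima across scales, which is not shown.

```cpp
#include <cassert>

// Approximate determinant of the Hessian as used by SURF.
// The factor 0.81 = 0.9^2 compensates for the box-filter approximation.
double surfHessianResponse(double dxx, double dyy, double dxy) {
    return dxx * dyy - 0.81 * dxy * dxy;
}

// A candidate survives only if its response exceeds the threshold.
bool passesHessianThreshold(double dxx, double dyy, double dxy, double hessianThreshold) {
    assert(hessianThreshold >= 0.0);
    return surfHessianResponse(dxx, dyy, dxy) > hessianThreshold;
}
```

This is why SurfFeatureDetector surf(2500) in the code above returns far fewer keypoints than the default of 400 would.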

SurfDescriptorExtractor

Purpose: a wrapper class for computing feature descriptors; it constructs a SURF descriptor extractor

compute

Purpose: computes the descriptors for the keypoints detected in an image (first overload) or in an image set (second overload)

void DescriptorExtractor::compute(const Mat& image, vector<KeyPoint>& keypoints, Mat& descriptors) const

void DescriptorExtractor::compute(const vector<Mat>& images, vector<vector<KeyPoint>>& keypoints, vector<Mat>& descriptors) const

image: input image

images: input image set

keypoints: input keypoints

descriptors: the computed descriptors

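BruteForceMatcher< L2<float> >

Purpose: brute-force descriptor matching. For each descriptor in the first set, the matcher finds the closest descriptor in the second set. This matcher supports masking the permissible matches between the descriptor sets.

It is a class template; the template argument specifies the distance used for matching (here L2<float>, the Euclidean distance).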
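
Brute-force matching with the L2 distance is short enough to write out in full. The sketch below works on plain vectors instead of cv::Mat descriptors. The `Match` struct is only loosely modeled on DMatch, and `bruteForceMatch` is a hypothetical name, so treat it as an illustration of the idea rather than the library's implementation.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical result record: which train descriptor is closest to a query descriptor.
struct Match {
    int queryIdx;
    int trainIdx;
    float distance;
};

// For every query descriptor, scan all train descriptors and keep the one
// with the smallest Euclidean (L2) distance.
std::vector<Match> bruteForceMatch(const std::vector<std::vector<float>>& query,
                                   const std::vector<std::vector<float>>& train) {
    std::vector<Match> matches;
    for (int qi = 0; qi < static_cast<int>(query.size()); ++qi) {
        int best = -1;
        float bestDist = std::numeric_limits<float>::max();
        for (int ti = 0; ti < static_cast<int>(train.size()); ++ti) {
            assert(query[qi].size() == train[ti].size());
            float sum = 0.0f;
            for (std::size_t k = 0; k < query[qi].size(); ++k) {
                const float d = query[qi][k] - train[ti][k];
                sum += d * d;
            }
            const float dist = std::sqrt(sum);
            if (dist < bestDist) {
                bestDist = dist;
                best = ti;
            }
        }
        if (best >= 0) {
            const Match m = {qi, best, bestDist};
            matches.push_back(m);
        }
    }
    return matches;
}
```

The cost grows with the product of the two set sizes, which is acceptable for a few hundred SURF keypoints per image but is the reason approximate matchers exist for larger sets.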

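DMatch

Purpose: class that stores one match between keypoint descriptors: the query descriptor index, the train descriptor index, the train image index, and the distance between the two descriptors.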

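match

Purpose: finds the best match for each descriptor in the query set.

Signature:

void DescriptorMatcher::match(const Mat& queryDescriptors, const Mat& trainDescriptors, vector<DMatch>& matches, const Mat& mask=Mat() ) const

void DescriptorMatcher::match(const Mat& queryDescriptors, vector<DMatch>& matches, const vector<Mat>& masks=vector<Mat>() )

queryDescriptors: query set of descriptors

trainDescriptors: train set of descriptors, that is, the set that is searched for matches

matches: the resulting matches

mask: mask that specifies the permissible matches between the input query descriptors and the train descriptors

masks: set of masks. Each masks[i] specifies the permissible matches between the input query descriptors and the stored train descriptors from the i-th image

In the second overload, the train descriptors are supplied through DescriptorMatcher::add.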

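nth_element

Purpose: nth_element finds the n-th smallest element and places it at position n, with no larger element before it. Positions are counted from 0, so the 0-th smallest element is the minimum.

erase

Purpose: removes the elements between its first and second arguments (the range [first, last)) and returns an iterator to the element that follows the last removed one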
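
The nth_element plus erase pair is a cheap way to keep the n best items without sorting everything. Here is a small generic sketch; the helper name `keepSmallest` is hypothetical. It works for any type with operator<, and DMatch qualifies because it compares matches by distance.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Keep only the n smallest elements of v, in unspecified order.
template <typename T>
void keepSmallest(std::vector<T>& v, std::size_t n) {
    if (n >= v.size()) {
        return;  // fewer than n elements: nothing to discard
    }
    if (n == 0) {
        v.clear();
        return;
    }
    // After this call, positions [0, n) hold the n smallest elements.
    std::nth_element(v.begin(), v.begin() + (n - 1), v.end());
    v.erase(v.begin() + n, v.end());
}
```

The size check matters: calling nth_element with an iterator beyond end() is undefined behavior, which is what happens if fewer than 25 matches are found and the iterators begin()+24 and begin()+25 are used unconditionally.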

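drawMatches

Purpose: draws the keypoints found in two images together with the matches between them

Signature:

void drawMatches(const Mat& img1, const vector<KeyPoint>& keypoints1, const Mat& img2, const vector<KeyPoint>& keypoints2, const vector<DMatch>& matches1to2, Mat& outImg, const Scalar& matchColor=Scalar::all(-1), const Scalar& singlePointColor=Scalar::all(-1), const vector<char>& matchesMask=vector<char>(), int flags=DrawMatchesFlags::DEFAULT )

img1: first source image

keypoints1: keypoints from the first image

img2: second source image

keypoints2: keypoints from the second image

matches1to2: matches from the first image to the second one

outImg: output image; its content depends on the value of flags

matchColor: color of the match lines; if it is -1, the colors are chosen randomly

singlePointColor: color of single keypoints, that is, keypoints without a match; if it is -1, the colors are chosen randomly

matchesMask: mask that determines which matches are drawn; if it is empty, all matches are drawn

flags: same as the flags of drawKeypoints above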

3. Results

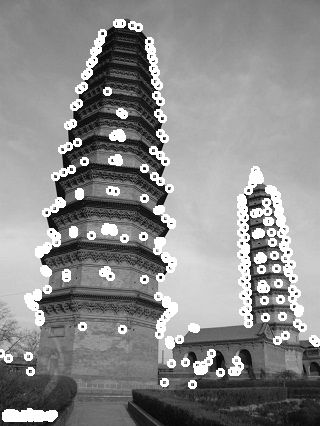

Figure 1: Harris. Figure 2: Shi-Tomasi.

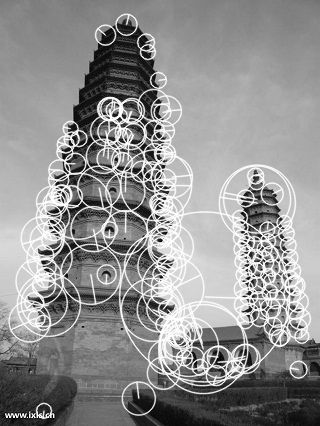

Figure 3: FAST. Figure 4: SIFT.

Figure 5: SURF.

Figure 6: Matching result.

Source code download:

http://download.csdn.net/detail/chenjiazhou12/7129327