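Setting Up a Spark 1.4.0 Cluster

Contents

- Ubuntu 10.04 system settings

- ZooKeeper cluster setup

- Hadoop 2.4.1 cluster setup

- Spark 1.4.0 cluster setup

This guide assumes the Ubuntu operating system is already installed.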

Ubuntu 10.04 settings

1. Host planning

| Host name | IP address | Processes |
|---|---|---|
| SparkMaster | 192.168.1.103 | ResourceManager, DataNode, NodeManager, JournalNode, QuorumPeerMain |
| SparkSlave01 | 192.168.1.101 | ResourceManager, DataNode, NodeManager, JournalNode, QuorumPeerMain, NameNode, DFSZKFailoverController (zkfc) |
| SparkSlave02 | 192.168.1.102 | DataNode, NodeManager, JournalNode, QuorumPeerMain, NameNode, DFSZKFailoverController (zkfc) |

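**Notes:**

1. A Hadoop 2.0 HDFS cluster usually has two NameNodes, one active and one standby. The active NameNode serves client requests. The standby NameNode serves none and only keeps its state synchronized with the active NameNode, so that it can take over quickly if the active one fails.

Hadoop 2.0 officially provides two HDFS HA solutions, one based on NFS and one based on QJM (Quorum Journal Manager). This guide uses the simpler QJM. In this scheme the active and standby NameNodes synchronize metadata through a group of JournalNodes, and a record counts as written once a majority of the JournalNodes have accepted it. An odd number of JournalNodes is usually configured.

A ZooKeeper cluster is also configured here for ZKFC (DFSZKFailoverController) failover: when the active NameNode goes down, the standby NameNode is automatically switched to the active state.

2. Hadoop 2.2.0 still had a single ResourceManager, which was a single point of failure. Hadoop 2.4.1 solves this with two ResourceManagers, one active and one standby, whose states are coordinated by ZooKeeper.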
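To make the "majority of JournalNodes" rule concrete, here is a minimal sketch in shell arithmetic. The function names `quorum_size` and `tolerated_failures` are hypothetical helpers for illustration and are not part of Hadoop.

```shell
# Illustrative only: these helpers are made up for this example.
# A write succeeds once it reaches a strict majority of JournalNodes.
quorum_size() {
  local nodes=$1
  echo $(( nodes / 2 + 1 ))
}

# Number of JournalNodes that may fail while a majority is still reachable.
tolerated_failures() {
  local nodes=$1
  echo $(( nodes - (nodes / 2 + 1) ))
}

echo "3 JournalNodes: quorum $(quorum_size 3), tolerates $(tolerated_failures 3) failure(s)"
echo "4 JournalNodes: quorum $(quorum_size 4), tolerates $(tolerated_failures 4) failure(s)"
```

With these definitions a fourth JournalNode raises the quorum without raising the number of tolerated failures, which is one way to see why an odd count is the usual choice.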

2. Set the host name

Edit the host name with vi /etc/hostname.

3. Set the host IP address

Edit the IP address with vi /etc/network/interfaces.

| Host | Contents of /etc/network/interfaces |
|---|---|
| SparkMaster | auto lo<br>iface lo inet loopback<br>auto eth0<br>iface eth0 inet static<br>address 192.168.1.103<br>netmask 255.255.255.0<br>gateway 192.168.1.1 |
| SparkSlave01 | auto lo<br>iface lo inet loopback<br>auto eth0<br>iface eth0 inet static<br>address 192.168.1.101<br>netmask 255.255.255.0<br>gateway 192.168.1.1 |
| SparkSlave02 | auto lo<br>iface lo inet loopback<br>auto eth0<br>iface eth0 inet static<br>address 192.168.1.102<br>netmask 255.255.255.0<br>gateway 192.168.1.1 |

4. Set the DNS server

OpenSSH and other packages needed for passwordless login are installed over the network, so a DNS server has to be configured on each host.

| Host | Contents of /etc/resolv.conf |
|---|---|
| SparkMaster | domain localdomain<br>search localdomain<br>nameserver 8.8.8.8 |
| SparkSlave01 | domain localdomain<br>search localdomain<br>nameserver 8.8.8.8 |
| SparkSlave02 | domain localdomain<br>search localdomain<br>nameserver 8.8.8.8 |

5. Map host names to IP addresses

| Host | Contents of /etc/hosts |
|---|---|
| SparkMaster | 127.0.0.1 SparkMaster localhost.localdomain localhost<br>192.168.1.101 SparkSlave01<br>192.168.1.102 SparkSlave02<br>192.168.1.103 SparkMaster<br>::1 localhost ip6-localhost ip6-loopback<br>fe00::0 ip6-localnet<br>ff00::0 ip6-mcastprefix<br>ff02::1 ip6-allnodes<br>ff02::2 ip6-allrouters<br>ff02::3 ip6-allhosts |
| SparkSlave01 | 127.0.0.1 SparkSlave01 localhost.localdomain localhost<br>192.168.1.101 SparkSlave01<br>192.168.1.102 SparkSlave02<br>192.168.1.103 SparkMaster<br>::1 localhost ip6-localhost ip6-loopback<br>fe00::0 ip6-localnet<br>ff00::0 ip6-mcastprefix<br>ff02::1 ip6-allnodes<br>ff02::2 ip6-allrouters<br>ff02::3 ip6-allhosts |
| SparkSlave02 | 127.0.0.1 SparkSlave02 localhost.localdomain localhost<br>192.168.1.101 SparkSlave01<br>192.168.1.102 SparkSlave02<br>192.168.1.103 SparkMaster<br>::1 localhost ip6-localhost ip6-loopback<br>fe00::0 ip6-localnet<br>ff00::0 ip6-mcastprefix<br>ff02::1 ip6-allnodes<br>ff02::2 ip6-allrouters<br>ff02::3 ip6-allhosts |

Reboot the machines after completing the steps above.
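After the reboot it can help to confirm that each cluster host name maps to its LAN address. The following is a minimal sketch, assuming a standard hosts-format file. `lookup_ip` is a hypothetical helper name introduced for this example.

```shell
# Illustrative helper (hypothetical name): print the first non-loopback IPv4
# address that a hosts-format file maps to the given host name.
lookup_ip() {
  hosts_file=$1
  name=$2
  awk -v n="$name" '
    /^[[:space:]]*#/ { next }             # skip comment lines
    $1 ~ /^127\./ || $1 ~ /:/ { next }    # skip loopback and IPv6 entries
    { for (i = 2; i <= NF; i++) if ($i == n) { print $1; exit } }
  ' "$hosts_file"
}

# Report the LAN address found in /etc/hosts for every cluster host.
for h in SparkMaster SparkSlave01 SparkSlave02; do
  ip=$(lookup_ip /etc/hosts "$h" 2>/dev/null || true)
  echo "$h -> ${ip:-NOT FOUND}"
done
```

Loopback entries are skipped on purpose, because each host file above also lists the local host name on the 127.0.0.1 line.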

6. Install SSH (run the same commands on all three hosts)

sudo apt-get install openssh-server

Then confirm that the SSH server is running:

ps -e |grep ssh

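7. Set up passwordless login (run the same commands on all three hosts)

Run the command: ssh-keygen -t rsa

This generates two files, id_rsa (the private key) and id_rsa.pub (the public key).

Copy the public key to every machine that should accept passwordless login: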

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

or

ssh-copy-id -i SparkMaster

ssh-copy-id -i SparkSlave02

ssh-copy-id -i SparkSlave01
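The three ssh-copy-id calls can also be written as a loop. This is only a sketch: the `distribute_key` function and the `DRY_RUN` switch are hypothetical names introduced here, and the root account and the default key path ~/.ssh/id_rsa.pub are assumptions. By default the function only prints the commands it would run.

```shell
# Sketch: print (or run) ssh-copy-id for every host given as an argument.
# DRY_RUN is a made-up switch for this example; it defaults to 1 so that
# nothing is executed unless DRY_RUN=0 is set explicitly.
distribute_key() {
  for host in "$@"; do
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "ssh-copy-id -i $HOME/.ssh/id_rsa.pub root@$host"
    else
      ssh-copy-id -i "$HOME/.ssh/id_rsa.pub" "root@$host"
    fi
  done
}

distribute_key SparkMaster SparkSlave01 SparkSlave02
```

Running it with DRY_RUN=0 on each of the three hosts would give every machine passwordless access to the others.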

ZooKeeper cluster setup

This cluster uses ZooKeeper 3.4.5. In the /hadoopLearning/zookeeper-3.4.5/conf directory, rename zoo_sample.cfg to zoo.cfg.

Open it with vi conf/zoo.cfg and enter the following:

# The number of milliseconds of each tick

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

# ZooKeeper data directory

dataDir=/hadoopLearning/zookeeper-3.4.5/zookeeper_data

# the port at which the clients will connect

clientPort=2181

#

# Be sure to read the maintenance section of the

# administrator guide before turning on autopurge.

#

#http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance

#

# The number of snapshots to retain in dataDir

#autopurge.snapRetainCount=3

# Purge task interval in hours

# Set to "0" to disable auto purge feature

#autopurge.purgeInterval=1

server.1=SparkSlave01:2888:3888

server.2=SparkSlave02:2888:3888

server.3=SparkMaster:2888:3888

Create the zookeeper_data directory under /hadoopLearning/zookeeper-3.4.5/.

Then cd zookeeper_data to enter it and run:

touch myid

echo 3 > myid

Copy the installation to the other servers with:

scp -r zookeeper-3.4.5 root@SparkSlave01:/hadoopLearning/

scp -r zookeeper-3.4.5 root@SparkSlave02:/hadoopLearning/

Then go into the zookeeper_data directory on each server and set its id. On SparkSlave01:

echo 1 > myid

On SparkSlave02:

echo 2 > myid
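Each myid value has to match the number in that host's server.N line in zoo.cfg. As a sketch of how that mapping could be derived rather than typed by hand, the hypothetical helper `myid_for` below reads the id for a given host name from the config file. It assumes host names without dots, as used in this guide.

```shell
# Sketch: derive a host's myid from the server.N lines of zoo.cfg.
# myid_for is a hypothetical helper name, not part of ZooKeeper.
myid_for() {
  cfg=$1
  host=$2
  # Split a line such as "server.3=SparkMaster:2888:3888" on '.', '=' and ':'
  # so that field 2 is the id and field 3 is the host name.
  awk -F'[.=:]' -v h="$host" '$1 == "server" && $3 == h { print $2; exit }' "$cfg"
}

# Example usage on a cluster node (paths taken from this guide):
#   cfg=/hadoopLearning/zookeeper-3.4.5/conf/zoo.cfg
#   myid_for "$cfg" "$(hostname)" > /hadoopLearning/zookeeper-3.4.5/zookeeper_data/myid
```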

root@SparkMaster:/hadoopLearning/zookeeper-3.4.5/bin# ./zkServer.sh start

Do the same on the other two machines, then check the status:

root@SparkMaster:/hadoopLearning/zookeeper-3.4.5/bin# ./zkServer.sh status

JMX enabled by default

Using config: /hadoopLearning/zookeeper-3.4.5/bin/../conf/zoo.cfg

Mode: leader

The ZooKeeper cluster is now set up.

Hadoop 2.4.1 cluster setup

Add the Hadoop installation path HADOOP_HOME=/hadoopLearning/hadoop-2.4.1 to the environment variables:

export JAVA_HOME=/hadoopLearning/jdk1.7.0_67

export JRE_HOME=${JAVA_HOME}/jre

export HADOOP_HOME=/hadoopLearning/hadoop-2.4.1

export SCALA_HOME=/hadoopLearning/scala-2.10.4

export ZOOKEEPER_HOME=/hadoopLearning/zookeeper-3.4.5

export CLASS_PATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib

export PATH=${JAVA_HOME}/bin:${HADOOP_HOME}/bin:${HADOOP_HOME}/sbin:${ZOOKEEPER_HOME}/bin:${SCALA_HOME}/bin:/hadoopLearning/idea-IC-141.1532.4/bin:$PATH

Edit hadoop-env.sh:

export JAVA_HOME=/hadoopLearning/jdk1.7.0_67

Edit core-site.xml:

<configuration>

<!-- Set the HDFS nameservice to ns1 -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://ns1</value>

</property>

<!-- Set the Hadoop temporary directory -->

<property>

<name>hadoop.tmp.dir</name>

<value>/hadoopLearning/hadoop-2.4.1/tmp</value>

</property>

<!-- Set the ZooKeeper addresses -->

<property>

<name>ha.zookeeper.quorum</name>

<value>SparkMaster:2181,SparkSlave01:2181,SparkSlave02:2181</value>

</property>

</configuration>

Edit hdfs-site.xml:

<configuration>

<!-- Set the HDFS nameservice to ns1; must match the value in core-site.xml -->

<property>

<name>dfs.nameservices</name>

<value>ns1</value>

</property>

<!-- ns1 has two NameNodes, nn1 and nn2 -->

<property>

<name>dfs.ha.namenodes.ns1</name>

<value>nn1,nn2</value>

</property>

<!-- RPC address of nn1 -->

<property>

<name>dfs.namenode.rpc-address.ns1.nn1</name>

<value>SparkSlave01:9000</value>

</property>

<!-- HTTP address of nn1 -->

<property>

<name>dfs.namenode.http-address.ns1.nn1</name>

<value>SparkSlave01:50070</value>

</property>

<!-- RPC address of nn2 -->

<property>

<name>dfs.namenode.rpc-address.ns1.nn2</name>

<value>SparkSlave02:9000</value>

</property>

<!-- HTTP address of nn2 -->

<property>

<name>dfs.namenode.http-address.ns1.nn2</name>

<value>SparkSlave02:50070</value>

</property>

<!-- Where the NameNode metadata (edit log) is stored on the JournalNodes -->

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://SparkMaster:8485;SparkSlave01:8485;SparkSlave02:8485/ns1</value>

</property>

<!-- Local disk directory where each JournalNode stores its data -->

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/hadoopLearning/hadoop-2.4.1/journal</value>

</property>

<!-- Enable automatic NameNode failover -->

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<!-- Failover proxy provider used by clients to find the active NameNode -->

<property>

<name>dfs.client.failover.proxy.provider.ns1</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<!-- Fencing methods; separate multiple methods with newlines, one method per line -->

<property>

<name>dfs.ha.fencing.methods</name>

<value>

sshfence

shell(/bin/true)

</value>

</property>

<!-- The sshfence method requires passwordless SSH login -->

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/home/hadoop/.ssh/id_rsa</value>

</property>

<!-- Connection timeout for the sshfence method -->

<property>

<name>dfs.ha.fencing.ssh.connect-timeout</name>

<value>30000</value>

</property>

</configuration>

Edit mapred-site.xml:

<configuration>

<!-- Run MapReduce on YARN -->

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

Edit yarn-site.xml:

<configuration>

<!-- Enable ResourceManager high availability -->

<property>

<name>yarn.resourcemanager.ha.enabled</name>

<value>true</value>

</property>

<!-- Set the ResourceManager cluster id -->

<property>

<name>yarn.resourcemanager.cluster-id</name>

<value>SparkCluster</value>

</property>

<!-- Set the ResourceManager ids -->

<property>

<name>yarn.resourcemanager.ha.rm-ids</name>

<value>rm1,rm2</value>

</property>

<!-- Set the host of each ResourceManager -->

<property>

<name>yarn.resourcemanager.hostname.rm1</name>

<value>SparkMaster</value>

</property>

<property>

<name>yarn.resourcemanager.hostname.rm2</name>

<value>SparkSlave01</value>

</property>

<!-- Set the ZooKeeper cluster addresses -->

<property>

<name>yarn.resourcemanager.zk-address</name>

<value>SparkMaster:2181,SparkSlave01:2181,SparkSlave02:2181</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>

Configure the slaves file:

SparkMaster

SparkSlave01

SparkSlave02

Copy the configured hadoop-2.4.1 to the other servers:

scp -r /etc/profile root@SparkSlave01:/etc/profile

scp -r /hadoopLearning/hadoop-2.4.1/ root@SparkSlave01:/hadoopLearning/

scp -r /etc/profile root@SparkSlave02:/etc/profile

scp -r /hadoopLearning/hadoop-2.4.1/ root@SparkSlave02:/hadoopLearning/

Start the JournalNodes:

hadoop-daemons.sh start journalnode

# Run jps to verify: SparkMaster, SparkSlave01 and SparkSlave02 should each show a new JournalNode process

Format HDFS

# Run on SparkSlave01:

hdfs namenode -format

# Formatting generates files under the directory set by hadoop.tmp.dir in core-site.xml, here /hadoopLearning/hadoop-2.4.1/tmp. Copy that directory to /hadoopLearning/hadoop-2.4.1/ on SparkSlave02.

scp -r tmp/ sparkslave02:/hadoopLearning/hadoop-2.4.1/

Format ZK (running it on SparkSlave01 is enough)

hdfs zkfc -formatZK

Start HDFS (run on SparkSlave01)

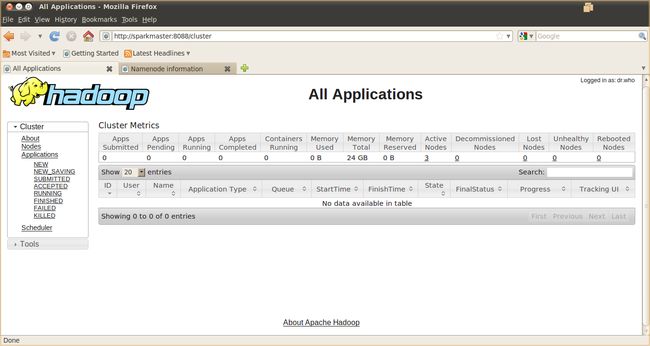

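sbin/start-dfs.sh

Start YARN. Note: run start-yarn.sh on SparkMaster. The NameNode and the ResourceManager are placed on different machines for performance reasons, since both consume a lot of resources, so each has to be started on its own machine.

sbin/start-yarn.sh

Open a browser and go to:

http://sparkmaster:8088

The following page is displayed: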

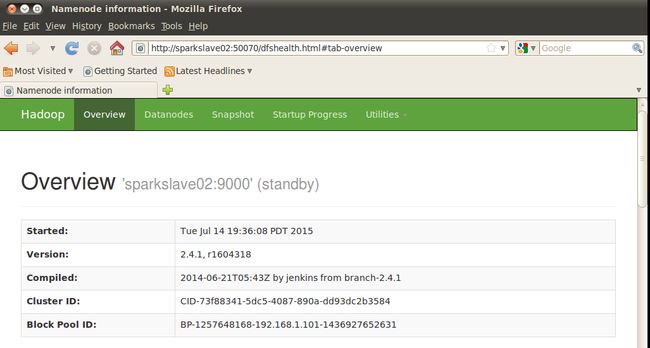

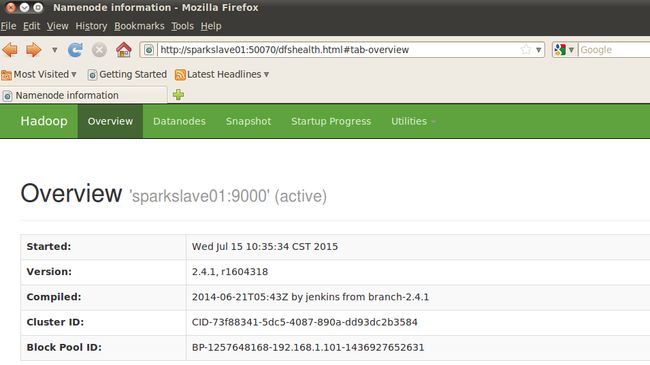

Go to http://sparkslave01:50070 to see the following page:

Go to http://sparkslave02:50070 to see the following page:

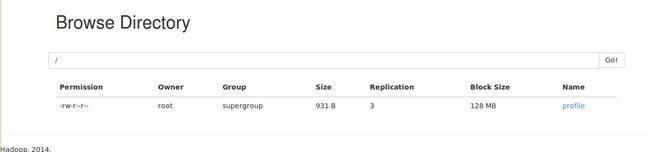

Upload a file to Hadoop with the following command:

hadoop fs -put /etc/profile /

View the uploaded file on the active NameNode.

The Hadoop cluster is now set up.
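To find out which NameNode is currently active, the `hdfs haadmin -getServiceState` command can be queried for each NameNode id. The sketch below assumes the hdfs command is on the PATH and that the ids are nn1 and nn2, as configured in hdfs-site.xml. `active_namenode` is a hypothetical helper name.

```shell
# Sketch: print the id of the NameNode that reports the "active" state.
# Assumes NameNode ids nn1 and nn2 (from hdfs-site.xml in this guide).
# active_namenode is a made-up helper name for this example.
active_namenode() {
  for nn in nn1 nn2; do
    state=$(hdfs haadmin -getServiceState "$nn" 2>/dev/null)
    if [ "$state" = "active" ]; then
      echo "$nn"
      return 0
    fi
  done
  return 1
}

# Example usage on a cluster node:
#   active_namenode || echo "no active NameNode found"
```

In this guide nn1 corresponds to SparkSlave01 and nn2 to SparkSlave02, so the printed id tells you which web UI on port 50070 to open.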

Spark 1.4.0 cluster setup

This guide uses Spark on YARN as the example.

1. On SparkMaster, install Scala 2.10.4 and spark-1.4.0-bin-hadoop2.4 by extracting both packages into /hadoopLearning, then edit /etc/profile as follows:

export JAVA_HOME=/hadoopLearning/jdk1.7.0_67

export JRE_HOME=${JAVA_HOME}/jre

export HADOOP_HOME=/hadoopLearning/hadoop-2.4.1

export SCALA_HOME=/hadoopLearning/scala-2.10.4

export SPARK_HOME=/hadoopLearning/spark-1.4.0-bin-hadoop2.4

export ZOOKEEPER_HOME=/hadoopLearning/zookeeper-3.4.5

export CLASS_PATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib

export PATH=${JAVA_HOME}/bin:${HADOOP_HOME}/bin:${HADOOP_HOME}/sbin:${ZOOKEEPER_HOME}/bin:${SCALA_HOME}/bin:/hadoopLearning/idea-IC-141.1532.4/bin:${SPARK_HOME}/bin:${SPARK_HOME}/sbin:$PATH

2. Go to the /hadoopLearning/spark-1.4.0-bin-hadoop2.4/conf directory:

cp spark-defaults.conf.template spark-defaults.conf

cp spark-env.sh.template spark-env.sh

Add the following to spark-env.sh:

export JAVA_HOME=/hadoopLearning/jdk1.7.0_67

export HADOOP_CONF_DIR=/hadoopLearning/hadoop-2.4.1/etc/hadoop

Add the following to spark-defaults.conf:

spark.master=spark://sparkmaster:7077

spark.eventLog.enabled=true

# hdfs://ns1 is the HDFS nameservice defined earlier in core-site.xml

spark.eventLog.dir=hdfs://ns1/user/spark/applicationHistory

3. Copy the installation and configuration from sparkmaster to sparkslave01 and sparkslave02:

scp -r /hadoopLearning/scala-2.10.4 sparkslave01:/hadoopLearning/

scp -r /hadoopLearning/scala-2.10.4 sparkslave02:/hadoopLearning/

scp -r /hadoopLearning/spark-1.4.0-bin-hadoop2.4 sparkslave01:/hadoopLearning/

scp -r /hadoopLearning/spark-1.4.0-bin-hadoop2.4 sparkslave02:/hadoopLearning/

scp -r /etc/profile sparkslave01:/etc/profile

scp -r /etc/profile sparkslave02:/etc/profile

4. On sparkmaster, run the following command in /hadoopLearning/spark-1.4.0-bin-hadoop2.4/sbin:

./start-all.sh

Run jps on each host: sparkmaster now shows a Master process, and sparkslave01 and sparkslave02 each show a Worker process.

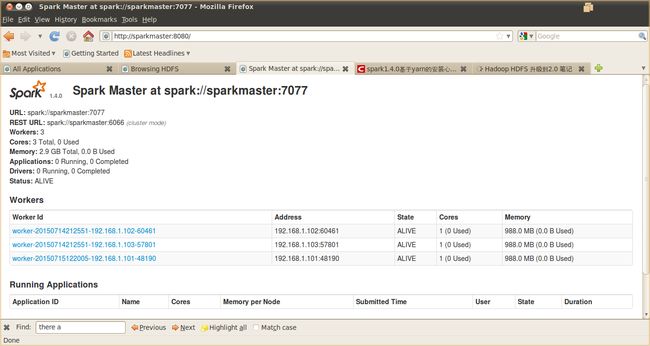

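Open http://sparkmaster:8080 in a browser to see the following page:

The page shows runtime information about the cluster, which indicates that the initial setup succeeded.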

5. Test run on the Spark 1.4.0 cluster

Upload README.md to the HDFS directory /user/root:

root@sparkmaster:/hadoopLearning/spark-1.4.0-bin-hadoop2.4# hadoop fs -put README.md /user/root

On the sparkmaster node, go to /hadoopLearning/spark-1.4.0-bin-hadoop2.4/bin and run spark-shell. After refreshing http://sparkmaster:8080 you can see the following:

Enter the following statement:

val textCount = sc.textFile("README.md").filter(line => line.contains("Spark")).count()

The result is as follows:

The Spark cluster is now set up.