SVM in practice: svmtrain and svmclassify

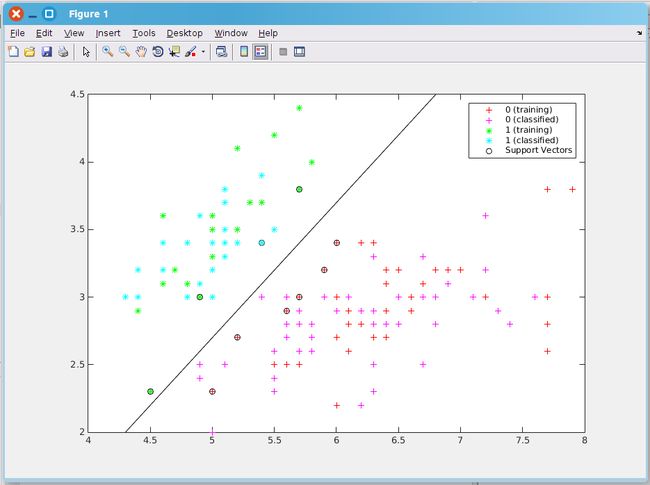

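load fisheriris   % Fisher's iris data: meas (150x4 measurements) and species (class names)

data = [meas(:,1), meas(:,2)];   % use two features (sepal length, sepal width) so showplot can draw them in 2-D

groups = ismember(species,'setosa');   % two groups: setosa (true) vs. the other species (false)

[train, test] = crossvalind('holdOut',groups);   % random holdout split into training and test index vectors

cp = classperf(groups);   % performance object initialised with the true labels

svmStruct = svmtrain(data(train,:),groups(train),'showplot',true);   % train on the training rows and plot the boundary

classes = svmclassify(svmStruct,data(test,:),'showplot',true);   % predict the test rows on the same figure

classperf(cp,classes,test);   % update cp with the predictions for the test rows

cp.CorrectRate   % display the fraction of test rows classified correctly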
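classperf accumulates its results in cp. For a single holdout run where every test row receives a label, CorrectRate is just the fraction of matching labels. The sketch below does that computation by hand and adds the 2x2 confusion counts. The logical vectors truth and predicted are made-up stand-ins for groups(test) and classes.

```matlab
% Hypothetical stand-ins for groups(test) and classes (made-up values)
truth     = logical([1 1 0 0 1 0]);
predicted = logical([1 0 0 0 1 0]);

% Fraction of test samples whose predicted label matches the true label
correctRate = mean(predicted == truth);

% 2x2 confusion counts: rows = true class (true, false), columns = predicted class (true, false)
confusion = [sum(truth & predicted), sum(truth & ~predicted);
             sum(~truth & predicted), sum(~truth & ~predicted)];

fprintf('CorrectRate = %.4f\n', correctRate);
disp(confusion);
```

Here 5 of the 6 toy labels match, so correctRate is 5/6.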

svmstruct = svmtrain(Training, Group)

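svmtrain trains a support vector machine classifier on data taken from two groups. That is why the example above reduces the three iris species to setosa versus the rest. Rows of TRAINING correspond to observations; columns correspond to features. Y (the Group argument above) is a column vector that contains the known class labels for TRAINING.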

Y is a grouping variable, i.e., it can be a categorical, numeric, or logical vector; a cell vector of strings; or a character matrix with each row representing a class label (see help for groupingvariable). Each element of Y specifies the group the corresponding row of TRAINING belongs to.

TRAINING and Y must have the same number of rows. SVMSTRUCT contains information about the trained classifier, including the support vectors, that is used by SVMCLASSIFY for classification. svmtrain treats NaNs, empty strings or 'undefined' values as missing values and ignores the corresponding rows in TRAINING and Y.
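The missing-value rule above can be illustrated in plain MATLAB. This is a minimal sketch of the documented behaviour, not svmtrain's actual code. It assumes numeric features and numeric labels; X and Y are made-up toy data.

```matlab
% Toy training data (made-up values): 4 observations, 2 features
X = [5.1 3.5; NaN 3.0; 4.7 3.2; 4.6 3.1];
Y = [1; 0; NaN; 0];

% TRAINING and Y must have the same number of rows
assert(size(X, 1) == numel(Y));

% Keep only rows with no NaN among the features and a non-missing label
keep   = ~any(isnan(X), 2) & ~isnan(Y);
Xclean = X(keep, :);
Yclean = Y(keep);

disp(Xclean);
disp(Yclean);
```

Row 2 is dropped because of its missing feature and row 3 because of its missing label, so only rows 1 and 4 reach the classifier.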

Group = svmclassify(SVMStruct, Sample)

>> help svmclassify

svmclassify Classify data using a support vector machine

GROUP = svmclassify(SVMSTRUCT, TEST) classifies each row in TEST using the support vector machine classifier structure SVMSTRUCT created using SVMTRAIN, and returns the predicted class label GROUP. TEST must have the same number of columns as the data used to train the classifier in SVMTRAIN. GROUP indicates the group to which each row of TEST is assigned.

GROUP = svmclassify(...,'SHOWPLOT',true) plots the test data TEST on the figure created using the SHOWPLOT option in SVMTRAIN.
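The textbook SVM decision rule gives some intuition for what svmclassify computes. It evaluates f(x) = sum_i c_i K(s_i, x) + b over the support vectors s_i and assigns each test row to one of the two groups by the sign of f(x). The sketch below uses a linear kernel with hypothetical support vectors, coefficients and bias. It does not read fields from a real SVMSTRUCT, and MATLAB's own implementation (for example, its optional data scaling) may differ in detail. The matrix product also shows why TEST must have as many columns as the training data.

```matlab
% Hypothetical linear SVM (made-up values, not fields of a real SVMSTRUCT)
sv   = [1 1; -1 -1];        % support vectors, one per row
coef = [0.5; -0.5];         % coefficient of each support vector (alpha_i times its +1/-1 label)
b    = 0;                   % bias term

% Rows to classify; they must have as many columns as the support vectors
Xtest = [2 0; -3 1; 0.5 0.25];

% Linear kernel K(s, x) = s' * x for every (test row, support vector) pair
K = Xtest * sv';            % size: numTestRows x numSupportVectors

f    = K * coef + b;        % decision value of each test row
side = sign(f);             % +1 or -1: which of the two groups the row falls on

disp([f side]);
```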

-----------------------------------------------------------------------------------------------

A classic example of multi-class classification with libsvm:

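[y, x] = libsvmread('iris.scale.txt');   % y: class labels, x: sparse feature matrix

m = svmtrain(y, x, '-t 0');   % LIBSVM's svmtrain, which shadows MATLAB's own svmtrain when the LIBSVM folder comes first on the path; '-t 0' selects a linear kernel

test_y = [1; 2; 3];   % true labels of the three test rows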

test_x=[-0.555556 0.25 -0.864407 -0.916667;

0.444444 -0.0833334 0.322034 0.166667 ;

-0.277778 -0.333333 0.322034 0.583333 ];

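[predict_label, accuracy, dec_values] = svmpredict(test_y, test_x, m);   % accuracy = [accuracy (%), mean squared error, squared correlation coefficient]; without '-b 1' the third output holds decision values, not probability estimates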
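LIBSVM handles this three-class problem with the one-against-one strategy. It trains k(k-1)/2 binary SVMs, one for each pair of classes, and predicts the class that collects the most votes. Below is a simplified sketch of the voting step. The decision values are made up, and LIBSVM's internal class ordering and tie handling are not reproduced.

```matlab
% One-against-one voting for k = 3 classes (simplified illustration).
% The decision values are made up; they are not produced by svmpredict.
labels  = [1 2 3];
pairs   = nchoosek(1:numel(labels), 2);  % [1 2; 1 3; 2 3]: k*(k-1)/2 = 3 binary SVMs
decvals = [0.8; 1.2; -0.3];              % hypothetical decision value of each pairwise SVM

votes = zeros(1, numel(labels));
for p = 1:size(pairs, 1)
  first  = pairs(p, 1);
  second = pairs(p, 2);
  if decvals(p) > 0
    votes(first) = votes(first) + 1;     % positive value: vote for the first class of the pair
  else
    votes(second) = votes(second) + 1;   % otherwise: vote for the second class
  end
end

[~, winner] = max(votes);                % max returns the first index on ties
predicted = labels(winner);
fprintf('votes = [%s], predicted class = %d\n', num2str(votes), predicted);
```

With these values, class 1 wins both of its pairings and class 3 wins the 2-vs-3 pairing, so the votes are [2 0 1] and class 1 is predicted.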

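Data: the 'iris.scale' file is available on the LIBSVM website and contains three classes. The three rows of test_x are copied from this file, one sample per class, so the reported accuracy is measured on samples the model was trained on.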

The file iris.scale.txt contains the following (one sample per line: the class label, then index:value pairs for the four features):
1 1:-0.555556 2:0.25 3:-0.864407 4:-0.916667
1 1:-0.666667 2:-0.166667 3:-0.864407 4:-0.916667
1 1:-0.777778 3:-0.898305 4:-0.916667
1 1:-0.833333 2:-0.0833334 3:-0.830508 4:-0.916667
1 1:-0.611111 2:0.333333 3:-0.864407 4:-0.916667
1 1:-0.388889 2:0.583333 3:-0.762712 4:-0.75
1 1:-0.833333 2:0.166667 3:-0.864407 4:-0.833333
1 1:-0.611111 2:0.166667 3:-0.830508 4:-0.916667
1 1:-0.944444 2:-0.25 3:-0.864407 4:-0.916667
1 1:-0.666667 2:-0.0833334 3:-0.830508 4:-1
1 1:-0.388889 2:0.416667 3:-0.830508 4:-0.916667
1 1:-0.722222 2:0.166667 3:-0.79661 4:-0.916667
1 1:-0.722222 2:-0.166667 3:-0.864407 4:-1
1 1:-1 2:-0.166667 3:-0.966102 4:-1
1 1:-0.166667 2:0.666667 3:-0.932203 4:-0.916667
1 1:-0.222222 2:1 3:-0.830508 4:-0.75
1 1:-0.388889 2:0.583333 3:-0.898305 4:-0.75
1 1:-0.555556 2:0.25 3:-0.864407 4:-0.833333
1 1:-0.222222 2:0.5 3:-0.762712 4:-0.833333
1 1:-0.555556 2:0.5 3:-0.830508 4:-0.833333
1 1:-0.388889 2:0.166667 3:-0.762712 4:-0.916667
1 1:-0.555556 2:0.416667 3:-0.830508 4:-0.75
1 1:-0.833333 2:0.333333 3:-1 4:-0.916667
1 1:-0.555556 2:0.0833333 3:-0.762712 4:-0.666667
1 1:-0.722222 2:0.166667 3:-0.694915 4:-0.916667
1 1:-0.611111 2:-0.166667 3:-0.79661 4:-0.916667
1 1:-0.611111 2:0.166667 3:-0.79661 4:-0.75
1 1:-0.5 2:0.25 3:-0.830508 4:-0.916667
1 1:-0.5 2:0.166667 3:-0.864407 4:-0.916667
1 1:-0.777778 3:-0.79661 4:-0.916667
1 1:-0.722222 2:-0.0833334 3:-0.79661 4:-0.916667
1 1:-0.388889 2:0.166667 3:-0.830508 4:-0.75
1 1:-0.5 2:0.75 3:-0.830508 4:-1
1 1:-0.333333 2:0.833333 3:-0.864407 4:-0.916667
1 1:-0.666667 2:-0.0833334 3:-0.830508 4:-1
1 1:-0.611111 3:-0.932203 4:-0.916667
1 1:-0.333333 2:0.25 3:-0.898305 4:-0.916667
1 1:-0.666667 2:-0.0833334 3:-0.830508 4:-1
1 1:-0.944444 2:-0.166667 3:-0.898305 4:-0.916667
1 1:-0.555556 2:0.166667 3:-0.830508 4:-0.916667
1 1:-0.611111 2:0.25 3:-0.898305 4:-0.833333
1 1:-0.888889 2:-0.75 3:-0.898305 4:-0.833333
1 1:-0.944444 3:-0.898305 4:-0.916667
1 1:-0.611111 2:0.25 3:-0.79661 4:-0.583333
1 1:-0.555556 2:0.5 3:-0.694915 4:-0.75
1 1:-0.722222 2:-0.166667 3:-0.864407 4:-0.833333
1 1:-0.555556 2:0.5 3:-0.79661 4:-0.916667
1 1:-0.833333 3:-0.864407 4:-0.916667
1 1:-0.444444 2:0.416667 3:-0.830508 4:-0.916667
1 1:-0.611111 2:0.0833333 3:-0.864407 4:-0.916667
2 1:0.5 3:0.254237 4:0.0833333
2 1:0.166667 3:0.186441 4:0.166667
2 1:0.444444 2:-0.0833334 3:0.322034 4:0.166667
2 1:-0.333333 2:-0.75 3:0.0169491 4:-4.03573e-08
2 1:0.222222 2:-0.333333 3:0.220339 4:0.166667
2 1:-0.222222 2:-0.333333 3:0.186441 4:-4.03573e-08
2 1:0.111111 2:0.0833333 3:0.254237 4:0.25
2 1:-0.666667 2:-0.666667 3:-0.220339 4:-0.25
2 1:0.277778 2:-0.25 3:0.220339 4:-4.03573e-08
2 1:-0.5 2:-0.416667 3:-0.0169491 4:0.0833333
2 1:-0.611111 2:-1 3:-0.152542 4:-0.25
2 1:-0.111111 2:-0.166667 3:0.0847457 4:0.166667
2 1:-0.0555556 2:-0.833333 3:0.0169491 4:-0.25
2 1:-1.32455e-07 2:-0.25 3:0.254237 4:0.0833333
2 1:-0.277778 2:-0.25 3:-0.118644 4:-4.03573e-08
2 1:0.333333 2:-0.0833334 3:0.152542 4:0.0833333
2 1:-0.277778 2:-0.166667 3:0.186441 4:0.166667
2 1:-0.166667 2:-0.416667 3:0.0508474 4:-0.25
2 1:0.0555554 2:-0.833333 3:0.186441 4:0.166667
2 1:-0.277778 2:-0.583333 3:-0.0169491 4:-0.166667
2 1:-0.111111 3:0.288136 4:0.416667
2 1:-1.32455e-07 2:-0.333333 3:0.0169491 4:-4.03573e-08
2 1:0.111111 2:-0.583333 3:0.322034 4:0.166667
2 1:-1.32455e-07 2:-0.333333 3:0.254237 4:-0.0833333
2 1:0.166667 2:-0.25 3:0.118644 4:-4.03573e-08
2 1:0.277778 2:-0.166667 3:0.152542 4:0.0833333
2 1:0.388889 2:-0.333333 3:0.288136 4:0.0833333
2 1:0.333333 2:-0.166667 3:0.355932 4:0.333333
2 1:-0.0555556 2:-0.25 3:0.186441 4:0.166667
2 1:-0.222222 2:-0.5 3:-0.152542 4:-0.25
2 1:-0.333333 2:-0.666667 3:-0.0508475 4:-0.166667
2 1:-0.333333 2:-0.666667 3:-0.0847458 4:-0.25
2 1:-0.166667 2:-0.416667 3:-0.0169491 4:-0.0833333
2 1:-0.0555556 2:-0.416667 3:0.38983 4:0.25
2 1:-0.388889 2:-0.166667 3:0.186441 4:0.166667
2 1:-0.0555556 2:0.166667 3:0.186441 4:0.25
2 1:0.333333 2:-0.0833334 3:0.254237 4:0.166667
2 1:0.111111 2:-0.75 3:0.152542 4:-4.03573e-08
2 1:-0.277778 2:-0.166667 3:0.0508474 4:-4.03573e-08
2 1:-0.333333 2:-0.583333 3:0.0169491 4:-4.03573e-08
2 1:-0.333333 2:-0.5 3:0.152542 4:-0.0833333
2 1:-1.32455e-07 2:-0.166667 3:0.220339 4:0.0833333
2 1:-0.166667 2:-0.5 3:0.0169491 4:-0.0833333
2 1:-0.611111 2:-0.75 3:-0.220339 4:-0.25
2 1:-0.277778 2:-0.416667 3:0.0847457 4:-4.03573e-08
2 1:-0.222222 2:-0.166667 3:0.0847457 4:-0.0833333
2 1:-0.222222 2:-0.25 3:0.0847457 4:-4.03573e-08
2 1:0.0555554 2:-0.25 3:0.118644 4:-4.03573e-08
2 1:-0.555556 2:-0.583333 3:-0.322034 4:-0.166667
2 1:-0.222222 2:-0.333333 3:0.0508474 4:-4.03573e-08
3 1:0.111111 2:0.0833333 3:0.694915 4:1
3 1:-0.166667 2:-0.416667 3:0.38983 4:0.5
3 1:0.555555 2:-0.166667 3:0.661017 4:0.666667
3 1:0.111111 2:-0.25 3:0.559322 4:0.416667
3 1:0.222222 2:-0.166667 3:0.627119 4:0.75
3 1:0.833333 2:-0.166667 3:0.898305 4:0.666667
3 1:-0.666667 2:-0.583333 3:0.186441 4:0.333333
3 1:0.666667 2:-0.25 3:0.79661 4:0.416667
3 1:0.333333 2:-0.583333 3:0.627119 4:0.416667
3 1:0.611111 2:0.333333 3:0.728813 4:1
3 1:0.222222 3:0.38983 4:0.583333
3 1:0.166667 2:-0.416667 3:0.457627 4:0.5
3 1:0.388889 2:-0.166667 3:0.525424 4:0.666667
3 1:-0.222222 2:-0.583333 3:0.355932 4:0.583333
3 1:-0.166667 2:-0.333333 3:0.38983 4:0.916667
3 1:0.166667 3:0.457627 4:0.833333
3 1:0.222222 2:-0.166667 3:0.525424 4:0.416667
3 1:0.888889 2:0.5 3:0.932203 4:0.75
3 1:0.888889 2:-0.5 3:1 4:0.833333
3 1:-0.0555556 2:-0.833333 3:0.355932 4:0.166667
3 1:0.444444 3:0.59322 4:0.833333
3 1:-0.277778 2:-0.333333 3:0.322034 4:0.583333
3 1:0.888889 2:-0.333333 3:0.932203 4:0.583333
3 1:0.111111 2:-0.416667 3:0.322034 4:0.416667
3 1:0.333333 2:0.0833333 3:0.59322 4:0.666667
3 1:0.611111 3:0.694915 4:0.416667
3 1:0.0555554 2:-0.333333 3:0.288136 4:0.416667
3 1:-1.32455e-07 2:-0.166667 3:0.322034 4:0.416667
3 1:0.166667 2:-0.333333 3:0.559322 4:0.666667
3 1:0.611111 2:-0.166667 3:0.627119 4:0.25
3 1:0.722222 2:-0.333333 3:0.728813 4:0.5
3 1:1 2:0.5 3:0.830508 4:0.583333
3 1:0.166667 2:-0.333333 3:0.559322 4:0.75
3 1:0.111111 2:-0.333333 3:0.38983 4:0.166667
3 1:-1.32455e-07 2:-0.5 3:0.559322 4:0.0833333
3 1:0.888889 2:-0.166667 3:0.728813 4:0.833333
3 1:0.111111 2:0.166667 3:0.559322 4:0.916667
3 1:0.166667 2:-0.0833334 3:0.525424 4:0.416667
3 1:-0.0555556 2:-0.166667 3:0.288136 4:0.416667
3 1:0.444444 2:-0.0833334 3:0.491525 4:0.666667
3 1:0.333333 2:-0.0833334 3:0.559322 4:0.916667
3 1:0.444444 2:-0.0833334 3:0.38983 4:0.833333
3 1:-0.166667 2:-0.416667 3:0.38983 4:0.5
3 1:0.388889 3:0.661017 4:0.833333
3 1:0.333333 2:0.0833333 3:0.59322 4:1
3 1:0.333333 2:-0.166667 3:0.423729 4:0.833333
3 1:0.111111 2:-0.583333 3:0.355932 4:0.5
3 1:0.222222 2:-0.166667 3:0.423729 4:0.583333
3 1:0.0555554 2:0.166667 3:0.491525 4:0.833333
3 1:-0.111111 2:-0.166667 3:0.38983 4:0.416667
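Each record has the form `label index:value index:value ...`. Features whose value is 0 are left out, which is why the third record, for example, has no `2:` entry. Since the data is scaled to [-1, 1], a value of 0 is the middle of the feature's range. libsvmread does this conversion for the whole file and returns the features as a sparse matrix. Below is a minimal sketch of parsing one record into a dense row, for illustration only.

```matlab
% One record of iris.scale: feature 2 is absent, so its value is 0
txt = '1 1:-0.777778 3:-0.898305 4:-0.916667';
nFeatures = 4;

tokens = strsplit(strtrim(txt), ' ');   % {'1', '1:-0.777778', '3:-0.898305', '4:-0.916667'}
label  = str2double(tokens{1});          % class label
row    = zeros(1, nFeatures);            % omitted features default to 0
for t = 2:numel(tokens)
  kv = sscanf(tokens{t}, '%d:%f');       % kv(1) = feature index, kv(2) = value
  row(kv(1)) = kv(2);
end

disp(label);
disp(row);
```

The result is label 1 and the dense row [-0.777778 0 -0.898305 -0.916667]. This matches the sparse row that libsvmread would produce for this record, with the implicit zero made explicit.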