Configure DB2 remote connectivity with WebSphere DataStage Enterprise Edition

Introduction

WebSphere DataStage is one the foremost leaders in the ETL (Extract, Transform, and Load) market space. One of the great advantages of this tool is its scalability, as it is capable of parallel processing on an SMP, MPP or cluster environment. Although DataStage Enterprise Edition (DS/EE) provides many types of plug-in stages to connect to DB2, including DB2 API, DB2 load, and dynamic RDBMS, only DB2 Enterprise Stage is designed to support parallel processing for maximum scalability and performance.

The DB2 Data Partitioning Feature (DPF) offers the necessary scalability to distribute a large database over multiple partitions (logical or physical). ETL processing of a large bulk of data across whole tables is very time-expensive using traditional plug-in stages. DB2 Enterprise Stage however provides a parallel execution engine, using direct communication with each database partition to achieve the best possible performance.

DB2 Enterprise Stage with DPF communication architecture

Figure 1. DS/EE remote DB2 communication architecture

As you see in Figure 1, the DS/EE primary server can be separate from the DB2 coordinate node. Although a 32-bit DB2 client still must be installed, it’s different from the typical remote DB2 access which requires only DB2 client for connectivity. It can be used to pre-query the DB2 instance and determine partitioning of source or target table. On the DB2 server, every DB2 DPF partition must have the DS/EE engine installed. In addition, the DS/EE engine and libraries must be installed in the same location on all DS/EE servers and DB2 servers.

The following principles are important in understanding how this framework works:

- DataStage conductor node uses local DB2 environment variables to determine DB2 instance.

- DataStage reads the DB2nodes.cfg file to determine each DB2 partition. DB2nodes.cfg file is copied from DB2 server node and can be put any location of sqllib subdirectory on DS/EE server. One DS/EE environment variable $APT_DB2INSTANCE_HOME can be used to specify this location of sqllib.

- DataStage scans the current parallel configuration file specified by environment variable $APT_CONFIG_FILE. Each fastname property of this file must have a match with the node name of DB2nodes.cfg.

- DataStage conductor node queries local DB2 instance using the DB2 client to determine table partition information.

- DataStage starts up processes across ETL and DB2 nodes in the cluster. DB2/UDB Enterprise stage passes data to/from each DB2 node through the DataStage parallel framework, not the DB2 client. The parallel execution instance can be examined from the job monitor of the DataStage Director.

|

Environment used in our example

Figure 2. Example topology

In our example, we use 2 machines with RedHat Enterprise Linux 3.0 operating system for testing, one with 2 CPUs and 1G memory for the DB2 server, another with 1 CPU with 1G memory for DS/EE server. In the DB2 server, we have 2 database partitions which can be configured via DB2nodes.cfg, while in DS/EE server; the engine configuration file tells us which nodes are used to execute DataStage jobs concurrently.

The following are steps we followed to successfully configure remote DB2 instance using DS/EE DB2 Enterprise Stage. We will begin this exercise from scratch, including DB2 server configuration, DS/EE installation and configuration.

|

Installation and configuration steps for the DB2 server

- Install DB2 Enterprise Server Edition (with DPF) and create a DB2 instance at Stage164 node.

- Configure rsh service and remote authority file.

- Create sample database and check distribution of tables.

- Create DS/EE users on all members of both nodes.

If DB2 DPF environments are installed and configured, you can skip step 1 and step 3

Step 1. Install DB2 Enterprise Server and create DB2 instance at Stage164 node

Check your DB2 version before installing DB2 ESE on Stage164 node. For our example we used V8.1 fix pack 7. For DPF feature, you must have another separate license. Pay attention to Linux kernel parameters which can potentially affect DB2 installation. Please follow the DB2 installation guide.

- Before installation, create DB2 group and DB2 users.

[root@stage164 home]# groupadd –g db2grp1

[root@stage164 home]# groupadd –g db2fgrp1

[root@stage164 home]# useradd –g db2grp1 db2inst1

[root@stage164 home]# useradd –g db2fgrp1 db2fenc1

[root@stage164 home]# passwd db2inst1 - Create instance. Install DB2, then create the instance using the GUI or command line. If using the command line, switch to DB2 install path with root user and issue the command below to create one DB2 instance the users created in the previous step as parameters.

[root@stage164 home]# cd /opt/IBM/db2/V8.1/instance/

[root@stage164 instance]# ./db2icrt -u db2fenc1 db2inst1 - Confirm db2inst1 instance was created successfully. If it failed, please refer to the official DB2 installation documentation.

[root@stage164 instance]# su – db2inst1

[db2inst1@stage164 db2inst1]$ db2start

05-19-2006 03:56:01 0 0 SQL1063N DB2START processing was successful.

SQL1063N DB2START processing was successful. - Check to confirm that DBM SVCENAME configuration parameter was configured successfully. If it is not set, the client has no way to connect to the DB2 server. In addition, the TCPIP communication protocol also must be set.

[db2inst1@stage164 db2inst1]$ db2 get dbm cfg | grep -i svcename

TCP/IP Service name (SVCENAME) = 50000

[db2inst1@stage164 db2inst1]$ db2set DB2COMM=TCPIP

Step 2. Configure remote shell (rsh) service and remote authority file.

For the DPF environment, DB2 needs the remote shell utility to communicate and execute commands between each partition. Rsh utility can be used for inter-partition communication; OpenSSH utility is another option for inter-partition communication that protects secure communication. For simplicity, we will not cover it in this article.

- Check whether rsh server has installed. If not, download it and issue "rpm –ivh rsh.server-xx.rpm" to install it.

[root@stage164 /]# rpm -qa | grep -i rsh

rsh-0.17-17

rsh-server-0.17-17 - Confirm rsh service can be started successfully.

[root@stage164 /]#service xinetd start

[root@stage164 /]#netstat –na | grep 514 - Create or modify file for authority users to execute remote commands You can create (or edit if it already exists) an /etc/hosts.equiv file. This first column of this file is the machine name, and the second is the instance owner. For example, the following means only db2inst1 user has authority to execute commands on Stage164 using rsh:

.Stage164 db2inst1

- Check whether rsh works correctly or not by issuing below command using DB2inst1 user. If the date doesn't show correctly, that means there is still a configuration problem.

[db2inst1@stage164 db2inst1]$ rsh stage164 date

Thu May 18 23:26:03 CST 2006

Step 3. Create DPF partitions and create sample database

- Edit the database partition configuration file (DB2nodes.cfg) under <DB2HOME>/sqllib. In this example, we have 2 logical partitions on Stage164 host.

0 stage164 0

1 stage164 1

- Restart DB2 instance and be sure both partitions can be started successfully.

[db2inst1@stage164 db2inst1]$ db2stop force

05-18-2006 23:32:08 0 0 SQL1064N DB2STOP processing was successful.

SQL1064N DB2STOP processing was successful.

[db2inst1@stage164 db2inst1]$ db2start

05-18-2006 23:32:18 1 0 SQL1063N DB2START processing was successful.

05-18-2006 23:32:18 0 0 SQL1063N DB2START processing was successful.

SQL1063N DB2START processing was successful.

- Create sample database and check the data distribution. According to result, the total row count of table department is 9, and 4 of 9 is distributed into partition 0, while 5 of 9 into partition 1 according to partition key deptno.

[db2inst1@stage164 db2inst1]$ db2sampl

[db2inst1@stage164 db2inst1]$ db2 connect to sample

[db2inst1@stage164 db2inst1]$ db2 "select count(*) from department"

1

-----------

9

1 record(s) selected.

[db2inst1@stage164 db2inst1]$ db2 "select count(*) from department where

dbpartitionnum(deptno)=0"

1

-----------

4

1 record(s) selected.

[db2inst1@stage164 db2inst1]$ db2 "select count(*) from department where

dbpartitionnum(deptno)=1"

1

-----------

5

1 record(s) selected.

Step 4. Create DS/EE users and configure the to access the DB2 database

If DS/EE users and groups have been created on the DS/EE node, then create the same users and groups on the DB2 server node. In any case, make sure you have the same DS/EE users and groups on these two machines.

- Create DS/EE user/groups at DB2 server. In this example, they are dsadmin/dsadmin. Also add DS/EE user to DB2 instance group.

[root@stage164 home]# groupadd -g 501 dsadmin

[root@stage164 home]# useradd –u 501 –g dsadmin –G db2grp1 db2inst1

[root@stage164 home]# passwd dsadmin

- Add an entry in /etc/hosts.equiv file which was created in Step 2.3. This gives dsadmin authority to execute some commands on Stage164.

Stage164 db2inst1

Stage164 dsadmin - Add DB2 profile environment variable at <DSEngine_HOME>/.bashrc file (for example, <DSEngine_HOME> = /home/dsadmin).

. /home/db2inst1/sqllib/db2profile

- Be sure dsadmin user can connect to sample db successfully.

# su - dsadm

$ db2 connect to sample

Database Connection Information

Database server = DB2/6000 8.2.3

SQL authorization ID = DSADM

Local database alias = SAMPLE

$

|

Installation and configuration steps for the DS/EE node

- Install DataStage Enterprise Edition(DS/EE) and DB2 client

- Add DB2 library and instance home at DS/EE configuration file

- Catalog sample db to DS/EE using dsadmin

- Copy DB2nodes.cfg from DB2 server to DS/EE and configure environment variable.

- NFS configuration, export /home/dsadmin/Ascential/

- Verify DB2 operator library and execute DB2setup.sh and DB2grants.sh

- Create or modify DS/EE configuration file

- Restart DS/EE server

Now let's walk through the process in detail.

Step 1. Install DataStage Enterprise Edition(DS/EE) and DB2 client

First, DS/EE users and groups need to be created in advance. In this example, the user is dsadmin, group dsadmin. If DS/EE not installed, follow the WebSphere DataStage install guide. We assume the software is installed on DSHOME variable which is /home/dsadmin/Ascential/DataStage/DSEngine. Then, install the DB2 client and create one client instance at DS/EE node.

Step 2. Add DB2 library and instance home at DS/EE configuration file

The dsenv configuration file, located under DSHOME directory, is one of the most important configuration files in DS/EE. It contains the environment variables and library path. At this step, we will add DB2 library to LD_LIBRARY_PATH so that DS/EE engine can connect to DB2.

Note: PXEngine library should precede DB2 library for LD_LIBRARY_PATH environment path.

Configure the dsenv file as follows:

|

You can add this dsenv file to dsadmin .bashrc file (/home/dsadmin/.bashrc) to avoid executing it manually every time. What you need do is to exit the dsadmin user and re-logon to make it execute and take effect.

. /home/dsadmin/Ascential/DataStage/DSEngine/dsenv |

Step 3. Catalog remote sample db to DS/EE using dsadmin

- Catalog remote sample database from DB2 EE (Stage164) to DS/EE using dsadmin.

[dsadmin@transfer dsadmin]$ db2 CATALOG TCPIP NODE stage164 REMOTE stage164 SERVER 50000

[dsadmin@transfer dsadmin]$ db2 CATALOG DB sample AS samp_02 AT NODE stage164

- Configure rsh utility according to Step 2 of "Installation and configuration steps for the DB2 server." Be sure dsadmin user at transfer can execute remote commands for Stage164 using rsh.

[dsadmin@transfer dsadmin]$ rsh stage164 date

Thu May 19 10:22:09 CST 2006

Step 4. Copy DB2nodes.cfg from DB2 server to DS/EE and configure environment variable.

Copy the DB2nodes.cfg file from the DB2 server to one directory of DS/EE. This file tells DS/EE engine how many DB2 partitions there are in the DB2 server. Then create one environment variable APT_DB2INSTANCE_HOME by DataStage Administrator to point to the directory. This variable can be specified at the project level or the job level.

Step 5. NFS configuration, export /home/dsadmin/Ascential/

First, add 2 machine names into /etc/hosts file at both nodes to identify one another’s network name. Then, share the DS/EE whole directory to the DB2 server so that each partition can communicate with DS/EE.

- At DS/EE node, export /home/dsadmin/Ascential directory. This can be done by adding an entry in /etc/exports file, it will allow users from stage164 machine to mount /home/dsadmin/Ascential directory with read/write authority.

/home/dsadmin/Ascential stage164(rw,sync)

- Once you have changed /etc/export file, you must notify NFS daremod process to reload changes. Or you can stop and restart this nfsd process by issuing following commands:

[root@transfer /]# service nfs start

Starting NFS services: [ OK ]

Starting NFS quotas: [ OK ]

Starting NFS daemon: [ OK ]

Starting NFS mountd: [ OK ] - Then at DB2 server, create one directory called /home/dsadmin/Ascential, it’s same with DS/EE server, then mount this directory to remote DS/EE directory.

[root@stage164 home]# mount -t nfs -o rw transfer:/home/dsadmin/Ascential /home/dsadmin/

Ascential

You can check mounted files as follows:

[root@stage164 home]# df -k

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/sda1 7052464 3491352 3202868 53% /

transfer:/home/dsadmin/Ascential

7052464 6420892 273328 96% /home/dsadmin/Ascential

To avoid mounting it every time when machine restart, you can also add this entry into file /etc/fstab to mount this directory automatically:

transfer:/home/dsadmin/Ascential /home/dsadmin/Ascential nfs defaults 0 0

Step 6. Verify DB2 operator library and execute DB2setup.sh and DB2grants.sh

- Execute DB2setup.sh script ,which located in $PXHOME/bin. Note, you may have a problem for remote DB2 instances; you will need to change the connect userid and password.

db2 connect to samp_02 user dsadmin using passw0rd

db2 bind ${APT_ORCHHOME}/bin/db2esql.bnd datetime ISO blocking all grant public

# this statement must be run from /instance_dir/bnd

cd ${INSTHOME}/sqllib/bnd

db2 bind @db2ubind.lst blocking all grant public

db2 bind @db2cli.lst blocking all grant public

db2 connect reset

db2 terminate

- Execute DB2grants.sh

db2 connect to samp_2 user dsadmin using passw0rd

db2 grant bind, execute on package dsadm.db2esql to group dsadmin

db2 connect reset

db2 terminate

Step 7. Create or modify DS/EE configuration file

DS/EE provides parallel engine configuration files. DataStage learns about the shape and size of the system from the configuration file. It organizes the resources needed for a job according to what is defined in the configuration file. The DataStage configuration file needs to contain the node on which DataStage and the DB2 client are installed and the nodes of the remote computer where the DB2 server is installed.

The following is one example. For more detail info of engine configuration file, please refer to the "Parallel job development guide."

{ |

Step 8. Restart DS/EE server and test connectivity

At this point you have completed all configurations on both nodes. Restart DS/EE server by issuing the commands below:

[dsadmin@transfer bin]$ uv -admin –stop |

Note: after stopping the DS/EE engine, you need to exit dsadmin and re-logon, and the dsenv configuration file will be executed. Also, be sure the time interval between stop and start is longer than 30 seconds in order for the changed configuration to take effect.

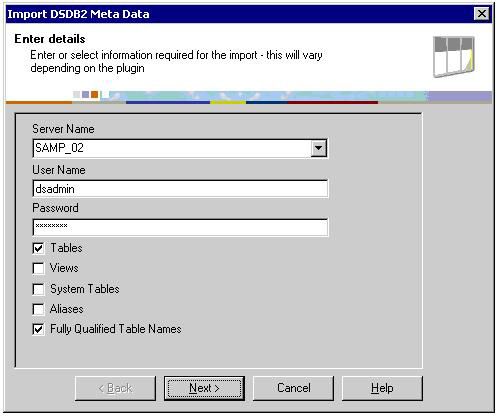

Next, we will test remote connectivity using DataStage Designer. Choose Import plug-in table definition. The following window will appear. Click Next. If it imports successfully, that means the remote DB2 connectivity configuration has succeeded.

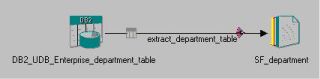

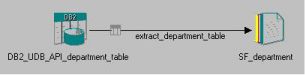

Figure 3. DB2 Enterprise stage job

|

Develop one DB2 enterprise job on DS/EE

In this part, we will develop one parallel job with DB2 Enterprise Stage using DataStage Designer. This job is very simple because it just demonstrates how to extract DB2 department table data to one sequential file.

Figure 4. Import DSDB2 Meta Data

Double-click the DB2 Enterprise stage icon, and set the following properties to the DB2 Enterprise stage. For detailed information, please reference the "Parallel job developer’s guide."

Figure 5. DS/EE DB2 Enterprise stage properties

- Client Instance Name: Set this to the DB2 client instance name. If you set this property, DataStage assumes you require remote connection.

- Server: Optionally set this to the instance name of the DB2 server. Otherwise use the DB2 environment variable, DB2INSTANCE, to identify the instance name of the DB2 server.

- Client Alias DB Name: Set this to the DB2 client’s alias database name for the remote DB2 server database. This is required only if the client’s alias is different from the actual name of the remote server database.

- Database: Optionally set this to the remote server database name. Otherwise use the environment variables APT_DBNAME or APT_DB2DBDFT to identify the database.

- User: Enter the user name for connecting to DB2. This is required for a remote connection.

- Password: Enter the password for connecting to DB2. This is required for a remote connection.

Add the following two environment variables into this job via DataStage Manager. APT_DB2INSTANCE_HOME defines DB2nodes.cfg location, while APT_CONFIG_FILE specifies the engine configuration file.

Figure 6. Job properties set

|

Performance comparison between Enterprise Stage and API Stage

In this part, we will execute the jobs developed above by DataStage Director and compare the performance between DS/EE Enterprise Stage and API Stage. The following is another job with DB2 API Stage.

Figure 7. DB2 API stage

o generate a quantity of test data, we created the following stored procedure:

|

Execute the stored procedure:

DB2 –td@ -f emp_resume.sql |

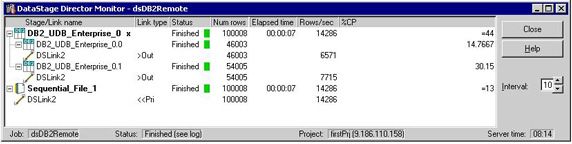

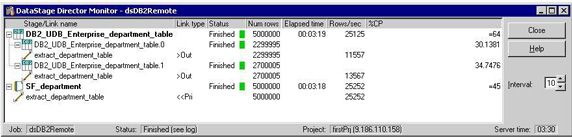

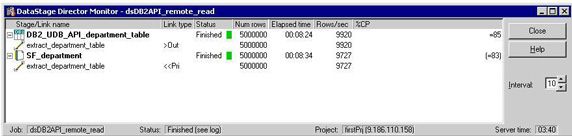

Then, we execute these 2 jobs against 100,000, 1M and 5M rows via DataStage Director and observe the result using the job monitor. The following screenshots are test results with DB2 Enterprise Stage and DB2 API Stage.

Figure 8. 100,000 records (DB2 Enterprise Stage)

Figure 9. 100,000 records (DB2 API stage)

Figure 10. 1,000,000 records (DB2 Enterprise Stage)

Figure 11. 1,000.000 records (DB2 API Stage)

Figure 12. 5,000,000 records (DB2 Enterprise Stage)

Figure 13. 5,000,000 records (DB2 API Stage)

Figure 14. Compare performance between Enterprise Stage and API Stage

In Figure 8, there are 2 nodes are executing ETL with DB2 Enterprise Stage, while with DB2 API Stage there is 1 node. The ETL processing of Enterprise Stage per second is above 2 times to API Stage. Furthermore, with data growth, Enterprise Stage has greater advantage because of parallel performance.

|

Limitation

DB2 Enterprise Stage has a great parallel performance over the other DB2 plug-in stages using a DB2 DPF environments, however, it requires the hardware and operating system of ETL server, and the DB2 nodes must be the same. Consequently, it’s not a replacement for other DB2 plug-in stages, especially in heterogeneous environments.

|

Conclusion

This article has described how to configure remote connectivity for DS/EE DB2 Enterprise Stage using step-by-step instructions. In addition, we provided a performance comparison between Enterprise Stage and DB2 API Stage using two DS/EE DataStage jobs.

Resources

- 1. Ascential Developer Site

- 2. DataStage Enterprise Edition Configure Parallel Remote DB2 Connectivity