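Building Hive on Spark

Background

Hive on Spark runs Hive on top of Spark: queries are executed by the Spark execution engine instead of MapReduce, following the same idea as Hive on Tez.

Since Hive 1.1, Hive on Spark has been part of the Hive codebase. It is developed on the spark branch (https://github.com/apache/hive/tree/spark), which is periodically merged into master.

Discussion and progress of Hive on Spark are tracked in https://issues.apache.org/jira/browse/HIVE-7292.

Hive on Spark design document: https://issues.apache.org/jira/secure/attachment/12652517/Hive-on-Spark.pdf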

Downloading the source

git clone https://github.com/apache/hive.git hive_on_spark

Building

cd hive_on_spark/

git branch -r

origin/HEAD -> origin/master

origin/HIVE-4115

origin/HIVE-8065

origin/beeline-cli

origin/branch-0.10

origin/branch-0.11

origin/branch-0.12

origin/branch-0.13

origin/branch-0.14

origin/branch-0.2

origin/branch-0.3

origin/branch-0.4

origin/branch-0.5

origin/branch-0.6

origin/branch-0.7

origin/branch-0.8

origin/branch-0.8-r2

origin/branch-0.9

origin/branch-1

origin/branch-1.0

origin/branch-1.0.1

origin/branch-1.1

origin/branch-1.1.1

origin/branch-1.2

origin/cbo

origin/hbase-metastore

origin/llap

origin/master

origin/maven

origin/next

origin/parquet

origin/ptf-windowing

origin/release-1.1

origin/spark

origin/spark-new

origin/spark2

origin/tez

origin/vectorization

git checkout origin/spark

git branch

* (detached from origin/spark)

Edit $HIVE_ON_SPARK/pom.xml:

Change the Spark version to 1.4.1:

<spark.version>1.4.1</spark.version>

Change the Hadoop version to 2.3.0-cdh5.1.0:

<hadoop-23.version>2.3.0-cdh5.1.0</hadoop-23.version>

Build command:

export MAVEN_OPTS="-Xmx2g -XX:MaxPermSize=512M -XX:ReservedCodeCacheSize=512m"

mvn clean package -Phadoop-2 -DskipTests

Ways to add the Spark dependency to Hive

Spark home: /home/cluster/apps/spark/spark-1.4.1

Hive home: /home/cluster/apps/hive_on_spark

1. Set the property ‘spark.home’ to point to the Spark installation:

hive> set spark.home=/home/cluster/apps/spark/spark-1.4.1;

2. Define the SPARK_HOME environment variable before starting Hive CLI/HiveServer2:

export SPARK_HOME=/home/cluster/apps/spark/spark-1.4.1

3. Set the spark-assembly jar on the Hive auxpath:

hive --auxpath /home/cluster/apps/spark/spark-1.4.1/lib/spark-assembly-*.jar

4. Add the spark-assembly jar for the current user session:

hive> add jar /home/cluster/apps/spark/spark-1.4.1/lib/spark-assembly-*.jar;

5. Link the spark-assembly jar to $HIVE_HOME/lib.
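Option 5 is easy to script. The sketch below assumes the Spark 1.x binary layout, where a single spark-assembly-*.jar sits under $SPARK_HOME/lib; the helper name link_spark_assembly is hypothetical, and it refuses to guess when zero or several assembly jars are present.

```shell
#!/bin/sh
# Hypothetical helper for option 5: symlink the spark-assembly jar of a
# Spark 1.x binary distribution into <hive_home>/lib.
# Usage: link_spark_assembly <spark_home> <hive_home>
link_spark_assembly() {
  spark_home=$1
  hive_home=$2
  found=""
  count=0
  for jar in "$spark_home"/lib/spark-assembly-*.jar; do
    # When nothing matches, the shell leaves the pattern as-is; skip it.
    [ -e "$jar" ] || continue
    found=$jar
    count=$((count + 1))
  done
  # Refuse to guess if there is no jar or more than one.
  if [ "$count" -ne 1 ]; then
    echo "expected 1 spark-assembly jar in $spark_home/lib, found $count" >&2
    return 1
  fi
  # -s: symbolic link; -f: replace an existing link of the same name.
  ln -sf "$found" "$hive_home/lib/$(basename "$found")"
}
```

With the paths used in this post: link_spark_assembly /home/cluster/apps/spark/spark-1.4.1 /home/cluster/apps/hive_on_spark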

Errors that may occur when starting Hive:

[ERROR] Terminal initialization failed; falling back to unsupported

java.lang.IncompatibleClassChangeError: Found class jline.Terminal, but interface was expected

at jline.TerminalFactory.create(TerminalFactory.java:101)

at jline.TerminalFactory.get(TerminalFactory.java:158)

at jline.console.ConsoleReader.<init>(ConsoleReader.java:229)

at jline.console.ConsoleReader.<init>(ConsoleReader.java:221)

at jline.console.ConsoleReader.<init>(ConsoleReader.java:209)

at org.apache.hadoop.hive.cli.CliDriver.getConsoleReader(CliDriver.java:773)

at org.apache.hadoop.hive.cli.CliDriver.executeDriver(CliDriver.java:715)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:675)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:615)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.hadoop.util.RunJar.main(RunJar.java:212)

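Exception in thread "main" java.lang.IncompatibleClassChangeError: Found class jline.Terminal, but interface was expected

Fix (the Hadoop lib directory ships an old jline 0.9.x that shadows the jline 2 used by Hive; this setting puts Hive's jars ahead of Hadoop's on the classpath):

export HADOOP_USER_CLASSPATH_FIRST=true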
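To avoid exporting the variable in every new shell, it can be placed in hive-env.sh, which Hive's launcher scripts typically source from the conf directory when the file exists (the distribution ships only hive-env.sh.template, so the file may need to be created). A minimal sketch; the helper name and the target path are assumptions.

```shell
#!/bin/sh
# Hypothetical helper: make the jline fix permanent by appending the export
# to an env file such as $HIVE_HOME/conf/hive-env.sh (path is an assumption).
# The line is only appended once, so running the helper again is harmless.
persist_classpath_first() {
  env_file=$1
  line='export HADOOP_USER_CLASSPATH_FIRST=true'
  # -q: quiet, -x: match the whole line, -F: fixed string, not a regex
  if [ -f "$env_file" ] && grep -qxF "$line" "$env_file"; then
    return 0
  fi
  printf '%s\n' "$line" >> "$env_file"
}
```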

Fixes for errors in other scenarios are listed at: https://cwiki.apache.org/confluence/display/Hive/Hive+on+Spark%3A+Getting+Started

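The spark.eventLog.dir property must also be set, for example:

hive> set spark.eventLog.dir=hdfs://master:8020/directory;

Otherwise queries keep failing with an error saying that a directory such as /tmp/spark-events (Spark's default event log location) does not exist. The directory you configure must already exist as well.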
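As the error above suggests, the event log directory has to exist before a query runs, so it can be created ahead of time. A minimal sketch, assuming the hdfs command is on PATH for hdfs:// URIs; the helper name ensure_event_log_dir is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: create the directory named by spark.eventLog.dir.
# hdfs:// URIs go through the HDFS shell; file:// URIs and plain paths
# are created on the local filesystem.
ensure_event_log_dir() {
  dir=$1
  case "$dir" in
    hdfs://*) hdfs dfs -mkdir -p "$dir" ;;
    file://*) mkdir -p "${dir#file://}" ;;  # strip the scheme, keep the path
    *)        mkdir -p "$dir" ;;
  esac
}
```

For the value above: ensure_event_log_dir hdfs://master:8020/directory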

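After starting Hive, set the execution engine to spark:

hive> set hive.execution.engine=spark;

Then set the Spark master (where the Spark application runs), either a standalone cluster:

hive> set spark.master=spark://master:7077;

or YARN:

hive> set spark.master=yarn;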
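The same settings can be passed on the command line instead of typed interactively, using the Hive CLI's --hiveconf and -e options. A minimal sketch with a hypothetical wrapper name; it assumes that spark.* properties given through --hiveconf are picked up the same way as with set.

```shell
#!/bin/sh
# Hypothetical wrapper: run one HiveQL statement on the Spark engine,
# selecting the engine and master via --hiveconf instead of "set".
# Usage: hive_on_spark_query <spark_master> <query>
hive_on_spark_query() {
  master=$1
  query=$2
  hive --hiveconf hive.execution.engine=spark \
       --hiveconf spark.master="$master" \
       -e "$query"
}
```

For example: hive_on_spark_query spark://master:7077 "select city_id, count(*) c from city_info group by city_id order by c desc limit 5"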

Configuring Spark application properties for Hive

These properties can be set either in a spark-defaults.conf file on Hive's classpath or in hive-site.xml, for example:

spark.master=<Spark Master URL>

spark.eventLog.enabled=true

spark.executor.memory=512m

spark.serializer=org.apache.spark.serializer.KryoSerializer

Properties commonly tuned for performance:

spark.executor.memory: amount of memory to use per executor process.

spark.executor.cores: number of cores per executor.

spark.yarn.executor.memoryOverhead: amount of off-heap memory (in MB) allocated per executor when running on YARN.

spark.executor.instances: number of executors assigned to each application.

spark.driver.memory: amount of memory assigned to the Remote Spark Context (RSC); 4 GB is recommended.

spark.yarn.driver.memoryOverhead: 400 MB is recommended.

For details on these parameters, see: https://cwiki.apache.org/confluence/display/Hive/Hive+on+Spark%3A+Getting+Started
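If you go the spark-defaults.conf route, the file uses Spark's whitespace-separated "key value" format. A minimal sketch that writes the example values from this post plus spark.eventLog.dir (which the post says must be set); the helper name is hypothetical, and using $HIVE_HOME/conf as a directory on Hive's classpath is an assumption about your setup.

```shell
#!/bin/sh
# Hypothetical helper: write the example Spark properties for Hive into
# <conf_dir>/spark-defaults.conf (conf_dir assumed to be on Hive's classpath).
# Usage: write_spark_defaults <conf_dir> <spark_master> <event_log_dir>
write_spark_defaults() {
  conf_dir=$1
  master=$2
  event_log_dir=$3
  # Overwrites any existing spark-defaults.conf in conf_dir.
  cat > "$conf_dir/spark-defaults.conf" <<EOF
spark.master            $master
spark.eventLog.enabled  true
spark.eventLog.dir      $event_log_dir
spark.executor.memory   512m
spark.serializer        org.apache.spark.serializer.KryoSerializer
EOF
}
```

For the cluster in this post: write_spark_defaults /home/cluster/apps/hive_on_spark/conf spark://master:7077 hdfs://master:8020/directory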

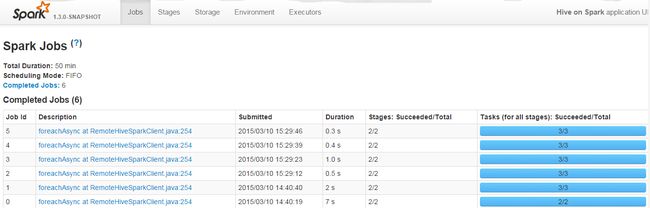

After a SQL statement runs, its jobs, stages, and other details can be viewed in the monitoring web UI.

hive (default)> select city_id, count(*) c from city_info group by city_id order by c desc limit 5;

Query ID = spark_20150309173838_444cb5b1-b72e-4fc3-87db-4162e364cb1e

Total jobs = 1

Launching Job 1 out of 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

state = SENT

state = STARTED

state = STARTED

state = STARTED

state = STARTED

Query Hive on Spark job[0] stages:

1

Status: Running (Hive on Spark job[0])

Job Progress Format

CurrentTime StageId_StageAttemptId: SucceededTasksCount(+RunningTasksCount-FailedTasksCount)/TotalTasksCount [StageCost]

2015-03-09 17:38:11,822 Stage-0_0: 0(+1)/1 Stage-1_0: 0/1 Stage-2_0: 0/1

state = STARTED

state = STARTED

state = STARTED

2015-03-09 17:38:14,845 Stage-0_0: 0(+1)/1 Stage-1_0: 0/1 Stage-2_0: 0/1

state = STARTED

state = STARTED

2015-03-09 17:38:16,861 Stage-0_0: 1/1 Finished Stage-1_0: 0(+1)/1 Stage-2_0: 0/1

state = SUCCEEDED

2015-03-09 17:38:17,867 Stage-0_0: 1/1 Finished Stage-1_0: 1/1 Finished Stage-2_0: 1/1 Finished

Status: Finished successfully in 10.07 seconds

OK

city_id c

-1000 22826

-10 17294

-20 10608

-1 6186

4158

Time taken: 18.417 seconds, Fetched: 5 row(s)

Respect original work; reposting is not permitted. http://blog.csdn.net/stark_summer/article/details/48466749