HDFS的副本存放策略——ReplicationTargetChooser

HDFS作为Hadoop中的一个分布式文件系统,而且是专门为它的MapReduce设计,所以HDFS除了必须满足自己作为分布式文件系统的高可靠性外,还必须为MapReduce提供高效的读写性能,那么HDFS是如何做到这些的呢?首先,HDFS将每一个文件的数据进行分块存储,同时每一个数据块又保存有多个副本,这些数据块副本分布在不同的机器节点上,这种数据分块存储+副本的策略是HDFS保证可靠性和性能的关键,这是因为:一.文件分块存储之后按照数据块来读,提高了文件随机读的效率和并发读的效率;二.保存数据块若干副本到不同的机器节点实现可靠性的同时也提高了同一数据块的并发读效率;三.数据分块是非常切合MapReduce中任务切分的思想。在这里,副本的存放策略又是HDFS实现高可靠性和搞性能的关键。

HDFS采用一种称为机架感知的策略来改进数据的可靠性、可用性和网络带宽的利用率。通过一个机架感知的过程,NameNode可以确定每一个DataNode所属的机架id(这也是NameNode采用NetworkTopology数据结构来存储数据节点的原因,也是我在前面详细介绍NetworkTopology类的原因)。一个简单但没有优化的策略就是将副本存放在不同的机架上,这样可以防止当整个机架失效时数据的丢失,并且允许读数据的时候充分利用多个机架的带宽。这种策略设置可以将副本均匀分布在集群中,有利于当组件失效的情况下的均匀负载,但是,因为这种策略的一个写操作需要传输到多个机架,这增加了写的代价。

在大多数情况下,副本系数是3,HDFS的存放策略是将一个副本存放在本地机架节点上,一个副本存放在同一个机架的另一个节点上,最后一个副本放在不同机架的节点上。这种策略减少了机架间的数据传输,提高了写操作的效率。机架的错误远远比节点的错误少,所以这种策略不会影响到数据的可靠性和可用性。与此同时,因为数据块只存放在两个不同的机架上,所以此策略减少了读取数据时需要的网络传输总带宽。在这种策略下,副本并不是均匀的分布在不同的机架上:三分之一的副本在一个节点上,三分之二的副本在一个机架上,其它副本均匀分布在剩下的机架中,这种策略在不损害数据可靠性和读取性能的情况下改进了写的性能。下面就来看看HDFS是如何来具体实现这一策略的。

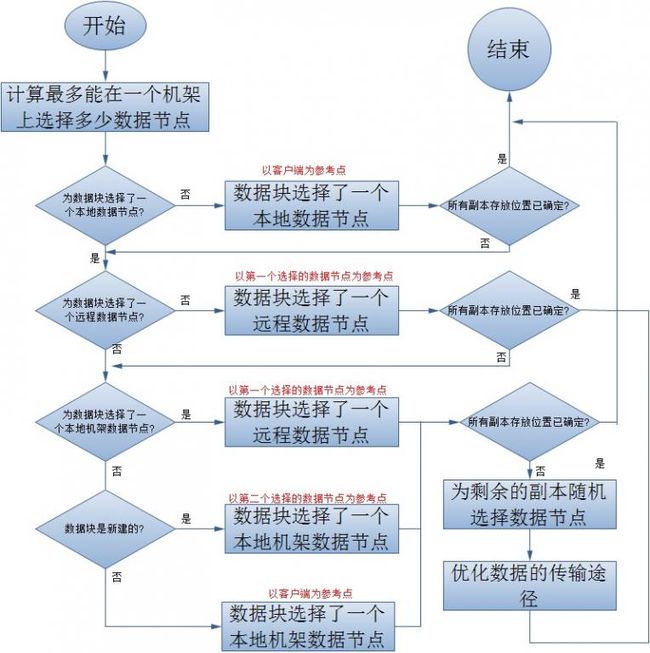

NameNode是通过类来为每一分数据块选择副本的存放位置的,这个ReplicationTargetChooser的一般处理过程如下:

上面的流程图详细的描述了Hadoop-0.2.0版本中副本的存放位置的选择策略,当然,这当中还有一些细节问题,如:如何选择一个本地数据节点,如何选择一个本地机架数据节点等,所以下面我还将继续展开讨论。

1.选择一个本地节点

这里所说的本地节点是相对于客户端来说的,也就是说某一个用户正在用一个客户端来向HDFS中写数据,如果该客户端上有数据节点,那么就应该最优先考虑把正在写入的数据的一个副本保存在这个客户端的数据节点上,它即被看做是本地节点,但是如果这个客户端上的数据节点空间不足或者是当前负载过重,则应该从该数据节点所在的机架中选择一个合适的数据节点作为此时这个数据块的本地节点。另外,如果客户端上没有一个数据节点的话,则从整个集群中随机选择一个合适的数据节点作为 此时这个数据块的本地节点。那么,如何判定一个数据节点合不合适呢,它是通过isGoodTarget方法来确定的:

/**

* 为一个Block的副本选择本地存放位置

*/

private DatanodeDescriptor chooseLocalNode(DatanodeDescriptor localMachine, List<Node> excludedNodes, long blocksize, int maxNodesPerRack, List<DatanodeDescriptor> results) throws NotEnoughReplicasException {

// if no local machine, randomly choose one node

if (localMachine == null)

return chooseRandom(NodeBase.ROOT, excludedNodes, blocksize, maxNodesPerRack, results);

// otherwise try local machine first

if (!excludedNodes.contains(localMachine)) {

excludedNodes.add(localMachine);

if (isGoodTarget(localMachine, blocksize, maxNodesPerRack, false, results)) {

results.add(localMachine);

return localMachine;

}

}

// try a node on local rack

return chooseLocalRack(localMachine, excludedNodes, blocksize, maxNodesPerRack, results);

}

private boolean isGoodTarget(DatanodeDescriptor node, long blockSize, int maxTargetPerLoc, boolean considerLoad, List<DatanodeDescriptor> results) {

Log logr = FSNamesystem.LOG;

// 节点不可用了

if (node.isDecommissionInProgress() || node.isDecommissioned()) {

logr.debug("Node "+NodeBase.getPath(node)+ " is not chosen because the node is (being) decommissioned");

return false;

}

long remaining = node.getRemaining() - (node.getBlocksScheduled() * blockSize);

// 节点剩余的容量够不够

if (blockSize* FSConstants.MIN_BLOCKS_FOR_WRITE>remaining) {

logr.debug("Node "+NodeBase.getPath(node)+ " is not chosen because the node does not have enough space");

return false;

}

// 节点当前的负载情况

if (considerLoad) {

double avgLoad = 0;

int size = clusterMap.getNumOfLeaves();

if (size != 0) {

avgLoad = (double)fs.getTotalLoad()/size;

}

if (node.getXceiverCount() > (2.0 * avgLoad)) {

logr.debug("Node "+NodeBase.getPath(node)+ " is not chosen because the node is too busy");

return false;

}

}

// 该节点坐在的机架被选择存放当前数据块副本的数据节点过多

String rackname = node.getNetworkLocation();

int counter=1;

for(Iterator<DatanodeDescriptor> iter = results.iterator(); iter.hasNext();) {

Node result = iter.next();

if (rackname.equals(result.getNetworkLocation())) {

counter++;

}

}

if (counter>maxTargetPerLoc) {

logr.debug("Node "+NodeBase.getPath(node)+ " is not chosen because the rack has too many chosen nodes");

return false;

}

return true;

}

2.选择一个本地机架节点

实际上,选择本地节假节点和远程机架节点都需要以一个节点为参考,这样才是有意义,所以在上面的流程图中,我用红色字体标出了参考点。那么,ReplicationTargetChooser是如何根据一个节点选择它的一个本地机架节点呢?

这个过程很简单,如果参考点为空,则 从整个集群中随机选择一个合适的数据节点作为 此时的本地机架节点;否则就从参考节点所在的机架中 随机选择一个合适的数据节点作为此时的本地机架节点,若这个集群中没有合适的数据节点的话,则从已选择的数据节点中找出一个作为新的参考点,如果找到了一个新的参考点,则从这个 新的参考点在的机架中随机选择一个合适的数据节点作为此时的本地机架节点;否则从整个集群中随机选择一个合适的数据节点作为此时的本地机架节点。如果新的参考点所在的机架中仍然没有合适的数据节点,则只能从整个集群中随机选择一个合适的数据节点作为此时的本地机架节点了。

private DatanodeDescriptor chooseLocalRack(DatanodeDescriptor localMachine, List<Node> excludedNodes, long blocksize, int maxNodesPerRack, List<DatanodeDescriptor> results)throws NotEnoughReplicasException {

// 如果参考点为空,则从整个集群中随机选择一个合适的数据节点作为此时的本地机架节点

if (localMachine == null) {

return chooseRandom(NodeBase.ROOT, excludedNodes, blocksize, maxNodesPerRack, results);

}

//从参考节点所在的机架中随机选择一个合适的数据节点作为此时的本地机架节点

try {

return chooseRandom(localMachine.getNetworkLocation(), excludedNodes, blocksize, maxNodesPerRack, results);

} catch (NotEnoughReplicasException e1) {

//若这个集群中没有合适的数据节点的话,则从已选择的数据节点中找出一个作为新的参考点

DatanodeDescriptor newLocal=null;

for(Iterator<DatanodeDescriptor> iter=results.iterator(); iter.hasNext();) {

DatanodeDescriptor nextNode = iter.next();

if (nextNode != localMachine) {

newLocal = nextNode;

break;

}

}

if (newLocal != null) {//找到了一个新的参考点

try {

//从这个新的参考点在的机架中随机选择一个合适的数据节点作为此时的本地机架节点

return chooseRandom(newLocal.getNetworkLocation(), excludedNodes, blocksize, maxNodesPerRack, results);

} catch(NotEnoughReplicasException e2) {

//新的参考点所在的机架中仍然没有合适的数据节点,从整个集群中随机选择一个合适的数据节点作为此时的本地机架节点

return chooseRandom(NodeBase.ROOT, excludedNodes, blocksize, maxNodesPerRack, results);

}

} else {

//从整个集群中随机选择一个合适的数据节点作为此时的本地机架节点

return chooseRandom(NodeBase.ROOT, excludedNodes, blocksize, maxNodesPerRack, results);

}

}

}

3.选择一个远程机架节点选择一个远程机架节点就是随机的选择一个合适的不在参考点坐在的机架中的数据节点,如果没有找到这个合适的数据节点的话,就只能从参考点所在的机架中选择一个合适的数据节点作为此时的远程机架节点了。

private void chooseRemoteRack(int numOfReplicas, DatanodeDescriptor localMachine, List<Node> excludedNodes, long blocksize, int maxReplicasPerRack, List<DatanodeDescriptor> results)

throws NotEnoughReplicasException {

int oldNumOfReplicas = results.size();

// randomly choose one node from remote racks

try {

chooseRandom(numOfReplicas, "~"+localMachine.getNetworkLocation(), excludedNodes, blocksize, maxReplicasPerRack, results);

} catch (NotEnoughReplicasException e) {

chooseRandom(numOfReplicas-(results.size()-oldNumOfReplicas), localMachine.getNetworkLocation(), excludedNodes, blocksize, maxReplicasPerRack, results);

}

}

private void chooseRandom(int numOfReplicas, String nodes, List<Node> excludedNodes, long blocksize, int maxNodesPerRack, List<DatanodeDescriptor> results) throws NotEnoughReplicasException {

boolean toContinue = true;

do {

DatanodeDescriptor[] selectedNodes = chooseRandom(numOfReplicas, nodes, excludedNodes);

if (selectedNodes.length < numOfReplicas) {

toContinue = false;

}

for(int i=0; i<selectedNodes.length; i++) {

DatanodeDescriptor result = selectedNodes[i];

if (isGoodTarget(result, blocksize, maxNodesPerRack, results)) {

numOfReplicas--;

results.add(result);

}

} // end of for

} while (numOfReplicas>0 && toContinue);

if (numOfReplicas>0) {

throw new NotEnoughReplicasException( "Not able to place enough replicas");

}

}

4.随机选择若干数据节点

这里的随机随机选择若干个数据节点实际上指的是从某一个范围内随机的选择若干个节点,它的实现需要利用前面提到过的 NetworkTopology数据结构。随机选择所使用的范围本质上指的是一个路径,这个路径表示的是NetworkTopology所表示的树状网络拓扑图中的一个非叶子节点,随机选择针对的就是这个节点的所有叶子子节点,因为所有的数据节点都被表示成了这个树状网络拓扑图中的叶子节点。

private DatanodeDescriptor[] chooseRandom(int numOfReplicas, String nodes, List<Node> excludedNodes) {

List<DatanodeDescriptor> results = new ArrayList<DatanodeDescriptor>();

int numOfAvailableNodes = clusterMap.countNumOfAvailableNodes(nodes, excludedNodes);

numOfReplicas = (numOfAvailableNodes<numOfReplicas)? numOfAvailableNodes:numOfReplicas;

while(numOfReplicas > 0) {

DatanodeDescriptor choosenNode = (DatanodeDescriptor)(clusterMap.chooseRandom(nodes));

if (!excludedNodes.contains(choosenNode)) {

results.add(choosenNode);

excludedNodes.add(choosenNode);

numOfReplicas--;

}

}

return (DatanodeDescriptor[])results.toArray(new DatanodeDescriptor[results.size()]);

}

5.优化数据传输的路径

以前说过, HDFS对于Block的副本copy采用的是流水线作业的方式:client把数据Block只传给一个DataNode,这个DataNode收到Block之后,传给下一个DataNode,依次类推,...,最后一个DataNode就不需要下传数据Block了。所以,在为一个数据块确定了所有的副本存放的位置之后,就需要确定这种数据节点之间流水复制的顺序,这种顺序应该使得数据传输时花费的网络延时最小。ReplicationTargetChooser用了非常简单的方法来考量的,大家一看便知:

private DatanodeDescriptor[] getPipeline( DatanodeDescriptor writer, DatanodeDescriptor[] nodes) {

if (nodes.length==0) return nodes;

synchronized(clusterMap) {

int index=0;

if (writer == null || !clusterMap.contains(writer)) {

writer = nodes[0];

}

for(;index<nodes.length; index++) {

DatanodeDescriptor shortestNode = nodes[index];

int shortestDistance = clusterMap.getDistance(writer, shortestNode);

int shortestIndex = index;

for(int i=index+1; i<nodes.length; i++) {

DatanodeDescriptor currentNode = nodes[i];

int currentDistance = clusterMap.getDistance(writer, currentNode);

if (shortestDistance>currentDistance) {

shortestDistance = currentDistance;

shortestNode = currentNode;

shortestIndex = i;

}

}

//switch position index & shortestIndex

if (index != shortestIndex) {

nodes[shortestIndex] = nodes[index];

nodes[index] = shortestNode;

}

writer = shortestNode;

}

}

return nodes;

}

6.ReplicationTargetChooser的选择策略

1).本机DataNode节点(如果客户端存在一个DataNode节点的话,就是该DataNode节点;否则,随机选择一个DataNode节点);

2).远程DataNode节点(与“本机DataNode节点”);

3).本rack下的另一个DataNode节点(与“本机DataNode节点”);

4).随机选择其它的DataNode节点。

其具体实现的源代码如下:

private DatanodeDescriptor chooseTarget(int numOfReplicas, DatanodeDescriptor writer, List<Node> excludedNodes, long blocksize, int maxNodesPerRack, List<DatanodeDescriptor> results) {

if (numOfReplicas == 0 || clusterMap.getNumOfLeaves()==0) {

return writer;

}

int numOfResults = results.size();

boolean newBlock = (numOfResults==0);

if (writer == null && !newBlock) {

writer = (DatanodeDescriptor)results.get(0);

}

try {

switch(numOfResults) {

case 0:

LOG.debug("Try to choose a local DataNode for a replication of block..");

writer = chooseLocalNode(writer, excludedNodes, blocksize, maxNodesPerRack, results);

if (--numOfReplicas == 0) {

break;

}

case 1:

LOG.debug("Try to choose a remote DataNode for a replication of block..");

chooseRemoteRack(1, results.get(0), excludedNodes, blocksize, maxNodesPerRack, results);

if (--numOfReplicas == 0) {

break;

}

case 2:

LOG.debug("Try to choose a local rack DataNode for a replication of block..");

if (clusterMap.isOnSameRack(results.get(0), results.get(1))) {

chooseRemoteRack(1, results.get(0), excludedNodes, blocksize, maxNodesPerRack, results);

} else if (newBlock){

chooseLocalRack(results.get(1), excludedNodes, blocksize, maxNodesPerRack, results);

} else {

chooseLocalRack(writer, excludedNodes, blocksize, maxNodesPerRack, results);

}

if (--numOfReplicas == 0) {

break;

}

default:

LOG.debug("Try to randomly choose a local DataNode for a replication of block..");

chooseRandom(numOfReplicas, NodeBase.ROOT, excludedNodes, blocksize, maxNodesPerRack, results);

}

} catch (NotEnoughReplicasException e) {

FSNamesystem.LOG.warn("Not able to place enough replicas, still in need of " + numOfReplicas);

}

return writer;

}

可惜的是,HDFS目前并没有把副本存放策略的实现开放给用户,也就是用户无法根据自己的实际需求来指定文件的数据块存放的具体位置。例如:我们可以将有关系的两个文件放到相同的数据节点上,这样在进行map-reduce的时候,其工作效率会大大的提高。但是,又考虑到副本存放策略是与集群负载均衡休戚相关的,所以要是真的把负载存放策略交给用户来实现的话,对用户来说是相当负载的,所以我只能说Hadoop目前还不算成熟,尚需大踏步发展。