HBase 0.96.0 + Hadoop 2.2.0 Installation

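Key points:

A: On virtual machines, node clocks easily drift apart if the time zone is not set correctly, so synchronize the time on every node first.

B: Each RegionServer keeps roughly (regions) × (column families) × (StoreFiles) files open, so the system must allow a process to open a large number of files. Linux limits how many files a single process may open (1024 by default), so raise the open-file limit for the user that runs HBase.

C: HBase ships with Hadoop jars, but they are only meant for standalone mode. In distributed mode, the Hadoop jars in HBase must match those of the Hadoop cluster, so you may have to replace them.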
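The estimate in point B is simple arithmetic, and a minimal sketch shows how quickly it passes the 1024 default. The counts below (200 regions, 2 column families, 3 StoreFiles each) are illustrative placeholders, not measurements from this cluster, and the product ignores WAL files, sockets and jars, so treat it as a floor.

```shell
#!/usr/bin/env bash
# Rough floor for files one RegionServer holds open:
# regions x column families x StoreFiles per family.
# WALs, sockets and jar files come on top, so leave generous headroom.
estimate_open_files() {   # estimate_open_files <regions> <families> <storefiles>
  echo $(( $1 * $2 * $3 ))
}

# Succeeds if a nofile limit covers the estimate ("unlimited" always does).
limit_covers() {          # limit_covers <limit> <needed>
  [ "$1" = "unlimited" ] || [ "$1" -ge "$2" ]
}

# Placeholder numbers, not taken from a real cluster.
needed=$(estimate_open_files 200 2 3)
if limit_covers 1024 "$needed"; then
  echo "1024 is enough for $needed files"
else
  echo "1024 is too low for $needed files"
fi
```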

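Plan:

A: Hadoop cluster (NameNode: hadoop1; DataNodes: hadoop3, hadoop4, hadoop5)

Installed from a package I compiled myself; for the installation steps, see my blog post "hadoop2.2.0 source compilation (CentOS6.4)".

B: ZooKeeper cluster (hadoop1, hadoop2, hadoop3, hadoop4, hadoop5)

For the installation steps, see my blog post "zookeeper3.4.5 installation notes".

C: HBase cluster (Master: hadoop1; RegionServers: hadoop2, hadoop3, hadoop4, hadoop5)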

1: System settings on all nodes (shown for hadoop1; repeat on every node as root)

[root@hadoop1 ~]# ntpdate ntp.ubuntu.com
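Point A matters because the master compares clocks when a RegionServer reports in: a skew larger than hbase.master.maxclockskew (30000 ms by default) makes it reject that RegionServer. A minimal sketch for checking offsets after ntpdate, assuming passwordless ssh from hadoop1 to every node (which the scp and start scripts in this guide already rely on); clock_offset and check_clocks are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Allowed skew in seconds; HBase's hbase.master.maxclockskew defaults to 30000 ms.
MAX_SKEW=30

# Absolute difference between two epoch timestamps, in seconds.
clock_offset() {   # clock_offset <epoch_a> <epoch_b>
  local d=$(( $2 - $1 ))
  echo "${d#-}"      # drop a leading minus sign
}

# Compare each host's clock with this one over ssh (second resolution;
# ssh latency adds a little noise).
check_clocks() {   # check_clocks host...
  local host now remote off
  for host in "$@"; do
    now=$(date +%s)
    if ! remote=$(ssh "$host" date +%s); then
      echo "$host: ssh failed"
      continue
    fi
    off=$(clock_offset "$now" "$remote")
    if [ "$off" -gt "$MAX_SKEW" ]; then
      echo "$host: off by ${off}s (limit ${MAX_SKEW}s)"
    else
      echo "$host: off by ${off}s, ok"
    fi
  done
}

# Usage on hadoop1:
#   check_clocks hadoop2 hadoop3 hadoop4 hadoop5
```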

[root@hadoop1 ~]# vi /etc/security/limits.conf

hadoop - nofile 32768

hadoop soft nproc 32000

hadoop hard nproc 32000

[root@hadoop1 ~]# vi /etc/pam.d/login

session required pam_limits.so
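Because pam_limits applies limits.conf at login, the new values only show up in a fresh session of the hadoop user. A minimal sketch for verifying them, assuming bash (for `ulimit -u`) and the 32768/32000 values set above; meets, report and check_limits are hypothetical names.

```shell
#!/usr/bin/env bash
# True if a limit value reaches the minimum ("unlimited" always does).
meets() {   # meets <current-limit> <minimum>
  [ "$1" = "unlimited" ] || [ "$1" -ge "$2" ]
}

# Print one line per limit; return 1 if it is too low.
report() {  # report <label> <current-limit> <minimum>
  if meets "$2" "$3"; then
    echo "$1=$2 ok"
  else
    echo "$1=$2 below $3"
    return 1
  fi
}

# Check soft and hard limits for open files and user processes.
check_limits() {   # check_limits <min_nofile> <min_nproc>
  local rc=0
  report "nofile(soft)" "$(ulimit -Sn)" "$1" || rc=1
  report "nofile(hard)" "$(ulimit -Hn)" "$1" || rc=1
  report "nproc(soft)"  "$(ulimit -Su)" "$2" || rc=1
  report "nproc(hard)"  "$(ulimit -Hu)" "$2" || rc=1
  return "$rc"
}

# Usage, in a new login shell as hadoop:
#   check_limits 32768 32000
```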

2: Download and extract HBase, then give ownership of the directory to hadoop:hadoop

[root@hadoop1 hadoop]# tar zxf hbase-0.96.0-hadoop2-bin.tar.gz

[root@hadoop1 hadoop]# mv hbase-0.96.0-hadoop2 /app/hadoop/hbase096_hadoop2

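Under the Hadoop 2.2.0 installation directory /app/hadoop/hadoop2/share/hadoop, create a new directory hbase_lib. Then, for each hadoop*.jar in the HBase lib directory /app/hadoop/hbase096_hadoop2/lib, find the corresponding file in the sibling directories of hbase_lib and copy it into hbase_lib. Once all of them have been found, check them carefully against the hadoop*.jar files in hbase096_hadoop2/lib. Next, back up and delete the hadoop*.jar files in hbase096_hadoop2/lib, and copy the files from hbase_lib into it. In my case, 17 files were replaced.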
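The matching step above can be scripted as a dry run that only reports which Hadoop 2.2.0 jar would replace each hadoop*.jar in HBase's lib directory; the backup, delete and copy are still done by hand after reviewing the list. A sketch, assuming jar names follow the usual `artifact-version[-tests].jar` pattern; find_hadoop_jar_matches is a hypothetical name, and a jar reported as MISSING has no counterpart under share/hadoop and needs a manual decision.

```shell
#!/usr/bin/env bash
# Dry run: for every hadoop*.jar shipped in HBase's lib directory, print the
# same artifact found under Hadoop's share/hadoop tree (first match by path).
# Skips *-sources.jar files and anything already staged in hbase_lib.
find_hadoop_jar_matches() {   # find_hadoop_jar_matches <hbase_lib> <hadoop_share>
  local hbase_lib=$1 hadoop_share=$2
  local jar base artifact match
  for jar in "$hbase_lib"/hadoop*.jar; do
    [ -e "$jar" ] || continue                  # glob matched nothing
    base=$(basename "$jar")
    artifact=${base%%-[0-9]*}                  # hadoop-common-2.x.jar -> hadoop-common
    case $base in
      *-tests.jar)
        match=$(find "$hadoop_share" -type f -name "${artifact}-[0-9]*-tests.jar" \
                  ! -path '*/hbase_lib/*' | sort | head -n 1) ;;
      *)
        match=$(find "$hadoop_share" -type f -name "${artifact}-[0-9]*.jar" \
                  ! -name '*-tests.jar' ! -name '*-sources.jar' \
                  ! -path '*/hbase_lib/*' | sort | head -n 1) ;;
    esac
    printf '%s -> %s\n' "$base" "${match:-MISSING}"
  done
}

# Usage with this guide's paths:
#   find_hadoop_jar_matches /app/hadoop/hbase096_hadoop2/lib /app/hadoop/hadoop2/share/hadoop
```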

[root@hadoop1 hadoop]# chown -R hadoop:hadoop /app/hadoop/hbase096_hadoop2

3: Configuration

[hadoop@hadoop1 conf]$ vi hbase-env.sh

export JAVA_HOME=/usr/java/jdk1.7.0_21

#HBASE_CLASSPATH points to Hadoop's configuration directory

export HBASE_CLASSPATH=/app/hadoop/hadoop2/etc/hadoop

#false: use a standalone ZooKeeper cluster; true: use the ZooKeeper bundled with HBase

export HBASE_MANAGES_ZK=false
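Pointing HBASE_CLASSPATH at Hadoop's configuration directory is what lets HBase's HDFS client see settings from hdfs-site.xml (dfs.replication, for example). A small sanity check for the two paths above, meant to run on each node after the copy in step 4; check_hbase_env is a hypothetical name and it only checks that the files exist, not their contents.

```shell
#!/usr/bin/env bash
# Check that JAVA_HOME has a java binary and that the Hadoop conf dir named
# in HBASE_CLASSPATH holds the client config files HBase reads.
check_hbase_env() {   # check_hbase_env <java_home> <hadoop_conf_dir>
  local rc=0 f
  if [ -x "$1/bin/java" ]; then
    echo "java: $1/bin/java"
  else
    echo "missing: $1/bin/java"; rc=1
  fi
  for f in core-site.xml hdfs-site.xml; do
    if [ -r "$2/$f" ]; then
      echo "found: $2/$f"
    else
      echo "missing: $2/$f"; rc=1
    fi
  done
  return "$rc"
}

# Usage with the values above:
#   check_hbase_env /usr/java/jdk1.7.0_21 /app/hadoop/hadoop2/etc/hadoop
```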

[hadoop@hadoop1 conf]$ vi hbase-site.xml

<configuration>

<property>

<name>hbase.rootdir</name>

<value>hdfs://192.168.100.171:8000/hbase</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

<description>The mode the cluster will be in. Possible values are

false: standalone and pseudo-distributed setups with managed Zookeeper

true: fully-distributed with unmanaged Zookeeper Quorum (see hbase-env.sh)

</description>

</property>

<property>

<name>hbase.tmp.dir</name>

<value>/app/hadoop/hbase096_hadoop2/tmp</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>hadoop1,hadoop2,hadoop3,hadoop4,hadoop5</value>

<description>Comma separated list of servers in the ZooKeeper Quorum.

For example, "host1.mydomain.com,host2.mydomain.com,host3.mydomain.com".

By default this is set to localhost for local and pseudo-distributed modes

of operation. For a fully-distributed setup, this should be set to a full

list of ZooKeeper quorum servers. If HBASE_MANAGES_ZK is set in hbase-env.sh

this is the list of servers which we will start/stop ZooKeeper on.

</description>

</property>

<property>

<name>hbase.zookeeper.property.clientPort</name>

<value>2181</value>

<description>Property from ZooKeeper's config zoo.cfg.

The port at which the clients will connect.

</description>

</property>

<property>

<name>hbase.zookeeper.property.dataDir</name>

<value>/app/hadoop/hbase096_hadoop2/mydata</value>

<description>Property from ZooKeeper's config zoo.cfg.

The directory where the snapshot is stored.

</description>

</property>

</configuration>
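hbase.rootdir has to live on the cluster's HDFS, so its scheme, host and port should match fs.defaultFS in Hadoop's core-site.xml (port 8000 here presumably mirrors this cluster's own core-site.xml). A naive check, assuming core-site.xml sits in the HBASE_CLASSPATH directory above; get_prop and check_rootdir are hypothetical names, the XML handling is line-based rather than a real parser, and because it compares strings, a hostname on one side and an IP address on the other is reported as a mismatch even when both name the same machine.

```shell
#!/usr/bin/env bash
# Print the <value> of a property from a Hadoop-style *-site.xml file.
# Line-based and naive: assumes each <name> and <value> tag sits on one line.
get_prop() {   # get_prop <xml-file> <property-name>
  awk -v key="$2" '
    index($0, "<name>" key "</name>") { found = 1 }
    found && match($0, /<value>[^<]*<\/value>/) {
      print substr($0, RSTART + 7, RLENGTH - 15)   # strip <value> and </value>
      exit
    }
    /<\/property>/ { found = 0 }
  ' "$1"
}

# hbase.rootdir should sit on the filesystem named by fs.defaultFS.
check_rootdir() {   # check_rootdir <hbase-site.xml> <core-site.xml>
  local rootdir fs
  rootdir=$(get_prop "$1" hbase.rootdir)
  fs=$(get_prop "$2" fs.defaultFS)
  fs=${fs%/}
  echo "hbase.rootdir = $rootdir"
  echo "fs.defaultFS  = $fs"
  case $rootdir in
    "$fs"/*) echo "ok: rootdir is on the default filesystem" ;;
    *)       echo "mismatch: compare host and port by hand"; return 1 ;;
  esac
}

# Usage on hadoop1:
#   check_rootdir conf/hbase-site.xml /app/hadoop/hadoop2/etc/hadoop/core-site.xml
```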

[hadoop@hadoop1 conf]$ vi regionservers

hadoop2

hadoop3

hadoop4

hadoop5

4: Deployment

[hadoop@hadoop1 conf]$ cd ..

[hadoop@hadoop1 hbase096_hadoop2]$ mkdir tmp

[hadoop@hadoop1 hbase096_hadoop2]$ mkdir mydata

[hadoop@hadoop1 hbase096_hadoop2]$ cd ..

[hadoop@hadoop1 hadoop]$ scp -r hbase096_hadoop2 hadoop2:/app/hadoop/

[hadoop@hadoop1 hadoop]$ scp -r hbase096_hadoop2 hadoop3:/app/hadoop/

[hadoop@hadoop1 hadoop]$ scp -r hbase096_hadoop2 hadoop4:/app/hadoop/

[hadoop@hadoop1 hadoop]$ scp -r hbase096_hadoop2 hadoop5:/app/hadoop/
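The four scp commands above can also be driven from conf/regionservers, so the copy list stays in sync with the RegionServer list. A sketch, assuming it is run on hadoop1 from /app/hadoop with the same passwordless ssh; read_hosts and deploy_hbase are hypothetical names, and DRY_RUN=1 only prints the commands.

```shell
#!/usr/bin/env bash
# List hosts from a regionservers-style file, dropping comments, trailing
# whitespace and blank lines.
read_hosts() {   # read_hosts <file>
  sed 's/#.*$//; s/[[:space:]]*$//; /^$/d' "$1"
}

# Copy the HBase directory to every host in <hbase_dir>/conf/regionservers.
# Set DRY_RUN=1 to print the scp commands instead of running them.
deploy_hbase() {   # deploy_hbase <hbase_dir> <dest_parent>
  local host
  for host in $(read_hosts "$1/conf/regionservers"); do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo scp -r "$1" "$host:$2/"
    else
      scp -r "$1" "$host:$2/" || echo "copy to $host failed" >&2
    fi
  done
}

# Usage on hadoop1, from /app/hadoop:
#   DRY_RUN=1 deploy_hbase hbase096_hadoop2 /app/hadoop   # preview
#   deploy_hbase hbase096_hadoop2 /app/hadoop
```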

5: Startup

A: Start the Hadoop 2.2.0 cluster

[hadoop@hadoop1 hadoop2]$ sbin/start-dfs.sh

[hadoop@hadoop1 hadoop2]$ sbin/start-yarn.sh
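Before starting ZooKeeper and HBase, it helps to confirm that the Hadoop daemons are actually running. A sketch that reads `jps` output (pid and class name per line) and prints each expected daemon that is absent; the host roles are assumptions based on the plan above, with the ResourceManager assumed on hadoop1 because start-yarn.sh is launched there, and missing_daemons is a hypothetical name.

```shell
#!/usr/bin/env bash
# Read `jps` output on stdin and print each expected daemon that is not listed.
# Returns non-zero if anything is missing.
missing_daemons() {   # missing_daemons Name...
  local listing name rc=0
  listing=$(awk '{ print $2 }')          # keep only the class names
  for name in "$@"; do
    if ! printf '%s\n' "$listing" | grep -qxF "$name"; then
      echo "$name"
      rc=1
    fi
  done
  return "$rc"
}

# Usage (jps must be on PATH for non-interactive ssh, else use $JAVA_HOME/bin/jps):
#   jps | missing_daemons NameNode ResourceManager          # on hadoop1
#   ssh hadoop3 jps | missing_daemons DataNode NodeManager  # likewise hadoop4, hadoop5
```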

B: Start the ZooKeeper cluster

[hadoop@hadoop1 ~]$ /app/hadoop/zookeeper345/bin/zkServer.sh start

[hadoop@hadoop2 ~]$ /app/hadoop/zookeeper345/bin/zkServer.sh start

[hadoop@hadoop3 ~]$ /app/hadoop/zookeeper345/bin/zkServer.sh start

[hadoop@hadoop4 ~]$ /app/hadoop/zookeeper345/bin/zkServer.sh start

[hadoop@hadoop5 ~]$ /app/hadoop/zookeeper345/bin/zkServer.sh start
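Once all five servers are up, each should report itself as leader or follower; with five servers the ensemble keeps a quorum as long as at least three are running. A sketch that collects the Mode line from `zkServer.sh status` on every node, assuming passwordless ssh and the install path used above; parse_zk_mode and zk_roles are hypothetical names.

```shell
#!/usr/bin/env bash
ZK_HOME=/app/hadoop/zookeeper345

# Extract the role from `zkServer.sh status` output ("Mode: leader" etc.).
parse_zk_mode() {
  awk '/^Mode:/ { print $2; found = 1 } END { if (!found) print "not-running" }'
}

# Print each host's ZooKeeper role; stderr is merged in case the script's
# messages go there.
zk_roles() {   # zk_roles host...
  local host
  for host in "$@"; do
    printf '%s: %s\n' "$host" \
      "$(ssh "$host" "$ZK_HOME/bin/zkServer.sh status" 2>&1 | parse_zk_mode)"
  done
}

# Usage on hadoop1:
#   zk_roles hadoop1 hadoop2 hadoop3 hadoop4 hadoop5
```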

C: Start HBase

[hadoop@hadoop1 ~]$ cd /app/hadoop/hbase096_hadoop2

[hadoop@hadoop1 hbase096_hadoop2]$ bin/start-hbase.sh

D: Test

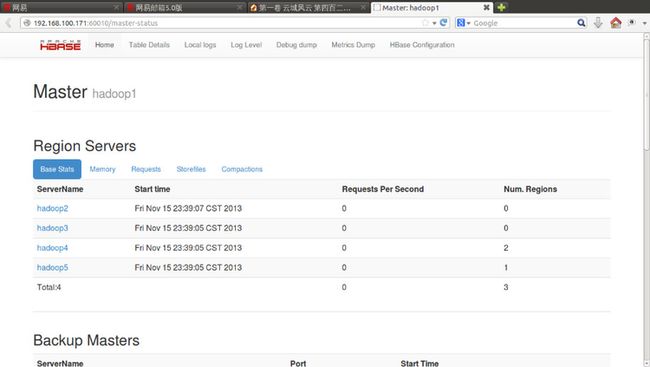
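One possible smoke test (not necessarily what the screenshot shows) is a create/put/scan/drop round trip in the HBase shell, fed on stdin so it runs non-interactively. A sketch, assuming it is run on hadoop1 as the hadoop user; the table name in the usage line is hypothetical and should not already exist.

```shell
#!/usr/bin/env bash
HBASE_HOME=/app/hadoop/hbase096_hadoop2

# Emit HBase shell commands for a create/put/scan/drop round trip.
smoke_commands() {   # smoke_commands <table>
  local t=$1
  cat <<EOF
status
create '$t', 'cf'
put '$t', 'row1', 'cf:a', 'value1'
scan '$t'
disable '$t'
drop '$t'
exit
EOF
}

# Pipe the commands into the shell; the scan output should contain a row1
# line with column=cf:a and value=value1.
run_smoke_test() {   # run_smoke_test <table>
  smoke_commands "$1" | "$HBASE_HOME/bin/hbase" shell
}

# Usage:
#   run_smoke_test hbase_smoke_test
```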

E: Browse

6: Tips

A: To have HBase coordinate the cluster with its embedded ZooKeeper instead, set the following in conf/hbase-env.sh:
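In HBase 0.96 the master's web UI listens on port 60010 by default (hbase.master.info.port) and each RegionServer's on port 60030 (hbase.regionserver.info.port). A sketch that prints the UI addresses for this cluster and probes them, assuming curl is installed and the default ports were not changed; ui_urls and probe_urls are hypothetical names.

```shell
#!/usr/bin/env bash
MASTER_PORT=60010         # hbase.master.info.port default in 0.96
REGIONSERVER_PORT=60030   # hbase.regionserver.info.port default in 0.96

# Print the web UI URL for the master and each RegionServer.
ui_urls() {   # ui_urls <master> <regionserver>...
  local master=$1 rs
  shift
  echo "http://$master:$MASTER_PORT/"
  for rs in "$@"; do
    echo "http://$rs:$REGIONSERVER_PORT/"
  done
}

# Read URLs on stdin and print the HTTP status code for each
# (curl reports 000 when it cannot connect).
probe_urls() {
  local url code
  while read -r url; do
    code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$url")
    echo "$code $url"
  done
}

# Usage:
#   ui_urls hadoop1 hadoop2 hadoop3 hadoop4 hadoop5 | probe_urls
```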

export HBASE_MANAGES_ZK=true

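In that case the ZooKeeper process is named HQuorumPeer, whereas a standalone ZooKeeper cluster runs as QuorumPeerMain. A standalone ZooKeeper cluster is recommended: it can also serve other distributed systems, and it makes HBase more robust.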
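The process names above make it easy to tell from `jps` which kind of ZooKeeper a node is running. A minimal sketch, assuming jps output on stdin; zk_flavor is a hypothetical name.

```shell
#!/usr/bin/env bash
# Classify how ZooKeeper runs on a node from `jps` output on stdin:
# HQuorumPeer    -> ZooKeeper started by HBase (HBASE_MANAGES_ZK=true)
# QuorumPeerMain -> standalone ZooKeeper (HBASE_MANAGES_ZK=false)
zk_flavor() {
  awk '
    $2 == "HQuorumPeer"    { hbase = 1 }
    $2 == "QuorumPeerMain" { standalone = 1 }
    END {
      if (hbase && standalone) { print "both" }
      else if (hbase)          { print "hbase-managed" }
      else if (standalone)     { print "standalone" }
      else                     { print "none" }
    }'
}

# Usage:
#   jps | zk_flavor
```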
