Install Openstack with Openvswitch Plugin of Quantum on rhel6.3 by RPM Way ( by quqi99 )


Author: Zhang Hua, published 2013-01-03

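Copyright notice: this article may be freely reposted, but any repost must credit the original source and the author with a hyperlink and must include this copyright notice.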

( http://blog.csdn.net/quqi99 )

Note: Run all of the commands below as root, not as an ordinary user.

This guide uses the Open vSwitch (OVS) plugin to set up a Quantum VLAN network environment.

A GRE environment is not used because the Red Hat kernel does not support it. See http://wiki.openstack.org/ConfigureOpenvswitch and https://lists.launchpad.net/openstack/msg18715.html, which says:

GRE tunneling with the openvswitch plugin requires OVS kernel modules that are not part of the Linux kernel source tree. These modules are not available in certain Linux distributions, including Fedora and RHEL. Tunneling must not be configured on systems without the needed kernel modules. The Open vSwitch web site indicates the OVS GRE tunnel support is being moved into the kernel source tree, but patch ports are not. Once GRE support is available, it should be possible to support tunneling by using veth devices instead of patch ports.

This environment consists of two nodes: two KVM VMs, one with two NICs and one with one NIC. You therefore need to create two virtual switches. One uses NAT to reach the Internet and the other is internal only. Do not start a DHCP server on either virtual switch.

Two Nodes:

node1: control, network, and compute node

Installs keystone, glance-api, glance-registry, nova-api, nova-scheduler, quantum-server, quantum-openvswitch-agent, quantum-l3-agent, and quantum-dhcp-agent.

node2: compute node

Installs nova-compute and quantum-openvswitch-agent.

Traffic path:

GRE mode:

(vNic------->br-int------>eth1)-----GRE TUNNEL-----------(eth1-----br-int------qr-1549a07f-3a)

vNic->br-int->patch-tun ->patch-int ->gre gre -> patch-int->patch-tun->br-int->qr-1549a07f-3a

VLAN mode:

vNic------->int-br-phy------->phy-br-phy------->br-phy br-phy------->phy-br-phy------->int-br-phy------->tapaec0c85b-09 (gw)

1, Networking

1.1, Network Info

The OpenStack Quantum network topology is equivalent to the nova-network topology, so you can refer to this picture from my developerWorks article, which shows the nova-network topology:

http://www.ibm.com/developerworks/cn/cloud/library/1209_zhanghua_openstacknetwork/image006.jpg

http://www.ibm.com/developerworks/cn/cloud/library/1209_zhanghua_openstacknetwork/

I prepared two VMs, node1 and node2, in KVM on my laptop. node1 has two NICs (eth0 and eth1), and node2 has one NIC (eth0).

node1:

br-ex --> eth0 192.168.100.108 --> kvm switch (default)

eth1 172.16.100.108 --> kvm switch (vSwitch1)

br-int

br-phy (not needed if you are using GRE mode)

node2:

eth0 172.16.100.109 --> kvm switch (vSwitch1)

br-int

br-phy (not needed if you are using GRE mode)

Note: NIC names change when you deploy a new VM from this KVM image, because the MAC address of the vNIC changes.

To prevent this, delete the file "/etc/udev/rules.d/70-persistent-net.rules" inside the VM, and then reboot the VM.

1.2, Install Openvswitch

yum install gcc make python-devel openssl-devel kernel-devel kernel-debug-devel tunctl

rpm -ivh http://rchgsa.ibm.com/projects/e/emsol/ccs/build/driver/300/openstack/latest-bld/x86_64/kmod-openvswitch-1.4.2-1.el6.x86_64.rpm

rpm -ivh http://rchgsa.ibm.com/projects/e/emsol/ccs/build/driver/300/openstack/latest-bld/x86_64/openvswitch-1.4.2-1.x86_64.rpm

Note: the openvswitch RPM above does not include brcompat.ko.

service openvswitch restart

1.3, Prepare two virtual switches in KVM (note: DHCP must be disabled on both)

default: forwarding to the physical device in NAT mode, 192.168.100.0/24

vSwitch1: isolated virtual network, 172.16.100.0/24

1.4, Network details of node1 and node2

node1:

[root@node1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-br-ex

DEVICE=br-ex

NM_CONTROLLED=no

ONBOOT=yes

DEVICETYPE=ovs

TYPE=OVSBridge

BOOTPROTO=static

IPADDR=192.168.100.108

GATEWAY=192.168.100.1

BROADCAST=192.168.100.255

NETMASK=255.255.255.0

DNS1=8.8.8.8

IPV6INIT=no
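In these static ifcfg files, BROADCAST and GATEWAY must agree with IPADDR and NETMASK. This is an optional sanity check and not part of the original procedure. The following minimal Python 3 sketch uses only the standard `ipaddress` module to derive the broadcast address from IPADDR/NETMASK and to confirm that the gateway lies in the same subnet. The function name `check_ifcfg` is hypothetical.

```python
import ipaddress


def check_ifcfg(values):
    """Return a list of problems for a dict of ifcfg KEY=value settings."""
    # ip_interface accepts "address/dotted-netmask" for IPv4
    iface = ipaddress.ip_interface(f"{values['IPADDR']}/{values['NETMASK']}")
    problems = []
    expected = str(iface.network.broadcast_address)
    if values.get("BROADCAST", expected) != expected:
        problems.append(f"BROADCAST should be {expected}")
    gateway = values.get("GATEWAY")
    if gateway and ipaddress.ip_address(gateway) not in iface.network:
        problems.append(f"GATEWAY {gateway} is outside {iface.network}")
    return problems


if __name__ == "__main__":
    br_ex = {"IPADDR": "192.168.100.108", "NETMASK": "255.255.255.0",
             "BROADCAST": "192.168.100.255", "GATEWAY": "192.168.100.1"}
    print(check_ifcfg(br_ex))  # []
```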

[root@node1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth0

DEVICE=eth0

#HWADDR=52:54:00:8C:04:42

NM_CONTROLLED=no

ONBOOT=yes

DEVICETYPE=ovs

TYPE=OVSPort

IPV6INIT=no

OVS_BRIDGE=br-ex

[root@node1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth1

DEVICE=eth1

NM_CONTROLLED=no

ONBOOT=yes

#DEVICETYPE=eth

TYPE=Ethernet

BOOTPROTO=static

IPADDR=172.16.100.108

#GATEWAY=172.16.100.108

BROADCAST=172.16.100.255

NETMASK=255.255.255.0

IPV6INIT=no

[root@node1 ~]# cat /etc/sysconfig/network

HOSTNAME=node1

[root@node1 ~]# cat /etc/hosts

192.168.100.108 pubnode

172.16.100.108 node1

172.16.100.109 node2

[root@node1 ~]# vi /etc/sysctl.conf

# Turn on IP forwarding by changing the existing value from 0 to 1; the sysctl -w command below applies it immediately, so you do not need to reboot

net.ipv4.ip_forward = 1

[root@node1 ~]# sysctl -w net.ipv4.ip_forward=1

[root@node1 ~]# route add -net 172.16.100.0 netmask 255.255.255.0 dev eth1

[root@node1 ~]# route add -net 192.168.100.0 netmask 255.255.255.0 gw 192.168.100.1 dev br-ex

[root@node1 ~]# iptables -t nat -A POSTROUTING --out-interface br-ex -j MASQUERADE

[root@node1 ~]# sudo ovs-vsctl add-br br-int

node2:

[root@node2 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth0

DEVICE=eth0

NM_CONTROLLED=no

ONBOOT=yes

TYPE=Ethernet

BOOTPROTO=static

IPADDR=172.16.100.109

GATEWAY=172.16.100.108

BROADCAST=172.16.100.255

NETMASK=255.255.255.0

DNS1=8.8.8.8

IPV6INIT=no

[root@node2 ~]# cat /etc/hosts

192.168.100.108 pubnode

172.16.100.108 node1

172.16.100.109 node2

[root@node2 ~]# vi /etc/sysctl.conf

# Turn on IP forwarding by changing the existing value from 0 to 1; run "sysctl -w net.ipv4.ip_forward=1" to apply it without rebooting

net.ipv4.ip_forward = 1

[root@node2 ~]# route add default gw 172.16.100.108

[root@node2 ~]# sudo ovs-vsctl add-br br-int

The configuration above is enough only for GRE mode. Because we use VLAN mode, we also need to create a physical OVS bridge (br-phy) on each node:

node1:

[root@node1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-br-phy

DEVICE=br-phy

NM_CONTROLLED=no

ONBOOT=yes

DEVICETYPE=ovs

TYPE=OVSBridge

BOOTPROTO=static

IPADDR=172.16.100.108

#GATEWAY=172.16.100.108

BROADCAST=172.16.100.255

NETMASK=255.255.255.0

DNS1=8.8.8.8

IPV6INIT=no

[root@node1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth1

DEVICE=eth1

NM_CONTROLLED=no

ONBOOT=yes

DEVICETYPE=ovs

TYPE=OVSPort

IPV6INIT=no

OVS_BRIDGE=br-phy

node2:

[root@node2 ~]# cat /etc/sysconfig/network-scripts/ifcfg-br-phy

DEVICE=br-phy

NM_CONTROLLED=no

ONBOOT=yes

DEVICETYPE=ovs

TYPE=OVSBridge

BOOTPROTO=static

IPADDR=172.16.100.109

GATEWAY=172.16.100.108

BROADCAST=172.16.100.255

NETMASK=255.255.255.0

DNS1=8.8.8.8

IPV6INIT=no

[root@node2 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth0

DEVICE=eth0

#HWADDR=52:54:00:8C:04:42

NM_CONTROLLED=no

ONBOOT=yes

DEVICETYPE=ovs

TYPE=OVSPort

IPV6INIT=no

OVS_BRIDGE=br-phy
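Every OVSPort file above (eth1 on node1, eth0 on node2) must name an existing OVSBridge through OVS_BRIDGE. In this layout the IP address lives on the bridge, not on the port. The following Python 3 sketch parses ifcfg text and flags these mistakes. The checks encode the conventions used in this guide (including DEVICETYPE=ovs on every OVS device) and are not an official validator. The function names are hypothetical.

```python
def parse_ifcfg(text):
    """Parse KEY=value lines of an ifcfg file, skipping blanks and comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"')
    return values


def check_ovs_ports(files):
    """files maps a file name to its ifcfg text; returns a list of problems."""
    parsed = [parse_ifcfg(text) for text in files.values()]
    bridges = {v.get("DEVICE") for v in parsed if v.get("TYPE") == "OVSBridge"}
    problems = []
    for v in parsed:
        dev = v.get("DEVICE", "?")
        if v.get("TYPE") in ("OVSBridge", "OVSPort") and v.get("DEVICETYPE") != "ovs":
            problems.append(f"{dev}: DEVICETYPE=ovs is missing")
        if v.get("TYPE") != "OVSPort":
            continue
        if v.get("OVS_BRIDGE") not in bridges:
            problems.append(f"{dev}: OVS_BRIDGE={v.get('OVS_BRIDGE')} has no matching OVSBridge file")
        if "IPADDR" in v:
            problems.append(f"{dev}: IPADDR should be on the bridge, not the port")
    return problems


if __name__ == "__main__":
    node2 = {
        "ifcfg-br-phy": "DEVICE=br-phy\nDEVICETYPE=ovs\nTYPE=OVSBridge\nIPADDR=172.16.100.109\n",
        "ifcfg-eth0": "DEVICE=eth0\n#HWADDR=52:54:00:8C:04:42\nDEVICETYPE=ovs\nTYPE=OVSPort\nOVS_BRIDGE=br-phy\n",
    }
    print(check_ovs_ports(node2))  # []
```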

2, Preparing RHEL6.3

2.1 Shut down the firewall

2.2 Disable SELinux.

vi /etc/selinux/config

SELINUX=disabled

2.3 Prepare your OpenStack repository, then run: yum clean all && yum update --exclude boost

vi /etc/yum.repos.d/base.repo

[rhel]

name=rhel

baseurl=ftp://ftp.redhat.com/pub/redhat/linux/enterprise/6Workstation/en/os/SRPMS/

enabled=1

gpgcheck=0

[centos]

name=centos

baseurl=http://mirror.centos.org/centos/6/os/x86_64

enabled=1

gpgcheck=0

Note: this is only part of the repository configuration (for the Linux packages); you need to find the OpenStack repository URL on the official OpenStack site.

2.4, Install common packages

2.4.1 Install openstack utils

yum install openstack-utils

2.4.2 Install and configure mysql

yum install mysql mysql-server MySQL-python

chkconfig --level 2345 mysqld on

service mysqld start

mysqladmin -u root -p password password # sets the MySQL root password to 'password'; -p prompts for the current password, so just press Enter if root has none yet

sudo mysql -uroot -ppassword -h127.0.0.1 -e "GRANT ALL PRIVILEGES ON *.* TO 'root'@'%' identified by 'password';"

sed -i 's/bind-address=127.0.0.1/bind-address=0.0.0.0/g' /etc/my.cnf

Make sure the root user can access MySQL from any host (%). If it cannot, run: update user set host = '%' where user = 'root';

mysql> select host,user from user;
+------+------+
| host | user |
+------+------+
| %    | root |
+------+------+

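# To reset a lost MySQL root password, start mysqld without the grant tables:

service mysqld stop && mysqld_safe --skip-grant-tables &

# then connect with "mysql -uroot" and run:

mysql> use mysql;

mysql> UPDATE user SET password=PASSWORD('password') WHERE user='root';

mysql> flush privileges;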

2.4.3 Install qpid

yum install qpid-cpp-server qpid-cpp-server-daemon

chkconfig qpidd on

service qpidd start

2.4.4 Install memcached

yum install memcached

2.4.5 Install python-devel and gcc

yum install python-devel

yum install gcc

2.4.6 Install telnet

yum install telnet

2.4.7 Export the following environment variables if you want to run CLI commands

export SERVICE_TOKEN=ADMIN

export SERVICE_ENDPOINT=http://node1:35357/v2.0

export OS_USERNAME=admin

export OS_PASSWORD=password

export OS_TENANT_NAME=admin

export OS_AUTH_URL=http://node1:5000/v2.0

export OS_AUTH_STRATEGY=keystone

3, Install Keystone

3.1 Install the keystone RPM packages

yum install openstack-keystone python-keystoneclient python-keystone-auth-token

3.2 Initialize the DB for keystone

openstack-db --init --service keystone

keystone-manage db_sync

mysql -uroot -ppassword -e "GRANT ALL PRIVILEGES ON *.* TO 'keystone'@'%' WITH GRANT OPTION;"

mysql -uroot -ppassword -e "SET PASSWORD FOR 'keystone'@'%' = PASSWORD('password');"

3.3 Configure keystone

3.3.1 Configure DB info

vi /etc/keystone/keystone.conf

[DEFAULT]

verbose = True

debug = True

[sql]

connection = mysql://root:password@node1/keystone

[signing]

token_format = UUID

3.4 Restart service

chkconfig openstack-keystone on

service openstack-keystone restart

Note: the command above did not work for me; you can run "keystone-all &" instead.

3.5 Init keystone data

ADMIN_PASSWORD=password SERVICE_PASSWORD=password openstack-keystone-sample-data

3.6 Verify

keystone user-list

4. Install Glance

4.1 Install glance RPM package

rpm -ivh http://rchgsa.ibm.com/projects/e/emsol/ccs/build/driver/300/openstack/latest-bld/x86_64/pyxattr-0.5.0-1.002.ibm.x86_64.rpm

rpm -ivh http://rchgsa.ibm.com/projects/e/emsol/ccs/build/driver/300/openstack/latest-bld/x86_64/pysendfile-2.0.0-3.ibm.x86_64.rpm

yum install python-jsonschema python-swiftclient python-warlock python-glanceclient

yum install openstack-glance

Note: openstack-glance-2012.2.1-100.ibm.noarch.rpm has some issues, so in the end I used openstack-glance-2012.2.1-002.ibm.noarch.rpm.

4.2 Initialize the DB for glance

openstack-db --rootpw password --init --service glance

This is equivalent to:

mysql -uroot -ppassword -e 'DROP DATABASE IF EXISTS glance;'

mysql -uroot -ppassword -e 'CREATE DATABASE glance;'

mysql -uroot -ppassword -e "grant all on *.* to root@'%' identified by 'password'"

glance-manage db_sync

mysql -uroot -ppassword -e "GRANT ALL PRIVILEGES ON *.* TO 'glance'@'%' WITH GRANT OPTION;"

mysql -uroot -ppassword -e "SET PASSWORD FOR 'glance'@'%' = PASSWORD('password');"

4.3 Configure glance

openstack-config --set /etc/glance/glance-api.conf paste_deploy flavor keystone

openstack-config --set /etc/glance/glance-registry.conf paste_deploy flavor keystone

openstack-config --set /etc/glance/glance-api.conf DEFAULT sql_connection mysql://root:password@node1/glance

openstack-config --set /etc/glance/glance-registry.conf DEFAULT sql_connection mysql://root:password@node1/glance

openstack-config --set /etc/glance/glance-api.conf DEFAULT debug True

openstack-config --set /etc/glance/glance-registry.conf DEFAULT debug True

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_uri http://node1:5000/

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken admin_tenant_name admin

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken admin_user admin

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken admin_password password

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken auth_uri http://node1:5000/

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken admin_tenant_name admin

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken admin_user admin

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken admin_password password
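Each `openstack-config --set FILE SECTION KEY VALUE` call above sets one key in an INI-style file and creates the section if needed. The following Python 3 sketch shows roughly the same operation with the standard `configparser` module, working on file contents as a string. It is an illustration only. Unlike openstack-config, configparser does not preserve comments when it rewrites a file. The name `config_set` is hypothetical.

```python
import configparser
import io


def config_set(text, section, key, value):
    """Roughly what `openstack-config --set FILE SECTION KEY VALUE` does.

    Works on the file contents as a string; comments are NOT preserved.
    """
    # RawConfigParser: no %-interpolation; strict=False tolerates duplicate keys
    parser = configparser.RawConfigParser(strict=False)
    parser.optionxform = str  # keep key case exactly as written
    parser.read_string(text)
    if section != "DEFAULT" and not parser.has_section(section):
        parser.add_section(section)
    parser.set(section, key, value)  # "DEFAULT" goes to the defaults section
    out = io.StringIO()
    parser.write(out)
    return out.getvalue()


if __name__ == "__main__":
    conf = "[DEFAULT]\ndebug = False\n"
    conf = config_set(conf, "DEFAULT", "debug", "True")
    conf = config_set(conf, "paste_deploy", "flavor", "keystone")
    print(conf)
```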

4.4 Restart glance service

chkconfig openstack-glance-api on

chkconfig openstack-glance-registry on

service openstack-glance-api restart

service openstack-glance-registry restart

4.5 Add image

wget http://launchpad.net/cirros/trunk/0.3.0/+download/cirros-0.3.0-x86_64-disk.img

glance add name=cirros-0.3.0-x86_64 disk_format=qcow2 container_format=bare < cirros-0.3.0-x86_64-disk.img

[root@node1 tools]# glance index

ID                                   Name                           Disk Format          Container Format     Size
------------------------------------ ------------------------------ -------------------- -------------------- --------------
40d11c4b-8043-4bd6-87b5-9c27f9b36c6f cirros-0.3.0-x86_64            qcow2                bare                 9761280

Or use the script below to add an AMI image (kernel, ramdisk, and machine image):

export IMAGE_ROOT=/root/tools

cd $IMAGE_ROOT

mkdir -p $IMAGE_ROOT/images

wget -c https://github.com/downloads/citrix-openstack/warehouse/tty.tgz -O $IMAGE_ROOT/images/tty.tgz

tar -zxf $IMAGE_ROOT/images/tty.tgz -C $IMAGE_ROOT/images

RVAL=`glance add name="cirros-kernel" is_public=true container_format=aki disk_format=aki < $IMAGE_ROOT/images/aki-tty/image`

KERNEL_ID=`echo $RVAL | cut -d":" -f2 | tr -d " "`

RVAL=`glance add name="cirros-ramdisk" is_public=true container_format=ari disk_format=ari < $IMAGE_ROOT/images/ari-tty/image`

RAMDISK_ID=`echo $RVAL | cut -d":" -f2 | tr -d " "`

glance add name="cirros" is_public=true container_format=ami disk_format=ami kernel_id=$KERNEL_ID ramdisk_id=$RAMDISK_ID < $IMAGE_ROOT/images/ami-tty/image
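The script above pulls each new image ID out of the `glance add` output with `echo $RVAL | cut -d":" -f2 | tr -d " "`. The following Python 3 sketch reproduces exactly that text processing. It assumes, without verifying against your glance version, that glance prints a line like `Added new image with ID: <id>`. The function name is hypothetical.

```python
def extract_image_id(rval):
    """Mimic: echo $RVAL | cut -d":" -f2 | tr -d " " """
    # Unquoted `echo $RVAL` collapses runs of whitespace (including newlines)
    line = " ".join(rval.split())
    fields = line.split(":")
    # cut prints the whole line unchanged when it has no ":" delimiter
    field = fields[1] if len(fields) > 1 else line
    return field.replace(" ", "")  # tr -d " "


if __name__ == "__main__":
    print(extract_image_id("Added new image with ID: 40d11c4b-8043-4bd6-87b5-9c27f9b36c6f\n"))
```

Note that only the second colon-separated field is kept, so any output with an extra ":" before the ID would break both the shell pipeline and this sketch.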

TOKEN=`curl -s -d "{\"auth\":{\"passwordCredentials\":{\"username\": \"$OS_USERNAME\", \"password\":\"$OS_PASSWORD\"}, \"tenantName\":\"$OS_TENANT_NAME\"}}" -H "Content-type:application/json" $OS_AUTH_URL/tokens | python -c"import sys; import json; tok = json.loads(sys.stdin.read()); print tok['access']['token']['id'];"`
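The TOKEN one-liner above embeds Python 2 code (a `print` statement), which matches the Python 2.6 on RHEL 6.3. The following Python 3 sketch makes the same Keystone v2.0 password request with the same JSON body and reads `access.token.id` from the response. It uses only the standard library. The function names are hypothetical, and `request_token` needs a running Keystone such as `$OS_AUTH_URL`.

```python
import json
import urllib.request


def build_token_request(username, password, tenant_name):
    """JSON body for POST {OS_AUTH_URL}/tokens, as in the curl call above."""
    return json.dumps({"auth": {
        "passwordCredentials": {"username": username, "password": password},
        "tenantName": tenant_name}})


def token_id_from_response(body):
    """Extract access.token.id from a Keystone v2.0 token response body."""
    return json.loads(body)["access"]["token"]["id"]


def request_token(auth_url, username, password, tenant_name):
    """POST the request and return the token id (requires a live Keystone)."""
    req = urllib.request.Request(
        auth_url.rstrip("/") + "/tokens",
        data=build_token_request(username, password, tenant_name).encode("utf-8"),
        headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        return token_id_from_response(resp.read().decode("utf-8"))


if __name__ == "__main__":
    # e.g. request_token("http://node1:5000/v2.0", "admin", "password", "admin")
    print(build_token_request("admin", "password", "admin"))
```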

5, Install quantum

5.1 Install quantum-server and agent

yum install openstack-quantum python-quantumclient

yum install openstack-quantum-openvswitch

5.2 Create quantum DB

mysql -uroot -ppassword -e 'DROP DATABASE IF EXISTS ovs_quantum';

mysql -uroot -ppassword -e 'CREATE DATABASE IF NOT EXISTS ovs_quantum';

mysql -uroot -ppassword -e "GRANT ALL PRIVILEGES ON *.* TO 'ovs_quantum'@'%' WITH GRANT OPTION;"

mysql -uroot -ppassword -e "SET PASSWORD FOR 'ovs_quantum'@'%' = PASSWORD('password');"
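The same DROP / CREATE / GRANT / SET PASSWORD sequence appears for keystone, glance, and ovs_quantum. The following Python 3 sketch generates those mysql command lines for any service DB, which makes the repeated pattern explicit. It mirrors this guide's broad `*.*` grant rather than recommending it. The function name and defaults are hypothetical.

```python
def db_setup_commands(db_name, user, password, root_password="password"):
    """Return the mysql command lines this guide runs for one service DB."""
    statements = [
        f"DROP DATABASE IF EXISTS {db_name};",
        f"CREATE DATABASE IF NOT EXISTS {db_name};",
        # Mirrors the guide: grants on *.* (every database), not just db_name.*
        f"GRANT ALL PRIVILEGES ON *.* TO '{user}'@'%' WITH GRANT OPTION;",
        f"SET PASSWORD FOR '{user}'@'%' = PASSWORD('{password}');",
    ]
    return [f'mysql -uroot -p{root_password} -e "{sql}"' for sql in statements]


if __name__ == "__main__":
    for cmd in db_setup_commands("ovs_quantum", "ovs_quantum", "password"):
        print(cmd)
```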

5.3 Configure quantum server and agent

5.3.1 for MQ

openstack-config --set /etc/quantum/quantum.conf DEFAULT rpc_backend quantum.openstack.common.rpc.impl_qpid

openstack-config --set /etc/quantum/quantum.conf DEFAULT qpid_hostname node1

5.3.2 for DB

openstack-config --set /etc/quantum/plugins/openvswitch/ovs_quantum_plugin.ini DATABASE sql_connection mysql://root:password@node1/ovs_quantum?charset=utf8

5.3.3 Using the OVS plugin in a deployment with multiple hosts requires either tunneling or VLANs to isolate the traffic of different networks.

Edit /etc/quantum/plugins/openvswitch/ovs_quantum_plugin.ini to specify the following values:

# gre mode

#enable_tunneling = True

#tenant_network_type = gre

#tunnel_id_ranges = 1:1000

# only if node is running the agent

# Note: use local_ip = 172.16.100.109 on node2;

# the agent then runs a command like this on node1: ovs-vsctl add-port br-int gre1 -- set interface gre1 type=gre options:remote_ip=172.16.100.109

#local_ip = 172.16.100.108

# vlan mode

tenant_network_type=vlan

network_vlan_ranges = physnet1:1:4094

bridge_mappings = physnet1:br-phy

root_helper = sudo
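In VLAN mode, network_vlan_ranges assigns a VLAN ID range to each physical network name, and bridge_mappings ties that name to the OVS bridge (br-phy) created in section 1.4. The following Python 3 sketch shows how the two values relate. The parsing rules are my reading of the format shown here, not the plugin's own parser, and all function names are hypothetical. The sketch also checks the segmentation ID 122 that this guide later passes to `--provider:segmentation_id`.

```python
def parse_vlan_ranges(spec):
    """Parse network_vlan_ranges, e.g. 'physnet1:1:4094' (comma-separated)."""
    ranges = {}
    for entry in (e.strip() for e in spec.split(",")):
        if not entry:
            continue
        parts = entry.split(":")
        if len(parts) == 1:  # physnet listed without a VLAN range
            ranges.setdefault(parts[0], [])
            continue
        if len(parts) != 3:
            raise ValueError(f"malformed entry {entry!r}")
        low, high = int(parts[1]), int(parts[2])
        if not 1 <= low <= high <= 4094:  # valid 802.1Q VLAN IDs
            raise ValueError(f"invalid VLAN range in {entry!r}")
        ranges.setdefault(parts[0], []).append((low, high))
    return ranges


def parse_bridge_mappings(spec):
    """Parse bridge_mappings, e.g. 'physnet1:br-phy', into {physnet: bridge}."""
    mappings = {}
    for entry in (e.strip() for e in spec.split(",")):
        if not entry:
            continue
        physnet, sep, bridge = entry.partition(":")
        if not sep or not physnet or not bridge:
            raise ValueError(f"malformed entry {entry!r}")
        mappings[physnet] = bridge
    return mappings


def vlan_allowed(ranges, physnet, vlan_id):
    """True if vlan_id falls inside one of physnet's configured ranges."""
    return any(low <= vlan_id <= high for low, high in ranges.get(physnet, []))


def unmapped_physnets(ranges, mappings):
    """Physical networks that have VLAN ranges but no bridge on this node."""
    return sorted(set(ranges) - set(mappings))


if __name__ == "__main__":
    ranges = parse_vlan_ranges("physnet1:1:4094")
    mappings = parse_bridge_mappings("physnet1:br-phy")
    print(vlan_allowed(ranges, "physnet1", 122))  # True
    print(unmapped_physnets(ranges, mappings))    # []
```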

5.3.4 for keystone

openstack-config --set /etc/quantum/api-paste.ini filter:authtoken auth_uri http://node1:5000/

openstack-config --set /etc/quantum/api-paste.ini filter:authtoken admin_tenant_name admin

openstack-config --set /etc/quantum/api-paste.ini filter:authtoken admin_user admin

openstack-config --set /etc/quantum/api-paste.ini filter:authtoken admin_password password

5.3.5 for openvswitch

vi /etc/quantum/quantum.conf

core_plugin = quantum.plugins.openvswitch.ovs_quantum_plugin.OVSQuantumPluginV2

5.3.6 Fix a bug in quantum-server

Note: before starting quantum-server, you first need to fix a syntax error in the file quantum/plugins/openvswitch/ovs_quantum_plugin.py, lines 213 to 216. The original code closes the _() call too early:

LOG.error(_("Tunneling disabled but "
            "tenant_network_type is 'gre'. ")
          "Agent terminated!")

It should be:

LOG.error(_("Tunneling disabled but "
            "tenant_network_type is 'gre'. "
            "Agent terminated!"))

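The original code fails because a string literal directly follows the closed `_(...)` call with no comma between them. In the fixed version, all three literals sit inside `_()`, where Python joins adjacent string literals at compile time. The following Python 3 sketch checks both snippets with the built-in `compile()` without executing them, so `LOG` and `_` do not need to exist. The helper name `compiles` is hypothetical.

```python
ORIGINAL = '''LOG.error(_("Tunneling disabled but "
            "tenant_network_type is 'gre'. ")
          "Agent terminated!")
'''

FIXED = '''LOG.error(_("Tunneling disabled but "
            "tenant_network_type is 'gre'. "
            "Agent terminated!"))
'''


def compiles(source):
    """True if source is syntactically valid Python (it is not executed)."""
    try:
        compile(source, "<snippet>", "exec")
    except SyntaxError:
        return False
    return True


if __name__ == "__main__":
    print(compiles(ORIGINAL))  # False
    print(compiles(FIXED))     # True
    # Adjacent literals are concatenated into one message string:
    print("Tunneling disabled but " "tenant_network_type is 'gre'. " "Agent terminated!")
```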
5.4 Install and configure quantum-dhcp-agent

The DHCP agent is part of the openstack-quantum package.

#quantum-dhcp-setup --plugin openvswitch

Note: the quantum-dhcp-setup script above has a bug, so edit /etc/quantum/dhcp_agent.ini yourself to configure quantum-dhcp-agent:

openstack-config --set /etc/quantum/dhcp_agent.ini DEFAULT use_namespaces False

Fix a missing-script bug with the following symlink: ln -s /usr/bin/quantum-dhcp-agent-dnsmasq-lease-update /usr/lib/python2.6/site-packages/quantum/agent/quantum-dhcp-agent-dnsmasq-lease-update. Without it, dnsmasq fails like this:

[root@node1 bak]# sudo QUANTUM_RELAY_SOCKET_PATH=/opt/stack/data/dhcp/lease_relay QUANTUM_NETWORK_ID=40a6fcb3-1f86-49da-b935-bf09d9cd270b dnsmasq --no-hosts --no-resolv --strict-order --bind-interfaces --interface=tapadaa2f08-ce --except-interface=lo --domain=openstacklocal --pid-file=/opt/stack/data/dhcp/40a6fcb3-1f86-49da-b935-bf09d9cd270b/pid --dhcp-hostsfile=/opt/stack/data/dhcp/40a6fcb3-1f86-49da-b935-bf09d9cd270b/host --dhcp-optsfile=/opt/stack/data/dhcp/40a6fcb3-1f86-49da-b935-bf09d9cd270b/opts --dhcp-script=/usr/lib/python2.6/site-packages/quantum/agent/quantum-dhcp-agent-dnsmasq-lease-update --leasefile-ro --dhcp-range=set:tag0,10.0.100.0,static,120s

sh: /usr/lib/python2.6/site-packages/quantum/agent/quantum-dhcp-agent-dnsmasq-lease-update: No such file or directory

dnsmasq: cannot run lease-init script /usr/lib/python2.6/site-packages/quantum/agent/quantum-dhcp-agent-dnsmasq-lease-update: No such file or directory

Another bug: the IBM build of python-quantumclient seems to have the wrong version:

[root@node1 bak]# rpm -qa|grep quantum

python-quantum-2012.2.1-100.ibm.noarch

openstack-quantum-openvswitch-2012.2.1-100.ibm.noarch

python-quantumclient-2.1-003.ibm.noarch

openstack-quantum-2012.2.1-100.ibm.noarch

[root@node1 bak]# sudo QUANTUM_RELAY_SOCKET_PATH=/opt/stack/data/dhcp/lease_relay QUANTUM_NETWORK_ID=40a6fcb3-1f86-49da-b935-bf09d9cd270b dnsmasq --no-hosts --no-resolv --strict-order --bind-interfaces --interface=tapadaa2f08-ce --except-interface=lo --domain=openstacklocal --pid-file=/opt/stack/data/dhcp/40a6fcb3-1f86-49da-b935-bf09d9cd270b/pid --dhcp-hostsfile=/opt/stack/data/dhcp/40a6fcb3-1f86-49da-b935-bf09d9cd270b/host --dhcp-optsfile=/opt/stack/data/dhcp/40a6fcb3-1f86-49da-b935-bf09d9cd270b/opts --dhcp-script=/usr/lib/python2.6/site-packages/quantum/agent/quantum-dhcp-agent-dnsmasq-lease-update --leasefile-ro --dhcp-range=set:tag0,10.0.100.0,static,120s

Traceback (most recent call last):
  File "/usr/lib/python2.6/site-packages/quantum/agent/quantum-dhcp-agent-dnsmasq-lease-update", line 5, in <module>
    from pkg_resources import load_entry_point
  File "/usr/lib/python2.6/site-packages/pkg_resources.py", line 2659, in <module>
    parse_requirements(__requires__), Environment()
  File "/usr/lib/python2.6/site-packages/pkg_resources.py", line 546, in resolve
    raise DistributionNotFound(req)
pkg_resources.DistributionNotFound: python-quantumclient>=2.0
dnsmasq: lease-init script returned exit code 1

So I had to comment out the line " '--dhcp-script=%s' % self._lease_relay_script_path()," and the line '--leasefile-ro' in the file quantum/agent/linux/dhcp.py.

5.5 Install quantum-l3-agent

The L3 agent is part of the openstack-quantum package.

#quantum-l3-setup --plugin openvswitch

openstack-config --set /etc/quantum/l3_agent.ini DEFAULT external_network_bridge br-ex

openstack-config --set /etc/quantum/l3_agent.ini DEFAULT interface_driver quantum.agent.linux.interface.OVSInterfaceDriver

openstack-config --set /etc/quantum/l3_agent.ini DEFAULT auth_url http://node1:5000/v2.0

openstack-config --set /etc/quantum/l3_agent.ini DEFAULT admin_tenant_name admin

openstack-config --set /etc/quantum/l3_agent.ini DEFAULT admin_user admin

openstack-config --set /etc/quantum/l3_agent.ini DEFAULT admin_password password

openstack-config --set /etc/quantum/l3_agent.ini DEFAULT use_namespaces False

5.6 Install the metadata service

Update /etc/quantum/l3_agent.ini:

metadata_ip = 192.168.100.108

metadata_port = 8775

5.7 For QEMU: when using Quantum, append the following to /etc/libvirt/qemu.conf:

cat <<EOF | sudo tee -a /etc/libvirt/qemu.conf

cgroup_device_acl = [

"/dev/null", "/dev/full", "/dev/zero",

"/dev/random", "/dev/urandom",

"/dev/ptmx", "/dev/kvm", "/dev/kqemu",

"/dev/rtc", "/dev/hpet","/dev/net/tun",

]

EOF

5.8 Start all the Quantum services:

chkconfig quantum-server on

chkconfig quantum-openvswitch-agent on

chkconfig quantum-dhcp-agent on

chkconfig quantum-l3-agent on

service quantum-server restart

service quantum-openvswitch-agent restart

service quantum-dhcp-agent restart

service quantum-l3-agent restart

You can also start the Quantum processes manually as follows:

quantum-server --config-file /etc/quantum/quantum.conf --config-file /etc/quantum/plugins/openvswitch/ovs_quantum_plugin.ini --debug true

quantum-openvswitch-agent --config-file /etc/quantum/quantum.conf --config-file /etc/quantum/plugins/openvswitch/ovs_quantum_plugin.ini --debug true

quantum-dhcp-agent --config-file /etc/quantum/quantum.conf --config-file=/etc/quantum/dhcp_agent.ini --debug true

quantum-l3-agent --config-file /etc/quantum/quantum.conf --config-file=/etc/quantum/l3_agent.ini --debug true

5.8 Append the following configuration to /etc/nova/nova.conf

########## quantum #############

network_api_class=nova.network.quantumv2.api.API

quantum_admin_username=admin

quantum_admin_password=password

quantum_admin_auth_url=http://node1:5000/v2.0

quantum_auth_strategy=keystone

quantum_admin_tenant_name=admin

quantum_url=http://node1:9696

libvirt_vif_driver=nova.virt.libvirt.vif.LibvirtOpenVswitchVirtualPortDriver

linuxnet_interface_driver=nova.network.linux_net.LinuxOVSInterfaceDriver

firewall_driver=nova.virt.firewall.NoopFirewallDriver

5.9 Create the test network

# nova-network network

# nova-manage network create demonet 10.0.1.0/24 1 256 --bridge=br100

# quantum network

TENANT_ID=cfdf5ed5e5b44d04a608627775a8c5ed

FLOATING_RANGE=192.168.100.100/24

PUBLIC_BRIDGE=br-ex

# Create gre private network

#quantum net-create net_gre --provider:network_type gre --provider:segmentation_id 122

#quantum subnet-create --tenant_id $TENANT_ID --ip_version 4 --gateway 10.0.0.1 net_gre 10.0.0.0/24

# Create vlan private network

quantum net-create net_vlan --tenant_id=$TENANT_ID --provider:network_type vlan --provider:physical_network physnet1 --provider:segmentation_id 122

quantum subnet-create --tenant_id $TENANT_ID --ip_version 4 --gateway 10.0.1.1 net_vlan 10.0.1.0/24

# Create a router, and add the private subnet as one of its interfaces

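ROUTER_ID=$(quantum router-create --tenant_id $TENANT_ID router1 | grep ' id ' | get_field 2)

# get_field is a helper function from devstack's functions file; outside devstack, use awk '{print $4}' instead

# SUBNET_ID is the ID of the private subnet created above (see quantum subnet-list)

quantum router-interface-add $ROUTER_ID $SUBNET_ID

Internally, this creates a port with device_owner=network:router_interface, device_id=router_id, and fixed_ip={'ip_address': subnet['gateway_ip'], 'subnet_id': subnet['id']}, and allocates that fixed IP to it. For example, after router-interface-add the resulting data structure is: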

[
  {
    u'status': u'DOWN',
    u'subnet': {
      u'cidr': u'10.0.1.0/24',
      u'gateway_ip': u'10.0.1.1',
      u'id': u'fce9745f-6717-4022-a3d0-0807c00cd57a'
    },
    u'binding:host_id': None,
    u'name': u'',
    u'admin_state_up': True,
    u'network_id': u'21b2784c-90b4-46a0-a418-8ee637b1f6f8',
    u'tenant_id': u'099f4578175443af99091aaa0a50d74e',
    u'binding:vif_type': u'ovs',
    u'device_owner': u'network:router_interface',
    u'binding:capabilities': {
      u'port_filter': True
    },
    u'mac_address': u'fa:16:3e:c3:66:68',
    u'fixed_ips': [
      {
        u'subnet_id': u'fce9745f-6717-4022-a3d0-0807c00cd57a',
        u'ip_address': u'10.0.1.1'
      }
    ],
    u'id': u'148e90cf-ed10-4744-a0e6-bfac00270930',
    u'security_groups': [],
    u'device_id': u'e65c999c-d1e7-47bb-a722-f91fb6d2118b'
  }
]
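Router ports are identified by their device_owner (network:router_interface here, network:router_gateway for the external side) and by a device_id equal to the router ID. The following Python 3 sketch filters a port list shaped like the structure above and returns each router interface's fixed IPs. The sample data is trimmed from that structure, and the function name is hypothetical.

```python
PORTS = [
    {"id": "148e90cf-ed10-4744-a0e6-bfac00270930",
     "device_owner": "network:router_interface",
     "device_id": "e65c999c-d1e7-47bb-a722-f91fb6d2118b",
     "fixed_ips": [{"subnet_id": "fce9745f-6717-4022-a3d0-0807c00cd57a",
                    "ip_address": "10.0.1.1"}]},
]


def router_interface_ips(ports, router_id=None):
    """Map port id -> fixed IPs for ports that are router interfaces."""
    result = {}
    for port in ports:
        if port.get("device_owner") != "network:router_interface":
            continue
        if router_id is not None and port.get("device_id") != router_id:
            continue  # interface of a different router
        result[port["id"]] = [ip["ip_address"] for ip in port.get("fixed_ips", [])]
    return result


if __name__ == "__main__":
    print(router_interface_ips(PORTS, "e65c999c-d1e7-47bb-a722-f91fb6d2118b"))
```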

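For this subnet, when the l3-agent starts, it calls the internal_network_added method. This method creates a gateway interface named qr-**** and then calls self.driver.init_l3 to set the gateway IP on it: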

def internal_network_added(self, ri, network_id, port_id,
                           internal_cidr, mac_address):
    interface_name = self.get_internal_device_name(port_id)
    if not ip_lib.device_exists(interface_name,
                                root_helper=self.root_helper,
                                namespace=ri.ns_name()):
        self.driver.plug(network_id, port_id, interface_name, mac_address,
                         namespace=ri.ns_name(),
                         prefix=INTERNAL_DEV_PREFIX)
    self.driver.init_l3(interface_name, [internal_cidr],
                        namespace=ri.ns_name())
    ip_address = internal_cidr.split('/')[0]
    self._send_gratuitous_arp_packet(ri, interface_name, ip_address)
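The excerpt does not show get_internal_device_name. The following Python 3 sketch assumes that it prefixes the port ID with `qr-` and truncates the result to 14 characters. That assumption matches interface names seen earlier in this guide, such as qr-1549a07f-3a and tapaec0c85b-09, which are 14 characters each. The sketch also shows how the gateway address is taken from internal_cidr. The constants and function names are hypothetical stand-ins, not quantum's code.

```python
INTERNAL_DEV_PREFIX = "qr-"  # prefix for router-internal interfaces
DEV_NAME_LEN = 14            # assumed name-length limit (see lead-in)


def internal_device_name(port_id):
    """e.g. '148e90cf-ed10-...' -> 'qr-148e90cf-ed' (assumed naming rule)."""
    return (INTERNAL_DEV_PREFIX + port_id)[:DEV_NAME_LEN]


def gateway_ip(internal_cidr):
    """Address part of e.g. '10.0.1.1/24', as internal_network_added computes it."""
    return internal_cidr.split("/")[0]


if __name__ == "__main__":
    print(internal_device_name("148e90cf-ed10-4744-a0e6-bfac00270930"))
    print(gateway_ip("10.0.1.1/24"))
```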

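If the subnet is an external subnet, external_gateway_added is called instead. It creates an external interface and sets its IP in the same way as above, but it also adds a default route to the external gateway: ['route', 'add', 'default', 'gw', gw_ip]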

def external_gateway_added(self, ri, ex_gw_port,
                           interface_name, internal_cidrs):
    if not ip_lib.device_exists(interface_name,
                                root_helper=self.root_helper,
                                namespace=ri.ns_name()):
        self.driver.plug(ex_gw_port['network_id'],
                         ex_gw_port['id'], interface_name,
                         ex_gw_port['mac_address'],
                         bridge=self.conf.external_network_bridge,
                         namespace=ri.ns_name(),
                         prefix=EXTERNAL_DEV_PREFIX)
    self.driver.init_l3(interface_name, [ex_gw_port['ip_cidr']],
                        namespace=ri.ns_name())
    ip_address = ex_gw_port['ip_cidr'].split('/')[0]
    self._send_gratuitous_arp_packet(ri, interface_name, ip_address)

    gw_ip = ex_gw_port['subnet']['gateway_ip']
    if ex_gw_port['subnet']['gateway_ip']:
        cmd = ['route', 'add', 'default', 'gw', gw_ip]
        if self.conf.use_namespaces:
            ip_wrapper = ip_lib.IPWrapper(self.root_helper,
                                          namespace=ri.ns_name())
            ip_wrapper.netns.execute(cmd, check_exit_code=False)
        else:
            utils.execute(cmd, check_exit_code=False,
                          root_helper=self.root_helper)
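The default-route step above produces different command lines depending on use_namespaces. This guide sets use_namespaces to False, so the plain `route add` path applies. The following Python 3 sketch builds the resulting argv for both cases. It assumes that the namespace is named `qrouter-<router id>` and that the netns path prefixes the command with `ip netns exec <namespace>`. These are assumptions about quantum's ip_lib, not something shown in the excerpt. The function name is hypothetical.

```python
def default_route_cmd(gw_ip, use_namespaces, router_id=None, root_helper="sudo"):
    """Build the argv for the external default route (None if no gateway)."""
    if not gw_ip:
        return None  # external subnet without gateway_ip: no default route
    cmd = ["route", "add", "default", "gw", gw_ip]
    if use_namespaces:
        if router_id is None:
            raise ValueError("router_id is needed to name the namespace")
        # Assumption: per-router namespace "qrouter-<id>", entered via ip netns exec
        cmd = ["ip", "netns", "exec", "qrouter-" + router_id] + cmd
    return root_helper.split() + cmd


if __name__ == "__main__":
    print(" ".join(default_route_cmd("192.168.100.1", use_namespaces=False)))
```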

# Create external network

quantum net-create ext_net -- --router:external=True

quantum subnet-create --allocation-pool start=192.168.100.102,end=192.168.100.126 --gateway 192.168.100.1 ext_net 192.168.100.100/24 --enable_dhcp=False
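Before creating the external subnet, you can check that the allocation pool, the gateway, and the CIDR are consistent. The following Python 3 sketch does this with the standard `ipaddress` module. `strict=False` normalizes 192.168.100.100/24 to 192.168.100.0/24; how quantum itself treats host bits in the CIDR is not covered here. If you pass node1's br-ex address (192.168.100.108, from section 1.4) as a reserved address, the check shows that it falls inside the 102-126 pool. Quantum could then hand it out as a router gateway or floating IP, so you may want to move either the pool or node1's address. The function name is hypothetical.

```python
import ipaddress


def check_external_subnet(cidr, gateway, pool_start, pool_end, reserved=()):
    """Return (network, pool_size, problems) for an external subnet definition."""
    net = ipaddress.ip_network(cidr, strict=False)  # clears host bits
    gw = ipaddress.ip_address(gateway)
    start = ipaddress.ip_address(pool_start)
    end = ipaddress.ip_address(pool_end)
    problems = []
    if start > end:
        problems.append("allocation pool start is after its end")
    for label, addr in (("gateway", gw), ("pool start", start), ("pool end", end)):
        if addr not in net:
            problems.append(f"{label} {addr} is outside {net}")
    if start <= gw <= end:
        problems.append(f"gateway {gw} lies inside the allocation pool")
    for addr in map(ipaddress.ip_address, reserved):
        if start <= addr <= end:
            problems.append(f"reserved address {addr} lies inside the allocation pool")
    return net, int(end) - int(start) + 1, problems


if __name__ == "__main__":
    print(check_external_subnet("192.168.100.100/24", "192.168.100.1",
                                "192.168.100.102", "192.168.100.126",
                                reserved=["192.168.100.108"]))
```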

# Configure the external network as router gw

quantum router-gateway-set $ROUTER_ID $EXT_NET_ID

Here EXT_NET_ID is the ID of ext_net. Internally, this creates a port with device_owner=network:router_gateway and device_id=router_id, and also allocates a public IP to it.

To also add a floating IP:

quantum floatingip-create ext_net

quantum floatingip-associate <floatingip1-ID> <port-id>

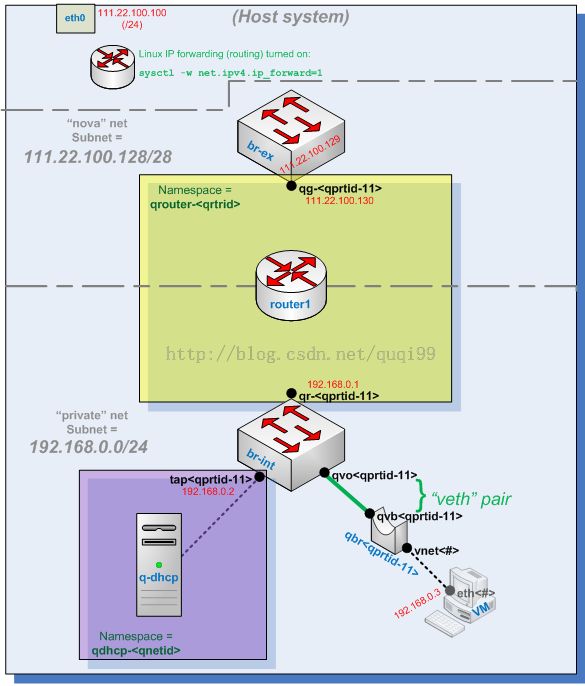

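Added 2013.07.08:

The resulting network topology is:

This raises a question. Networks of different tenants are clearly isolated by subnets or VLANs, but what if different subnets of the same tenant need to communicate with each other?

One approach is to rely on the security group (iptables) rules:

iptables -t filter -I FORWARD -i qbr+ -o qbr+ -j ACCEPT

This blog post has a good diagram (http://blog.csdn.net/lynn_kong/article/details/8779385), which I borrow here: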

2013.07.09: the community documentation is now quite good as well: http://docs.openstack.org/trunk/openstack-network/admin/content/under_the_hood_openvswitch.html

[root@node1 ~]# quantum port-list -- --device_id $ROUTER_ID --device_owner network:router_gateway

+--------------------------------------+------+-------------------+----------------------------------------------------------------------------------------+

| id | name | mac_address | fixed_ips |

+--------------------------------------+------+-------------------+----------------------------------------------------------------------------------------+

| 19720c1b-2c7f-48a1-aa06-6431cecf8a03 | | fa:16:3e:ec:00:9b | {"subnet_id": "e8778b38-c804-4e89-9690-a7ee7801205f", "ip_address": "192.168.100.102"} |

+--------------------------------------+------+-------------------+----------------------------------------------------------------------------------------+

[root@node1 ~]# route add -net 10.0.0.0/24 gw 192.168.100.102

# Q_USE_NAMESPACE, EXT_GW_IP and FIXED_RANGE are devstack-style variables that must be set beforehand

if [ "$Q_USE_NAMESPACE" = "True" ]; then
    CIDR_LEN=${FLOATING_RANGE#*/}
    sudo ip addr add $EXT_GW_IP/$CIDR_LEN dev $PUBLIC_BRIDGE
    sudo ip link set $PUBLIC_BRIDGE up
    ROUTER_GW_IP=`quantum port-list -c fixed_ips -c device_owner | grep router_gateway | awk -F '"' '{ print $8; }'`
    sudo route add -net $FIXED_RANGE gw $ROUTER_GW_IP
else
    # Explicitly set router id in l3 agent configuration
    openstack-config --set /etc/quantum/l3_agent.ini DEFAULT router_id $ROUTER_ID
fi
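The ROUTER_GW_IP pipeline above depends on the column layout. When the line is split on `"`, the 8th field of the router_gateway row happens to be the value of ip_address. The following Python 3 sketch reproduces that awk behaviour and also shows a less position-dependent variant that parses the fixed_ips cell as JSON. Both assume the table format shown in the port-list output earlier in this section. The function names are hypothetical.

```python
import json


def awk_field8(line):
    """What awk -F '"' '{ print $8; }' prints for one line."""
    fields = line.split('"')
    return fields[7] if len(fields) > 7 else ""


def router_gateway_ips(port_list_output):
    """ip_address of each router_gateway row in `quantum port-list` table text."""
    ips = []
    for line in port_list_output.splitlines():
        if "router_gateway" not in line or "{" not in line:
            continue
        # The fixed_ips cell holds a JSON object between the first '{' and last '}'
        blob = line[line.index("{"): line.rindex("}") + 1]
        ips.append(json.loads(blob)["ip_address"])
    return ips


if __name__ == "__main__":
    row = ('| {"subnet_id": "e8778b38-c804-4e89-9690-a7ee7801205f", '
           '"ip_address": "192.168.100.102"} | network:router_gateway |')
    print(awk_field8(row), router_gateway_ips(row))
```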

7. Install Nova

7.1 Install Nova and client

rpm -ivh http://rchgsa.ibm.com/projects/e/emsol/ccs/build/driver/300/openstack/latest-bld/x86_64/libyaml-0.1.4-3.ibm.x86_64.rpm

rpm -ivh http://rchgsa.ibm.com/projects/e/emsol/ccs/build/driver/300/openstack/latest-bld/x86_64/libyaml-devel-0.1.4-3.ibm.x86_64.rpm

rpm -ivh http://rchgsa.ibm.com/projects/e/emsol/ccs/build/driver/300/openstack/latest-bld/x86_64/PyYAML-3.10-6.ibm.x86_64.rpm

yum install openstack-nova python-nova-adminclient bridge-utils

yum install audiofile && rpm -ivh http://mirror.centos.org/centos/6/os/x86_64/Packages/esound-libs-0.2.41-3.1.el6.x86_64.rpm

yum install -y http://pkgs.repoforge.org/qemu/qemu-0.15.0-1.el6.rfx.x86_64.rpm

7.2 cat /etc/nova/nova.conf

[DEFAULT]

##### Misc #####

logdir=/var/log/nova

state_path=/var/lib/nova

lock_path = /var/lib/nova/tmp

root_helper=sudo

##### nova-api #####

auth_strategy=keystone

cc_host=node1

##### nova-network #####

network_manager=nova.network.manager.FlatDHCPManager

public_interface=eth0

flat_interface=eth0

vlan_interface=eth0

network_host=node1

fixed_range=10.0.0.0/8

network_size=1024

dhcpbridge_flagfile=/etc/nova/nova.conf

dhcpbridge=/usr/bin/nova-dhcpbridge

force_dhcp_release=True

fixed_ip_disassociate_timeout=30

my_ip=172.16.100.108

routing_source_ip=192.168.100.108

##### nova-compute #####

connection_type=libvirt

libvirt_type=qemu

libvirt_use_virtio_for_bridges=True

use_cow_images=True

snapshot_image_format=qcow2

##### nova-volume #####

iscsi_ip_prefix=172.16.100.10

num_targets=100

iscsi_helper=tgtadm

##### MQ #####

rabbit_host=node1

qpid_hostname=node1

rpc_backend = nova.openstack.common.rpc.impl_qpid

##### DB #####

sql_connection=mysql://root:password@node1/nova

##### glance #####

image_service=nova.image.glance.GlanceImageService

glance_api_servers=node1:9292

##### Vnc #####

novnc_enabled=true

novncproxy_base_url=http://pubnode:6080/vnc_auto.html

novncproxy_port=6080

vncserver_proxyclient_address=pubnode

vncserver_listen=0.0.0.0

##### Cinder #####

#volume_api_class=nova.volume.cinder.API

#osapi_volume_listen_port=5900

########## quantum #############

network_api_class=nova.network.quantumv2.api.API

quantum_admin_username=admin

quantum_admin_password=password

quantum_admin_auth_url=http://node1:5000/v2.0

quantum_auth_strategy=keystone

quantum_admin_tenant_name=admin

quantum_url=http://node1:9696

libvirt_vif_driver=nova.virt.libvirt.vif.LibvirtOpenVswitchVirtualPortDriver

linuxnet_interface_driver=nova.network.linux_net.LinuxOVSInterfaceDriver

firewall_driver=nova.virt.firewall.NoopFirewallDriver

Note:

1) my_ip should be the management IP, not the public IP, because it is used to communicate with the other compute nodes.

2) routing_source_ip is the source address used in the SNAT rule, so it should be the IP of the external NIC.

3) If the public and management/storage networks were the same (e.g. 192.168.100.0/24), these two parameters (my_ip and routing_source_ip) would not be needed (both would be 192.168.100.108).
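With separate management and public networks, as in this guide, the rule in notes 1 and 2 can be checked mechanically. The following Python 3 sketch checks it against the two networks from section 1 (172.16.100.0/24 for management, 192.168.100.0/24 for public). The network names and function names are hypothetical.

```python
import ipaddress

# Networks from section 1 of this guide
NETWORKS = {"management": "172.16.100.0/24", "public": "192.168.100.0/24"}


def network_of(ip, networks=NETWORKS):
    """Name of the first network containing ip, or None."""
    addr = ipaddress.ip_address(ip)
    for name, cidr in networks.items():
        if addr in ipaddress.ip_network(cidr):
            return name
    return None


def check_nova_ips(my_ip, routing_source_ip, networks=NETWORKS):
    """Problems with my_ip / routing_source_ip when the two networks differ."""
    problems = []
    if network_of(my_ip, networks) != "management":
        problems.append(f"my_ip {my_ip} is not on the management network")
    if network_of(routing_source_ip, networks) != "public":
        problems.append(f"routing_source_ip {routing_source_ip} is not on the public network")
    return problems


if __name__ == "__main__":
    print(check_nova_ips("172.16.100.108", "192.168.100.108"))  # []
```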

7.3 Initialize the DB for nova

#openstack-db --rootpw password --init --service nova

mysql -uroot -ppassword -e 'DROP DATABASE IF EXISTS nova;'

mysql -uroot -ppassword -e 'CREATE DATABASE nova;'

nova-manage db sync

7.4 Create VG nova-volumes

dd if=/dev/zero of=/var/lib/nova/nova-volumes.img bs=1M seek=20k count=0

vgcreate nova-volumes $(losetup --show -f /var/lib/nova/nova-volumes.img)
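With count=0, the dd command above writes no data at all. It only sets the file length to seek × bs, so bs=1M seek=20k yields a 20 GiB sparse file. losetup then attaches that file as a loop device for vgcreate. The following Python 3 sketch works through that arithmetic and shows the equivalent `os.truncate` call. The suffix table covers only the k/K/M/G binary suffixes used here, and the function names are hypothetical.

```python
import os

# Binary multipliers for the dd suffixes used in this guide (k/K = 1024, M, G)
UNITS = {"k": 1024, "K": 1024, "M": 1024 ** 2, "G": 1024 ** 3}


def dd_number(text):
    """Parse a dd-style number such as '1M' or '20k'."""
    if text[-1] in UNITS:
        return int(text[:-1]) * UNITS[text[-1]]
    return int(text)


def sparse_size(bs, seek):
    """File size left by `dd if=/dev/zero of=F bs=BS seek=SEEK count=0`."""
    return dd_number(bs) * dd_number(seek)


def make_sparse_file(path, size):
    """Create path (if missing) and set its length to size without writing data."""
    with open(path, "ab"):
        pass
    os.truncate(path, size)


if __name__ == "__main__":
    print(sparse_size("1M", "20k"))  # 21474836480 bytes = 20 GiB
```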

7.5 Configure qpid

sed -i 's/auth=yes/auth=no/g' /etc/qpidd.conf

sed -i 's/#mdns_adv = .*/mdns_adv = 0/g' /etc/libvirt/libvirtd.conf

[ -e /etc/init.d/qpidd ] && service qpidd start && sudo chkconfig qpidd on

[ -e /etc/init.d/messagebus ] && /etc/init.d/messagebus start

7.6 Configure keystone authentication for nova-api

openstack-config --set /etc/nova/api-paste.ini filter:authtoken auth_uri http://node1:5000/

openstack-config --set /etc/nova/api-paste.ini filter:authtoken admin_tenant_name admin

openstack-config --set /etc/nova/api-paste.ini filter:authtoken admin_user admin

openstack-config --set /etc/nova/api-paste.ini filter:authtoken admin_password password
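To check that the four openstack-config calls above ended up in the [filter:authtoken] section, the values can be read back. A minimal sketch with a small awk reader; ini_get is a hypothetical helper and only handles plain `key = value` lines (no continuation lines):

```bash
# ini_get FILE SECTION KEY: print the value of KEY inside [SECTION] of an
# INI-style file such as /etc/nova/api-paste.ini (hypothetical helper).
ini_get() {
    awk -v section="[$2]" -v key="$3" '
        /^[ \t]*\[/ { gsub(/[ \t]/, ""); insec = ($0 == section); next }
        insec {
            line = $0
            sub(/^[ \t]+/, "", line)
            c = substr(line, 1, 1)
            if (c == "#" || c == ";") next      # skip comments
            eq = index(line, "=")
            if (eq == 0) next                   # skip lines without "="
            k = substr(line, 1, eq - 1)
            v = substr(line, eq + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", k)
            gsub(/^[ \t]+|[ \t]+$/, "", v)
            if (k == key) { print v; exit }
        }' "$1"
}

# Example on node1:
# for k in auth_uri admin_tenant_name admin_user admin_password; do
#     printf '%s = %s\n' "$k" "$(ini_get /etc/nova/api-paste.ini filter:authtoken "$k")"
# done
```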

7.7 Start nova service

service libvirtd restart && sudo chkconfig libvirtd on

for svc in api compute network scheduler; do chkconfig openstack-nova-$svc on ; done

for svc in api compute network scheduler; do service openstack-nova-$svc restart ; done
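The two loops in 7.7 can be kept in one place. Since networking here is provided by Quantum (network_api_class=nova.network.quantumv2.api.API), openstack-nova-network is probably not needed at all, and it does not appear in the nova-manage service list output in 7.10. The sketch below therefore keeps the service list in a single variable so it is easy to trim, and adds a dry-run mode; nova_svc, NOVA_SERVICES and DRY_RUN are hypothetical names:

```bash
# Services handled by the loops in 7.7; override NOVA_SERVICES to drop some.
NOVA_SERVICES=${NOVA_SERVICES:-"api compute network scheduler"}

# nova_svc ACTION: "enable" runs chkconfig ... on, any other ACTION is passed
# to "service openstack-nova-<svc> ACTION". With DRY_RUN=1 only print commands.
nova_svc() {
    for svc in $NOVA_SERVICES; do
        case $1 in
            enable) cmd="chkconfig openstack-nova-$svc on" ;;
            *)      cmd="service openstack-nova-$svc $1" ;;
        esac
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "$cmd"
        else
            $cmd
        fi
    done
}

# Example:
# NOVA_SERVICES="api compute scheduler" nova_svc enable
# NOVA_SERVICES="api compute scheduler" nova_svc restart
```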

7.8 Fix a bug: with Quantum + Open vSwitch, instances fail to start with "could not open /dev/net/tun: Operation not permitted"

See https://lists.launchpad.net/openstack/msg12269.html, where Dan said: "the root cause of needing to tweak /etc/libvirt/qemu.conf is that we're using libvirt <interface type=ethernet> elements to work with openvswitch. Starting in libvirt 0.9.11 ..."

See also http://binarybitme.blogspot.hk/2012/07/libvirt-0911-has-support-for-open.html (Binary Bit Me, "Libvirt 0.9.11 has Support for Open vSwitch"): "Libvirt release 0.9.11 has added support for Open vSwitch so it is no longer required to use bridge compatibility mode! Fedora 17 has 0.9.11 included."

So the fix is to replace the libvirt shipped with RHEL 6.3 with version 0.9.11 or later.

Repository address: http://yum.chriscowley.me.uk/test/el/6/x86_64/RPMS/

yum remove libvirt

rpm --import http://yum.chriscowley.me.uk/RPM-GPG-KEY-ChrisCowley

yum install http://yum.chriscowley.me.uk/el/6/x86_64/RPMS/chriscowley-release-1-2.noarch.rpm

yum install libvirt --enablerepo=chriscowley-test

yum install libvirt-devel

yum install libvirt-python

Then make sure nova.conf contains libvirt_vif_driver=nova.virt.libvirt.vif.LibvirtOpenVswitchVirtualPortDriver (this driver uses libvirt's native Open vSwitch support).
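Before and after the upgrade it is worth checking that the installed libvirt really is 0.9.11 or newer. A minimal sketch using GNU `sort -V` (version sort, available in the coreutils shipped with RHEL 6); version_ge is a hypothetical helper, and reading the version from `virsh --version` is an assumption about your setup:

```bash
# version_ge A B: succeed if version A >= version B, using GNU sort -V.
# The smaller of the two versions sorts first; A >= B iff that one is B.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Example:
# if version_ge "$(virsh --version)" 0.9.11; then
#     echo "libvirt is new enough for native Open vSwitch support"
# else
#     echo "libvirt is too old, upgrade it as described above"
# fi
```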

7.9 Enable live migration (on all hosts)

Modify /etc/libvirt/libvirtd.conf

listen_tls = 0

listen_tcp = 1

tcp_port = "16509"

auth_tcp = "none"

Modify /etc/sysconfig/libvirtd

LIBVIRTD_ARGS="--listen"
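Because the 7.9 edits are needed on every host, they can be scripted. A minimal sketch: set_conf (a hypothetical helper) rewrites an existing line for the key, commented out or not, and appends the line if the key is missing; it relies on GNU sed -i and does not try to handle keys that appear several times. libvirtd only picks up the changes after it is restarted.

```bash
# set_conf FILE KEY VALUE [SEP]: set KEY to VALUE in FILE. An existing line
# (commented out or not) is rewritten in place, otherwise it is appended.
# SEP defaults to " = " (libvirtd.conf style); use "=" for /etc/sysconfig files.
set_conf() {
    local file="$1" key="$2" value="$3" sep="${4:- = }"
    if grep -Eq "^[#[:space:]]*${key}[[:space:]]*=" "$file"; then
        sed -r -i "s|^[#[:space:]]*${key}[[:space:]]*=.*|${key}${sep}${value}|" "$file"
    else
        printf '%s%s%s\n' "$key" "$sep" "$value" >> "$file"
    fi
}

# On every host:
# set_conf /etc/libvirt/libvirtd.conf listen_tls 0
# set_conf /etc/libvirt/libvirtd.conf listen_tcp 1
# set_conf /etc/libvirt/libvirtd.conf tcp_port '"16509"'
# set_conf /etc/libvirt/libvirtd.conf auth_tcp '"none"'
# set_conf /etc/sysconfig/libvirtd LIBVIRTD_ARGS '"--listen"' '='
# service libvirtd restart
```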

7.10 Deploy a VM

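nova --debug boot --flavor 1 --image 40d11c4b-8043-4bd6-87b5-9c27f9b36c6f --nic net-id=57ba312a-cba3-4f77-bc15-8829c6bf67a6 i1

Replace the image ID and net-id with the IDs from "glance index" and "quantum net-list" in your own environment (note that the net-id above does not match any network in the net-list output below).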

[root@node1 ~]# quantum net-list

+--------------------------------------+---------+--------------------------------------+
| id                                   | name    | subnets                              |
+--------------------------------------+---------+--------------------------------------+
| af9f0450-2535-4d49-af68-d3bf4fde4164 | net1    | 3eb3f9da-983d-4e98-a79d-aec5309d2783 |
| 1d2ac18d-a9e4-4b15-929f-7765b7a02f97 | ext_net | e8778b38-c804-4e89-9690-a7ee7801205f |
+--------------------------------------+---------+--------------------------------------+

[root@node1 ~]# nova-manage service list

Binary           Host   Zone   Status    State  Updated_At
nova-scheduler   node1  nova   enabled   :-)    2013-01-02 14:03:48
nova-compute     node2  nova   enabled   :-)    2013-01-02 14:03:47

[root@node1 ~]# glance index

ID                                   Name                           Disk Format          Container Format     Size
------------------------------------ ------------------------------ -------------------- -------------------- --------------
f6c9eb73-aeca-4cab-a217-87da92fe4be6 cirros                         ami                  ami                  25165824
15d2c5e4-feaa-4ca1-9138-d11ccee3fc69 cirros-ramdisk                 ari                  ari                  5882349
2537923f-75b2-4d20-a734-bbabc75dff68 cirros-kernel                  aki                  aki                  4404752
40d11c4b-8043-4bd6-87b5-9c27f9b36c6f cirros-0.3.0-x86_64            qcow2                bare                 9761280

[root@node1 ~]# nova list

+--------------------------------------+------+--------+-------------------+
| ID                                   | Name | Status | Networks          |
+--------------------------------------+------+--------+-------------------+
| 62362f20-eb1b-4840-bd91-6ed10693bcd6 | i1   | ACTIVE | net_vlan=10.0.1.3 |
+--------------------------------------+------+--------+-------------------+

[root@node1 ~]# ping 10.0.1.3

PING 10.0.1.3 (10.0.1.3) 56(84) bytes of data.
64 bytes from 10.0.1.3: icmp_seq=1 ttl=64 time=5.48 ms
64 bytes from 10.0.1.3: icmp_seq=2 ttl=64 time=2.30 ms

[root@node1 ~]# quantum port-list -- --device_id=62362f20-eb1b-4840-bd91-6ed10693bcd6

+--------------------------------------+------+-------------------+---------------------------------------------------------------------------------+
| id                                   | name | mac_address       | fixed_ips                                                                       |
+--------------------------------------+------+-------------------+---------------------------------------------------------------------------------+
| 1c92b8dc-e1a1-40cc-8589-5799080c0822 |      | fa:16:3e:6b:db:82 | {"subnet_id": "0d1c182e-65d6-4646-99ea-373338952a9a", "ip_address": "10.0.1.3"} |
+--------------------------------------+------+-------------------+---------------------------------------------------------------------------------+
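The nova boot command in 7.10 hard-codes the image and network IDs. A minimal sketch for looking them up by name instead, assuming the table layout printed by the quantum CLI (`| id | name | subnets |`) and the whitespace-separated columns of `glance index` shown above; net_id_by_name is a hypothetical helper:

```bash
# net_id_by_name NAME: read "quantum net-list" output on stdin and print the
# id of the network called NAME (hypothetical helper). Border lines such as
# "+----+" contain no "|" and are skipped by the NF check.
net_id_by_name() {
    awk -F'|' -v want="$1" '
        NF >= 4 {
            id = $2; name = $3
            gsub(/^[ \t]+|[ \t]+$/, "", id)
            gsub(/^[ \t]+|[ \t]+$/, "", name)
            if (name == want) { print id; exit }
        }'
}

# Example on node1:
# NET_ID=$(quantum net-list | net_id_by_name net1)
# IMAGE_ID=$(glance index | awk '$2 == "cirros-0.3.0-x86_64" { print $1 }')
# nova boot --flavor 1 --image "$IMAGE_ID" --nic net-id="$NET_ID" i1
```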

8, Vlan network

8.1, Edit the OVS plugin configuration file /etc/quantum/plugins/openvswitch/ovs_quantum_plugin.ini with:

[OVS]

# gre

#enable_tunneling = True

#tenant_network_type = gre

#tunnel_id_ranges = 1:1000

# only if node is running the agent

#local_ip = 172.16.100.108

# vlan

tenant_network_type=vlan

network_vlan_ranges = physnet1:1:4094

bridge_mappings = physnet1:br-phy
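bridge_mappings tells the OVS agent which bridge serves each physical network, and the bridges must already exist (8.2 creates br-phy); as far as I know, the Folsom agent treats a missing bridge as a fatal error. A minimal sketch that lists the bridges named in a bridge_mappings value so each one can be checked with `ovs-vsctl br-exists`; mapping_bridges is a hypothetical helper:

```bash
# mapping_bridges MAPPINGS: print the bridge of every "physnet:bridge" pair
# in a comma-separated bridge_mappings value (hypothetical helper).
mapping_bridges() {
    # split on commas, strip blanks, then print the part after the colon
    printf '%s\n' "$1" | tr ',' '\n' | awk -F: '{ gsub(/[ \t]/, "") } NF == 2 { print $2 }'
}

# Example on each host:
# for br in $(mapping_bridges "physnet1:br-phy"); do
#     ovs-vsctl br-exists "$br" || echo "bridge $br is missing, create it as in 8.2"
# done
```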

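8.2, Add br-phy on all hosts

# br-phy is the bridge that bridge_mappings (physnet1:br-phy) points to; it carries the VM traffic

ovs-vsctl add-br br-phy

ovs-vsctl add-port br-phy eth0    # on node2; on node1 add eth1 instead, because node1's eth0 is attached to br-ex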

8.3, create vlan network

TENANT_ID=cfdf5ed5e5b44d04a608627775a8c5ed

quantum net-create --tenant-id $TENANT_ID net_vlan --provider:network_type vlan --provider:physical_network physnet1 --provider:segmentation_id 1024
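8.3 uses segmentation ID 1024 on physnet1, and 8.1 configured network_vlan_ranges = physnet1:1:4094. A quick consistency check between the two, as a sketch: vlan_in_ranges (a hypothetical helper) succeeds when the ID falls inside a range listed for that physical network. Whether the plugin itself rejects a provider VLAN outside these ranges may depend on the Quantum version, so treat this as a sanity check rather than the plugin's rule.

```bash
# vlan_in_ranges RANGES PHYSNET VLAN_ID: succeed if VLAN_ID lies inside one
# of the "physnet:min:max" entries for PHYSNET in a network_vlan_ranges value.
vlan_in_ranges() {
    printf '%s\n' "$1" | tr ',' '\n' | awk -F: -v net="$2" -v id="$3" '
        { gsub(/[ \t]/, "") }
        NF == 3 && $1 == net && id + 0 >= $2 + 0 && id + 0 <= $3 + 0 { found = 1 }
        END { exit(found ? 0 : 1) }'
}

# Example:
# vlan_in_ranges "physnet1:1:4094" physnet1 1024 && echo "1024 is inside the configured range"
```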

9, Self-testing and debugging

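tcpdump -i vnet0 -nnvvS    # the VM's tap device on the compute node

tcpdump -nnvvS -i tap3e2fb05e-53    # a tap device created by a Quantum agent

tcpdump -ni eth1 proto gre    # GRE packets on the physical NIC (GRE mode only)

iptables -nvL -t nat    # NAT rules, e.g. those added by quantum-l3-agent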
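When choosing what to pass to tcpdump -i, two naming patterns visible in the outputs of this guide help. Devices created by the Quantum agents are named with a prefix plus the first 11 characters of the port UUID (tapaec0c85b-09, qg-19720c1b-2c), while the VM's own device on the compute node is a libvirt vnetN whose MAC is the port MAC with the first octet replaced by fe (port fa:16:3e:6b:db:82 shows up as vnet0 with HWaddr FE:16:3E:6B:DB:82). A small sketch of both rules, inferred from this setup rather than from documentation; both helper names are hypothetical:

```bash
# quantum_dev_name PREFIX PORT_ID: device name used by the Quantum agents,
# i.e. PREFIX ("tap", "qg-", "qr-") plus the first 11 characters of the port id.
quantum_dev_name() {
    printf '%s%s\n' "$1" "$(printf '%s' "$2" | cut -c1-11)"
}

# host_tap_mac PORT_MAC: MAC of the host-side vnetN device of a VM port
# (the port MAC with its first octet replaced by fe).
host_tap_mac() {
    printf '%s\n' "$1" | sed 's/^[0-9a-fA-F][0-9a-fA-F]:/fe:/'
}

# Example on node2, for the port of instance i1 from 7.10:
# ifconfig -a | grep -i "$(host_tap_mac fa:16:3e:6b:db:82)"
```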

vi /etc/ovs-ifup

#!/bin/sh
# qemu calls this script with the name of the tap device it created as $1
BRIDGE='br-int'
DEVICE=$1

ovs-vsctl -- --may-exist add-br "$BRIDGE"
tunctl -u root -t "$DEVICE"
ovs-vsctl add-port "$BRIDGE" "$DEVICE" tag=1
ifconfig "$DEVICE" 0.0.0.0 promisc up

# dhcp: optional dnsmasq for the test VM. The functions below are only
# definitions; call start_dnsmasq to use them, and make sure the interface
# given by --interface exists and owns the address $GATEWAY.
NETWORK=10.0.100.0
NETMASK=255.255.255.0
GATEWAY=10.0.100.1
DHCPRANGE=10.0.100.2,10.0.100.254

# Optional parameters to enable PXE support
TFTPROOT=
BOOTP=

do_dnsmasq() {
    dnsmasq "$@"
}

start_dnsmasq() {
    do_dnsmasq \
        --no-hosts \
        --no-resolv \
        --strict-order \
        --except-interface=lo \
        --interface=tap00578b46-91 \
        --listen-address=$GATEWAY \
        --bind-interfaces \
        --dhcp-range=$DHCPRANGE \
        --conf-file="" \
        --pid-file=/var/run/qemu-dnsmasq-$BRIDGE.pid \
        --dhcp-leasefile=/var/run/qemu-dnsmasq-$BRIDGE.leases \
        --dhcp-no-override \
        ${TFTPROOT:+"--enable-tftp"} \
        ${TFTPROOT:+"--tftp-root=$TFTPROOT"} \
        ${BOOTP:+"--dhcp-boot=$BOOTP"}
}

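vi /etc/ovs-ifdown

#!/bin/sh
BRIDGE='br-int'
DEVICE=$1

/sbin/ifconfig "$DEVICE" 0.0.0.0 down
ovs-vsctl del-port "$BRIDGE" "$DEVICE"

Both scripts must be executable for qemu to run them:

chmod +x /etc/ovs-ifup /etc/ovs-ifdown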

# Install ttylinux onto a disk image and use it as a debug VM

cd ~/tools && gunzip ttylinux-i686-11.2.iso.gz

qemu-img create -f raw ~/tools/ttylinux.img 100M

qemu -m 128 -boot d -hda ~/tools/ttylinux.img -cdrom ~/tools/ttylinux-i686-11.2.iso -net nic,macaddr=00:11:22:EE:EE:EE -net tap,ifname=tap0,script=no,downscript=no -vnc :3

vncviewer localhost:5903

Inside the VM, run ttylinux-installer to install ttylinux onto the virtual disk:

# ttylinux-installer /dev/hdc /dev/hda

Once the installation has completed, stop the VM by pressing <ctrl>-c in the terminal you started it from.

Now boot from the disk image, either without network scripts or with /etc/ovs-ifup and /etc/ovs-ifdown, which attach tap0 to br-int:

qemu -m 128 -boot c -hda ~/tools/ttylinux.img -net nic,macaddr=00:11:22:EE:EE:EE -net tap,ifname=tap0,script=no,downscript=no -vnc :3

qemu -m 128 -boot c -hda ~/tools/ttylinux.img -net nic,macaddr=00:11:22:EE:EE:EE -net tap,ifname=tap0,script=/etc/ovs-ifup,downscript=/etc/ovs-ifdown -vnc :3

Disable IPv6:

sudo sh -c "echo 1 > /proc/sys/net/ipv6/conf/eth0/disable_ipv6"

echo "0" > /proc/sys/net/ipv6/conf/all/autoconf

Enable DHCP on eth0:

vi /etc/sysconfig/network-scripts/ifcfg-eth0

ENABLE=yes

DHCP=yes

sudo ovs-vsctl add-port br-int gre0 -- set interface gre0 type=gre options:remote_ip=10.101.1.1    # GRE test port, needs GRE support in the OVS kernel module

Run the command "udhcpc" to start DHCP discovery.

# start a test DHCP server (the interface fordhcptest must exist and hold an address in 10.0.0.0/24)

dnsmasq --strict-order --bind-interfaces --interface=fordhcptest --conf-file= --except-interface lo --dhcp-option=3 --no-resolv --dhcp-range=10.0.0.3,10.0.0.254

Note: if you do not use the --dhcp-optsfile option, do not use the --leasefile-ro option either.

[root@node1 ~]# ifconfig -a
br-ex     Link encap:Ethernet HWaddr 86:A1:09:3F:BA:48
          inet addr:192.168.100.108 Bcast:192.168.100.255 Mask:255.255.255.0
          inet6 addr: fe80::84a1:9ff:fe3f:ba48/64 Scope:Link
          UP BROADCAST RUNNING PROMISC MULTICAST MTU:1500 Metric:1
          RX packets:427 errors:0 dropped:0 overruns:0 frame:0
          TX packets:724 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:66062 (64.5 KiB) TX bytes:70164 (68.5 KiB)

br-int    Link encap:Ethernet HWaddr 36:60:27:75:72:48
          BROADCAST MULTICAST MTU:1500 Metric:1
          RX packets:271 errors:0 dropped:0 overruns:0 frame:0
          TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:33375 (32.5 KiB) TX bytes:0 (0.0 b)

br-phy    Link encap:Ethernet HWaddr 9A:D2:8D:A3:C0:41
          inet addr:172.16.100.108 Bcast:172.16.100.255 Mask:255.255.255.0
          inet6 addr: fe80::98d2:8dff:fea3:c041/64 Scope:Link
          UP BROADCAST RUNNING PROMISC MULTICAST MTU:1500 Metric:1
          RX packets:65485 errors:0 dropped:0 overruns:0 frame:0
          TX packets:61702 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:11974548 (11.4 MiB) TX bytes:25197505 (24.0 MiB)

eth0      Link encap:Ethernet HWaddr 52:54:00:8C:04:42
          inet6 addr: fe80::5054:ff:fe8c:442/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1
          RX packets:1859 errors:0 dropped:0 overruns:0 frame:0
          TX packets:756 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:122379 (119.5 KiB) TX bytes:75849 (74.0 KiB)
          Interrupt:10

eth1      Link encap:Ethernet HWaddr 52:54:00:56:B3:A6
          inet6 addr: fe80::5054:ff:fe56:b3a6/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1
          RX packets:66947 errors:0 dropped:0 overruns:0 frame:0
          TX packets:66704 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:11125104 (10.6 MiB) TX bytes:25532683 (24.3 MiB)
          Interrupt:11 Base address:0xc000

int-br-phy Link encap:Ethernet HWaddr 52:1C:F9:84:DF:87
          inet6 addr: fe80::501c:f9ff:fe84:df87/64 Scope:Link
          UP BROADCAST RUNNING PROMISC MULTICAST MTU:1500 Metric:1
          RX packets:153 errors:0 dropped:0 overruns:0 frame:0
          TX packets:145 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:15478 (15.1 KiB) TX bytes:24844 (24.2 KiB)

lo        Link encap:Local Loopback
          inet addr:127.0.0.1 Mask:255.0.0.0
          inet6 addr: ::1/128 Scope:Host
          UP LOOPBACK RUNNING MTU:16436 Metric:1
          RX packets:533531 errors:0 dropped:0 overruns:0 frame:0
          TX packets:533531 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:108857779 (103.8 MiB) TX bytes:108857779 (103.8 MiB)

phy-br-phy Link encap:Ethernet HWaddr BE:B2:B8:A1:50:A6
          inet6 addr: fe80::bcb2:b8ff:fea1:50a6/64 Scope:Link
          UP BROADCAST RUNNING PROMISC MULTICAST MTU:1500 Metric:1
          RX packets:145 errors:0 dropped:0 overruns:0 frame:0
          TX packets:153 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:24844 (24.2 KiB) TX bytes:15478 (15.1 KiB)

qg-19720c1b-2c Link encap:Ethernet HWaddr FA:16:3E:EC:00:9B
          inet addr:192.168.100.102 Bcast:192.168.100.255 Mask:255.255.255.0
          inet6 addr: fe80::f816:3eff:feec:9b/64 Scope:Link
          UP BROADCAST RUNNING PROMISC MULTICAST MTU:1500 Metric:1
          RX packets:3 errors:0 dropped:0 overruns:0 frame:0
          TX packets:34 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:246 (246.0 b) TX bytes:5617 (5.4 KiB)

tapaec0c85b-09 Link encap:Ethernet HWaddr FA:16:3E:84:85:B4
          inet addr:10.0.1.2 Bcast:10.0.1.255 Mask:255.255.255.0
          inet6 addr: fe80::f816:3eff:fe84:85b4/64 Scope:Link
          UP BROADCAST RUNNING PROMISC MULTICAST MTU:1500 Metric:1
          RX packets:143 errors:0 dropped:0 overruns:0 frame:0
          TX packets:55 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:11968 (11.6 KiB) TX bytes:9082 (8.8 KiB)

[root@node1 ~]# sudo ovs-vsctl show
b944213c-f5e0-40e5-a796-aef88c7be905
    Bridge br-ex
        Port "qg-19720c1b-2c"
            Interface "qg-19720c1b-2c"
                type: internal
        Port "eth0"
            Interface "eth0"
        Port br-ex
            Interface br-ex
                type: internal
    Bridge br-int
        Port int-br-phy
            Interface int-br-phy
        Port "tapaec0c85b-09"
            tag: 2
            Interface "tapaec0c85b-09"
                type: internal
        Port br-int
            Interface br-int
                type: internal
    Bridge br-phy
        Port "eth1"
            Interface "eth1"
        Port phy-br-phy
            Interface phy-br-phy
        Port br-phy
            Interface br-phy
                type: internal
    ovs_version: "1.4.2"

[root@node2 ~]# sudo ovs-vsctl show
b944213c-f5e0-40e5-a796-aef88c7be905
    Bridge br-tun
        Port br-tun
            Interface br-tun
                type: internal
    Bridge br-phy
        Port "eth0"
            Interface "eth0"
        Port br-phy
            Interface br-phy
                type: internal
        Port phy-br-phy
            Interface phy-br-phy
    Bridge br-int
        Port br-int
            Interface br-int
                type: internal
        Port int-br-phy
            Interface int-br-phy
        Port "vnet0"
            tag: 1
            Interface "vnet0"
    ovs_version: "1.4.2"
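The same network shows tag 2 on node1 (tapaec0c85b-09, the 10.0.1.2 port) but tag 1 on node2 (vnet0, the VM at 10.0.1.3). As far as I understand the Folsom OVS agent, these are host-local VLAN IDs assigned on br-int, and OpenFlow rules on br-int and br-phy translate them to and from the network's provider segmentation ID on the wire, so differing tags between hosts are expected. A minimal sketch for pulling a port's tag out of `ovs-vsctl show` output; port_tag is a hypothetical helper:

```bash
# port_tag PORT: read "ovs-vsctl show" output on stdin and print the VLAN tag
# of PORT (nothing if the port is untagged or absent). Hypothetical helper.
port_tag() {
    awk -v want="$1" '
        $1 == "Port"   { name = $2; gsub(/"/, "", name); cur = (name == want); next }
        $1 == "Bridge" { cur = 0; next }
        cur && $1 == "tag:" { print $2; exit }'
}

# Example:
# ovs-vsctl show | port_tag vnet0      # tag of the VM port
# ovs-vsctl get Port vnet0 tag         # the same value read from the OVS database
# ovs-ofctl dump-flows br-phy          # look for mod_vlan_vid actions
```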

[root@node2 ~]# ifconfig -a
br-int    Link encap:Ethernet HWaddr 36:60:27:75:72:48
          BROADCAST MULTICAST MTU:1500 Metric:1
          RX packets:132 errors:0 dropped:0 overruns:0 frame:0
          TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:8650 (8.4 KiB) TX bytes:0 (0.0 b)

br-phy    Link encap:Ethernet HWaddr 4A:66:FB:10:ED:4D
          inet addr:172.16.100.109 Bcast:172.16.100.255 Mask:255.255.255.0
          inet6 addr: fe80::4866:fbff:fe10:ed4d/64 Scope:Link
          UP BROADCAST RUNNING PROMISC MULTICAST MTU:1500 Metric:1
          RX packets:36752 errors:0 dropped:0 overruns:0 frame:0
          TX packets:35084 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:9258136 (8.8 MiB) TX bytes:15957256 (15.2 MiB)

br-tun    Link encap:Ethernet HWaddr 86:96:A6:04:43:4B
          BROADCAST MULTICAST MTU:1500 Metric:1
          RX packets:5 errors:0 dropped:0 overruns:0 frame:0
          TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:300 (300.0 b) TX bytes:0 (0.0 b)

eth0      Link encap:Ethernet HWaddr 52:54:00:D5:BA:A0
          inet6 addr: fe80::5054:ff:fed5:baa0/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1
          RX packets:38097 errors:0 dropped:0 overruns:0 frame:0
          TX packets:38951 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:8807592 (8.3 MiB) TX bytes:16216350 (15.4 MiB)
          Interrupt:11 Base address:0xc000

int-br-phy Link encap:Ethernet HWaddr 62:D5:4F:1C:E7:DF
          inet6 addr: fe80::60d5:4fff:fe1c:e7df/64 Scope:Link
          UP BROADCAST RUNNING PROMISC MULTICAST MTU:1500 Metric:1
          RX packets:54 errors:0 dropped:0 overruns:0 frame:0
          TX packets:151 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:8872 (8.6 KiB) TX bytes:14188 (13.8 KiB)

lo        Link encap:Local Loopback
          inet addr:127.0.0.1 Mask:255.0.0.0
          inet6 addr: ::1/128 Scope:Host
          UP LOOPBACK RUNNING MTU:16436 Metric:1
          RX packets:54195 errors:0 dropped:0 overruns:0 frame:0
          TX packets:54195 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:18219330 (17.3 MiB) TX bytes:18219330 (17.3 MiB)

phy-br-phy Link encap:Ethernet HWaddr 1A:35:0D:DB:07:3A
          inet6 addr: fe80::1835:dff:fedb:73a/64 Scope:Link
          UP BROADCAST RUNNING PROMISC MULTICAST MTU:1500 Metric:1
          RX packets:151 errors:0 dropped:0 overruns:0 frame:0
          TX packets:54 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:14188 (13.8 KiB) TX bytes:8872 (8.6 KiB)

vnet0     Link encap:Ethernet HWaddr FE:16:3E:6B:DB:82
          inet6 addr: fe80::fc16:3eff:fe6b:db82/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1
          RX packets:145 errors:0 dropped:0 overruns:0 frame:0
          TX packets:29 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:500
          RX bytes:11026 (10.7 KiB) TX bytes:4508 (4.4 KiB)
