Hadoop CDH4 Pseudo-Distributed Installation

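For a Hadoop developer, the first task is to set up a personal Hadoop environment for day-to-day development and learning. This article describes how to install a pseudo-distributed Hadoop CDH4 environment.

Because Hadoop is written in Java, it needs JDK 1.6 or later, so the first step is to install a suitable JDK. It can be downloaded from the link below.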

JDK Download Page

- Install the JDK

[kevin@localhost ~]$ sudo ./jdk-6u34-linux-x64-rpm.bin

[kevin@localhost ~]$ sudo cp jdk-6u34-linux-amd64.rpm /usr/local

[kevin@localhost ~]$ cd /usr/local/

[kevin@localhost local]$ sudo chmod +x jdk-6u34-linux-amd64.rpm

[kevin@localhost local]$ sudo rpm -ivh jdk-6u34-linux-amd64.rpm

- Configure environment variables

[kevin@localhost jdk1.6.0_34]$ sudo vi /etc/profile

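Add the JDK installation directory as shown below. The settings take effect after logging in again or running source /etc/profile: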

export JAVA_HOME=/usr/java/jdk1.6.0_34

export PATH=$PATH:$JAVA_HOME/bin

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

[kevin@localhost jdk1.6.0_34]$ java -version (verify that the JDK installed correctly)

java version "1.6.0_34"

Java(TM) SE Runtime Environment (build 1.6.0_34-b04)

Java HotSpot(TM) 64-Bit Server VM (build 20.9-b04, mixed mode)
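
The manual check above can be scripted when the same verification has to be repeated on several machines. The sketch below is only an illustration: the function names parse_java_version and java_version_at_least_1_6 are hypothetical helpers, not part of the JDK or CDH4, and it assumes the first line of the java -version output has the form shown above.

```shell
#!/bin/sh
# Hypothetical helper: read `java -version` output on stdin and print
# the quoted version string from the first matching line.
parse_java_version() {
    sed -n 's/^[a-z]* version "\([^"]*\)".*$/\1/p' | head -n 1
}

# Hypothetical helper: succeed if a version such as "1.6.0_34" is >= 1.6.
java_version_at_least_1_6() {
    version=$1
    major=${version%%.*}     # text before the first dot, e.g. "1"
    rest=${version#*.}       # text after the first dot, e.g. "6.0_34"
    minor=${rest%%.*}        # e.g. "6"
    case "$major$minor" in
        ""|*[!0-9]*) return 2 ;;   # not a version we can parse
    esac
    if [ "$major" -gt 1 ]; then
        return 0
    fi
    [ "$major" -eq 1 ] && [ "$minor" -ge 6 ]
}

# Usage (java -version writes to stderr, hence 2>&1):
#   v=$(java -version 2>&1 | parse_java_version)
#   java_version_at_least_1_6 "$v" && echo "JDK $v is new enough for Hadoop"
```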

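- Install Hadoop (YARN)

Hadoop CDH4 is based on Apache Hadoop 2.0, and its Map/Reduce framework runs on YARN. It can be installed with yum (sudo yum install hadoop-conf-pseudo). To show Hadoop's package dependencies, this article installs it with rpm instead and resolves the dependencies by hand. The RPM packages can be downloaded here (Hadoop CDH4 RPM).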

[kevin@localhost ~]$ chmod +x hadoop-conf-pseudo-2.0.0+545-1.cdh4.1.1.p0.5.el5.x86_64.rpm

[kevin@localhost ~]$ sudo rpm -ivh hadoop-conf-pseudo-2.0.0+545-1.cdh4.1.1.p0.5.el5.x86_64.rpm

[sudo] password for kevin:

warning: hadoop-conf-pseudo-2.0.0+545-1.cdh4.1.1.p0.5.el5.x86_64.rpm: Header V4 DSA signature: NOKEY, key ID e8f86acd

error: Failed dependencies:

hadoop = 2.0.0+545-1.cdh4.1.1.p0.5.el5 is needed by hadoop-conf-pseudo-2.0.0+545-1.cdh4.1.1.p0.5.el5.x86_64

hadoop-hdfs-namenode = 2.0.0+545-1.cdh4.1.1.p0.5.el5 is needed by hadoop-conf-pseudo-2.0.0+545-1.cdh4.1.1.p0.5.el5.x86_64

hadoop-hdfs-datanode = 2.0.0+545-1.cdh4.1.1.p0.5.el5 is needed by hadoop-conf-pseudo-2.0.0+545-1.cdh4.1.1.p0.5.el5.x86_64

hadoop-hdfs-secondarynamenode = 2.0.0+545-1.cdh4.1.1.p0.5.el5 is needed by hadoop-conf-pseudo-2.0.0+545-1.cdh4.1.1.p0.5.el5.x86_64

hadoop-yarn-resourcemanager = 2.0.0+545-1.cdh4.1.1.p0.5.el5 is needed by hadoop-conf-pseudo-2.0.0+545-1.cdh4.1.1.p0.5.el5.x86_64

hadoop-yarn-nodemanager = 2.0.0+545-1.cdh4.1.1.p0.5.el5 is needed by hadoop-conf-pseudo-2.0.0+545-1.cdh4.1.1.p0.5.el5.x86_64

hadoop-mapreduce-historyserver = 2.0.0+545-1.cdh4.1.1.p0.5.el5 is needed by hadoop-conf-pseudo-2.0.0+545-1.cdh4.1.1.p0.5.el5.x86_64

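The installation fails with the dependency errors above. They show clearly that hadoop-conf-pseudo depends on hadoop, hadoop-hdfs-namenode, hadoop-hdfs-datanode, hadoop-hdfs-secondarynamenode, hadoop-yarn-resourcemanager, hadoop-yarn-nodemanager and hadoop-mapreduce-historyserver. Because every component of a pseudo-distributed setup runs on the same machine, all of these packages must be installed before hadoop-conf-pseudo itself can be installed.

The resulting Hadoop layout is as follows:

/etc/hadoop/conf (configuration files)

/etc/init.d (all related startup scripts)

/usr/lib (installation directories for Hadoop, HDFS, YARN and Map/Reduce)

After installation, several users (zookeeper, hdfs, yarn, mapred) and the corresponding groups (zookeeper, hadoop, hdfs, yarn, mapred) are created by default.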
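
When the dependency list is long, the package names can be pulled out of the rpm error text instead of being read by eye. The following is a minimal sketch; missing_rpm_deps is a hypothetical helper name, and it assumes each dependency line has the "NAME = VERSION is needed by PACKAGE" form shown in the output above.

```shell
#!/bin/sh
# Hypothetical helper: read rpm output on stdin and print each missing
# package name once, sorted.
missing_rpm_deps() {
    awk '/ is needed by / { print $1 }' | LC_ALL=C sort -u
}

# Usage (2>&1 so that warnings and errors are both captured):
#   sudo rpm -ivh hadoop-conf-pseudo-*.rpm 2>&1 | missing_rpm_deps
```

Each name printed corresponds to an RPM that has to be downloaded and installed first; those packages may report further dependencies of their own.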
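
A quick way to confirm that the packages created the expected accounts is to ask the system for each one. This is a sketch only; missing_accounts is a hypothetical helper name, and it checks users, not groups.

```shell
#!/bin/sh
# Hypothetical helper: print every user name from the arguments that
# does not exist on this machine. No output means all users exist.
missing_accounts() {
    for name in "$@"; do
        id -u "$name" >/dev/null 2>&1 || printf '%s\n' "$name"
    done
}

# Usage:
#   missing_accounts zookeeper hdfs yarn mapred
```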

- Format NameNode

Hadoop is now installed. Before starting it, the NameNode must be formatted, and the format must be run as the hdfs user:

[kevin@localhost init.d]$ sudo -u hdfs hdfs namenode -format
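
Formatting discards any existing HDFS metadata, so a guard can be useful if the step might be run more than once. The sketch below assumes only that a formatted NameNode directory contains a current/VERSION file; namenode_is_formatted is a hypothetical helper, and NAME_DIR is a placeholder for whatever name directory your configuration uses.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the directory given as $1 already
# holds NameNode metadata. Run it as a user who can read that directory.
namenode_is_formatted() {
    [ -f "$1/current/VERSION" ]
}

# Usage (NAME_DIR is a placeholder, not a path taken from this article):
#   if namenode_is_formatted "$NAME_DIR"; then
#       echo "NameNode already formatted, skipping"
#   else
#       sudo -u hdfs hdfs namenode -format
#   fi
```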

- Start Hadoop HDFS

$ for service in /etc/init.d/hadoop-hdfs-*

> do

> sudo $service start

> done

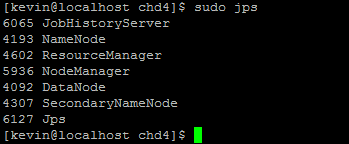

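The running Java processes can be listed with sudo jps.

HDFS started normally, and the file system can be accessed with hadoop fs -ls /. The web console at http://192.168.16.129:50070, however, could not be reached at first. The firewall turned out to be running and was the likely cause; after temporarily turning it off, the console loaded normally.

- Create the initial Hadoop HDFS directories

Running Map/Reduce jobs requires some temporary directories, cache directories and so on. Because these directories involve permission settings, it is best to create them in advance. Here all the required commands are collected in a single script, init-hdfs.sh, and run together.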
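
The jps listing can also be checked mechanically. The sketch below assumes the usual jps output of one "PID ClassName" pair per line; missing_daemons is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: read jps output on stdin and print each expected
# daemon name (given as arguments) that is NOT in the listing.
missing_daemons() {
    jps_output=$(cat)
    for name in "$@"; do
        # Exact match on the second column, so NameNode does not
        # accidentally match SecondaryNameNode.
        printf '%s\n' "$jps_output" |
            awk -v n="$name" '$2 == n { found = 1 } END { exit !found }' ||
            printf '%s\n' "$name"
    done
}

# Usage after starting HDFS:
#   sudo jps | missing_daemons NameNode DataNode SecondaryNameNode
```

No output means every expected daemon is running.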
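
As an alternative to switching the firewall off entirely, the console port alone could be opened. The sketch below only prints the commands so they can be reviewed before being run. It assumes an iptables-based firewall managed through the iptables service, as on RHEL/CentOS 5 and 6; print_open_port_rules is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: print (do not run) the iptables commands that
# would accept incoming TCP traffic on each port given as an argument.
print_open_port_rules() {
    for port in "$@"; do
        printf 'sudo iptables -I INPUT -p tcp --dport %s -j ACCEPT\n' "$port"
    done
    # Persist the rules across restarts of the iptables service.
    printf 'sudo service iptables save\n'
}

# Example: the NameNode web console port used in this article.
print_open_port_rules 50070
```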

[kevin@localhost chd4]$ vi init-hdfs.sh

sudo -u hdfs hadoop fs -mkdir /tmp;

sudo -u hdfs hadoop fs -chmod -R 1777 /tmp;

sudo -u hdfs hadoop fs -mkdir /tmp/hadoop-yarn/staging;

sudo -u hdfs hadoop fs -chmod -R 1777 /tmp/hadoop-yarn/staging;

sudo -u hdfs hadoop fs -mkdir /tmp/hadoop-yarn/staging/history/done_intermediate;

sudo -u hdfs hadoop fs -chmod -R 1777 /tmp/hadoop-yarn/staging/history/done_intermediate;

sudo -u hdfs hadoop fs -chown -R mapred:mapred /tmp/hadoop-yarn/staging;

sudo -u hdfs hadoop fs -mkdir /var/log/hadoop-yarn;

sudo -u hdfs hadoop fs -chown yarn:mapred /var/log/hadoop-yarn;

sudo -u hdfs hadoop fs -mkdir /user/$USER;

sudo -u hdfs hadoop fs -chown $USER /user/$USER;

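After the script succeeds, check that the directories are correct (for example with sudo -u hdfs hadoop fs -ls -R /).

- Start YARN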
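
The same directory setup can be written as a small table-driven function with a dry-run switch, which makes it easy to review the exact commands before touching HDFS. This is a sketch, not the script used above: hdfs_do and init_hdfs_dirs are hypothetical names, DRY_RUN is an invented variable, and the paths assume /tmp/hadoop-yarn is used consistently.

```shell
#!/bin/sh
# Hypothetical wrapper: run a "hadoop fs" command as the hdfs user, or
# just print it when DRY_RUN=1.
hdfs_do() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf 'sudo -u hdfs hadoop fs %s\n' "$*"
    else
        sudo -u hdfs hadoop fs "$@" </dev/null
    fi
}

# Hypothetical helper: create each directory, then apply the mode and
# owner listed for it ("-" means leave unchanged).
init_hdfs_dirs() {
    user=${1:-$USER}
    while read -r path mode owner; do
        hdfs_do -mkdir "$path"
        [ "$mode" = - ] || hdfs_do -chmod -R "$mode" "$path"
        [ "$owner" = - ] || hdfs_do -chown "$owner" "$path"
    done <<EOF
/tmp 1777 -
/tmp/hadoop-yarn/staging 1777 -
/tmp/hadoop-yarn/staging/history/done_intermediate 1777 -
/var/log/hadoop-yarn - yarn:mapred
/user/$user - $user
EOF
    hdfs_do -chown -R mapred:mapred /tmp/hadoop-yarn/staging
}

# Usage: preview first, then run for real.
#   DRY_RUN=1 init_hdfs_dirs kevin
#   init_hdfs_dirs kevin
```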

sudo /etc/init.d/hadoop-yarn-resourcemanager start;

sudo /etc/init.d/hadoop-yarn-nodemanager start;

sudo /etc/init.d/hadoop-mapreduce-historyserver start;

Check the status.

The job history server can be reached on port 19888.

At this point, the basic HDFS and YARN (Map/Reduce) services are installed and running normally.

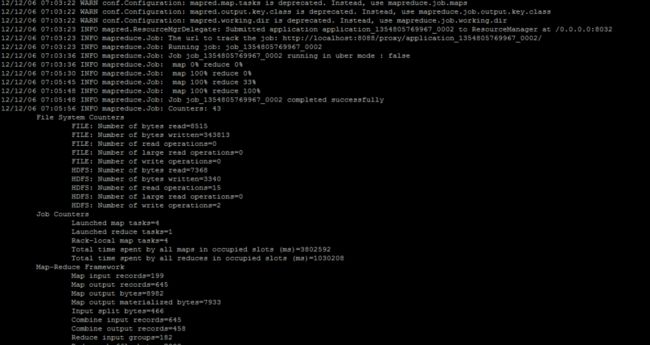

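- Run the first Map/Reduce job

With the installation complete, it is time to run a Hello World. Hadoop ships with an example, word count, which is regarded as Hadoop's Hello World. Running this job also verifies that the environment is ready.

The following command runs the word count Map/Reduce job, but the first attempt failed.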

[kevin@localhost ~]$ hadoop jar /usr/lib/hadoop-mapreduce/hadoop-mapreduce-examples.jar wordcount /user/kevin/test /user/kevin/ouput

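The logs showed two problems: a class not found error and incorrect permissions.

For the first problem, add the following to hadoop-env.sh and yarn-env.sh so that the required jars are loaded when Map/Reduce runs: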

export HADOOP_MAPRED_HOME=/usr/lib/hadoop-mapreduce

export HADOOP_COMMON_HOME=/usr/lib/hadoop

export HADOOP_HDFS_HOME=/usr/lib/hadoop-hdfs

export HADOOP_YARN_HOME=/usr/lib/hadoop-yarn

export HADOOP_CONF_DIR=/etc/hadoop/conf

export YARN_CONF_DIR=$HADOOP_CONF_DIR
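
Since the same lines go into two files, a helper that appends a line only when it is not already present keeps repeated runs from duplicating entries. A minimal sketch follows; append_once is a hypothetical helper name, and the file locations in the usage comment assume the scripts live under /etc/hadoop/conf.

```shell
#!/bin/sh
# Hypothetical helper: append the line $2 to the file $1 unless an
# identical line is already there. Creates the file if it is missing.
append_once() {
    file=$1
    line=$2
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Usage (run as root, since the files are not writable by normal users):
#   for f in /etc/hadoop/conf/hadoop-env.sh /etc/hadoop/conf/yarn-env.sh; do
#       append_once "$f" 'export HADOOP_MAPRED_HOME=/usr/lib/hadoop-mapreduce'
#       append_once "$f" 'export HADOOP_CONF_DIR=/etc/hadoop/conf'
#   done
```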

The second problem was the permissions error. After granting the permissions again, the job ran successfully.
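
To sanity-check the job's result on a small input, the same counts can be computed locally and compared with the HDFS output. The sketch below is a local approximation rather than the Hadoop example itself: it assumes words are separated by whitespace and prints "word<TAB>count" lines sorted by word. local_wordcount is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: count whitespace-separated words read from stdin
# and print "word<TAB>count", sorted by word.
local_wordcount() {
    tr -s '[:space:]' '\n' |
        awk 'NF { count[$0]++ }
             END { for (w in count) printf "%s\t%d\n", w, count[w] }' |
        LC_ALL=C sort
}

# Usage: compare against the job output (the part file name below is the
# usual default and may differ on your system):
#   local_wordcount < words.txt > expected.txt
#   hadoop fs -cat /user/kevin/ouput/part-r-00000 | diff - expected.txt
```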

That completes the installation and testing, and the Hadoop journey can begin.