Face Recognition: Eye Localization, Face Alignment, and Face Size Normalization

The code comes from the book Mastering OpenCV with Practical Computer Vision Projects.

Several of her other articles are also well translated:

http://blog.csdn.net/raby_gyl/article/details/12611861

http://blog.csdn.net/raby_gyl/article/details/12623539

http://blog.csdn.net/raby_gyl/article/details/12338371

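I find the program below, which localizes the eyes, aligns the face, and normalizes the face size, very helpful for beginners in face recognition who need to preprocess face images.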

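The program works as follows. First, a face-detection cascade finds the face region. Within that region, an empirical rule (each eye detector performs best within a particular search window of the face) gives the approximate location of each eye relative to the face rectangle. OpenCV's eye cascade detectors are then run inside those windows to obtain the center of each eye. The angle between the line joining the two eye centers and the horizontal gives the rotation needed to straighten the face. The size of the desired target image determines the scale factor of the affine matrix (how much the image is scaled), and a translation then places the eyes at the desired position in the target image.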
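To make the empirical eye search windows concrete, here is a minimal sketch in plain C++ without OpenCV. The fractions are the ones the program uses for haarcascade_eye.xml; the `Box` type and the function names are hypothetical stand-ins introduced only for illustration, and `std::lround` replaces `cvRound` (the two may differ on exact .5 ties).

```cpp
#include <cassert>
#include <cmath>

// Hypothetical minimal rectangle type, standing in for cv::Rect.
struct Box { int x, y, width, height; };

// Search-window fractions used by the program for haarcascade_eye.xml.
const double EYE_SX = 0.16;  // left margin as a fraction of the face width
const double EYE_SY = 0.26;  // top margin as a fraction of the face height
const double EYE_SW = 0.30;  // window width as a fraction of the face width
const double EYE_SH = 0.28;  // window height as a fraction of the face height

// Round to the nearest integer (stand-in for cvRound).
static int roundToInt(double v) { return static_cast<int>(std::lround(v)); }

// Window in which the eye on the left side of the face image is searched.
Box leftEyeSearchWindow(int faceW, int faceH) {
    assert(faceW > 0 && faceH > 0);
    return { roundToInt(faceW * EYE_SX), roundToInt(faceH * EYE_SY),
             roundToInt(faceW * EYE_SW), roundToInt(faceH * EYE_SH) };
}

// Mirror image of the left window: it starts at 1 - EYE_SX - EYE_SW.
Box rightEyeSearchWindow(int faceW, int faceH) {
    assert(faceW > 0 && faceH > 0);
    return { roundToInt(faceW * (1.0 - EYE_SX - EYE_SW)), roundToInt(faceH * EYE_SY),
             roundToInt(faceW * EYE_SW), roundToInt(faceH * EYE_SH) };
}
```

For a 200*200 face rectangle, the left window is (32, 52, 60, 56) and the right window starts at x = 108, so both windows sit symmetrically about the vertical center line of the face.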

The code is as follows:

- #include "stdafx.h"

- #include "opencv2/imgproc/imgproc.hpp"

- #include "opencv2/highgui/highgui.hpp"

- #include "opencv2/opencv.hpp"

- #include<iostream>

- #include<vector>

- using namespace std;

- using namespace cv;

- const double DESIRED_LEFT_EYE_X = 0.16; // controls how much of the face is visible after preprocessing

- const double DESIRED_LEFT_EYE_Y = 0.14;

- const double FACE_ELLIPSE_CY = 0.40;

- const double FACE_ELLIPSE_W = 0.50; // should be at least 0.5

- const double FACE_ELLIPSE_H = 0.80; // controls how tall the face mask is

- /*--------------------------------------object detection-------------------------------------*/

- void detectObjectsCustom(const Mat &img, CascadeClassifier &cascade, vector<Rect> &objects, int scaledWidth, int flags, Size minFeatureSize, float searchScaleFactor, int minNeighbors);

- void detectLargestObject(const Mat &img, CascadeClassifier &cascade, Rect &largestObject, int scaledWidth);

- void detectManyObjects(const Mat &img, CascadeClassifier &cascade, vector<Rect> &objects, int scaledWidth);

- /*------------------------------------- end------------------------------------------*/

- void detectBothEyes(const Mat &face, CascadeClassifier &eyeCascade1, CascadeClassifier &eyeCascade2, Point &leftEye, Point &rightEye, Rect *searchedLeftEye, Rect *searchedRightEye);

- Mat getPreprocessedFace(Mat &srcImg, int desiredFaceWidth, CascadeClassifier &faceCascade, CascadeClassifier &eyeCascade1, CascadeClassifier &eyeCascade2, bool doLeftAndRightSeparately, Rect *storeFaceRect, Point *storeLeftEye, Point *storeRightEye, Rect *searchedLeftEye, Rect *searchedRightEye);

- int main(int argc,char **argv)

- {

- CascadeClassifier faceDetector;

- CascadeClassifier eyeDetector1;

- CascadeClassifier eyeDetector2;// optional second eye detector; can be left unloaded if not needed

- try{

- //faceDetector.load("E:\\OpenCV-2.3.0\\data\\haarcascades\\haarcascade_frontalface_alt.xml");

- faceDetector.load("E:\\OpenCV-2.3.0\\data\\lbpcascades\\lbpcascade_frontalface.xml");

- eyeDetector1.load("E:\\OpenCV-2.3.0\\data\\haarcascades\\haarcascade_eye.xml");

- eyeDetector2.load("E:\\OpenCV-2.3.0\\data\\haarcascades\\haarcascade_eye_tree_eyeglasses.xml");

- }catch (const cv::Exception &e){}

- if(faceDetector.empty())

- {

- cerr<<"error:couldn't load face detector (";

- cerr<<"lbpcascade_frontalface.xml)!"<<endl;

- exit(1);

- }

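- if(argc<2)

- {

- cerr<<"usage: "<<argv[0]<<" <image>"<<endl;

- exit(1);

- }

- Mat img=imread(argv[1],1);

- if(img.empty())

- {

- cerr<<"error:couldn't read image "<<argv[1]<<endl;

- exit(1);

- }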

- Rect largestObject;

- const int scaledWidth=320;

- detectLargestObject(img,faceDetector,largestObject,scaledWidth);

- if(largestObject.width<=0)

- {

- cerr<<"error:no face detected!"<<endl;

- exit(1);

- }

- Mat img_rect(img,largestObject);

- Point leftEye,rightEye;

- Rect searchedLeftEye,searchedRightEye;

- detectBothEyes(img_rect,eyeDetector1,eyeDetector2,leftEye,rightEye,&searchedLeftEye,&searchedRightEye);

- // affine transformation

- Point2f eyesCenter;

- eyesCenter.x=(leftEye.x+rightEye.x)*0.5f;

- eyesCenter.y=(leftEye.y+rightEye.y)*0.5f;

- cout<<"left eye center: "<<leftEye.x<<" and "<<leftEye.y<<endl;

- cout<<"right eye center: "<<rightEye.x<<" and "<<rightEye.y<<endl;

- // compute the angle of the line joining the two eyes

- double dy=(rightEye.y-leftEye.y);

- double dx=(rightEye.x-leftEye.x);

- double len=sqrt(dx*dx+dy*dy);

- cout<<"dx is "<<dx<<endl;

- cout<<"dy is "<<dy<<endl;

- cout<<"len is "<<len<<endl;

- double angle=atan2(dy,dx)*180.0/CV_PI;

- const double DESIRED_RIGHT_EYE_X=1.0f-DESIRED_LEFT_EYE_X;

- // the normalized face size we want

- const int DESIRED_FACE_WIDTH=70;

- const int DESIRED_FACE_HEIGHT=70;

- double desiredLen=(DESIRED_RIGHT_EYE_X-DESIRED_LEFT_EYE_X);

- cout<<"desiredlen is "<<desiredLen<<endl;

- double scale=desiredLen*DESIRED_FACE_WIDTH/len;

- cout<<"the scale is "<<scale<<endl;

- Mat rot_mat = getRotationMatrix2D(eyesCenter, angle, scale);

- double ex=DESIRED_FACE_WIDTH * 0.5f - eyesCenter.x;

- double ey = DESIRED_FACE_HEIGHT * DESIRED_LEFT_EYE_Y-eyesCenter.y;

- rot_mat.at<double>(0, 2) += ex;

- rot_mat.at<double>(1, 2) += ey;

- Mat warped = Mat(DESIRED_FACE_HEIGHT, DESIRED_FACE_WIDTH,CV_8U, Scalar(128));

- warpAffine(img_rect, warped, rot_mat, warped.size());

- imshow("warped",warped);

- rectangle(img,Point(largestObject.x,largestObject.y),Point(largestObject.x+largestObject.width,largestObject.y+largestObject.height),Scalar(0,0,255),2,8);

- rectangle(img_rect,Point(searchedLeftEye.x,searchedLeftEye.y),Point(searchedLeftEye.x+searchedLeftEye.width,searchedLeftEye.y+searchedLeftEye.height),Scalar(0,255,0),2,8);

- rectangle(img_rect,Point(searchedRightEye.x,searchedRightEye.y),Point(searchedRightEye.x+searchedRightEye.width,searchedRightEye.y+searchedRightEye.height),Scalar(0,255,0),2,8);

- //getPreprocessedFace

- imshow("img_rect",img_rect);

- imwrite("img_rect.jpg",img_rect);

- imshow("img",img);

- waitKey();

- }

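- /*

- 1. Search for objects such as faces in the image using the given parameters.

- 2. Works with Haar or LBP cascades for face detection, or even eye, nose, or car detection.

- 3. To speed up detection, the input image is temporarily shrunk to 'scaledWidth', since a width of about 200 is enough for finding faces.

- */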

- void detectObjectsCustom(const Mat &img, CascadeClassifier &cascade, vector<Rect> &objects, int scaledWidth, int flags, Size minFeatureSize, float searchScaleFactor, int minNeighbors)

- {

- // If the input image is not grayscale, convert the BGR or BGRA color image to grayscale

- Mat gray;

- if (img.channels() == 3) {

- cvtColor(img, gray, CV_BGR2GRAY);

- }

- else if (img.channels() == 4) {

- cvtColor(img, gray, CV_BGRA2GRAY);

- }

- else {

- // Use the input image directly, since it is already grayscale

- gray = img;

- }

- // Possibly shrink the image so that detection runs faster

- Mat inputImg;

- float scale = img.cols / (float)scaledWidth;

- if (img.cols > scaledWidth) {

- // Shrink the image while keeping the same aspect ratio

- int scaledHeight = cvRound(img.rows / scale);

- resize(gray, inputImg, Size(scaledWidth, scaledHeight));

- }

- else {

- // Use the input image directly, since it is already small

- inputImg = gray;

- }

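- // Normalize brightness and contrast to improve dark images

- Mat equalizedImg;

- equalizeHist(inputImg, equalizedImg);

- // Detect objects in the small grayscale image

- cascade.detectMultiScale(equalizedImg, objects, searchScaleFactor, minNeighbors, flags, minFeatureSize);

- // If the image was temporarily shrunk before detection, scale the detected rectangles back up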

- if (img.cols > scaledWidth) {

- for (int i = 0; i < (int)objects.size(); i++ ) {

- objects[i].x = cvRound(objects[i].x * scale);

- objects[i].y = cvRound(objects[i].y * scale);

- objects[i].width = cvRound(objects[i].width * scale);

- objects[i].height = cvRound(objects[i].height * scale);

- }

- }

- // Make sure each object lies completely within the image, in case it was on a border

- for (int i = 0; i < (int)objects.size(); i++ ) {

- if (objects[i].x < 0)

- objects[i].x = 0;

- if (objects[i].y < 0)

- objects[i].y = 0;

- if (objects[i].x + objects[i].width > img.cols)

- objects[i].x = img.cols - objects[i].width;

- if (objects[i].y + objects[i].height > img.rows)

- objects[i].y = img.rows - objects[i].height;

- }

- // Return with the detected rectangles stored in 'objects'

- }

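- /*

- 1. Search for just a single object in the image, such as the largest face, and store the result in 'largestObject'.

- 2. Works with Haar or LBP cascades for face detection, or even eye, nose, or car detection.

- 3. To speed up detection, the input image is temporarily shrunk to 'scaledWidth', since a width of about 200 is enough for finding faces.

- 4. Note: detectLargestObject() is faster than detectManyObjects().

- */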

- void detectLargestObject(const Mat &img, CascadeClassifier &cascade, Rect &largestObject, int scaledWidth)

- {

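- // Only search for one object (the biggest in the image).

- int flags = CV_HAAR_FIND_BIGGEST_OBJECT;// | CASCADE_DO_ROUGH_SEARCH;

- // Smallest object size.

- Size minFeatureSize = Size(20, 20);

- // How detailed the search is; the scale factor must be larger than 1.0.

- float searchScaleFactor = 1.1f;

- // How strongly detections are filtered; this depends on how harmful false detections are to your system.

- // minNeighbors=2 gives many good and bad detections; minNeighbors=6 gives only good detections but misses some (reliability vs. number of detected faces).

- int minNeighbors = 4;

- // Perform object or face detection, looking for just one object (the biggest in the image).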

- vector<Rect> objects;

- detectObjectsCustom(img, cascade, objects, scaledWidth, flags, minFeatureSize, searchScaleFactor, minNeighbors);

- if (objects.size() > 0) {

- // Return the only detected object

- largestObject = (Rect)objects.at(0);

- }

- else {

- // Return an invalid rect

- largestObject = Rect(-1,-1,-1,-1);

- }

- }

- void detectManyObjects(const Mat &img, CascadeClassifier &cascade, vector<Rect> &objects, int scaledWidth)

- {

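- // Search for many objects in the image.

- int flags = CV_HAAR_SCALE_IMAGE;

- // Smallest object size.

- Size minFeatureSize = Size(20, 20);

- // How detailed the search is; the scale factor must be larger than 1.0.

- float searchScaleFactor = 1.1f;

- // How strongly detections are filtered; this depends on how harmful false detections are to your system.

- // minNeighbors=2 gives many good and bad detections; minNeighbors=6 gives only good detections but misses some (reliability vs. number of detected faces).

- int minNeighbors = 4;

- // Perform object or face detection, looking for many objects in the image.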

- detectObjectsCustom(img, cascade, objects, scaledWidth, flags, minFeatureSize, searchScaleFactor, minNeighbors);

- }

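- /*

- 1. Search for both eyes within the given face image. Returns the eye centers in 'leftEye' and 'rightEye', or sets them to Point(-1,-1) if an eye was not found.

- 2. You can pass a second eye cascade if you want to search with two different cascades, for example a regular eye detector together with an eyeglasses detector, or a left-eye detector together with a right-eye detector.

- If you don't want a second detector, just pass an uninitialized CascadeClassifier.

- 3. Can also store the searched left and right eye regions if desired.

- */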

- void detectBothEyes(const Mat &face, CascadeClassifier &eyeCascade1, CascadeClassifier &eyeCascade2, Point &leftEye, Point &rightEye, Rect *searchedLeftEye, Rect *searchedRightEye)

- {

- // Skip the borders of the face, since they are usually just hair and ears, which we don't care about

- /*

- // For "2splits.xml": Finds both eyes in roughly 60% of detected faces, also detects closed eyes.

- const float EYE_SX = 0.12f;

- const float EYE_SY = 0.17f;

- const float EYE_SW = 0.37f;

- const float EYE_SH = 0.36f;

- */

- /*

- // For mcs.xml: Finds both eyes in roughly 80% of detected faces, also detects closed eyes.

- const float EYE_SX = 0.10f;

- const float EYE_SY = 0.19f;

- const float EYE_SW = 0.40f;

- const float EYE_SH = 0.36f;

- */

- // For default eye.xml or eyeglasses.xml: Finds both eyes in roughly 40% of detected faces, but does not detect closed eyes.

- // The haarcascade_eye.xml detector works best when searching within the face region defined below.

- const float EYE_SX = 0.16f;//x

- const float EYE_SY = 0.26f;//y

- const float EYE_SW = 0.30f;//width

- const float EYE_SH = 0.28f;//height

- int leftX = cvRound(face.cols * EYE_SX);

- int topY = cvRound(face.rows * EYE_SY);

- int widthX = cvRound(face.cols * EYE_SW);

- int heightY = cvRound(face.rows * EYE_SH);

- int rightX = cvRound(face.cols * (1.0-EYE_SX-EYE_SW) ); // x where the right-eye search region starts

- Mat topLeftOfFace = face(Rect(leftX, topY, widthX, heightY));

- Mat topRightOfFace = face(Rect(rightX, topY, widthX, heightY));

- Rect leftEyeRect, rightEyeRect;

- // Return the search windows to the caller, if desired

- if (searchedLeftEye)

- *searchedLeftEye = Rect(leftX, topY, widthX, heightY);

- if (searchedRightEye)

- *searchedRightEye = Rect(rightX, topY, widthX, heightY);

- // Search the left region, then the right region, using the first eye detector

- detectLargestObject(topLeftOfFace, eyeCascade1, leftEyeRect, topLeftOfFace.cols);

- detectLargestObject(topRightOfFace, eyeCascade1, rightEyeRect, topRightOfFace.cols);

- // If the eye was not detected, try a different cascade detector

- if (leftEyeRect.width <= 0 && !eyeCascade2.empty()) {

- detectLargestObject(topLeftOfFace, eyeCascade2, leftEyeRect, topLeftOfFace.cols);

- //if (leftEyeRect.width > 0)

- // cout << "2nd eye detector LEFT SUCCESS" << endl;

- //else

- // cout << "2nd eye detector LEFT failed" << endl;

- }

- //else

- // cout << "1st eye detector LEFT SUCCESS" << endl;

- // If the eye was not detected, try a different cascade detector

- if (rightEyeRect.width <= 0 && !eyeCascade2.empty()) {

- detectLargestObject(topRightOfFace, eyeCascade2, rightEyeRect, topRightOfFace.cols);

- //if (rightEyeRect.width > 0)

- // cout << "2nd eye detector RIGHT SUCCESS" << endl;

- //else

- // cout << "2nd eye detector RIGHT failed" << endl;

- }

- //else

- // cout << "1st eye detector RIGHT SUCCESS" << endl;

- if (leftEyeRect.width > 0) { // check whether the eye was detected

- leftEyeRect.x += leftX; // adjust the left-eye rectangle because the face border was removed

- leftEyeRect.y += topY;

- leftEye = Point(leftEyeRect.x + leftEyeRect.width/2, leftEyeRect.y + leftEyeRect.height/2);

- }

- else {

- leftEye = Point(-1, -1); // return an invalid point

- }

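- if (rightEyeRect.width > 0) { // check whether the eye was detected

- rightEyeRect.x += rightX; // adjust the right-eye rectangle, since its window starts on the right side of the image

- rightEyeRect.y += topY; // adjust the right-eye rectangle because the face border was removed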

- rightEye = Point(rightEyeRect.x + rightEyeRect.width/2, rightEyeRect.y + rightEyeRect.height/2);

- }

- else {

- rightEye = Point(-1, -1); // return an invalid point

- }

- }

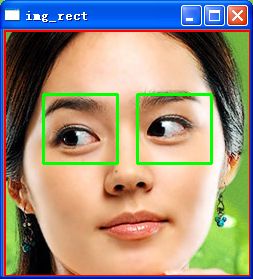

Results:

1.

2. The detected face rectangle:

3. The face after alignment and size normalization to 70*70:

Some remarks:

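1. The code is a small excerpt from the original; once the environment is set up, it runs directly. The original program is probably intended for a single frontal face captured by a webcam, not for general images that contain a group of people, small faces, or non-frontal faces. You can try it on the Lena image: it detects only the eye on the left side of the image (the subject's actual right eye) and misses the other one, so detectBothEyes returns the invalid point Point(-1,-1) as that eye's center, and the rotation computed afterwards is wrong as well. When you test this program, be sure to choose a suitable image.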

2. My understanding of the rotation and translation code:

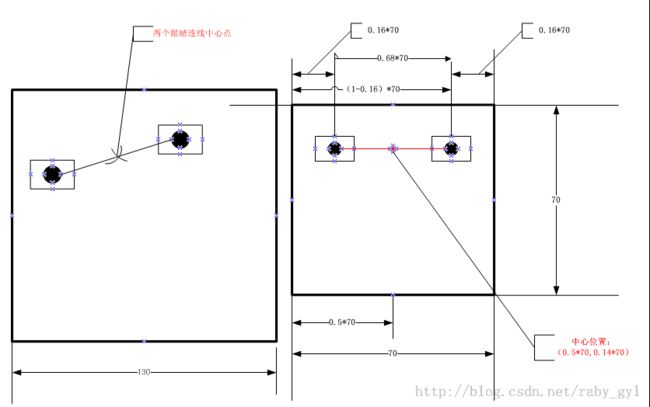

First, look at the figure:

This is our target image. It must satisfy the following requirements:

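(1) its size is 70*70;

(2) the distance between the two eyes is (1-0.16-0.16)*70 = 0.68*70 = 47.6 pixels. (In the figure, (0.16, 0.14) is the relative position of the left eye center in the image. Since the eyes are symmetric, the right eye lies at the relative position (0.84, 0.14), so the relative distance between them is 0.84-0.16 = 0.68. Multiplying by 70 gives the actual pixel positions in this example.)

(3) the midpoint of the line joining the two eyes is at a fixed position, here (0.5*70, 0.14*70).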
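As a quick check of these numbers, the following sketch computes the target eye positions in pixels. It uses plain C++ rather than OpenCV; the `Pt` type and the function names are hypothetical and exist only for this illustration, while the two constants are the ones defined at the top of the program.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical minimal point type, standing in for cv::Point2f.
struct Pt { double x, y; };

// Relative position of the left eye, as in the program's constants.
const double DESIRED_LEFT_EYE_X = 0.16;
const double DESIRED_LEFT_EYE_Y = 0.14;

// Left eye center in pixels for a w x h target image.
Pt desiredLeftEye(int w, int h) {
    assert(w > 0 && h > 0);
    return { DESIRED_LEFT_EYE_X * w, DESIRED_LEFT_EYE_Y * h };
}

// Right eye: mirrored about the vertical center line, at the same height.
Pt desiredRightEye(int w, int h) {
    assert(w > 0 && h > 0);
    return { (1.0 - DESIRED_LEFT_EYE_X) * w, DESIRED_LEFT_EYE_Y * h };
}

// Distance in pixels between the two desired eye centers.
double desiredEyeDistance(int w, int h) {
    Pt l = desiredLeftEye(w, h);
    Pt r = desiredRightEye(w, h);
    return std::hypot(r.x - l.x, r.y - l.y);
}
```

For the 70*70 target, this gives a left eye at (11.2, 9.8), a right eye at (58.8, 9.8), and an eye distance of 47.6 pixels.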

With these three conditions, we can pin a pair of eyes to a specific position in an image of fixed size (70*70 here). The code below does exactly that:

- Point2f eyesCenter;// midpoint of the line joining the two eyes in the source image (see the reference figure)

- eyesCenter.x=(leftEye.x+rightEye.x)*0.5f;

- eyesCenter.y=(leftEye.y+rightEye.y)*0.5f;

- double dy=(rightEye.y-leftEye.y);

- double dx=(rightEye.x-leftEye.x);

- double len=sqrt(dx*dx+dy*dy);// distance between the two eyes in the source image

- double angle=atan2(dy,dx)*180.0/CV_PI;// rotation angle in degrees

- // positions in the target image

- const double DESIRED_RIGHT_EYE_X=1.0f-DESIRED_LEFT_EYE_X;

- const int DESIRED_FACE_WIDTH=70;

- const int DESIRED_FACE_HEIGHT=70;

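- double desiredLen=(DESIRED_RIGHT_EYE_X-DESIRED_LEFT_EYE_X);// relative distance between the two eyes in the target image; multiply by WIDTH=70 to get pixels

- double scale=desiredLen*DESIRED_FACE_WIDTH/len;// target eye distance divided by source eye distance gives the scale factor of the rotation matrix

- Mat rot_mat = getRotationMatrix2D(eyesCenter, angle, scale);// rotate about the source eye midpoint by 'angle' and scale by 'scale'

- // The tricky part: translate the midpoint to control where the two eyes land in the image:

- double ex=DESIRED_FACE_WIDTH * 0.5f - eyesCenter.x;// x translation: x of the target eye midpoint minus x of the source eye midpoint

- double ey = DESIRED_FACE_HEIGHT * DESIRED_LEFT_EYE_Y-eyesCenter.y;// y translation: y of the target eye midpoint minus y of the source eye midpoint

- rot_mat.at<double>(0, 2) += ex;// add it to the matrix entry that controls the x translation

- rot_mat.at<double>(1, 2) += ey;// add it to the matrix entry that controls the y translation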

- Mat warped = Mat(DESIRED_FACE_HEIGHT, DESIRED_FACE_WIDTH,CV_8U, Scalar(128));

- warpAffine(img_rect, warped, rot_mat, warped.size());

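Imagine the code above without the final translation of the midpoint. The matrix from getRotationMatrix2D leaves the rotation center where it was, so it controls only the image scale and the position of the two eyes relative to each other, not where the eyes end up in the image. The translation is what fixes the two eyes at a set position in the image.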
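To see this numerically, the sketch below rebuilds the same 2x3 matrix in plain C++ and applies it to the eye centers. It is an illustration under stated assumptions rather than the book's code: `Pt`, `Affine2x3`, `rotationMatrix2D`, `alignmentTransform`, and `apply` are hypothetical names, and `rotationMatrix2D` follows the formula documented for `cv::getRotationMatrix2D` (alpha = scale*cos(angle), beta = scale*sin(angle)).

```cpp
#include <cassert>
#include <cmath>

// Hypothetical stand-ins for cv::Point2f and the 2x3 CV_64F matrix.
struct Pt { double x, y; };
struct Affine2x3 { double m[2][3]; };

const double PI = 3.14159265358979323846;

// Rotation about 'center' by angleDeg with isotropic scaling, using the
// formula documented for cv::getRotationMatrix2D.
Affine2x3 rotationMatrix2D(Pt center, double angleDeg, double scale) {
    double a = angleDeg * PI / 180.0;
    double alpha = scale * std::cos(a);
    double beta = scale * std::sin(a);
    Affine2x3 r;
    r.m[0][0] = alpha; r.m[0][1] = beta;
    r.m[0][2] = (1.0 - alpha) * center.x - beta * center.y;
    r.m[1][0] = -beta; r.m[1][1] = alpha;
    r.m[1][2] = beta * center.x + (1.0 - alpha) * center.y;
    return r;
}

// Build the alignment matrix the way the program does: rotate and scale
// about the eye midpoint, then shift the midpoint to its target position.
Affine2x3 alignmentTransform(Pt leftEye, Pt rightEye, int w, int h,
                             double leftEyeX = 0.16, double leftEyeY = 0.14) {
    Pt c = { (leftEye.x + rightEye.x) * 0.5, (leftEye.y + rightEye.y) * 0.5 };
    double dx = rightEye.x - leftEye.x;
    double dy = rightEye.y - leftEye.y;
    double len = std::hypot(dx, dy);
    assert(len > 0.0);  // both eyes must be distinct, valid points
    double angle = std::atan2(dy, dx) * 180.0 / PI;
    double scale = (1.0 - 2.0 * leftEyeX) * w / len;
    Affine2x3 M = rotationMatrix2D(c, angle, scale);
    M.m[0][2] += w * 0.5 - c.x;       // ex in the program
    M.m[1][2] += h * leftEyeY - c.y;  // ey in the program
    return M;
}

// Map a source point to the target image with the forward transform.
Pt apply(const Affine2x3& M, Pt p) {
    return { M.m[0][0] * p.x + M.m[0][1] * p.y + M.m[0][2],
             M.m[1][0] * p.x + M.m[1][1] * p.y + M.m[1][2] };
}
```

With eyes at (30, 40) and (70, 50) and a 70*70 target, `apply` maps them to (11.2, 9.8) and (58.8, 9.8), the positions required by the target layout. Without the two `+=` lines, the midpoint would stay at (50, 45), outside the place reserved for it in the target image.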

Background (affine transformation):

In the figure above, a0 and b0 are the terms that control translation. If we set a2=1, a1=0, b2=0, b1=1, the transformation reduces to u=x+a0, v=y+b0.
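For reference, the general form that this special case implies can be written in matrix notation. The assignment of coefficients to terms is inferred from the special case above, so treat it as an assumption about the figure's notation rather than a quotation of it.

```latex
\begin{pmatrix} u \\ v \end{pmatrix}
=
\begin{pmatrix} a_2 & a_1 \\ b_2 & b_1 \end{pmatrix}
\begin{pmatrix} x \\ y \end{pmatrix}
+
\begin{pmatrix} a_0 \\ b_0 \end{pmatrix}
```

In the program, rot_mat is the 2x3 matrix whose third column holds a0 and b0, which is why adding ex to rot_mat.at<double>(0, 2) and ey to rot_mat.at<double>(1, 2) shifts the whole result without changing its rotation or scale.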

Reference figure: