nutch搜索引擎架设 之 抓取模块

一、准备工作

jdk:http://www.oracle.com/technetwork/java/javase/downloads/index.html

tomcat:http://tomcat.apache.org/

eclipse:http://www.eclipse.org/downloads/

Cygwin:http://www.cygwin.com/ 下载里面的setup.exe (用于模拟linux环境,nutch 是适应于linux平台的,)

nutch0.9:http://www.kuaipan.cn/file/id_16410726440632359.htm(开源的基于java的搜索引擎)

缺省jar下载:rtf-parser.jar:http://www.kuaipan.cn/file/id_16410726440632361.htm

jid3lib-0.5.1.jar:http://www.kuaipan.cn/file/id_16410726440632360.htm

二、安装

将下面下载到的(除了缺省jar)进行安装

jdk 安装:点击下载下来的安装包进行安装,别忘了配环境变量,这里不再详述

tomcat 安装:安装好以后,启动startup.bat 在浏览器端测试 http://localhost:8080/ 如出现欢迎界面即安装成功,如果安装不成功 在cmd 里运行startup.bat 查看错误信息,如果一闪而过,可手动修改startup.bat 将里面的这句 call "%EXECUTABLE%" start %CMD_LINE_ARGS%

修改为 call "%EXECUTABLE%" run %CMD_LINE_ARGS%

然后再在cmd里运行startup根据提供的具体错误信息来处理。

eclipse:这个没什么好说的。

cygwin:按提示一步一步install即可

nutch 0.9:解压到你的工程根目录里

缺省jar:将rtf-parser.jar 放到nutch目录 nutch-0.9\src\plugin\parse-rtf\lib 里面 将 jid3lib-0.5.1.jar 放到 nutch-0.9\src\plugin\parse-mp3\lib 里面

三、配置与加载nutch

首先打开Cygwin 会出现一个类似cmd的命令行的窗口,其命令格式与dos几乎差不多,不懂得可以输入help看详细的命令说明

然后进入你的nutch-0.9 目录

然后运行nutch 命令:

bin/nutch

如图所示会出现关于nutch命令的说明 即表示nutch运行环境搭建成功。

接着开始导入和配置nutch-0.9:

导入:

首先将nutch-0.9 作为工程项目倒入eclipse中。

打开eclipse,新建java项目,添加nutch-0.9 目录作为项目源

然后不要急着点完成

在Source选项卡 配置默认输出文件路径my_build

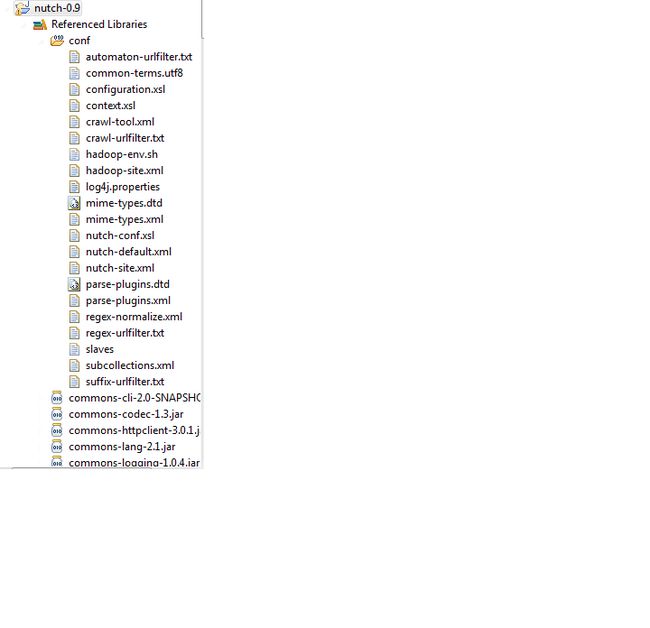

在Libraries选项卡将nutch目录的conf文件添加进来

在Order and Export选项卡 将conf文件置顶

刷新项目工程,这下基本将nutch完整地导入到eclipse中了

配置:

1.修改nutch-default.xml文件

在eclipse打开刚才倒入的项目 -〉 Referenced Libraries -〉conf 找到其中的nutch-default.xml文件

找到<name>plugin.folders</name>这个属性 将下面以行的value值修改为<value>./src/plugin</value>

2.配置nutch抓取策略

在nutch-0.9 工程根目录建立weburl.txt 用以存放搜索引擎要抓取内容得网址,里面填入要抓取的网址(例如:http://www.buct.edu.cn/)

然后在conf文件中找到crawl_urlfilter.txt

找到 :

# accept hosts in MY.DOMAIN.NAME

# +^http://([a-z0-9]*\.)*MY.DOMAIN.NAME/

修改为:

# accept hosts in MY.DOMAIN.NAME

# +^http://([a-z0-9]*\.)*MY.DOMAIN.NAME/

+^http://www.buct.edu.cn/

即配置仅抓取此网站的内容

最后配置conf里的nutch-site.xml文件

将下面一段内容完全覆盖nutch-site.xml文件中的内容:

<?xml version="1.0"?> <?xml-stylesheet type="text/xsl" href="configuration.xsl"?> <!-- Put site-specific property overrides in this file. --> <configuration> <property> <name>http.agent.name</name> <value>ahathinkingSpider</value> <description>My Search Engine</description> </property> <property> <name>http.agent.description</name> <value>My web</value> <description>Further description of our bot- this text is used in User-Agent header. It appears in parenthesis after the agent name.</description> </property> <property> <name>http.agent.url</name> <value>ahathinking.com</value> <description>A URL to advertise in the User-Agent header. This will appear in parenthesis after the agent name. Custom dictates that this should be a URL of a page explaining the purpose and behavior. of this </description> </property> <property> <name>http.agent.email</name> <value>[email protected]</value> <description>An email address to advertise in the HTTP 'From' request header and User-Agent header. A good practice is to mangle this address (e.g. 'info at example dot com') to avoid spamming. </description> </property> </configuration>

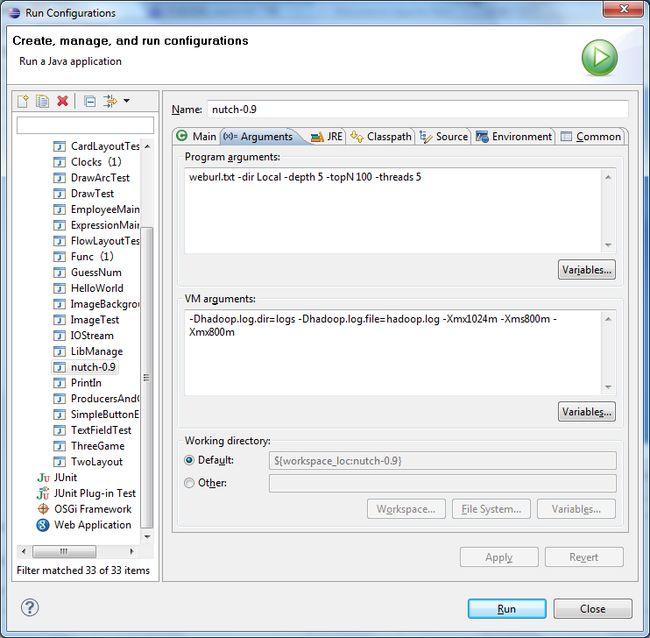

3.配置运行参数:

选取 nutch 项目,在运行栏里选择 Run Configurations... 修改相关运行参数

修改如下:

注意修改完后点击Apply

注意修改完后点击Apply

最后点击Run运行

crawl started in: Local rootUrlDir = weburl.txt threads = 5 depth = 5 topN = 100 Injector: starting Injector: crawlDb: Local/crawldb Injector: urlDir: weburl.txt Injector: Converting injected urls to crawl db entries. Injector: Merging injected urls into crawl db. Injector: done Generator: Selecting best-scoring urls due for fetch. Generator: starting Generator: segment: Local/segments/20121022110809 Generator: filtering: false Generator: topN: 100 Generator: jobtracker is 'local', generating exactly one partition. Generator: Partitioning selected urls by host, for politeness. Generator: done. Fetcher: starting Fetcher: segment: Local/segments/20121022110809 Fetcher: threads: 5 fetching http://www.buct.edu.cn/ Fetcher: done CrawlDb update: starting CrawlDb update: db: Local/crawldb CrawlDb update: segments: [Local/segments/20121022110809] CrawlDb update: additions allowed: true CrawlDb update: URL normalizing: true CrawlDb update: URL filtering: true CrawlDb update: Merging segment data into db. CrawlDb update: done Generator: Selecting best-scoring urls due for fetch. Generator: starting Generator: segment: Local/segments/20121022110815 Generator: filtering: false Generator: topN: 100 Generator: jobtracker is 'local', generating exactly one partition. Generator: Partitioning selected urls by host, for politeness. Generator: done. Fetcher: starting Fetcher: segment: Local/segments/20121022110815 Fetcher: threads: 5 fetching http://www.buct.edu.cn/kxyj/index.htm fetching http://www.buct.edu.cn/zjbh/ldjt/index.htm fetching http://www.buct.edu.cn/bhxy/index.htm fetching http://www.buct.edu.cn/zjbh/xycf/index.htm fetching http://www.buct.edu.cn/zjbh/lrld/index.htm fetching http://www.buct.edu.cn/xywh/index.htm fetching http://www.buct.edu.cn/tjtp/25314.htm fetching http://www.buct.edu.cn/xysz/index.htm fetching http://www.buct.edu.cn/zsjy/index.htm fetching http://www.buct.edu.cn/kslj/23583.htm fetching http://www.buct.edu.cn/js/jquery.flexslider-min.js fetching http://www.buct.edu.cn/rcpy/index.htm ............

出现上述信息即表示nutch的抓取模块已架设成功。

备注:

nutch架设 常见错误:

1.1.1 Crawl抓取出现hadoop出错提示

配置完成nutch在cygwin中运行nutch的crawl命令时:

[Fatal Error] hadoop-site.xml:15:7: The content of elements must consist of well

-formed character data or markup.

Exception in thread "main" java.lang.RuntimeException: org.xml.sax.SAXParseExcep

tion: The content of elements must consist of well-formed character data or mark

up.

问题解决:

hadoop-site.xml、hadoop-site.xml:其中一个标签</property>前面多了一个尖括号

1.1.2 运行crawl报错Job failed

Exception in thread "main" java.io.IOException: Job failed!

at org.apache.hadoop.mapred.JobClient.runJob(JobClient.java:604)

at org.apache.nutch.indexer.DeleteDuplicates.dedup(DeleteDuplicates.java

:439)

at org.apache.nutch.crawl.Crawl.main(Crawl.java:135)

问题解决:

此多为crawl-urlfilter.txt:MY.DOMAIN.NAME的修改不正确

1.1.3 又一个Job failed

Exception in thread "main" java.io.IOException: Job failed!

at org.apache.hadoop.mapred.JobClient.runJob(JobClient.java:604)

at org.apache.nutch.indexer.DeleteDuplicates.dedup(DeleteDuplicates.java

:439)

at org.apache.nutch.crawl.Crawl.main(Crawl.java:135)

问题解决:

多为crawl-urlfilter.txt的MY.DOMAIN.NAME修改不正确

1.1.4 Eclipse中运行nutch:Job failed

Exception in thread "main" java.io.IOException: Job failed!

at org.apache.hadoop.mapred.JobClient.runJob(JobClient.java:604)

at org.apache.nutch.crawl.Injector.inject(Injector.java:162)

at org.apache.nutch.crawl.Crawl.main(Crawl.java:115)

问题解决:

此问题是eclipse的java版本设置问题,解决方法:

如原来使用java1.4,需要改为1.6

project-》properties-》java compiler

右 jdk compliance

compiler compliance level:改为6.0

1.1.5当修改配置文件指定索引库.( nutch-site.xml)

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>http.agent.name</name>

<value>HD nutch agent</value>

</property>

<property>

<name>http.agent.version</name>

<value>1.0</value>

</property>

<property>

<name>urlfilter.order</name>

<value>org.apache.nutch.urlfilter.regex.RegexURLFilter</value>

<description>The order by which url filters are applied.

If empty, all available url filters (as dictated by properties

plugin-includes and plugin-excludes above) are loaded and applied in system

defined order. If not empty, only named filters are loaded and applied

in given order. For example, if this property has value:

org.apache.nutch.urlfilter.regex.RegexURLFilter org.apache.nutch.urlfilter.prefix.PrefixURLFilter

then RegexURLFilter is applied first, and PrefixURLFilter second.

Since all filters are AND'ed, filter ordering does not have impact

on end result, but it may have performance implication, depending

on relative expensiveness of filters.

</description>

</property>

</configuration>

复制上述配置文件时,如果出现下列错误,是因为复制文件时带有空格或编码格式,重敲一遍即可: java.io.UTFDataFormatException: Invalid byte 1 of 1-byte UTF-8 sequence

总的来说常见错误多见于配置文件配置不当……

参考文章:

http://www.ahathinking.com/archives/140.html

http://hi.baidu.com/orminknckdbkntq/item/020a8bc6e4d275000bd93a2d