Microsoft Windows Internals, 4th Edition -- Chapter 7: Memory Management

Contents

Terms:

Introduction to the Memory Manager

Two primary tasks:

- Translating, or mapping, a process’s virtual address space into physical memory so that when a thread running in the context of that process reads or writes to the virtual address space, the correct physical address is referenced

- Paging some of the contents of memory to disk when it becomes overcommitted and bringing the contents back into physical memory when needed.

In addition to providing virtual memory management, the memory manager provides a core set of services on which the various Windows environment subsystems are built:

- memory-mapped files (internally called section objects)

- copy-on-write memory

- support for applications using large, sparse address spaces

- a way for a process to allocate and use larger amounts of physical memory than can be mapped into the process virtual address space

Working set: the subset of a process’s virtual address space that is physically resident.

Overcommitted: the state in which running threads or system code try to use more physical memory than is currently available.

Memory Manager Components

Position:

- The memory manager is part of the Windows executive and therefore exists in the file Ntoskrnl.exe.

- No parts of the memory manager exist in the HAL.

The memory manager consists of the following components:

- A set of executive system services for allocating, deallocating, and managing virtual memory (most are exposed through the Windows API or kernel-mode device driver interfaces)

- A translation-not-valid and access fault trap handler

- Several key components that run in the context of six different kernel-mode system threads

- The working set manager (priority 16) -- invoked by the balance set manager once per second as well as when free memory falls below a certain threshold.

- The process/stack swapper (priority 23) -- performs both process and kernel thread stack inswapping and outswapping. It is invoked when an inswap or outswap operation needs to take place.

- The modified page writer (priority 17) -- writes dirty pages on the modified list back to the appropriate paging files. This thread is awakened when the size of the modified list needs to be reduced.

- The mapped page writer (priority 17) -- writes dirty pages in mapped files to disk. It is a second modified page writer thread, needed because writing mapped pages can generate page faults that result in requests for free pages; with only one writer thread, the system could deadlock waiting for free pages.

- The dereference segment thread (priority 18) -- responsible for cache reduction as well as page file growth and shrinkage.

- The zero page thread (priority 0) -- zeroes out pages on the free list so that a cache of zero pages is available to satisfy future demand-zero page faults.

Internal Synchronization

The memory manager synchronizes access to the following systemwide resources:

- the page frame number (PFN) database (controlled by a spinlock)

- section objects

- the system working set (controlled by pushlocks)

- page file creation (controlled by a mutex).

In Windows XP and Windows Server 2003, a number of these locks, including several spinlocks, were either removed completely or optimized.

Some lock operations were reduced to improve parallelism.

Configuring the Memory Manager

- Registry key: HKLM\SYSTEM\CurrentControlSet\Control\Session Manager\Memory Management

- Many of the thresholds and limits that control memory manager policy decisions are computed at system boot time on the basis of memory size and product type. These values are stored in various kernel variables and later used by the memory manager.

- Windows 2000 Professional and Windows XP Professional and Home editions are optimized for desktop interactive use.

- Windows Server systems are optimized for running server applications.

Services the Memory Manager Provides

system services such as:

- allocate and free virtual memory

- share memory between processes

- map files into memory

- flush virtual pages to disk

- retrieve information about a range of virtual pages

- change the protection of virtual pages

- lock the virtual pages into memory

- Most of these services are exposed through the Windows API, separated into three groups:

- page granularity virtual memory functions (Virtualxxx)

- memory-mapped file functions (CreateFileMapping, MapViewOfFile)

- heap functions (Heapxxx and the older interfaces Localxxx and Globalxxx)

- The memory manager also provides a number of services to other kernel-mode components inside the executive and to device drivers:

- functions that begin with the prefix Mm

- executive support routines that begin with Ex, which allocate and deallocate from the system heaps (paged and nonpaged pool)

If a process creates a child process, by default it has the right to manipulate the child process’s virtual memory

Large and Small Pages

Page:

- The virtual address space is divided into units called pages.

- That is because the hardware memory management unit translates virtual to physical addresses at the granularity of a page.

- Hence, a page is the smallest unit of protection at the hardware level.
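
Page granularity can be made concrete with a small sketch that splits a virtual address into a page number and a byte offset. It assumes a 4-KB small page (the x86 small page size); `PAGE_SIZE` and `split_address` are illustrative names, not Windows APIs.

```python
PAGE_SIZE = 4096  # assumption: 4-KB small pages, as on x86


def split_address(virtual_address, page_size=PAGE_SIZE):
    """Return (virtual page number, byte offset within that page)."""
    return divmod(virtual_address, page_size)


# Two addresses in the same page share one translation and one protection.
page_a, offset_a = split_address(0x00403A10)
page_b, offset_b = split_address(0x00403FFF)
print(hex(page_a), hex(offset_a))  # 0x403 0xa10
print(page_a == page_b)            # True
```

Only the page number takes part in translation and protection; the offset is carried through unchanged.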

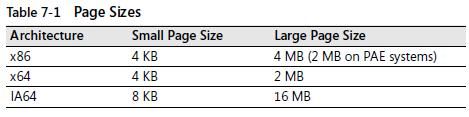

There are two page sizes. The actual sizes vary based on hardware architecture.

- Small

- Large

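- The advantage of large pages is the speed of address translation for references to other data within the same large page.

- The first reference to any byte within a large page causes the translation look-aside buffer (TLB) to cache the information needed to translate references to any other byte within that page.

- One side effect of large pages is that the whole page carries a single protection. If both read-only code and read/write data reside in the page, it must be marked read/write, so a bug can corrupt the supposedly read-only code without an access violation; the system then typically crashes some time later rather than immediately at the offending write.

- Additional device drivers can be mapped with large pages by listing them under the registry value HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control\Session Manager\Memory Management\LargePageDrivers.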

To take advantage of large pages, on systems considered to have enough memory, Windows maps the following contents with large pages:

- core operating system images (Ntoskrnl.exe and Hal.dll)

- core operating system data (such as the initial part of nonpaged pool and the data structures that describe the state of each physical memory page)

- I/O space requests of satisfactory large-page length and alignment

- Windows also allows applications to map their images, private memory, and pagefile-backed sections with large pages

Reserving and Committing Pages

- Pages in a process address space are free, reserved, or committed.

- Applications can first reserve address space and then commit pages in that address space. Or they can reserve and commit in the same function call

- Reserved address space is simply a way for a thread to reserve a range of virtual addresses for future use. Any access to reserved memory results in an access violation because the page isn’t mapped to any storage that can resolve the reference.

- Committed pages are pages that, when accessed, ultimately translate to valid pages in physical memory.

- Private committed pages that have never been accessed are created at first access as zero-initialized pages (demand zero).

- Private pages are inaccessible to any other process unless they’re accessed using cross-process memory functions, such as ReadProcessMemory or WriteProcessMemory.

- Using the two-step process of reserving and committing memory can reduce memory usage by deferring committing pages until needed but keeping the convenience of virtual contiguity.

- Reserving and then committing memory is useful for applications that need a potentially large virtually contiguous memory buffer; rather than committing pages for the entire region, the address space can be reserved and then committed later when needed. One use of this technique in the operating system is the user-mode stack for each thread.
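
The free, reserved, and committed states can be sketched as a toy model. This is not the Windows API: `AddressSpace`, `AccessViolation`, and the 4-KB page size are assumptions made for illustration, and the model requires a page to be reserved before it is committed, whereas the real interface can do both in one call.

```python
PAGE_SIZE = 4096  # assumption: 4-KB pages


class AccessViolation(Exception):
    """Raised when a page that is not committed is touched."""


class AddressSpace:
    """Toy model: every page is free, reserved, or committed."""

    def __init__(self):
        self.state = {}    # page number -> "reserved" or "committed"; absent = free
        self.storage = {}  # page number -> bytearray, created on first access

    def reserve(self, first_page, count):
        pages = range(first_page, first_page + count)
        if any(page in self.state for page in pages):
            raise ValueError("range overlaps pages that are not free")
        for page in pages:
            self.state[page] = "reserved"

    def commit(self, first_page, count):
        pages = range(first_page, first_page + count)
        if any(page not in self.state for page in pages):
            raise ValueError("only reserved pages can be committed in this model")
        for page in pages:
            self.state[page] = "committed"

    def read(self, page, offset):
        if self.state.get(page) != "committed":
            raise AccessViolation(f"page {page:#x} is not committed")
        if page not in self.storage:
            # Demand zero: the zero-filled page materializes on first access.
            self.storage[page] = bytearray(PAGE_SIZE)
        return self.storage[page][offset]


space = AddressSpace()
space.reserve(first_page=0x100, count=256)  # 1 MB of contiguous addresses
space.commit(first_page=0x100, count=1)     # back only the first page
print(space.read(0x100, 0))                 # committed page reads as zero
```

The region stays virtually contiguous while only one page consumes storage, which is the saving the two-step approach is meant to provide.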

Locking Memory

- In general, it’s better to let the memory manager decide which pages remain in physical memory

- In special circumstances, locking memory is necessary. Pages can be locked in two ways:

- Windows applications can call the VirtualLock function to lock pages in their process working set

- Device drivers can call the kernel-mode functions MmProbeAndLockPages, MmLockPagableCodeSection, MmLockPagableDataSection, or MmLockPagableSectionByHandle

Allocation Granularity

- Windows aligns each region of reserved process address space to begin on an integral boundary defined by the value of the system allocation granularity, which can be retrieved from the Windows GetSystemInfo function. Currently, this value is 64 KB

- Finally, when a region of address space is reserved, Windows ensures that the size and base of the region is a multiple of the system page size
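
The two alignment rules can be illustrated with a little arithmetic. The sketch below is one plausible reading of the rules above, not the memory manager's actual code: it rounds the requested base down to the 64-KB allocation granularity and rounds the end of the region up to a page boundary. The 4-KB page size and the function name `align_reservation` are assumptions.

```python
ALLOCATION_GRANULARITY = 64 * 1024  # value cited above for GetSystemInfo
PAGE_SIZE = 4096                    # assumption: 4-KB small pages


def align_reservation(requested_base, requested_size):
    """Return (aligned base, aligned size) for a reservation request."""
    # Round the base down to a 64-KB boundary.
    base = requested_base - (requested_base % ALLOCATION_GRANULARITY)
    # Round the end up so the region covers whole pages.
    end = requested_base + requested_size
    end = -(-end // PAGE_SIZE) * PAGE_SIZE
    return base, end - base


base, size = align_reservation(0x12345, 10000)
print(hex(base), hex(size))  # 0x10000 0x5000
```

The aligned region always contains the requested range, so a caller that asked for an unaligned address still gets every byte it requested.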

Shared Memory and Mapped Files

- memory manager uses section objects (called file mapping objects in the Windows API) to implement shared memory

- A section object can be connected to an open file on disk (called a mapped file) or to committed memory (to provide shared memory)

- Sections mapped to committed memory are called "page file backed sections" because the pages are written to the paging file if memory demands dictate

- A section object can refer to files that are much larger than can fit in the address space of a process; the process then maps only a part of the file (a view of the section).

Protecting Memory

Windows provides memory protection in four primary ways:

- All systemwide data structures and memory pools used by kernel-mode system components can be accessed only while in kernel mode.

- Each process has a separate, private address space, protected from being accessed by any thread belonging to another process. There are two exceptions: the process decides to share pages with other processes, or another process has virtual memory read or write access to the process object and thus can use the ReadProcessMemory or WriteProcessMemory functions.

- Each time a thread references an address, the virtual memory hardware, in concert with the memory manager, intervenes and translates the virtual address into a physical one.

- By controlling how virtual addresses are translated, Windows can ensure that threads running in one process don’t inappropriately access a page belonging to another process.

- All processors supported by Windows provide some form of hardware-controlled memory protection (read/write, read-only, and so on).

- Shared memory section objects have standard Windows access-control lists (ACLs) that are checked when processes attempt to open them, thus limiting access to shared memory to those processes with the proper rights.

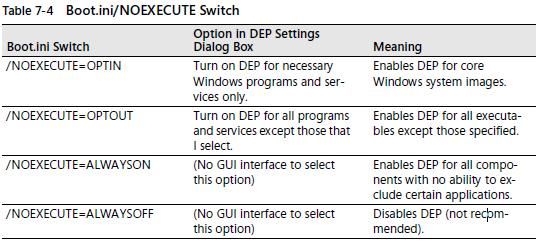

No Execute Page Protection

- No execute page protection (also referred to as data execution prevention, or DEP) means an attempt to transfer control to an instruction in a page marked as “no execute” will generate an access fault

- On 64-bit versions of Windows, execution protection is always applied to all 64-bit programs and device drivers and cannot be disabled.

- On 64-bit Windows, execution protection is applied to thread stacks (both user and kernel mode), user-mode pages not specifically marked as executable, kernel paged pool, and kernel session pool.

- The application of execution protection for 32-bit programs depends on the Boot.ini /NOEXECUTE= switch.

- On 32-bit Windows, execution protection is applied only to thread stacks and user-mode pages, not to paged pool and session pool.

- When execution protection is enabled on 32-bit Windows, the system automatically boots in PAE mode.

- The settings can be changed on the Data Execution Prevention tab under My Computer, Properties, Advanced, Performance Settings.

- Applications that are excluded from execution protection are listed as registry values under the key HKLM\Software\Microsoft\Windows NT\CurrentVersion\AppCompatFlags\Layers.

Copy-on-Write

- Copy-on-write page protection is an optimization the memory manager uses to conserve physical memory.

- One application of copy-on-write is to implement breakpoint support in debuggers.

- By default, code pages start out as execute-only.

- If a programmer sets a breakpoint while debugging a program, however, the debugger must add a breakpoint instruction to the code.

- It does this by first changing the protection on the page to PAGE_EXECUTE_READWRITE and then changing the instruction stream.

- Because the code page is part of a mapped section, the memory manager creates a private copy for the process with the breakpoint set, while other processes continue using the unmodified code page.

- Copy-on-write is one example of an evaluation technique known as lazy evaluation that the memory manager uses as often as possible.

- Lazy-evaluation algorithms avoid performing an expensive operation until absolutely required; if the operation is never required, no time is wasted on it.

- The POSIX subsystem takes advantage of copy-on-write to implement the fork function

- Instead of copying the entire address space on fork, the new process shares the pages in the parent process by marking them copy-on-write

- If the child writes to the data, a process-private copy is made. If not, the two processes continue sharing and no copying takes place.
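
The fork case can be sketched with a toy model of shared pages. The classes `Page` and `Process` and the reference-count bookkeeping are hypothetical stand-ins chosen for illustration; the real memory manager tracks sharing through page table entries rather than Python objects.

```python
class Page:
    """A physical page plus a count of address spaces that map it."""

    def __init__(self, data):
        self.data = bytearray(data)
        self.refs = 1


class Process:
    def __init__(self, pages):
        self.pages = pages  # list of Page objects, indexed by page number

    def fork(self):
        # Share every page instead of copying the address space.
        for page in self.pages:
            page.refs += 1
        return Process(list(self.pages))

    def read(self, index, offset):
        return self.pages[index].data[offset]

    def write(self, index, offset, value):
        page = self.pages[index]
        if page.refs > 1:
            # First write to a shared page: make a private copy, then write.
            page.refs -= 1
            page = Page(page.data)
            self.pages[index] = page
        page.data[offset] = value


parent = Process([Page(bytes(16)), Page(bytes(16))])
child = parent.fork()
child.write(0, 0, 7)

print(parent.read(0, 0), child.read(0, 0))  # 0 7
print(child.pages[1] is parent.pages[1])    # True: untouched page still shared
```

Only the page that was written is duplicated; the copy cost is paid lazily and per page, which is why the technique counts as lazy evaluation.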

Heap Manager

- 64 KB is the minimum allocation granularity possible using page granularity functions such as VirtualAlloc.

- Many applications allocate blocks smaller than 64 KB.

- To address this need, Windows provides a component called the heap manager, which manages allocations inside larger memory areas reserved using the page granularity memory allocation functions

- The allocation granularity in the heap manager is relatively small: 8 bytes on 32-bit systems and 16 bytes on 64-bit systems

- The heap manager exists in two places: Ntdll.dll and Ntoskrnl.exe

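- The most common Windows heap functions:

- HeapCreate or HeapDestroy: creates or deletes a heap

- HeapAlloc: allocates a heap block

- HeapFree: frees a block previously allocated with HeapAlloc

- HeapReAlloc: changes the size of an existing allocation

- HeapLock or HeapUnlock: controls mutual exclusion to the heap operations

- HeapWalk: enumerates the entries and regions in a heap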
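
The difference in granularity explains why small allocations go through the heap manager. The sketch below only rounds a request up to each granularity quoted above; it ignores per-block headers and other bookkeeping, and `round_up` is an illustrative helper rather than a Windows function.

```python
def round_up(size, granularity):
    """Round size up to the next multiple of granularity."""
    return -(-size // granularity) * granularity


request = 100  # bytes the application actually needs

print(round_up(request, 64 * 1024))  # 65536: address space used at page granularity
print(round_up(request, 8))          # 104: heap block on a 32-bit system
print(round_up(request, 16))         # 112: heap block on a 64-bit system
```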

Types of Heaps

- default process heap

- The default heap is created at process startup and is never deleted during the process’s lifetime.

- It defaults to 1 MB in size, but it can be bigger by specifying a starting size in the image file by using the /HEAP linker flag.

- additional private heaps

- Processes can also create additional private heaps with the HeapCreate function

- When a process no longer needs a private heap, it can recover the virtual address space by calling HeapDestroy

Heap Manager Structure

The heap manager is structured in two layers:

- an optional front-end layer

- For user mode heaps only

- two types of front-end layers: look-aside lists and the Low Fragmentation Heap (LFH)

- Only one front-end layer can be used for one heap at one time

- the core heap

Heap Synchronization

- The heap manager supports concurrent access from multiple threads by default.

- Synchronization can be disabled by specifying HEAP_NO_SERIALIZE either at heap creation or on a per-allocation basis.

- Disabling it is appropriate if a process is single threaded or uses an external mechanism for synchronization, because it avoids the overhead of synchronization.

- If heap synchronization is enabled, there is one lock per heap that protects all internal heap structures.

- A process can also lock the entire heap and prevent other threads from performing heap operations

Look-Aside Lists

- Look-aside lists are singly linked lists that allow elementary operations such as “push to the list” or “pop from the list” in last in, first out (LIFO) order with nonblocking algorithms.

- There are 128 look-aside lists per heap.

- Look-aside lists provide a significant performance improvement over normal heap allocations because they avoid acquiring the heap global lock.

- The heap manager creates look-aside lists automatically when a heap is created
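
The LIFO behavior can be sketched as a per-size cache in front of a slower allocator. This is a single-threaded illustration only: it does not reproduce the nonblocking algorithms, and the mapping of one list per 8-byte size class, the bump-pointer "core heap", and the class name `LookAsideHeap` are all assumptions.

```python
class LookAsideHeap:
    LIST_COUNT = 128   # number of look-aside lists per heap, as stated above
    GRANULARITY = 8    # assumption: one list per 8-byte size class

    def __init__(self):
        self.lists = [[] for _ in range(self.LIST_COUNT)]
        self.next_address = 0x1000  # hypothetical stand-in for the core heap
        self.core_heap_calls = 0

    def _index(self, size):
        return (size + self.GRANULARITY - 1) // self.GRANULARITY - 1

    def alloc(self, size):
        index = self._index(size)
        if 0 <= index < self.LIST_COUNT and self.lists[index]:
            return self.lists[index].pop()  # LIFO: most recently freed block
        # Miss: fall back to the (slower, locked) core heap.
        self.core_heap_calls += 1
        address = self.next_address
        self.next_address += (index + 1) * self.GRANULARITY
        return address

    def free(self, address, size):
        index = self._index(size)
        if 0 <= index < self.LIST_COUNT:
            self.lists[index].append(address)  # push for quick reuse


heap = LookAsideHeap()
a = heap.alloc(24)
b = heap.alloc(24)
heap.free(a, 24)
heap.free(b, 24)
print(heap.alloc(24) == b)   # True: last freed, first reused
print(heap.core_heap_calls)  # 2: the reuse did not touch the core heap
```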

The Low Fragmentation Heap

The LFH avoids fragmentation by managing all allocated blocks in 128 predetermined different block-size ranges. Each of the 128 size ranges is called a bucket. When an application needs to allocate memory from the heap, the LFH chooses the bucket that can allocate the smallest block large enough to contain the requested size. The smallest block that can be allocated is 8 bytes.

| Buckets | Granularity (bytes) | Size range (bytes) |
|---|---|---|
| 1-32 | 8 | 1-256 |
| 33-48 | 16 | 257-512 |
| 49-64 | 32 | 513-1024 |
| 65-80 | 64 | 1025-2048 |
| 81-96 | 128 | 2049-4096 |
| 97-112 | 256 | 4097-8192 |
| 113-128 | 512 | 8193-16384 |
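
Bucket selection follows directly from the table above. The sketch below derives the bucket number and block size for a request purely from the table rows; `LFH_RANGES` and `lfh_bucket` are illustrative names, and returning `None` for sizes beyond the last row is a modeling choice, not a statement about what the heap manager does with them.

```python
# One tuple per table row: (first bucket, granularity, largest size in range).
LFH_RANGES = [
    (1, 8, 256),
    (33, 16, 512),
    (49, 32, 1024),
    (65, 64, 2048),
    (81, 128, 4096),
    (97, 256, 8192),
    (113, 512, 16384),
]


def lfh_bucket(size):
    """Return (bucket number, block size) for a request, or None if too large."""
    if size < 1:
        raise ValueError("size must be at least 1 byte")
    lower = 0  # largest size covered by the previous row
    for first_bucket, granularity, upper in LFH_RANGES:
        if size <= upper:
            steps = (size - lower - 1) // granularity
            block_size = lower + (steps + 1) * granularity
            return first_bucket + steps, block_size
        lower = upper
    return None


for request in (1, 9, 256, 257, 300, 16384):
    print(request, lfh_bucket(request))
```

For example, a 300-byte request lands in bucket 35 with a 304-byte block, so at most 15 bytes are lost to rounding in that range.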

Heap Debugging Features

The heap manager includes several features to help detect bugs:

- Enable tail checking

- Enable free checking

- Parameter checking

- Heap validation

- Heap tagging

Pageheap

- A heap debugging tool

- The pageheap places allocations at the end of pages so that if a buffer overrun occurs, it will cause an access violation, making it easier to detect the offending code
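
The placement rule reduces to simple address arithmetic. The sketch assumes 4-KB pages and an inaccessible page immediately after the allocation; it ignores any alignment padding a real implementation may add, and `place_at_page_end` is a hypothetical helper.

```python
PAGE_SIZE = 4096  # assumption: 4-KB pages


def place_at_page_end(region_base, size):
    """Return (block start, guard page start) for a page-aligned region."""
    pages_needed = -(-size // PAGE_SIZE)  # ceiling division
    guard_page = region_base + pages_needed * PAGE_SIZE
    # The block's last byte sits directly before the guard page.
    return guard_page - size, guard_page


start, guard = place_at_page_end(0x10000, 100)
print(hex(start), hex(guard))  # 0x10f9c 0x11000
print(start + 100 == guard)    # True: one byte past the block hits the guard
```

Because the first byte written past the block falls on the guard page, the overrun faults at the offending instruction instead of silently corrupting a neighboring block.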

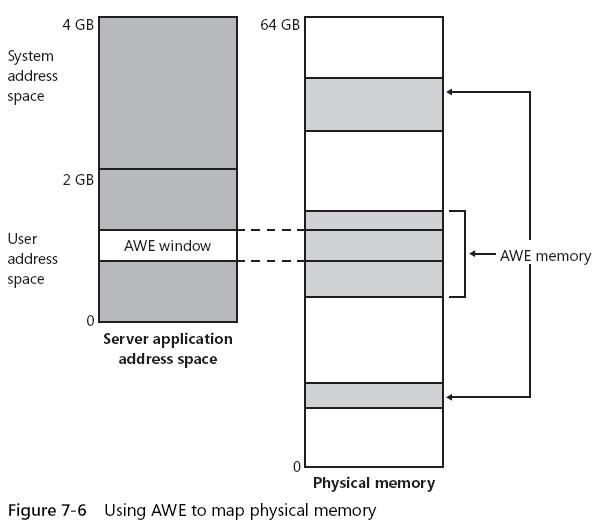

Address Windowing Extensions

- Although the 32-bit version of Windows can support up to 128 GB of physical memory, each 32-bit user process has by default only a 2-GB virtual address space. (This can be configured up to 3 GB by using the /3GB and /USERVA Boot.ini switches.)

- Address Windowing Extensions (AWE), a set of functions, allows a 32-bit process to allocate and access more physical memory than can be represented in its limited address space.

- AWE vs. PAE

System Memory Pools

At system initialization, the memory manager creates two types of dynamically sized memory pools that the kernel-mode components use to allocate system memory:

- Nonpaged pool --Consists of ranges of system virtual addresses that are guaranteed to reside in physical memory at all times and thus can be accessed at any time (from any IRQL level and from any process context) without incurring a page fault.

- One reason nonpaged pool is required is that page faults can’t be satisfied at DPC/dispatch level or above.

- Paged pool --A region of virtual memory in system space that can be paged in and out of the system.

- Device drivers that don’t need to access the memory from DPC/dispatch level or above can use paged pool.

Both memory pools are located in the system part of the address space and are mapped in the virtual address space of every process

Uniprocessor systems have three paged pools; multiprocessor systems have five. Having more than one paged pool reduces the frequency of system code blocking on simultaneous calls to pool routines.

Configuring Pool Sizes

Registry values NonPagedPoolSize and PagedPoolSize under HKLM\SYSTEM\CurrentControlSet\Control\Session Manager\Memory Management

Monitoring Pool Usage