Debugging an Icehouse resize error (Host key verification failed)

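Thanks to everyone who supports this blog, and discussion is always welcome. My ability and time are limited, so mistakes are inevitable. Corrections are appreciated!

If you repost this article, please keep the original author's blog information.

If you would like to discuss anything, feel free to leave a comment on the blog.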

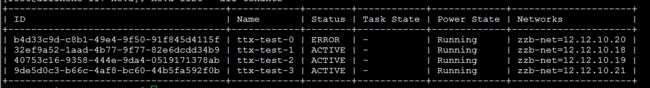

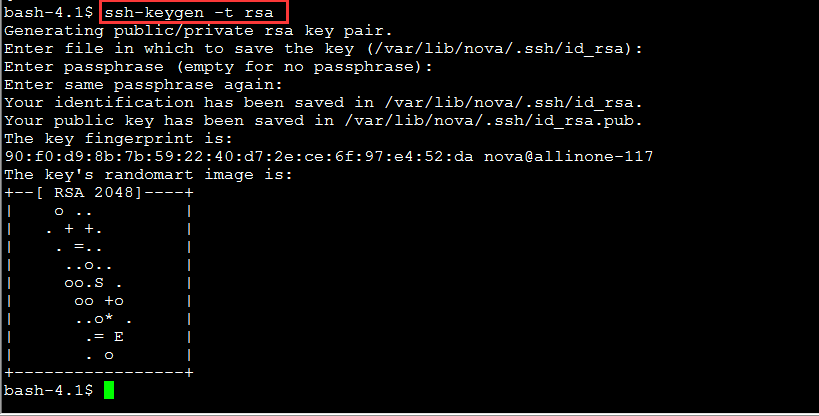

On 117, which serves as both a controller node and a compute node:

nova --debug resize fefe2ba2-69dc-46dc-b337-da2788d94d494

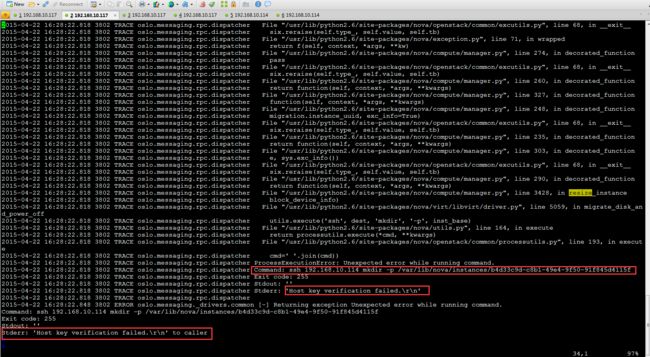

The compute log on 117 shows the error:

vim /var/log/nova/compute.log

```text
[instance: b4d33c9d-c8b1-49e4-9f50-91f845d4115f] Setting instance vm_state to ERROR
2015-04-22 16:28:22.435 3802 TRACE nova.compute.manager [instance: b4d33c9d-c8b1-49e4-9f50-91f845d4115f] Traceback (most recent call last):
2015-04-22 16:28:22.435 3802 TRACE nova.compute.manager [instance: b4d33c9d-c8b1-49e4-9f50-91f845d4115f] File "/usr/lib/python2.6/site-packages/nova/compute/manager.py", line 5531, in _error_out_instance_on_exception
2015-04-22 16:28:22.435 3802 TRACE nova.compute.manager [instance: b4d33c9d-c8b1-49e4-9f50-91f845d4115f] yield
2015-04-22 16:28:22.435 3802 TRACE nova.compute.manager [instance: b4d33c9d-c8b1-49e4-9f50-91f845d4115f] File "/usr/lib/python2.6/site-packages/nova/compute/manager.py", line 3428, in resize_instance
2015-04-22 16:28:22.435 3802 TRACE nova.compute.manager [instance: b4d33c9d-c8b1-49e4-9f50-91f845d4115f] block_device_info)
2015-04-22 16:28:22.435 3802 TRACE nova.compute.manager [instance: b4d33c9d-c8b1-49e4-9f50-91f845d4115f] File "/usr/lib/python2.6/site-packages/nova/virt/libvirt/driver.py", line 5059, in migrate_disk_and_power_off
2015-04-22 16:28:22.435 3802 TRACE nova.compute.manager [instance: b4d33c9d-c8b1-49e4-9f50-91f845d4115f] utils.execute('ssh', dest, 'mkdir', '-p', inst_base)    # this command requires passwordless SSH login
2015-04-22 16:28:22.435 3802 TRACE nova.compute.manager [instance: b4d33c9d-c8b1-49e4-9f50-91f845d4115f] File "/usr/lib/python2.6/site-packages/nova/utils.py", line 164, in execute
2015-04-22 16:28:22.435 3802 TRACE nova.compute.manager [instance: b4d33c9d-c8b1-49e4-9f50-91f845d4115f] return processutils.execute(*cmd, **kwargs)
2015-04-22 16:28:22.435 3802 TRACE nova.compute.manager [instance: b4d33c9d-c8b1-49e4-9f50-91f845d4115f] File "/usr/lib/python2.6/site-packages/nova/openstack/common/processutils.py", line 193, in execute
2015-04-22 16:28:22.435 3802 TRACE nova.compute.manager [instance: b4d33c9d-c8b1-49e4-9f50-91f845d4115f] cmd=' '.join(cmd))
2015-04-22 16:28:22.435 3802 TRACE nova.compute.manager [instance: b4d33c9d-c8b1-49e4-9f50-91f845d4115f] ProcessExecutionError: Unexpected error while running command.
2015-04-22 16:28:22.435 3802 TRACE nova.compute.manager [instance: b4d33c9d-c8b1-49e4-9f50-91f845d4115f] Command: ssh 192.168.10.114 mkdir -p /var/lib/nova/instances/b4d33c9d-c8b1-49e4-9f50-91f845d4115f
2015-04-22 16:28:22.435 3802 TRACE nova.compute.manager [instance: b4d33c9d-c8b1-49e4-9f50-91f845d4115f] Exit code: 255
2015-04-22 16:28:22.435 3802 TRACE nova.compute.manager [instance: b4d33c9d-c8b1-49e4-9f50-91f845d4115f] Stdout: ''
2015-04-22 16:28:22.435 3802 TRACE nova.compute.manager [instance: b4d33c9d-c8b1-49e4-9f50-91f845d4115f] Stderr: 'Host key verification failed.\r\n'
2015-04-22 16:28:22.435 3802 TRACE nova.compute.manager [instance: b4d33c9d-c8b1-49e4-9f50-91f845d4115f]
2015-04-22 16:28:22.818 3802 ERROR oslo.messaging.rpc.dispatcher [-] Exception during message handling: Unexpected error while running command.
Command: ssh 192.168.10.114 mkdir -p /var/lib/nova/instances/b4d33c9d-c8b1-49e4-9f50-91f845d4115f
Exit code: 255
Stdout: ''
Stderr: 'Host key verification failed.\r\n'
2015-04-22 16:28:22.818 3802 TRACE oslo.messaging.rpc.dispatcher Traceback (most recent call last):
```

The error above shows that, on 117, the following command fails when run as the nova user:

ssh 192.168.10.114 mkdir -p /var/lib/nova/instances/b4d33c9d-c8b1-49e4-9f50-91f845d4115f
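Nova runs this command with no terminal attached, so ssh cannot ask whether to trust an unknown host key and aborts with `Host key verification failed.` (exit code 255). One way to reproduce exactly what nova sees is to run the same command as the nova user with OpenSSH's `BatchMode=yes` option, which turns off all interactive prompts. The minimal Python sketch below assumes Python 3.5+ on the machine you test from (nova itself runs on Python 2.6 here); the helper names `nova_style_ssh_cmd` and `check` are hypothetical.

```python
import subprocess


def nova_style_ssh_cmd(host, inst_base):
    """Build the same ssh call nova makes, but force non-interactive mode.

    BatchMode=yes makes ssh fail instead of prompting for a password or for
    confirmation of an unknown host key, which mirrors how nova runs it.
    """
    return ["ssh", "-o", "BatchMode=yes", host, "mkdir", "-p", inst_base]


def check(host, inst_base):
    """Run the command and return (exit_code, stderr_text)."""
    proc = subprocess.run(
        nova_style_ssh_cmd(host, inst_base),
        stdout=subprocess.PIPE,
        stderr=subprocess.PIPE,
        universal_newlines=True,
    )
    return proc.returncode, proc.stderr
```

Run it as nova (for example through `sudo -u nova python3`) with `check("192.168.10.114", "/var/lib/nova/instances/test")`; a result of 255 with `Host key verification failed.` in stderr means the environment still matches the failing case in the log.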

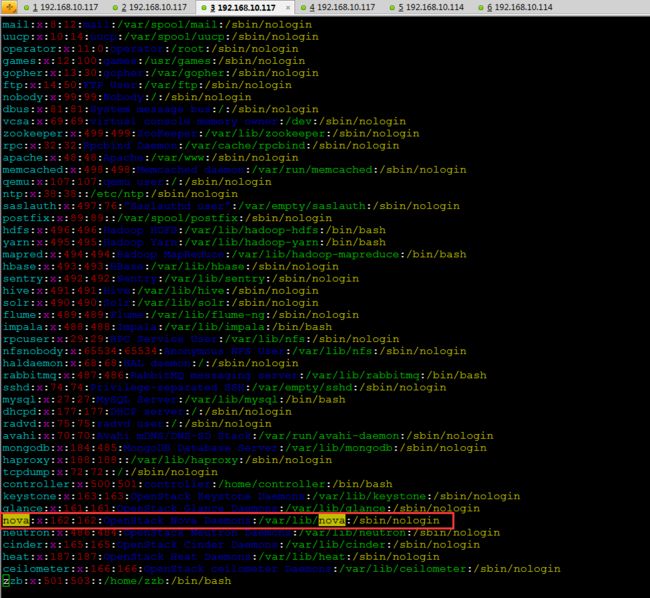

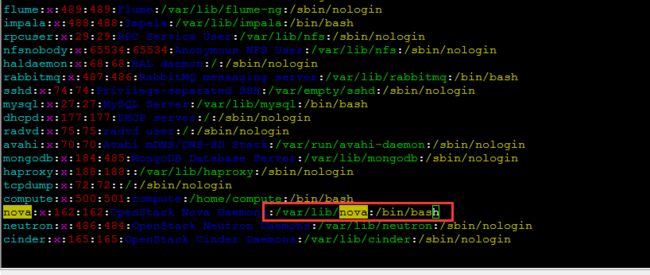

Look at the user database on 117:

vim /etc/passwd

It contains:

```text
nova:x:162:162:OpenStack Nova Daemons:/var/lib/nova:/sbin/nologin
```

For the meaning of each field, see the Linux passwd documentation (passwd(5)).
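In short, the seven colon-separated fields are: user name, password placeholder, UID, GID, comment, home directory and login shell. The last field is what breaks resize here: ssh runs remote commands through the user's login shell, and `/sbin/nologin` refuses to run them. A small Python sketch that splits the line and flags a non-login shell; the set of "nologin" shells is an assumption and may differ between distributions.

```python
# Fields of an /etc/passwd entry, in order (see passwd(5)).
PASSWD_FIELDS = ("name", "password", "uid", "gid", "gecos", "home", "shell")

# Common shells that refuse logins; adjust for your distribution.
NOLOGIN_SHELLS = {"/sbin/nologin", "/usr/sbin/nologin", "/bin/false"}


def parse_passwd_line(line):
    """Split one /etc/passwd line into a dict keyed by field name."""
    parts = line.rstrip("\n").split(":")
    if len(parts) != len(PASSWD_FIELDS):
        raise ValueError("expected 7 colon-separated fields, got %d" % len(parts))
    entry = dict(zip(PASSWD_FIELDS, parts))
    entry["uid"] = int(entry["uid"])
    entry["gid"] = int(entry["gid"])
    return entry


def can_login(entry):
    """True if the login shell will let ssh run commands for this user."""
    return entry["shell"] not in NOLOGIN_SHELLS


nova = parse_passwd_line("nova:x:162:162:OpenStack Nova Daemons:/var/lib/nova:/sbin/nologin")
print(nova["home"], nova["shell"], can_login(nova))
# prints: /var/lib/nova /sbin/nologin False
```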

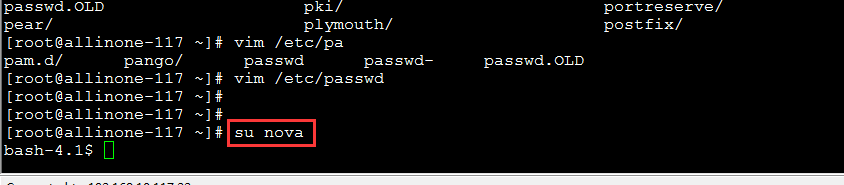

Change nova into a user that is allowed to log in:

```text
nova:x:162:162:OpenStack Nova Daemons:/var/lib/nova:/bin/bash
```

Then scp the generated public key to node 114:

```bash
scp /var/lib/nova/.ssh/id_rsa.pub root@192.168.10.114:/var/lib/nova/.ssh/authorized_keys
```
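Copying `id_rsa.pub` directly onto `authorized_keys` replaces whatever keys that file already held on 114. If other keys must keep working, appending is safer. A minimal Python sketch of an idempotent append, meant to run on the destination host; the function name `append_authorized_key` is hypothetical, and the 0700/0600 modes are the conventional OpenSSH settings.

```python
import os


def append_authorized_key(ssh_dir, pubkey_line):
    """Add one public key to ssh_dir/authorized_keys without dropping existing keys.

    Creates ssh_dir (0700) and the file (0600) if they do not exist yet.
    Returns True if the key was appended, False if it was already present.
    """
    os.makedirs(ssh_dir, exist_ok=True)
    os.chmod(ssh_dir, 0o700)  # makedirs' mode is filtered by umask, so set it explicitly
    path = os.path.join(ssh_dir, "authorized_keys")
    key = pubkey_line.strip()

    content = ""
    if os.path.exists(path):
        with open(path) as f:
            content = f.read()
    if key in (line.strip() for line in content.splitlines()):
        return False

    with open(path, "a") as f:
        # Keep the previous last key intact if it lacks a trailing newline.
        if content and not content.endswith("\n"):
            f.write("\n")
        f.write(key + "\n")
    os.chmod(path, 0o600)
    return True
```

Run as root on 114 with the contents of 117's `/var/lib/nova/.ssh/id_rsa.pub`, the files end up owned by root, so follow it with `chown -R nova:nova /var/lib/nova/.ssh`.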

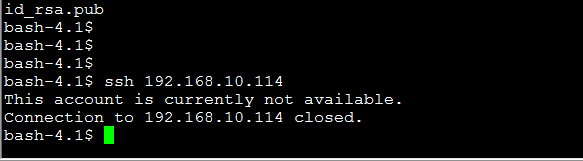

然后再nova下面执行:ssh 192.168.10.114

报错说当前账户不可用。

登录到114上面查看:

发现nova用户被禁止登录了。

打开:

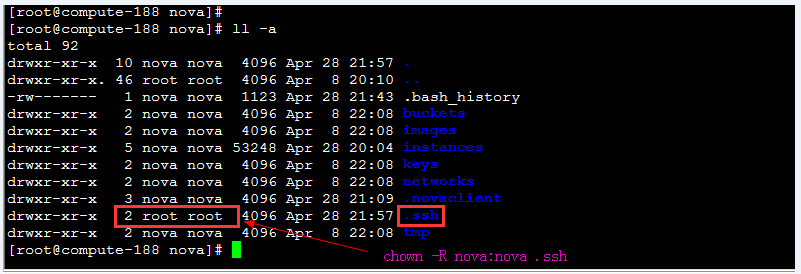

再次到117上执行发现能登录了:

注意.ssh的所属组和用户必须为nova:nova,否则无密码登录会失败
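A quick way to check the ownership rule above, plus OpenSSH's `StrictModes` requirement that these paths not be writable by group or others, is a short Python sketch like the one below. The function name `ssh_dir_problems` is hypothetical; it only inspects `.ssh` and `authorized_keys`, not the home directory.

```python
import os
import stat


def ssh_dir_problems(ssh_dir, expected_uid, expected_gid):
    """Return a list of reasons sshd may ignore the keys under ssh_dir.

    Checks that ssh_dir and ssh_dir/authorized_keys exist, are owned by the
    expected uid/gid, and are not writable by group or others.
    """
    problems = []
    for path in (ssh_dir, os.path.join(ssh_dir, "authorized_keys")):
        if not os.path.exists(path):
            problems.append("%s: missing" % path)
            continue
        st = os.stat(path)
        if st.st_uid != expected_uid or st.st_gid != expected_gid:
            problems.append("%s: owned by %d:%d, expected %d:%d"
                            % (path, st.st_uid, st.st_gid, expected_uid, expected_gid))
        if st.st_mode & (stat.S_IWGRP | stat.S_IWOTH):
            problems.append("%s: writable by group or others" % path)
    return problems
```

On a node, look up nova's IDs with the standard `pwd` module: `n = pwd.getpwnam("nova")`, then `ssh_dir_problems("/var/lib/nova/.ssh", n.pw_uid, n.pw_gid)`; an empty list means both checks pass.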

Verify resize again:

Success!

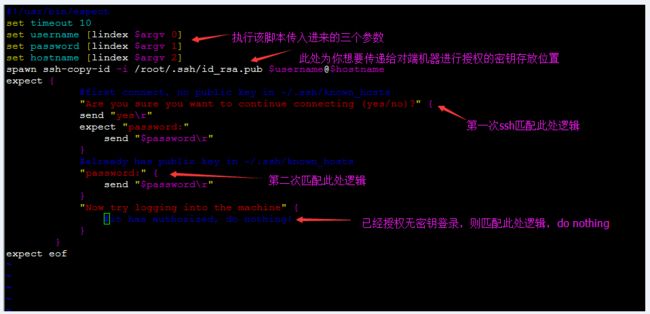

To automate the passwordless-login setup, you can use a script like the following:

vim auto_ssh.sh

```tcl
#!/usr/bin/expect
set timeout 10
set username [lindex $argv 0]
set password [lindex $argv 1]
set hostname [lindex $argv 2]
spawn ssh-copy-id -i /root/.ssh/id_rsa.pub $username@$hostname
expect {
    "Are you sure you want to continue connecting (yes/no)?" {
        # first connection: the host key is not yet in ~/.ssh/known_hosts
        send "yes\r"
        expect "password:"
        send "$password\r"
    }
    "password:" {
        # the host key is already in ~/.ssh/known_hosts
        send "$password\r"
    }
    "Now try logging into the machine" {
        # already authorized, nothing to do
    }
}
expect eof
```

chmod 755 auto_ssh.sh

Then run the following command:

./auto_ssh.sh root 123456 192.168.10.162

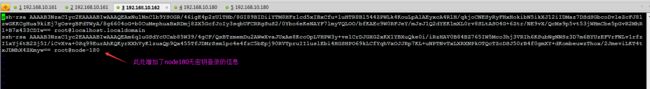

Result:

Checking on machine 162:

Success!

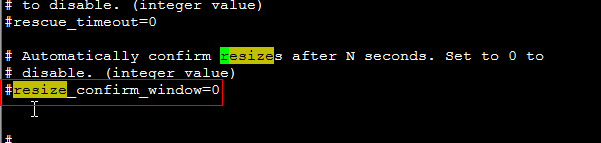

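Note that `/etc/nova/nova.conf` contains two resize-related options:

The first (`resize_confirm_window` in Icehouse) means that if a resize is not confirmed within N seconds, it is confirmed automatically; 0 disables this.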

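The second, `allow_resize_to_same_host`, when set to true allows the destination host to be the same as the source host. When it is false (the default), the source host is excluded as a resize destination:

```ini
# Allow destination machine to match source for resize. Useful
# when testing in single-host environments. (boolean value)
#allow_resize_to_same_host=false
```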
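To check which values a node actually uses, a small Python sketch with the standard `configparser` module can read both options from the `[DEFAULT]` section. The fallbacks assume the Icehouse defaults (`false` and `0`); interpolation is disabled because nova.conf can contain `%` in logging format strings, and the helper name `resize_settings` is hypothetical.

```python
import configparser


def resize_settings(conf_text):
    """Return the two resize-related options from nova.conf-style text."""
    # interpolation=None: '%' in values (e.g. log formats) must not be expanded.
    # strict=False: tolerate duplicated options instead of raising.
    cp = configparser.ConfigParser(interpolation=None, strict=False)
    cp.read_string(conf_text)
    default = cp["DEFAULT"]
    return {
        "allow_resize_to_same_host": default.getboolean(
            "allow_resize_to_same_host", fallback=False),
        "resize_confirm_window": default.getint(
            "resize_confirm_window", fallback=0),
    }


sample = """
[DEFAULT]
allow_resize_to_same_host=true
resize_confirm_window=60
"""
print(resize_settings(sample))
# prints: {'allow_resize_to_same_host': True, 'resize_confirm_window': 60}
```

In practice you would pass `open("/etc/nova/nova.conf").read()`; commented-out lines such as `#allow_resize_to_same_host=false` are ignored, so the fallback applies.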

Next, test resize and resize confirmation:

After a test resize, revert it:

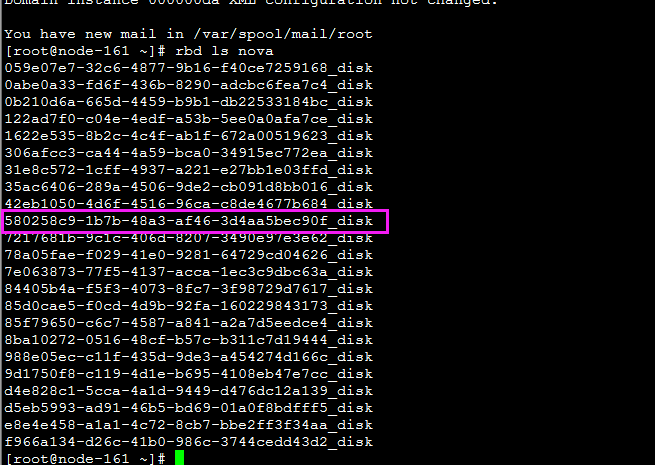

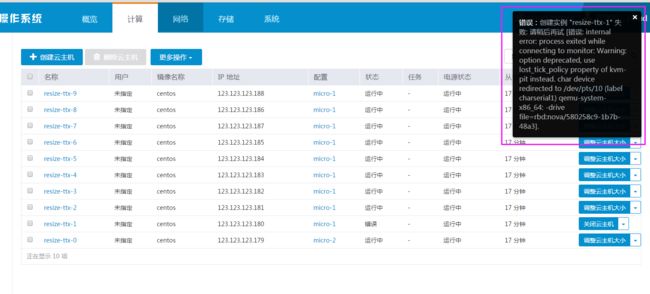

Fixing the bug where the rbd disk file cannot be found after revert_resize

```xml
</features>
<clock offset='utc'>
  <timer name='pit' tickpolicy='delay'/>
  <timer name='rtc' tickpolicy='catchup'/>
  <timer name='hpet' present='no'/>
</clock>
<on_poweroff>destroy</on_poweroff>
<on_reboot>restart</on_reboot>
<on_crash>destroy</on_crash>
<devices>
  <emulator>/usr/bin/qemu-system-x86_64</emulator>
  <disk type='network' device='disk'>
    <driver name='qemu' type='raw' cache='none'/>
    <auth username='admin'>
      <secret type='ceph' uuid='6f943fc4-2611-48b9-96a3-a5917db4a281'/>
    </auth>
    <source protocol='rbd' name='nova/07b57f2d-3c61-4f7f-8716-bd0cd78fe33b_disk'>
      <host name='11.11.0.161' port='6789'/>
      <host name='11.11.0.162' port='6789'/>
    </source>
    <target dev='vda' bus='virtio'/>
    <address type='pci' domain='0x0000' bus='0x00' slot='0x04' function='0x0'/>
  </disk>
  <disk type='file' device='cdrom'>
    <driver name='qemu' type='raw' cache='none'/>
    <source file='/var/lib/nova/instances/07b57f2d-3c61-4f7f-8716-bd0cd78fe33b/disk.config'/>
    <target dev='vdd' bus='ide'/>
```
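The important detail in this XML is the disk source: the image is `nova/<instance uuid>_disk` in the rbd pool, and no host name appears in it. On a shared Ceph cluster, the source and destination compute nodes therefore refer to the same image. A small Python sketch that extracts rbd sources from a domain XML (for example the output of `virsh dumpxml`); the sample is a trimmed, well-formed version of the excerpt above, with the surrounding `<domain>` element assumed, and `rbd_disks` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET


def rbd_disks(domain_xml):
    """Return [(pool/image, [monitor hosts])] for every rbd-backed disk."""
    root = ET.fromstring(domain_xml)
    found = []
    for disk in root.findall("./devices/disk[@type='network']"):
        source = disk.find("source[@protocol='rbd']")
        if source is None:
            continue  # a network disk that is not rbd (e.g. iSCSI)
        hosts = [h.get("name") for h in source.findall("host")]
        found.append((source.get("name"), hosts))
    return found


SAMPLE = """<domain type='kvm'>
  <devices>
    <disk type='network' device='disk'>
      <source protocol='rbd' name='nova/07b57f2d-3c61-4f7f-8716-bd0cd78fe33b_disk'>
        <host name='11.11.0.161' port='6789'/>
        <host name='11.11.0.162' port='6789'/>
      </source>
      <target dev='vda' bus='virtio'/>
    </disk>
    <disk type='file' device='cdrom'>
      <source file='/var/lib/nova/instances/07b57f2d-3c61-4f7f-8716-bd0cd78fe33b/disk.config'/>
      <target dev='vdd' bus='ide'/>
    </disk>
  </devices>
</domain>"""

print(rbd_disks(SAMPLE))
# prints: [('nova/07b57f2d-3c61-4f7f-8716-bd0cd78fe33b_disk', ['11.11.0.161', '11.11.0.162'])]
```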

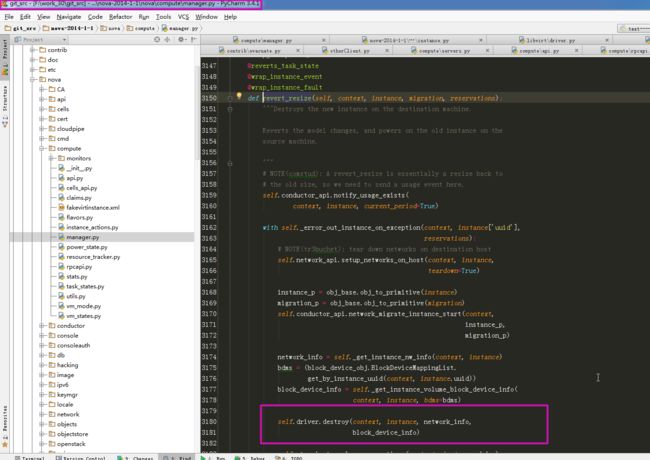

Tracing the code reveals the cause:

vim /usr/lib/python2.6/site-packages/nova/compute/manager.py

```python
@wrap_exception()
@reverts_task_state
@wrap_instance_event
@wrap_instance_fault
def revert_resize(self, context, instance, migration, reservations):
    """Destroys the new instance on the destination machine.

    Reverts the model changes, and powers on the old instance on the
    source machine.

    """
    quotas = quotas_obj.Quotas.from_reservations(context,
                                                 reservations,
                                                 instance=instance)

    # NOTE(comstud): A revert_resize is essentially a resize back to
    # the old size, so we need to send a usage event here.
    self.conductor_api.notify_usage_exists(
            context, instance, current_period=True)

    with self._error_out_instance_on_exception(context, instance,
                                               quotas=quotas):
        # NOTE(tr3buchet): tear down networks on destination host
        self.network_api.setup_networks_on_host(context, instance,
                                                teardown=True)

        instance_p = obj_base.obj_to_primitive(instance)
        migration_p = obj_base.obj_to_primitive(migration)
        self.network_api.migrate_instance_start(context,
                                                instance_p,
                                                migration_p)

        network_info = self._get_instance_nw_info(context, instance)
        bdms = objects.BlockDeviceMappingList.get_by_instance_uuid(
                context, instance.uuid)
        block_device_info = self._get_instance_block_device_info(
                            context, instance, bdms=bdms)

        # Author's note: this destroys the VM that was resized from A to B,
        # which also deletes the shared rbd disk image. That is why
        # revert_resize then fails with an "rbd image not found" error.
        self.driver.destroy(context, instance, network_info,
                            block_device_info)
        self._terminate_volume_connections(context, instance, bdms)

        migration.status = 'reverted'
        migration.save(context.elevated())

        rt = self._get_resource_tracker(instance.node)
        rt.drop_resize_claim(context, instance)

        self.compute_rpcapi.finish_revert_resize(context, instance,
                migration, migration.source_compute,
                quotas.reservations)
```
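To make the failure mode concrete, here is a toy Python model (not nova code; every name in it is hypothetical) of what the comment above describes: both hosts see one shared pool, the image name depends only on the instance UUID, so destroying the guest on the destination removes the very image the source needs for the revert.

```python
def image_name(instance_uuid):
    # Same naming as the domain XML: <uuid>_disk, with no host in the name.
    return "%s_disk" % instance_uuid


def destroy_guest(pool, instance_uuid, cleanup_rbd=True):
    """Model of driver.destroy() on one host: removing the guest also removes its rbd image."""
    if cleanup_rbd:
        pool.discard(image_name(instance_uuid))


def revert_resize(pool, instance_uuid, cleanup_rbd=True):
    """Model of revert_resize: destroy the guest on B, then restart it on A."""
    destroy_guest(pool, instance_uuid, cleanup_rbd)  # runs on destination host B
    wanted = image_name(instance_uuid)               # source host A looks up the same name
    if wanted not in pool:
        raise LookupError("rbd image %s not found" % wanted)
    return "reverted"


uuid = "07b57f2d-3c61-4f7f-8716-bd0cd78fe33b"
shared_pool = {image_name(uuid)}  # one Ceph pool seen by both A and B
try:
    revert_resize(shared_pool, uuid)
except LookupError as exc:
    print(exc)  # prints: rbd image 07b57f2d-3c61-4f7f-8716-bd0cd78fe33b_disk not found
```

With `cleanup_rbd=False` the same call returns `"reverted"`, which is the behavior the fix below aims for.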

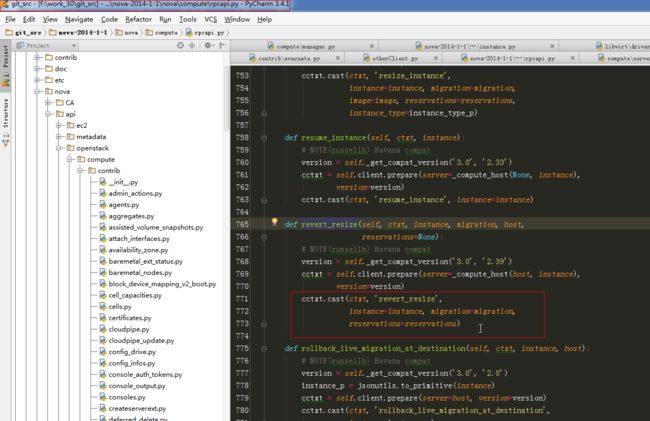

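Below is the code traced from the revert_resize WSGI router entry point down to the lower layers.

The fix: when the backend is rbd shared storage, do not delete the rbd image.

vim +1070 /usr/lib/python2.6/site-packages/nova/virt/libvirt/driver.py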

```python
if destroy_disks:
    self._delete_instance_files(instance)

    self._cleanup_lvm(instance)
    #NOTE(haomai): destroy volumes if needed
    if CONF.libvirt.images_type == 'rbd':
        # edit by ttx: don't delete the rbd image file when reverting a resize
        #self._cleanup_rbd(instance)
        pass  # an if-block needs a statement once the call is commented out
```
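One side effect worth checking: as far as I can tell from the Icehouse libvirt driver, this `cleanup()` branch is also reached when an instance is deleted normally (with `destroy_disks=True`), so commenting out `_cleanup_rbd` may leave the instance's rbd image behind after a plain delete. A narrower variant would skip the rbd cleanup only when the caller knows the image is still used by another host. The Python sketch below only models that decision; `disks_to_clean` and its `shared_storage` flag are hypothetical, not part of nova, and passing such a flag from `revert_resize` down to `cleanup()` is an assumption left to the reader.

```python
def disks_to_clean(images_type, destroy_disks, shared_storage):
    """Decide which cleanup steps a destroy() call should run (hypothetical helper).

    images_type mirrors CONF.libvirt.images_type; shared_storage is an assumed
    flag the caller sets when another host still needs the disk, e.g. during
    revert_resize on rbd.
    """
    steps = []
    if not destroy_disks:
        return steps
    steps.append("delete_instance_files")
    steps.append("cleanup_lvm")
    if images_type == "rbd" and not shared_storage:
        steps.append("cleanup_rbd")  # normal delete: the image is really gone
    return steps


print(disks_to_clean("rbd", True, shared_storage=True))
# prints: ['delete_instance_files', 'cleanup_lvm']
```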

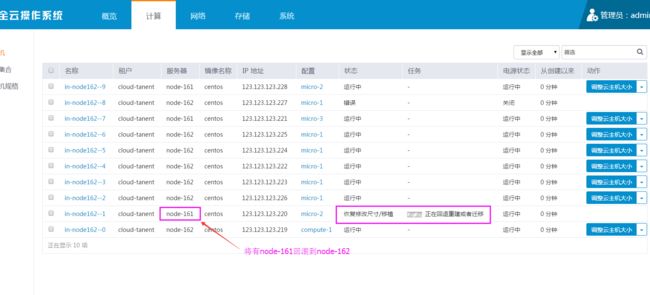

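Test resize and resize revert again:

VM state at the time of the screenshot above:

Check that the VM is healthy after the revert (the revert process does in fact restart the VM):

The bugs in resize, resize confirmation, and resize revert are fixed, and testing is complete.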