x264 Code Analysis (6): The x264_encoder_headers() Function within encode()

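The encode() function is the backbone of x264. It consists of four main parts: x264_encoder_open(), x264_encoder_headers(), x264_encoder_encode(), and x264_encoder_close(). Of these, x264_encoder_encode() is the core, and all of the actual H.264 video coding algorithms live in that module. The previous post analyzed x264_encoder_open(); this post studies x264_encoder_headers().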

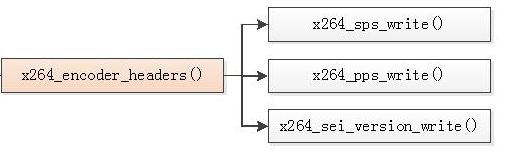

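x264_encoder_headers() is a libx264 API function that outputs the header information of the H.264 stream, namely the SPS, PPS, and SEI, as shown in the figure above. It calls the following functions: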

x264_sps_write(): outputs the SPS

x264_pps_write(): outputs the PPS

x264_sei_version_write(): outputs the SEI

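The rest of this post covers the functions involved in x264_encoder_headers(). It starts with the initialization of the SPS and PPS, then walks through x264_encoder_headers() itself, and finally looks at the functions it uses to output the SPS/PPS/SEI header information: x264_sps_write(), x264_pps_write(), and x264_sei_version_write().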

1. SPS and PPS initialization functions: x264_sps_init() and x264_pps_init()

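x264_sps_init() generates the SPS (Sequence Parameter Set) of the H.264 stream from the input parameters; that is, it initializes the members of the SPS structure from the information in the x264_param_t parameter set. The function is defined in encoder\set.c, and its code is as follows: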

//////////////// Initialize the SPS

void x264_sps_init( x264_sps_t *sps, int i_id, x264_param_t *param )

{

int csp = param->i_csp & X264_CSP_MASK;

sps->i_id = i_id;

sps->i_mb_width = ( param->i_width + 15 ) / 16; // width in macroblocks

sps->i_mb_height= ( param->i_height + 15 ) / 16; // height in macroblocks

sps->i_chroma_format_idc = csp >= X264_CSP_I444 ? CHROMA_444 :

csp >= X264_CSP_I422 ? CHROMA_422 : CHROMA_420; // chroma sampling format

sps->b_qpprime_y_zero_transform_bypass = param->rc.i_rc_method == X264_RC_CQP && param->rc.i_qp_constant == 0;

// profile

if( sps->b_qpprime_y_zero_transform_bypass || sps->i_chroma_format_idc == CHROMA_444 )

sps->i_profile_idc = PROFILE_HIGH444_PREDICTIVE; // lossless coding (CQP with qp 0) or YUV 4:4:4 input

else if( sps->i_chroma_format_idc == CHROMA_422 )

sps->i_profile_idc = PROFILE_HIGH422;

else if( BIT_DEPTH > 8 )

sps->i_profile_idc = PROFILE_HIGH10;

else if( param->analyse.b_transform_8x8 || param->i_cqm_preset != X264_CQM_FLAT )

sps->i_profile_idc = PROFILE_HIGH; // High profile, currently the most common

else if( param->b_cabac || param->i_bframe > 0 || param->b_interlaced || param->b_fake_interlaced || param->analyse.i_weighted_pred > 0 )

sps->i_profile_idc = PROFILE_MAIN; // Main profile

else

sps->i_profile_idc = PROFILE_BASELINE; // Baseline profile

sps->b_constraint_set0 = sps->i_profile_idc == PROFILE_BASELINE;

/* x264 doesn't support the features that are in Baseline and not in Main,

* namely arbitrary_slice_order and slice_groups. */

sps->b_constraint_set1 = sps->i_profile_idc <= PROFILE_MAIN;

/* Never set constraint_set2, it is not necessary and not used in real world. */

sps->b_constraint_set2 = 0;

sps->b_constraint_set3 = 0;

// level

sps->i_level_idc = param->i_level_idc;

if( param->i_level_idc == 9 && ( sps->i_profile_idc == PROFILE_BASELINE || sps->i_profile_idc == PROFILE_MAIN ) )

{

sps->b_constraint_set3 = 1; /* level 1b with Baseline or Main profile is signalled via constraint_set3 */

sps->i_level_idc = 11;

}

/* Intra profiles */

if( param->i_keyint_max == 1 && sps->i_profile_idc > PROFILE_HIGH )

sps->b_constraint_set3 = 1;

sps->vui.i_num_reorder_frames = param->i_bframe_pyramid ? 2 : param->i_bframe ? 1 : 0;

/* extra slot with pyramid so that we don't have to override the

* order of forgetting old pictures */

// number of reference frames

sps->vui.i_max_dec_frame_buffering =

sps->i_num_ref_frames = X264_MIN(X264_REF_MAX, X264_MAX4(param->i_frame_reference, 1 + sps->vui.i_num_reorder_frames,

param->i_bframe_pyramid ? 4 : 1, param->i_dpb_size));

sps->i_num_ref_frames -= param->i_bframe_pyramid == X264_B_PYRAMID_STRICT;

if( param->i_keyint_max == 1 )

{

sps->i_num_ref_frames = 0;

sps->vui.i_max_dec_frame_buffering = 0;

}

/* number of refs + current frame */

int max_frame_num = sps->vui.i_max_dec_frame_buffering * (!!param->i_bframe_pyramid+1) + 1;

/* Intra refresh cannot write a recovery time greater than max frame num-1 */

if( param->b_intra_refresh )

{

int time_to_recovery = X264_MIN( sps->i_mb_width - 1, param->i_keyint_max ) + param->i_bframe - 1;

max_frame_num = X264_MAX( max_frame_num, time_to_recovery+1 );

}

sps->i_log2_max_frame_num = 4;

while( (1 << sps->i_log2_max_frame_num) <= max_frame_num )

sps->i_log2_max_frame_num++;

// POC type

sps->i_poc_type = param->i_bframe || param->b_interlaced || param->i_avcintra_class ? 0 : 2;

if( sps->i_poc_type == 0 )

{

int max_delta_poc = (param->i_bframe + 2) * (!!param->i_bframe_pyramid + 1) * 2;

sps->i_log2_max_poc_lsb = 4;

while( (1 << sps->i_log2_max_poc_lsb) <= max_delta_poc * 2 )

sps->i_log2_max_poc_lsb++;

}

sps->b_vui = 1;

sps->b_gaps_in_frame_num_value_allowed = 0;

sps->b_frame_mbs_only = !(param->b_interlaced || param->b_fake_interlaced);

if( !sps->b_frame_mbs_only )

sps->i_mb_height = ( sps->i_mb_height + 1 ) & ~1;

sps->b_mb_adaptive_frame_field = param->b_interlaced;

sps->b_direct8x8_inference = 1;

sps->crop.i_left = param->crop_rect.i_left;

sps->crop.i_top = param->crop_rect.i_top;

sps->crop.i_right = param->crop_rect.i_right + sps->i_mb_width*16 - param->i_width;

sps->crop.i_bottom = (param->crop_rect.i_bottom + sps->i_mb_height*16 - param->i_height) >> !sps->b_frame_mbs_only;

sps->b_crop = sps->crop.i_left || sps->crop.i_top ||

sps->crop.i_right || sps->crop.i_bottom;

sps->vui.b_aspect_ratio_info_present = 0;

if( param->vui.i_sar_width > 0 && param->vui.i_sar_height > 0 )

{

sps->vui.b_aspect_ratio_info_present = 1;

sps->vui.i_sar_width = param->vui.i_sar_width;

sps->vui.i_sar_height= param->vui.i_sar_height;

}

sps->vui.b_overscan_info_present = param->vui.i_overscan > 0 && param->vui.i_overscan <= 2;

if( sps->vui.b_overscan_info_present )

sps->vui.b_overscan_info = ( param->vui.i_overscan == 2 ? 1 : 0 );

sps->vui.b_signal_type_present = 0;

sps->vui.i_vidformat = ( param->vui.i_vidformat >= 0 && param->vui.i_vidformat <= 5 ? param->vui.i_vidformat : 5 );

sps->vui.b_fullrange = ( param->vui.b_fullrange >= 0 && param->vui.b_fullrange <= 1 ? param->vui.b_fullrange :

( csp >= X264_CSP_BGR ? 1 : 0 ) );

sps->vui.b_color_description_present = 0;

sps->vui.i_colorprim = ( param->vui.i_colorprim >= 0 && param->vui.i_colorprim <= 9 ? param->vui.i_colorprim : 2 );

sps->vui.i_transfer = ( param->vui.i_transfer >= 0 && param->vui.i_transfer <= 15 ? param->vui.i_transfer : 2 );

sps->vui.i_colmatrix = ( param->vui.i_colmatrix >= 0 && param->vui.i_colmatrix <= 10 ? param->vui.i_colmatrix :

( csp >= X264_CSP_BGR ? 0 : 2 ) );

if( sps->vui.i_colorprim != 2 ||

sps->vui.i_transfer != 2 ||

sps->vui.i_colmatrix != 2 )

{

sps->vui.b_color_description_present = 1;

}

if( sps->vui.i_vidformat != 5 ||

sps->vui.b_fullrange ||

sps->vui.b_color_description_present )

{

sps->vui.b_signal_type_present = 1;

}

/* FIXME: not sufficient for interlaced video */

sps->vui.b_chroma_loc_info_present = param->vui.i_chroma_loc > 0 && param->vui.i_chroma_loc <= 5 &&

sps->i_chroma_format_idc == CHROMA_420;

if( sps->vui.b_chroma_loc_info_present )

{

sps->vui.i_chroma_loc_top = param->vui.i_chroma_loc;

sps->vui.i_chroma_loc_bottom = param->vui.i_chroma_loc;

}

sps->vui.b_timing_info_present = param->i_timebase_num > 0 && param->i_timebase_den > 0;

if( sps->vui.b_timing_info_present )

{

sps->vui.i_num_units_in_tick = param->i_timebase_num;

sps->vui.i_time_scale = param->i_timebase_den * 2;

sps->vui.b_fixed_frame_rate = !param->b_vfr_input;

}

sps->vui.b_vcl_hrd_parameters_present = 0; // we don't support VCL HRD

sps->vui.b_nal_hrd_parameters_present = !!param->i_nal_hrd;

sps->vui.b_pic_struct_present = param->b_pic_struct;

// NOTE: HRD related parts of the SPS are initialised in x264_ratecontrol_init_reconfigurable

sps->vui.b_bitstream_restriction = param->i_keyint_max > 1;

if( sps->vui.b_bitstream_restriction )

{

sps->vui.b_motion_vectors_over_pic_boundaries = 1;

sps->vui.i_max_bytes_per_pic_denom = 0;

sps->vui.i_max_bits_per_mb_denom = 0;

sps->vui.i_log2_max_mv_length_horizontal =

sps->vui.i_log2_max_mv_length_vertical = (int)log2f( X264_MAX( 1, param->analyse.i_mv_range*4-1 ) ) + 1;

}

}
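
Two of the derived fields in x264_sps_init() are easy to check by hand: the macroblock-aligned picture size with its cropping, and log2_max_frame_num. The sketch below reproduces just those two calculations. It assumes progressive video and a zero user crop_rect; the struct and function names are hypothetical and are not part of x264.

```c
#include <assert.h>

/* Hypothetical helper struct; x264 keeps these values inside x264_sps_t. */
typedef struct
{
    int mb_width;     /* picture width in 16x16 macroblocks  */
    int mb_height;    /* picture height in 16x16 macroblocks */
    int crop_right;   /* padding pixels to crop on the right */
    int crop_bottom;  /* padding pixels to crop at the bottom */
} sps_geometry_t;

/* Round the picture size up to whole macroblocks and report how many
 * padding pixels the decoder has to crop away again. */
static sps_geometry_t sps_geometry( int width, int height )
{
    assert( width > 0 && height > 0 );
    sps_geometry_t g;
    g.mb_width    = ( width  + 15 ) / 16;
    g.mb_height   = ( height + 15 ) / 16;
    g.crop_right  = g.mb_width  * 16 - width;
    g.crop_bottom = g.mb_height * 16 - height;
    return g;
}

/* Smallest n >= 4 with (1 << n) > max_frame_num, mirroring the while loop above. */
static int log2_max_frame_num( int max_frame_num )
{
    assert( max_frame_num >= 0 );
    int n = 4;
    while( (1 << n) <= max_frame_num )
        n++;
    return n;
}
```

For a 1920x1080 input, sps_geometry( 1920, 1080 ) yields 120x68 macroblocks, so the coded height is 1088 and 8 rows have to be cropped at the bottom. With 4:2:0 chroma, x264_sps_write() later shifts that value right by one before writing it.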

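x264_pps_init() generates the PPS (Picture Parameter Set) of the H.264 stream from the input parameters; that is, it initializes the members of the PPS structure from the information in the x264_param_t parameter set. The function is defined in encoder\set.c, and its code is as follows: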

//////////////// Initialize the PPS

void x264_pps_init( x264_pps_t *pps, int i_id, x264_param_t *param, x264_sps_t *sps )

{

pps->i_id = i_id;

pps->i_sps_id = sps->i_id; // the SPS this PPS belongs to

pps->b_cabac = param->b_cabac; // use CABAC?

pps->b_pic_order = !param->i_avcintra_class && param->b_interlaced;

pps->i_num_slice_groups = 1;

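// default number of active entries in reference list 0

// note: "active" means the number of reference indices a slice uses by default (the slice header can override it), not the total number of frames held in the decoded picture buffer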

pps->i_num_ref_idx_l0_default_active = param->i_frame_reference;

pps->i_num_ref_idx_l1_default_active = 1;

// weighted prediction

pps->b_weighted_pred = param->analyse.i_weighted_pred > 0;

pps->b_weighted_bipred = param->analyse.b_weighted_bipred ? 2 : 0;

// initial value of the quantization parameter (QP)

pps->i_pic_init_qp = param->rc.i_rc_method == X264_RC_ABR || param->b_stitchable ? 26 + QP_BD_OFFSET : SPEC_QP( param->rc.i_qp_constant );

pps->i_pic_init_qs = 26 + QP_BD_OFFSET;

pps->i_chroma_qp_index_offset = param->analyse.i_chroma_qp_offset;

pps->b_deblocking_filter_control = 1;

pps->b_constrained_intra_pred = param->b_constrained_intra;

pps->b_redundant_pic_cnt = 0;

pps->b_transform_8x8_mode = param->analyse.b_transform_8x8 ? 1 : 0;

pps->i_cqm_preset = param->i_cqm_preset;

switch( pps->i_cqm_preset )

{

case X264_CQM_FLAT:

for( int i = 0; i < 8; i++ )

pps->scaling_list[i] = x264_cqm_flat16;

break;

case X264_CQM_JVT:

for( int i = 0; i < 8; i++ )

pps->scaling_list[i] = x264_cqm_jvt[i];

break;

case X264_CQM_CUSTOM:

/* match the transposed DCT & zigzag */

transpose( param->cqm_4iy, 4 );

transpose( param->cqm_4py, 4 );

transpose( param->cqm_4ic, 4 );

transpose( param->cqm_4pc, 4 );

transpose( param->cqm_8iy, 8 );

transpose( param->cqm_8py, 8 );

transpose( param->cqm_8ic, 8 );

transpose( param->cqm_8pc, 8 );

pps->scaling_list[CQM_4IY] = param->cqm_4iy;

pps->scaling_list[CQM_4PY] = param->cqm_4py;

pps->scaling_list[CQM_4IC] = param->cqm_4ic;

pps->scaling_list[CQM_4PC] = param->cqm_4pc;

pps->scaling_list[CQM_8IY+4] = param->cqm_8iy;

pps->scaling_list[CQM_8PY+4] = param->cqm_8py;

pps->scaling_list[CQM_8IC+4] = param->cqm_8ic;

pps->scaling_list[CQM_8PC+4] = param->cqm_8pc;

for( int i = 0; i < 8; i++ )

for( int j = 0; j < (i < 4 ? 16 : 64); j++ )

if( pps->scaling_list[i][j] == 0 )

pps->scaling_list[i] = x264_cqm_jvt[i];

break;

}

}
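
In the X264_CQM_CUSTOM branch above, x264_pps_init() calls transpose() on each user-supplied matrix, but the listing does not show that helper. The following is a minimal sketch of what such an in-place square transpose could look like. The name transpose_square is hypothetical, and the body is an assumption based on the call sites, not a copy of x264's code.

```c
#include <assert.h>
#include <stdint.h>

/* Transpose a w x w matrix stored row by row, in place.
 * Illustrative stand-in for the transpose() helper called above. */
static void transpose_square( uint8_t *buf, int w )
{
    assert( buf && w > 0 );
    for( int i = 0; i < w; i++ )
        for( int j = 0; j < i; j++ )
        {
            /* swap element (i,j) with element (j,i) */
            uint8_t tmp  = buf[w*i + j];
            buf[w*i + j] = buf[w*j + i];
            buf[w*j + i] = tmp;
        }
}
```

Because the operation works in place on the arrays inside x264_param_t, the caller's custom matrices are modified as a side effect, which is worth keeping in mind when reading the branch above.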

2. The x264_encoder_headers() function

x264_encoder_headers() is a libx264 API function that outputs the header information of the H.264 stream (SPS, PPS, and SEI). It is defined in encoder\encoder.c. It calls x264_sps_write(), x264_pps_write(), and x264_sei_version_write() to output the SPS, PPS, and SEI respectively. x264_nal_start() must be called before each NALU is written, and x264_nal_end() must be called after it. The annotated code is as follows:

/******************************************************************/

/******************************************************************/

/*

======Analysed by RuiDong Fang

======Csdn Blog:http://blog.csdn.net/frd2009041510

======Date:2016.03.09

*/

/******************************************************************/

/******************************************************************/

/************====== x264_encoder_headers() function ======************/

/*

Purpose: x264_encoder_headers() is a libx264 API function that outputs the header information of the H.264 stream (SPS/PPS/SEI)

*/

/****************************************************************************

* x264_encoder_headers:

****************************************************************************/

int x264_encoder_headers( x264_t *h, x264_nal_t **pp_nal, int *pi_nal )

{

int frame_size = 0;

/* init bitstream context */

h->out.i_nal = 0;

bs_init( &h->out.bs, h->out.p_bitstream, h->out.i_bitstream );

/* Write SEI, SPS and PPS. */

/* x264_nal_start() must be called before each NALU is written, and x264_nal_end() after it */

/* generate sequence parameters */

x264_nal_start( h, NAL_SPS, NAL_PRIORITY_HIGHEST );

x264_sps_write( &h->out.bs, h->sps ); ////////////////////////// output the SPS

if( x264_nal_end( h ) )

return -1;

/* generate picture parameters */

x264_nal_start( h, NAL_PPS, NAL_PRIORITY_HIGHEST );

x264_pps_write( &h->out.bs, h->sps, h->pps ); ////////////////////////// output the PPS

if( x264_nal_end( h ) )

return -1;

/* identify ourselves */

x264_nal_start( h, NAL_SEI, NAL_PRIORITY_DISPOSABLE );

if( x264_sei_version_write( h, &h->out.bs ) ) ////////////////////////// output the SEI (which contains the configuration information)

return -1;

if( x264_nal_end( h ) )

return -1;

frame_size = x264_encoder_encapsulate_nals( h, 0 );

if( frame_size < 0 )

return -1;

/* now set output*/

*pi_nal = h->out.i_nal;

*pp_nal = &h->out.nal[0];

h->out.i_nal = 0;

return frame_size;

}
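
Each x264_nal_start() call above passes a NAL unit type and a priority. In the H.264 byte stream these two values end up in the one-byte NAL header, together with a forbidden_zero_bit. The sketch below shows how that byte is laid out. The enum names are local to this example; the numeric values (SEI = 6, SPS = 7, PPS = 8, nal_ref_idc 0 to 3) come from the H.264 specification.

```c
#include <assert.h>
#include <stdint.h>

/* nal_unit_type values from the H.264 specification (names local to this sketch) */
enum { NAL_TYPE_SEI = 6, NAL_TYPE_SPS = 7, NAL_TYPE_PPS = 8 };

/* nal_ref_idc values corresponding to "disposable" and "highest" priority */
enum { REF_IDC_DISPOSABLE = 0, REF_IDC_HIGHEST = 3 };

/* Build the NAL header byte:
 * forbidden_zero_bit (1 bit) | nal_ref_idc (2 bits) | nal_unit_type (5 bits) */
static uint8_t nal_header_byte( int ref_idc, int type )
{
    assert( ref_idc >= 0 && ref_idc <= 3 );
    assert( type >= 0 && type <= 31 );
    return (uint8_t)( (0 << 7) | (ref_idc << 5) | type );
}
```

With these values an SPS written at the highest priority starts with 0x67, a PPS with 0x68, and a disposable SEI with 0x06, which are the bytes usually seen right after the start code in a stream dump.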

2.1 The x264_sps_write() function

x264_sps_write() outputs the SPS. It is defined in encoder\set.c and writes the contents of the x264_sps_t structure into the bitstream to form a NALU. The code is as follows:

//////////////// Output the SPS

void x264_sps_write( bs_t *s, x264_sps_t *sps )

{

bs_realign( s );

bs_write( s, 8, sps->i_profile_idc ); // profile, 8 bits

bs_write1( s, sps->b_constraint_set0 );

bs_write1( s, sps->b_constraint_set1 );

bs_write1( s, sps->b_constraint_set2 );

bs_write1( s, sps->b_constraint_set3 );

bs_write( s, 4, 0 ); /* reserved */

bs_write( s, 8, sps->i_level_idc ); // level, 8 bits

bs_write_ue( s, sps->i_id ); // id of this SPS

if( sps->i_profile_idc >= PROFILE_HIGH )

{

// chroma sampling format

// 0: monochrome

// 1: 4:2:0

// 2: 4:2:2

// 3: 4:4:4

bs_write_ue( s, sps->i_chroma_format_idc );

if( sps->i_chroma_format_idc == CHROMA_444 )

bs_write1( s, 0 ); // separate_colour_plane_flag

// luma

// bit depth = bit_depth_luma_minus8 + 8

bs_write_ue( s, BIT_DEPTH-8 ); // bit_depth_luma_minus8

// chroma uses the same bit depth as luma

bs_write_ue( s, BIT_DEPTH-8 ); // bit_depth_chroma_minus8

bs_write1( s, sps->b_qpprime_y_zero_transform_bypass );

bs_write1( s, 0 ); // seq_scaling_matrix_present_flag

}

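// log2_max_frame_num_minus4 exists mainly so that another syntax element, frame_num, can be read

// frame_num is one of the most important syntax elements

// this syntax element fixes the range of frame_num, which runs from 0 to MaxFrameNum - 1:

// MaxFrameNum = 2^( log2_max_frame_num_minus4 + 4 )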

bs_write_ue( s, sps->i_log2_max_frame_num - 4 );

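// pic_order_cnt_type selects how the POC (picture order count) is coded

// the POC identifies the display order of the pictures

// because H.264 uses B-frame prediction, the decoding order is not necessarily the display order, but there is a mapping between the two

// the POC can be derived from frame_num through that mapping, or simply be transmitted explicitly by the encoder

// H.264 defines three POC coding methods in total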

bs_write_ue( s, sps->i_poc_type );

if( sps->i_poc_type == 0 )

bs_write_ue( s, sps->i_log2_max_poc_lsb - 4 );

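// num_ref_frames gives the maximum length the reference frame list can reach; the decoder allocates storage for decoded reference frames according to this value

// H.264 allows at most 16 reference frames, so the maximum value is 16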

bs_write_ue( s, sps->i_num_ref_frames );

bs_write1( s, sps->b_gaps_in_frame_num_value_allowed );

// pic_width_in_mbs_minus1 plus 1 is the picture width in macroblocks:

// PicWidthInMbs = pic_width_in_mbs_minus1 + 1

// picture width in luma samples: width = PicWidthInMbs*16

bs_write_ue( s, sps->i_mb_width - 1 );

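// pic_height_in_map_units_minus1 plus 1 is the picture height in map units (one macroblock row when frame_mbs_only_flag is 1, a pair of macroblock rows otherwise, hence the shift below)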

bs_write_ue( s, (sps->i_mb_height >> !sps->b_frame_mbs_only) - 1);

bs_write1( s, sps->b_frame_mbs_only );

if( !sps->b_frame_mbs_only )

bs_write1( s, sps->b_mb_adaptive_frame_field );

bs_write1( s, sps->b_direct8x8_inference );

bs_write1( s, sps->b_crop );

if( sps->b_crop )

{

int h_shift = sps->i_chroma_format_idc == CHROMA_420 || sps->i_chroma_format_idc == CHROMA_422;

int v_shift = sps->i_chroma_format_idc == CHROMA_420;

bs_write_ue( s, sps->crop.i_left >> h_shift );

bs_write_ue( s, sps->crop.i_right >> h_shift );

bs_write_ue( s, sps->crop.i_top >> v_shift );

bs_write_ue( s, sps->crop.i_bottom >> v_shift );

}

bs_write1( s, sps->b_vui );

if( sps->b_vui )

{

bs_write1( s, sps->vui.b_aspect_ratio_info_present );

if( sps->vui.b_aspect_ratio_info_present )

{

int i;

static const struct { uint8_t w, h, sar; } sar[] =

{

// aspect_ratio_idc = 0 -> unspecified

{ 1, 1, 1 }, { 12, 11, 2 }, { 10, 11, 3 }, { 16, 11, 4 },

{ 40, 33, 5 }, { 24, 11, 6 }, { 20, 11, 7 }, { 32, 11, 8 },

{ 80, 33, 9 }, { 18, 11, 10}, { 15, 11, 11}, { 64, 33, 12},

{160, 99, 13}, { 4, 3, 14}, { 3, 2, 15}, { 2, 1, 16},

// aspect_ratio_idc = [17..254] -> reserved

{ 0, 0, 255 }

};

for( i = 0; sar[i].sar != 255; i++ )

{

if( sar[i].w == sps->vui.i_sar_width &&

sar[i].h == sps->vui.i_sar_height )

break;

}

bs_write( s, 8, sar[i].sar );

if( sar[i].sar == 255 ) /* aspect_ratio_idc (extended) */

{

bs_write( s, 16, sps->vui.i_sar_width );

bs_write( s, 16, sps->vui.i_sar_height );

}

}

bs_write1( s, sps->vui.b_overscan_info_present );

if( sps->vui.b_overscan_info_present )

bs_write1( s, sps->vui.b_overscan_info );

bs_write1( s, sps->vui.b_signal_type_present );

if( sps->vui.b_signal_type_present )

{

bs_write( s, 3, sps->vui.i_vidformat );

bs_write1( s, sps->vui.b_fullrange );

bs_write1( s, sps->vui.b_color_description_present );

if( sps->vui.b_color_description_present )

{

bs_write( s, 8, sps->vui.i_colorprim );

bs_write( s, 8, sps->vui.i_transfer );

bs_write( s, 8, sps->vui.i_colmatrix );

}

}

bs_write1( s, sps->vui.b_chroma_loc_info_present );

if( sps->vui.b_chroma_loc_info_present )

{

bs_write_ue( s, sps->vui.i_chroma_loc_top );

bs_write_ue( s, sps->vui.i_chroma_loc_bottom );

}

bs_write1( s, sps->vui.b_timing_info_present );

if( sps->vui.b_timing_info_present )

{

bs_write32( s, sps->vui.i_num_units_in_tick );

bs_write32( s, sps->vui.i_time_scale );

bs_write1( s, sps->vui.b_fixed_frame_rate );

}

bs_write1( s, sps->vui.b_nal_hrd_parameters_present );

if( sps->vui.b_nal_hrd_parameters_present )

{

bs_write_ue( s, sps->vui.hrd.i_cpb_cnt - 1 );

bs_write( s, 4, sps->vui.hrd.i_bit_rate_scale );

bs_write( s, 4, sps->vui.hrd.i_cpb_size_scale );

bs_write_ue( s, sps->vui.hrd.i_bit_rate_value - 1 );

bs_write_ue( s, sps->vui.hrd.i_cpb_size_value - 1 );

bs_write1( s, sps->vui.hrd.b_cbr_hrd );

bs_write( s, 5, sps->vui.hrd.i_initial_cpb_removal_delay_length - 1 );

bs_write( s, 5, sps->vui.hrd.i_cpb_removal_delay_length - 1 );

bs_write( s, 5, sps->vui.hrd.i_dpb_output_delay_length - 1 );

bs_write( s, 5, sps->vui.hrd.i_time_offset_length );

}

bs_write1( s, sps->vui.b_vcl_hrd_parameters_present );

if( sps->vui.b_nal_hrd_parameters_present || sps->vui.b_vcl_hrd_parameters_present )

bs_write1( s, 0 ); /* low_delay_hrd_flag */

bs_write1( s, sps->vui.b_pic_struct_present );

bs_write1( s, sps->vui.b_bitstream_restriction );

if( sps->vui.b_bitstream_restriction )

{

bs_write1( s, sps->vui.b_motion_vectors_over_pic_boundaries );

bs_write_ue( s, sps->vui.i_max_bytes_per_pic_denom );

bs_write_ue( s, sps->vui.i_max_bits_per_mb_denom );

bs_write_ue( s, sps->vui.i_log2_max_mv_length_horizontal );

bs_write_ue( s, sps->vui.i_log2_max_mv_length_vertical );

bs_write_ue( s, sps->vui.i_num_reorder_frames );

bs_write_ue( s, sps->vui.i_max_dec_frame_buffering );

}

}

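// RBSP trailing bits

// whether or not the current bitstream position is byte-aligned, write one 1 bit followed by 0 to 7 zero bits so that the stream ends byte-aligned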

bs_rbsp_trailing( s );

bs_flush( s );

}
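
Most of the fields above are written with bs_write_ue(), that is, as unsigned Exp-Golomb codes (ue(v) in the H.264 specification). The listing does not show how x264 implements it, so the sketch below only illustrates the code itself: the value plus one is written in binary, preceded by one zero bit for every bit after its leading 1. The function renders the code word as a string of '0' and '1' characters so that it is easy to inspect; it is a hypothetical helper, not x264's bit writer.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Render the ue(v) code word for val as '0'/'1' characters in out
 * (NUL-terminated) and return the code length in bits. */
static int ue_code_string( uint32_t val, char *out, size_t out_size )
{
    uint64_t code = (uint64_t)val + 1;        /* val + 1 is always >= 1 */
    int len = 0;
    for( uint64_t t = code; t; t >>= 1 )      /* count significant bits */
        len++;
    int total = 2 * len - 1;
    assert( out && out_size > (size_t)total );

    memset( out, '0', (size_t)(len - 1) );    /* len-1 leading zero bits */
    for( int i = 0; i < len; i++ )            /* then val+1, MSB first */
        out[len - 1 + i] = ( (code >> (len - 1 - i)) & 1 ) ? '1' : '0';
    out[total] = '\0';
    return total;
}
```

For example, the value 0 becomes the single bit 1, the value 1 becomes 010, and pic_width_in_mbs_minus1 = 119 (a 1920-pixel-wide picture) becomes the 13-bit word 0000001111000. Small values therefore cost very few bits, which suits header fields that are usually near zero.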

2.2 The x264_pps_write() function

x264_pps_write() outputs the PPS. It is defined in encoder\set.c and writes the contents of the x264_pps_t structure into the bitstream to form a NALU. The code is as follows:

//////////////// Output the PPS

void x264_pps_write( bs_t *s, x264_sps_t *sps, x264_pps_t *pps )

{

bs_realign( s );

bs_write_ue( s, pps->i_id ); // id of this PPS

bs_write_ue( s, pps->i_sps_id );// id of the SPS this PPS refers to

//entropy_coding_mode_flag

// 0: entropy coding uses CAVLC; 1: entropy coding uses CABAC

bs_write1( s, pps->b_cabac );

bs_write1( s, pps->b_pic_order );

bs_write_ue( s, pps->i_num_slice_groups - 1 );

bs_write_ue( s, pps->i_num_ref_idx_l0_default_active - 1 );

bs_write_ue( s, pps->i_num_ref_idx_l1_default_active - 1 );

// do P slices use weighted prediction?

bs_write1( s, pps->b_weighted_pred );

// weighted prediction mode for B slices (weighted_bipred_idc, 2 bits): 0 = default, 1 = explicit, 2 = implicit

bs_write( s, 2, pps->b_weighted_bipred );

// pic_init_qp_minus26 plus 26 gives the initial QP of the luma component

bs_write_se( s, pps->i_pic_init_qp - 26 - QP_BD_OFFSET );

bs_write_se( s, pps->i_pic_init_qs - 26 - QP_BD_OFFSET );

bs_write_se( s, pps->i_chroma_qp_index_offset );

bs_write1( s, pps->b_deblocking_filter_control );

bs_write1( s, pps->b_constrained_intra_pred );

bs_write1( s, pps->b_redundant_pic_cnt );

if( pps->b_transform_8x8_mode || pps->i_cqm_preset != X264_CQM_FLAT )

{

bs_write1( s, pps->b_transform_8x8_mode );

bs_write1( s, (pps->i_cqm_preset != X264_CQM_FLAT) );

if( pps->i_cqm_preset != X264_CQM_FLAT )

{

scaling_list_write( s, pps, CQM_4IY );

scaling_list_write( s, pps, CQM_4IC );

bs_write1( s, 0 ); // Cr = Cb

scaling_list_write( s, pps, CQM_4PY );

scaling_list_write( s, pps, CQM_4PC );

bs_write1( s, 0 ); // Cr = Cb

if( pps->b_transform_8x8_mode )

{

if( sps->i_chroma_format_idc == CHROMA_444 )

{

scaling_list_write( s, pps, CQM_8IY+4 );

scaling_list_write( s, pps, CQM_8IC+4 );

bs_write1( s, 0 ); // Cr = Cb

scaling_list_write( s, pps, CQM_8PY+4 );

scaling_list_write( s, pps, CQM_8PC+4 );

bs_write1( s, 0 ); // Cr = Cb

}

else

{

scaling_list_write( s, pps, CQM_8IY+4 );

scaling_list_write( s, pps, CQM_8PY+4 );

}

}

}

bs_write_se( s, pps->i_chroma_qp_index_offset ); // second_chroma_qp_index_offset, written with the same value as the first offset

}

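// RBSP trailing bits

// whether or not the current bitstream position is byte-aligned, write one 1 bit followed by 0 to 7 zero bits so that the stream ends byte-aligned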

bs_rbsp_trailing( s );

bs_flush( s );

}
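
The QP-related fields above are written with bs_write_se(), the signed Exp-Golomb code (se(v)). In the H.264 specification a signed value is first mapped to an unsigned code number and then coded exactly like ue(v): positive values take the odd code numbers and zero or negative values take the even ones. The sketch below shows only that mapping; se_to_code_num is a hypothetical name, and x264's own bs_write_se() is not shown in this post.

```c
#include <assert.h>
#include <stdint.h>

/* Map a signed value to the unsigned code number used by se(v):
 *   0 -> 0, 1 -> 1, -1 -> 2, 2 -> 3, -2 -> 4, ... */
static uint32_t se_to_code_num( int32_t val )
{
    /* keep 2*val inside the int32_t range */
    assert( val > INT32_MIN / 2 && val <= INT32_MAX / 2 );
    return val > 0 ? (uint32_t)( 2 * val - 1 ) : (uint32_t)( -2 * val );
}
```

As an illustration, an 8-bit encode with pic_init_qp = 23 has pic_init_qp_minus26 = -3, which maps to code number 6 and is then written as the ue(v) code for 6.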

2.3 The x264_sei_version_write() function

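x264_sei_version_write() outputs the SEI. The SEI generally carries supplemental information about the H.264 stream. x264_sei_version_write() is defined in encoder\set.c. It first calls x264_param2string() to save the current configuration parameters in the string opts, then calls sprintf() to combine opts with the version text into the complete SEI payload, and finally calls x264_sei_write() to output that payload. The process involves one libx264 API function, x264_param2string(). The code is as follows: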

//////////////// Output the SEI (which contains the configuration information)

int x264_sei_version_write( x264_t *h, bs_t *s )

{

// random ID number generated according to ISO-11578

static const uint8_t uuid[16] =

{

0xdc, 0x45, 0xe9, 0xbd, 0xe6, 0xd9, 0x48, 0xb7,

0x96, 0x2c, 0xd8, 0x20, 0xd9, 0x23, 0xee, 0xef

};

char *opts = x264_param2string( &h->param, 0 );////////////// convert the settings to a string

char *payload;

int length;

if( !opts )

return -1;

CHECKED_MALLOC( payload, 200 + strlen( opts ) );

memcpy( payload, uuid, 16 );

// the content of the configuration information

// the opts string is fairly long

sprintf( payload+16, "x264 - core %d%s - H.264/MPEG-4 AVC codec - "

"Copy%s 2003-2016 - http://www.videolan.org/x264.html - options: %s",

X264_BUILD, X264_VERSION, HAVE_GPL?"left":"right", opts );

length = strlen(payload)+1;

// output the SEI

// the payload type is USER_DATA_UNREGISTERED

x264_sei_write( s, (uint8_t *)payload, length, SEI_USER_DATA_UNREGISTERED );

x264_free( opts );

x264_free( payload );

return 0;

fail:

x264_free( opts );

return -1;

}
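
x264_sei_write() itself is not listed in this post. According to the H.264 SEI message syntax, both the payload type and the payload size are coded as a run of 0xFF bytes followed by one final byte holding the remainder, so a value of 255 or more needs several bytes. The sketch below shows that byte coding under this assumption; sei_write_ff_coded is a hypothetical helper and does not reproduce x264's implementation.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Code value as zero or more 0xFF bytes followed by one byte < 0xFF.
 * Returns the number of bytes written to out. */
static size_t sei_write_ff_coded( uint8_t *out, size_t out_size, unsigned value )
{
    size_t n = 0;
    assert( out );
    while( value >= 255 )
    {
        assert( n < out_size );
        out[n++] = 0xFF;          /* each 0xFF byte stands for 255 */
        value -= 255;
    }
    assert( n < out_size );
    out[n++] = (uint8_t)value;    /* final byte carries the remainder */
    return n;
}
```

The user_data_unregistered payload type is 5 and fits in one byte, while a 300-byte version string would have its size coded as the two bytes 0xFF 0x2D. Since the options string is long, the size field of this SEI typically takes more than one byte.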

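x264_param2string() converts the current settings into a string. It is defined in common\common.c. As the code shows, it walks through almost every libx264 option, concatenates them into one long string using the "s += sprintf()" idiom, and finally returns that string. The code is as follows: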

/****************************************************************************

* x264_param2string:

****************************************************************************/

////////////////////// convert the settings to a string

char *x264_param2string( x264_param_t *p, int b_res )

{

int len = 1000;

char *buf, *s;

if( p->rc.psz_zones )

len += strlen(p->rc.psz_zones);

buf = s = x264_malloc( len );

if( !buf )

return NULL;

if( b_res )

{

s += sprintf( s, "%dx%d ", p->i_width, p->i_height );

s += sprintf( s, "fps=%u/%u ", p->i_fps_num, p->i_fps_den );

s += sprintf( s, "timebase=%u/%u ", p->i_timebase_num, p->i_timebase_den );

s += sprintf( s, "bitdepth=%d ", BIT_DEPTH );

}

if( p->b_opencl )

s += sprintf( s, "opencl=%d ", p->b_opencl );

s += sprintf( s, "cabac=%d", p->b_cabac );

s += sprintf( s, " ref=%d", p->i_frame_reference );

s += sprintf( s, " deblock=%d:%d:%d", p->b_deblocking_filter,

p->i_deblocking_filter_alphac0, p->i_deblocking_filter_beta );

s += sprintf( s, " analyse=%#x:%#x", p->analyse.intra, p->analyse.inter );

s += sprintf( s, " me=%s", x264_motion_est_names[ p->analyse.i_me_method ] );

s += sprintf( s, " subme=%d", p->analyse.i_subpel_refine );

s += sprintf( s, " psy=%d", p->analyse.b_psy );

if( p->analyse.b_psy )

s += sprintf( s, " psy_rd=%.2f:%.2f", p->analyse.f_psy_rd, p->analyse.f_psy_trellis );

s += sprintf( s, " mixed_ref=%d", p->analyse.b_mixed_references );

s += sprintf( s, " me_range=%d", p->analyse.i_me_range );

s += sprintf( s, " chroma_me=%d", p->analyse.b_chroma_me );

s += sprintf( s, " trellis=%d", p->analyse.i_trellis );

s += sprintf( s, " 8x8dct=%d", p->analyse.b_transform_8x8 );

s += sprintf( s, " cqm=%d", p->i_cqm_preset );

s += sprintf( s, " deadzone=%d,%d", p->analyse.i_luma_deadzone[0], p->analyse.i_luma_deadzone[1] );

s += sprintf( s, " fast_pskip=%d", p->analyse.b_fast_pskip );

s += sprintf( s, " chroma_qp_offset=%d", p->analyse.i_chroma_qp_offset );

s += sprintf( s, " threads=%d", p->i_threads );

s += sprintf( s, " lookahead_threads=%d", p->i_lookahead_threads );

s += sprintf( s, " sliced_threads=%d", p->b_sliced_threads );

if( p->i_slice_count )

s += sprintf( s, " slices=%d", p->i_slice_count );

if( p->i_slice_count_max )

s += sprintf( s, " slices_max=%d", p->i_slice_count_max );

if( p->i_slice_max_size )

s += sprintf( s, " slice_max_size=%d", p->i_slice_max_size );

if( p->i_slice_max_mbs )

s += sprintf( s, " slice_max_mbs=%d", p->i_slice_max_mbs );

if( p->i_slice_min_mbs )

s += sprintf( s, " slice_min_mbs=%d", p->i_slice_min_mbs );

s += sprintf( s, " nr=%d", p->analyse.i_noise_reduction );

s += sprintf( s, " decimate=%d", p->analyse.b_dct_decimate );

s += sprintf( s, " interlaced=%s", p->b_interlaced ? p->b_tff ? "tff" : "bff" : p->b_fake_interlaced ? "fake" : "0" );

s += sprintf( s, " bluray_compat=%d", p->b_bluray_compat );

if( p->b_stitchable )

s += sprintf( s, " stitchable=%d", p->b_stitchable );

s += sprintf( s, " constrained_intra=%d", p->b_constrained_intra );

s += sprintf( s, " bframes=%d", p->i_bframe );

if( p->i_bframe )

{

s += sprintf( s, " b_pyramid=%d b_adapt=%d b_bias=%d direct=%d weightb=%d open_gop=%d",

p->i_bframe_pyramid, p->i_bframe_adaptive, p->i_bframe_bias,

p->analyse.i_direct_mv_pred, p->analyse.b_weighted_bipred, p->b_open_gop );

}

s += sprintf( s, " weightp=%d", p->analyse.i_weighted_pred > 0 ? p->analyse.i_weighted_pred : 0 );

if( p->i_keyint_max == X264_KEYINT_MAX_INFINITE )

s += sprintf( s, " keyint=infinite" );

else

s += sprintf( s, " keyint=%d", p->i_keyint_max );

s += sprintf( s, " keyint_min=%d scenecut=%d intra_refresh=%d",

p->i_keyint_min, p->i_scenecut_threshold, p->b_intra_refresh );

if( p->rc.b_mb_tree || p->rc.i_vbv_buffer_size )

s += sprintf( s, " rc_lookahead=%d", p->rc.i_lookahead );

s += sprintf( s, " rc=%s mbtree=%d", p->rc.i_rc_method == X264_RC_ABR ?

( p->rc.b_stat_read ? "2pass" : p->rc.i_vbv_max_bitrate == p->rc.i_bitrate ? "cbr" : "abr" )

: p->rc.i_rc_method == X264_RC_CRF ? "crf" : "cqp", p->rc.b_mb_tree );

if( p->rc.i_rc_method == X264_RC_ABR || p->rc.i_rc_method == X264_RC_CRF )

{

if( p->rc.i_rc_method == X264_RC_CRF )

s += sprintf( s, " crf=%.1f", p->rc.f_rf_constant );

else

s += sprintf( s, " bitrate=%d ratetol=%.1f",

p->rc.i_bitrate, p->rc.f_rate_tolerance );

s += sprintf( s, " qcomp=%.2f qpmin=%d qpmax=%d qpstep=%d",

p->rc.f_qcompress, p->rc.i_qp_min, p->rc.i_qp_max, p->rc.i_qp_step );

if( p->rc.b_stat_read )

s += sprintf( s, " cplxblur=%.1f qblur=%.1f",

p->rc.f_complexity_blur, p->rc.f_qblur );

if( p->rc.i_vbv_buffer_size )

{

s += sprintf( s, " vbv_maxrate=%d vbv_bufsize=%d",

p->rc.i_vbv_max_bitrate, p->rc.i_vbv_buffer_size );

if( p->rc.i_rc_method == X264_RC_CRF )

s += sprintf( s, " crf_max=%.1f", p->rc.f_rf_constant_max );

}

}

else if( p->rc.i_rc_method == X264_RC_CQP )

s += sprintf( s, " qp=%d", p->rc.i_qp_constant );

if( p->rc.i_vbv_buffer_size )

s += sprintf( s, " nal_hrd=%s filler=%d", x264_nal_hrd_names[p->i_nal_hrd], p->rc.b_filler );

if( p->crop_rect.i_left | p->crop_rect.i_top | p->crop_rect.i_right | p->crop_rect.i_bottom )

s += sprintf( s, " crop_rect=%u,%u,%u,%u", p->crop_rect.i_left, p->crop_rect.i_top,

p->crop_rect.i_right, p->crop_rect.i_bottom );

if( p->i_frame_packing >= 0 )

s += sprintf( s, " frame-packing=%d", p->i_frame_packing );

if( !(p->rc.i_rc_method == X264_RC_CQP && p->rc.i_qp_constant == 0) )

{

s += sprintf( s, " ip_ratio=%.2f", p->rc.f_ip_factor );

if( p->i_bframe && !p->rc.b_mb_tree )

s += sprintf( s, " pb_ratio=%.2f", p->rc.f_pb_factor );

s += sprintf( s, " aq=%d", p->rc.i_aq_mode );

if( p->rc.i_aq_mode )

s += sprintf( s, ":%.2f", p->rc.f_aq_strength );

if( p->rc.psz_zones )

s += sprintf( s, " zones=%s", p->rc.psz_zones );

else if( p->rc.i_zones )

s += sprintf( s, " zones" );

}

return buf;

}
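
The "s += sprintf()" idiom above relies on the buffer (1000 bytes plus the length of the zones string) being large enough for every option. For comparison, here is a minimal sketch of the same append pattern with an explicit bounds check based on vsnprintf(). This is a different technique from the one x264 uses, and strbuf_t and strbuf_appendf are hypothetical names introduced only for this example.

```c
#include <assert.h>
#include <stdarg.h>
#include <stddef.h>
#include <stdio.h>

/* Hypothetical bounded string builder: buf holds cap bytes, len are used. */
typedef struct
{
    char  *buf;
    size_t cap;
    size_t len;
} strbuf_t;

/* Append formatted text; returns 0 on success, -1 if it would not fit.
 * On failure the buffer keeps its previous content. */
static int strbuf_appendf( strbuf_t *sb, const char *fmt, ... )
{
    assert( sb && sb->buf && sb->len < sb->cap );
    size_t room = sb->cap - sb->len;

    va_list ap;
    va_start( ap, fmt );
    int n = vsnprintf( sb->buf + sb->len, room, fmt, ap );
    va_end( ap );

    if( n < 0 || (size_t)n >= room )
    {
        sb->buf[sb->len] = '\0';   /* discard the truncated write */
        return -1;
    }
    sb->len += (size_t)n;          /* same role as "s += sprintf(...)" */
    return 0;
}
```

A caller would initialize the struct with a buffer whose first byte is '\0' and then chain calls such as strbuf_appendf( &sb, "cabac=%d", 1 ) and strbuf_appendf( &sb, " ref=%d", 3 ), checking the return value instead of trusting a precomputed length.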