# HBase Study Notes, Part 2

Tags (space-separated): HBase

[TOC]

I. Review and recap

Kudu: aims to combine the strengths of HDFS and HBase, and is said to be about 10 times faster than HBase.

II. HBase architecture and the role of each component

2. ZooKeeper

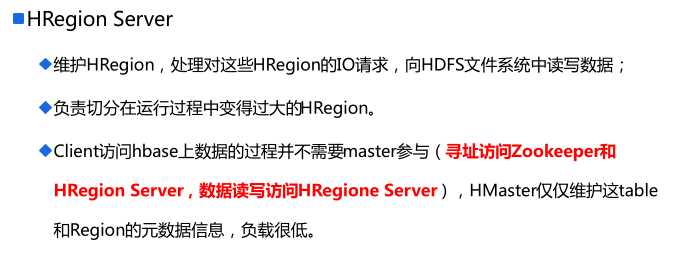

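==== Stores the addressing entry point for all HRegions ====

Table -> HRegion -> HRegionServer

1 : N | 1 : 1 (one table is split into N HRegions; each HRegion is served by exactly one HRegionServer at a time)

Client -> ZooKeeper -> meta -> Region -> RegionServer

The client asks ZooKeeper where the meta table is, reads meta to find the Region covering the row key, and then talks to the RegionServer that hosts that Region.

====================================================================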
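The lookup chain above can be illustrated with a small, self-contained sketch. This is not HBase client code: the class `RegionLookupSketch`, the server names, and the start keys are all hypothetical. It only models the idea that meta maps each Region's start row key to the server hosting it, and that the client picks the Region with the greatest start key that does not exceed the requested row key.

```java
import java.util.Map;
import java.util.TreeMap;

/** Toy model of meta-table addressing; hypothetical, not the real HBase client. */
public class RegionLookupSketch {

    // Hypothetical meta content: Region start key -> hosting RegionServer.
    // The empty start key marks the first Region of the table.
    // String ordering matches HBase's byte ordering only for ASCII keys.
    private final TreeMap<String, String> meta = new TreeMap<>();

    public void addRegion(String startKey, String regionServer) {
        meta.put(startKey, regionServer);
    }

    /** Returns the server of the Region whose start key is the greatest one <= rowKey. */
    public String locate(String rowKey) {
        Map.Entry<String, String> entry = meta.floorEntry(rowKey);
        if (entry == null) {
            throw new IllegalStateException("no region covers row " + rowKey);
        }
        return entry.getValue();
    }

    /** Builds a made-up table with three Regions. */
    public static RegionLookupSketch sample() {
        RegionLookupSketch lookup = new RegionLookupSketch();
        lookup.addRegion("", "rs1.example.com");
        lookup.addRegion("10005", "rs2.example.com");
        lookup.addRegion("20000", "rs3.example.com");
        return lookup;
    }

    public static void main(String[] args) {
        RegionLookupSketch lookup = sample();
        for (String rowKey : new String[] {"10001", "10005", "10007", "30000"}) {
            System.out.println(rowKey + " -> " + lookup.locate(rowKey));
        }
    }
}
```

In the real system the client caches the result of this lookup, so ZooKeeper and meta are not consulted on every request.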

==== Configuring master-backup (a backup master) ====

1. Create the backup-masters file in the conf directory

2. Add the IP address of each backup server, one per line

3. bin/hbase-daemon.sh start master-backup

5. HBase & ZooKeeper

III. HBase Java API

1. Add the following to pom.xml

    <!-- HBase Client; ${hbase.version} must be defined under <properties> -->
    <dependency>
        <groupId>org.apache.hbase</groupId>
        <artifactId>hbase-server</artifactId>
        <version>${hbase.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hbase</groupId>
        <artifactId>hbase-client</artifactId>
        <version>${hbase.version}</version>
    </dependency>

2. Copy the configuration files into the resources directory

Copy core-site.xml, hdfs-site.xml, and hbase-site.xml into /home/hadoop002/workspace5/hadoopstudy/res
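The files have to end up on the classpath, because `HBaseConfiguration.create()` reads hbase-site.xml from there. A minimal sketch of a sanity check follows; the class name `ConfigOnClasspathCheck` and its method are hypothetical helpers, and the sketch assumes the resources directory is registered as a source or resource folder of the project.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical helper: reports which client configuration files are not on the classpath. */
public class ConfigOnClasspathCheck {

    // The three files named in the step above.
    public static final List<String> REQUIRED =
            Arrays.asList("core-site.xml", "hdfs-site.xml", "hbase-site.xml");

    /** Returns the names from REQUIRED that the given class loader cannot find. */
    public static List<String> missing(ClassLoader loader) {
        List<String> notFound = new ArrayList<>();
        for (String name : REQUIRED) {
            if (loader.getResource(name) == null) {
                notFound.add(name);
            }
        }
        return notFound;
    }

    public static void main(String[] args) {
        List<String> notFound = missing(ConfigOnClasspathCheck.class.getClassLoader());
        if (notFound.isEmpty()) {
            System.out.println("All configuration files are on the classpath.");
        } else {
            System.out.println("Missing from classpath: " + notFound);
        }
    }
}
```

Running this from the same project as HBaseApp before the first connection attempt makes a misplaced configuration file easier to spot than a connection timeout would.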

3. HBaseApp.java is as follows

    package com.ibeifeng.bigdata.senior.hbase.app;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.Cell;
    import org.apache.hadoop.hbase.CellUtil;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.client.Delete;
    import org.apache.hadoop.hbase.client.Get;
    import org.apache.hadoop.hbase.client.HTable;
    import org.apache.hadoop.hbase.client.Put;
    import org.apache.hadoop.hbase.client.Result;
    import org.apache.hadoop.hbase.client.ResultScanner;
    import org.apache.hadoop.hbase.client.Scan;
    import org.apache.hadoop.hbase.filter.PrefixFilter;
    import org.apache.hadoop.hbase.util.Bytes;
    import org.apache.hadoop.io.IOUtils;

    // Uses the pre-1.0 HBase client API (HTable constructor, Put.add, Delete.deleteColumn),
    // which later releases deprecate in favor of Connection/Table.
    public class HBaseApp {

        // Open the named table using the configuration files found on the classpath.
        public static HTable getHTableByTableName(String tableName) throws Exception {
            // HBaseConfiguration.create() loads hbase-site.xml from the classpath
            Configuration conf = HBaseConfiguration.create();
            // The caller is responsible for closing the returned table
            return new HTable(conf, tableName);
        }

        // Read two columns of one row and print every returned cell.
        public static void getData() throws Exception {
            HTable table = HBaseApp.getHTableByTableName("student");
            try {
                // Row key of the row to read
                Get get = new Get(Bytes.toBytes("10001"));
                // Restrict the result to info:name and info:age
                get.addColumn(Bytes.toBytes("info"), Bytes.toBytes("name"));
                get.addColumn(Bytes.toBytes("info"), Bytes.toBytes("age"));
                // get.addFamily(family) would return the whole column family instead
                Result result = table.get(get);
                for (Cell cell : result.rawCells()) {
                    System.out.print(Bytes.toString(CellUtil.cloneRow(cell)) + "\t");
                    System.out.println(
                            Bytes.toString(CellUtil.cloneFamily(cell))
                            + ":" + Bytes.toString(CellUtil.cloneQualifier(cell))
                            + "->" + Bytes.toString(CellUtil.cloneValue(cell))
                            + " " + cell.getTimestamp());
                    System.out.println("-----------------------------");
                }
            } finally {
                // Close the table even if the read fails
                table.close();
            }
        }

        // Write three columns of the info family for row 10007.
        public static void putData() throws Exception {
            HTable table = HBaseApp.getHTableByTableName("student");
            try {
                Put put = new Put(Bytes.toBytes("10007"));
                // Each add() call sets one column: family, qualifier, value
                put.add(Bytes.toBytes("info"), Bytes.toBytes("name"), Bytes.toBytes("tianqi"));
                put.add(Bytes.toBytes("info"), Bytes.toBytes("sex"), Bytes.toBytes("male"));
                put.add(Bytes.toBytes("info"), Bytes.toBytes("address"), Bytes.toBytes("shanghai"));
                /*
                 * Alternative: fill the Put from a map of qualifier -> value.
                 * Map<String, String> kvs = new HashMap<String, String>();
                 * for (String key : kvs.keySet()) {
                 *     put.add(HBaseTableConstant.HBASE_TABLE_STUDENT_CF,
                 *             Bytes.toBytes(key), Bytes.toBytes(kvs.get(key)));
                 * }
                 */
                table.put(put);
            } finally {
                // Close the table even if the write fails
                table.close();
            }
        }

        // Delete the whole info family of row 10012.
        public static void deleteData() throws Exception {
            HTable table = HBaseApp.getHTableByTableName("student");
            try {
                Delete delete = new Delete(Bytes.toBytes("10012"));
                /*
                 * To delete a single column instead:
                 * delete.deleteColumn(Bytes.toBytes("info"), Bytes.toBytes("telphone"));
                 */
                delete.deleteFamily(Bytes.toBytes("info"));
                table.delete(delete);
            } finally {
                // Close the table even if the delete fails
                table.close();
            }
        }

        // Scan a row-key range and print every cell; resources are released in finally.
        public static void scanData() throws Exception {
            String tableName = "student";
            HTable table = null;
            ResultScanner resultScanner = null;
            try {
                table = HBaseApp.getHTableByTableName(tableName);
                Scan scan = new Scan();
                // ==========================================================================
                // Range: the start row is inclusive, the stop row is exclusive
                scan.setStartRow(Bytes.toBytes("1000"));
                scan.setStopRow(Bytes.toBytes("10005"));
                // Equivalent constructor form:
                // Scan scan2 = new Scan(startRow, stopRow);
                // Restrict to a column family or a single column:
                // scan.addFamily(family)
                // scan.addColumn(family, qualifier)
                // Filter: an empty prefix matches every row; pass a real prefix to narrow the scan
                PrefixFilter filter = new PrefixFilter(Bytes.toBytes(""));
                scan.setFilter(filter);
                /*
                 * Call-record query, row key layout: telphone_datetime
                 *   Query the call records for March with a scan range:
                 *     startrow: 18298459876_20160301000000000
                 *     stoprow:  18298459876_20160401000000000
                 *   Or query with a prefix filter:
                 *     prefix = 18298459876_201603
                 * Paging:
                 *   Avoid PageFilter where possible; it is slow and performs poorly.
                 *   Paginate in the front end when rendering instead (pseudo-paging).
                 */
                // Cache
                // scan.setCacheBlocks(cacheBlocks);
                // scan.setCaching(caching);
                // scan.setBatch(batch);
                // ==========================================================================
                resultScanner = table.getScanner(scan);
                for (Result result : resultScanner) {
                    for (Cell cell : result.rawCells()) {
                        System.out.print(Bytes.toString(CellUtil.cloneRow(cell)) + "\t");
                        System.out.println(
                                Bytes.toString(CellUtil.cloneFamily(cell))
                                + ":" + Bytes.toString(CellUtil.cloneQualifier(cell))
                                + "->" + Bytes.toString(CellUtil.cloneValue(cell))
                                + " " + cell.getTimestamp());
                    }
                    System.out.println("-----------------------------");
                }
            } catch (Exception e) {
                e.printStackTrace();
            } finally {
                // Close the scanner before the table it was opened from
                IOUtils.closeStream(resultScanner);
                IOUtils.closeStream(table);
            }
        }

        // Entry point: swap in getData(), putData(), or scanData() to try the other operations.
        public static void main(String[] args) throws Exception {
            deleteData();
        }
    }
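The call-record comment in scanData describes a row key of the form telphone_datetime and a one-month query expressed either as a start/stop row pair or as a prefix. The sketch below shows one way to derive those three strings; the class `CallRecordRowKeys` and its method names are hypothetical, and it assumes the 17-digit timestamp layout yyyyMMddHHmmssSSS seen in the example keys.

```java
import java.time.YearMonth;
import java.time.format.DateTimeFormatter;

/** Hypothetical helper that builds scan boundaries for phone_timestamp row keys. */
public class CallRecordRowKeys {

    private static final DateTimeFormatter MONTH = DateTimeFormatter.ofPattern("yyyyMM");

    // Day 01 followed by HHmmssSSS all zero: the first instant of a month.
    private static final String START_OF_MONTH_SUFFIX = "01" + "000000000";

    /** Inclusive start row: first instant of the given month. */
    public static String startRow(String phone, YearMonth month) {
        return phone + "_" + month.format(MONTH) + START_OF_MONTH_SUFFIX;
    }

    /** Exclusive stop row: first instant of the following month. */
    public static String stopRow(String phone, YearMonth month) {
        return startRow(phone, month.plusMonths(1));
    }

    /** Prefix that matches every record of the phone within the given month. */
    public static String prefix(String phone, YearMonth month) {
        return phone + "_" + month.format(MONTH);
    }

    public static void main(String[] args) {
        YearMonth march = YearMonth.of(2016, 3);
        System.out.println(startRow("18298459876", march));
        System.out.println(stopRow("18298459876", march));
        System.out.println(prefix("18298459876", march));
    }
}
```

The start and stop strings can be passed through `Bytes.toBytes` to `scan.setStartRow` and `scan.setStopRow`, and the prefix to `PrefixFilter`. Because the stop row is exclusive, using the first instant of the next month covers the whole month, including a December to January rollover.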

4. Wrapping the basic HBase operations

- HbaseHelp.java:

        package org.apache.hadoop.hbase;

        import java.io.IOException;
        import java.util.HashMap;
        import java.util.Map;
        import org.apache.hadoop.hbase.client.Delete;
        import org.apache.hadoop.hbase.client.Get;
        import org.apache.hadoop.hbase.client.HTable;
        import org.apache.hadoop.hbase.client.Put;
        import org.apache.hadoop.hbase.client.Result;
        import org.apache.hadoop.hbase.client.ResultScanner;
        import org.apache.hadoop.hbase.client.Scan;
        import org.apache.hadoop.hbase.util.Bytes;

        // Thin wrapper around one HTable; call close() when finished.
        public class HbaseHelp {

            private HTable hTable;

            public HbaseHelp(String tablename) throws Exception {
                this.hTable = HbaseUtils.getHTableByTableName(tablename);
            }

            // Query one row; kvs maps column family -> (column name -> value), only the names are used here.
            public Result queryData(ParamObj paramObj) throws IOException {
                Get get = new Get(Bytes.toBytes(paramObj.getRowKey()));
                Map<String, HashMap<String, String>> kvs = paramObj.getKvs();
                for (String columnfamily : kvs.keySet()) {
                    for (String columnname : kvs.get(columnfamily).keySet()) {
                        get.addColumn(Bytes.toBytes(columnfamily), Bytes.toBytes(columnname));
                    }
                }
                return this.hTable.get(get);
            }

            // Write every family/column/value in kvs to one row; returns false if the write fails.
            public boolean putData(ParamObj paramObj) {
                Put put = new Put(Bytes.toBytes(paramObj.getRowKey()));
                Map<String, HashMap<String, String>> kvs = paramObj.getKvs();
                for (String columnfamily : kvs.keySet()) {
                    HashMap<String, String> columns = kvs.get(columnfamily);
                    for (String columnname : columns.keySet()) {
                        put.add(
                                Bytes.toBytes(columnfamily),
                                Bytes.toBytes(columnname),
                                Bytes.toBytes(columns.get(columnname)));
                    }
                }
                boolean succ = true;
                try {
                    this.hTable.put(put);
                } catch (Exception e) {
                    e.printStackTrace();
                    succ = false;
                }
                return succ;
            }

            // Delete every column family listed in kvs from one row.
            public boolean deleteByColumnFamily(ParamObj paramObj) throws IOException {
                Delete delete = new Delete(Bytes.toBytes(paramObj.getRowKey()));
                Map<String, HashMap<String, String>> kvs = paramObj.getKvs();
                for (String columnfamily : kvs.keySet()) {
                    delete.deleteFamily(Bytes.toBytes(columnfamily));
                }
                // Send one request for all families; an empty Delete would remove the whole row
                if (!kvs.isEmpty()) {
                    this.hTable.delete(delete);
                }
                return true;
            }

            // Delete the latest version of every column listed in kvs from one row.
            public boolean deleteByColumn(ParamObj paramObj) throws IOException {
                Delete delete = new Delete(Bytes.toBytes(paramObj.getRowKey()));
                Map<String, HashMap<String, String>> kvs = paramObj.getKvs();
                boolean hasColumns = false;
                for (String columnfamily : kvs.keySet()) {
                    for (String columnname : kvs.get(columnfamily).keySet()) {
                        delete.deleteColumn(Bytes.toBytes(columnfamily), Bytes.toBytes(columnname));
                        hasColumns = true;
                    }
                }
                // Send one request for all columns; an empty Delete would remove the whole row
                if (hasColumns) {
                    this.hTable.delete(delete);
                }
                return true;
            }

            // Scan [startRowKey, stopRowKey) over the families listed in kvs.
            // The caller must close the returned ResultScanner.
            public ResultScanner scanData(String startRowKey, String stopRowKey, ParamObj paramObj) throws Exception {
                Scan scan = new Scan();
                scan.setStartRow(Bytes.toBytes(startRowKey));
                scan.setStopRow(Bytes.toBytes(stopRowKey));
                Map<String, HashMap<String, String>> kvs = paramObj.getKvs();
                for (String columnfamily : kvs.keySet()) {
                    scan.addFamily(Bytes.toBytes(columnfamily));
                }
                return this.hTable.getScanner(scan);
            }

            // Release the underlying table
            public void close() throws IOException {
                this.hTable.close();
            }
        }

- HbaseUtils.java:

        package org.apache.hadoop.hbase;

        import org.apache.hadoop.conf.Configuration;
        import org.apache.hadoop.hbase.client.HTable;

        public class HbaseUtils {

            // HBaseConfiguration needs no import because this class shares its package.
            public static HTable getHTableByTableName(String tableName) throws Exception {
                // Load hbase-site.xml and related settings from the classpath
                Configuration conf = HBaseConfiguration.create();
                // The caller is responsible for closing the returned table
                return new HTable(conf, tableName);
            }
        }

- ParamObj.java:

        package org.apache.hadoop.hbase;

        import java.util.HashMap;
        import java.util.Map;

        // Parameter holder passed to HbaseHelp: one row key plus the cells to touch.
        public class ParamObj {

            private String rowKey;

            // column family -> (column name -> value)
            private Map<String, HashMap<String, String>> kvs = new HashMap<String, HashMap<String, String>>();

            public String getRowKey() {
                return rowKey;
            }

            public void setRowKey(String rowKey) {
                this.rowKey = rowKey;
            }

            public Map<String, HashMap<String, String>> getKvs() {
                return kvs;
            }

            public void setKvs(Map<String, HashMap<String, String>> kvs) {
                this.kvs = kvs;
            }
        }
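ParamObj exposes only a getter and a setter for the nested map, so callers have to assemble family -> (column -> value) by hand. A minimal sketch of a convenience builder follows; `KvsBuilder` and `addColumn` are hypothetical names that do not appear in the classes above, and the sample values are borrowed from the putData example.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical builder for the nested map that ParamObj.setKvs expects. */
public class KvsBuilder {

    // column family -> (column name -> value), the same shape as ParamObj.kvs
    private final Map<String, HashMap<String, String>> kvs = new HashMap<>();

    /** Adds one cell, creating the inner map for the family on first use. */
    public KvsBuilder addColumn(String family, String qualifier, String value) {
        kvs.computeIfAbsent(family, f -> new HashMap<>()).put(qualifier, value);
        return this;
    }

    public Map<String, HashMap<String, String>> build() {
        return kvs;
    }

    public static void main(String[] args) {
        Map<String, HashMap<String, String>> kvs = new KvsBuilder()
                .addColumn("info", "name", "tianqi")
                .addColumn("info", "sex", "male")
                .addColumn("info", "address", "shanghai")
                .build();
        System.out.println(kvs);
    }
}
```

The result would be handed to `paramObj.setKvs(...)` together with `paramObj.setRowKey(...)` before calling a method such as `HbaseHelp.putData`. For the query, delete, and scan methods only the family and column names matter, so the values can be empty strings.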