Storm Metric

If you have been following Storm’s updates over the past year, you may have noticed the metrics framework feature, added in version 0.9.0 (New Storm metrics system PR). It provides primitives built into Storm for collecting application-specific metrics and reporting them to external systems in a manageable and scalable way.

This blog post is a brief how-to on using this system, since the only examples of its use that I have seen are in the core Storm code.

Concepts

Storm’s metrics framework mainly consists of two API additions: 1) Metrics, 2) Metrics Consumers.

Metric

An object initialized in a Storm bolt or spout (or Trident Function) that is used for instrumenting the respective bolt/spout for metric collection. This object must also be registered with Storm using the TopologyContext.registerMetric(...) function. Metrics must implement backtype.storm.metric.api.IMetric.

Several useful IMetric implementations exist. (Excerpt from the Storm Metrics wiki page with some extra notes added).

AssignableMetric – set the metric to the explicit value you supply. Useful if it’s an external value or in the case that you are already calculating the summary statistic yourself. Note: Useful for statsd gauges.

CombinedMetric – generic interface for metrics that can be updated associatively.

CountMetric – a running total of the supplied values. Call incr() to increment by one, incrBy(n) to add/subtract the given number.

Note: Useful for statsd counters.

MultiCountMetric – a hashmap of count metrics. Note: Useful for many counters where you may not know the name of the metric a priori or where creating many counters manually is burdensome.

MeanReducer – a reducer, used with ReducedMetric, that tracks a running average of the values given to its reduce() method. (It accepts Double, Integer or Long values, and maintains the internal average as a Double.) Despite his reputation, the MeanReducer is actually a pretty nice guy in person.
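The contract behind all of these is small: IMetric has a single method, getValueAndReset(), which hands back the value for the bucket that just ended and starts a fresh one. The block below is a minimal sketch of that behavior for a counter and a mean. SketchCountMetric and SketchMeanMetric are hypothetical stand-ins written for this illustration, not Storm's own classes, so the block runs without Storm on the classpath; returning null for an empty mean is a choice made in the sketch.

```java
// Hypothetical stand-ins that mimic the reset-per-bucket behavior described above.
// They are NOT Storm classes; names and details are assumptions for illustration.

class SketchCountMetric {
    private long value = 0;

    void incr() { value++; }

    void incrBy(long n) { value += n; }

    // Return the total for the bucket that just ended, then start a new bucket.
    Object getValueAndReset() {
        long result = value;
        value = 0;
        return result;
    }
}

class SketchMeanMetric {
    private double sum = 0.0;
    private long count = 0;

    // Accept any numeric type and keep the running state as a double.
    void update(Number n) {
        sum += n.doubleValue();
        count++;
    }

    // Return the mean for the bucket, or null if nothing was recorded (sketch choice).
    Object getValueAndReset() {
        Double result = (count > 0) ? Double.valueOf(sum / count) : null;
        sum = 0.0;
        count = 0;
        return result;
    }
}

class MetricContractDemo {
    public static void main(String[] args) {
        SketchCountMetric counter = new SketchCountMetric();
        counter.incr();
        counter.incrBy(4);
        System.out.println(counter.getValueAndReset()); // prints 5
        System.out.println(counter.getValueAndReset()); // prints 0, the new bucket is empty

        SketchMeanMetric mean = new SketchMeanMetric();
        mean.update(3);    // Integer
        mean.update(5L);   // Long
        mean.update(4.0);  // Double
        System.out.println(mean.getValueAndReset());    // prints 4.0
    }
}
```

The point to take away is that a metric only ever describes one time bucket: whatever you add between two reads is reported once and then forgotten.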

Metrics Consumer

An object meant to process, report, or log the output of Metric objects (represented as DataPoint objects) from all the places those Metric objects were registered. Each batch of data points comes with metadata about where the metrics were collected, such as worker host, worker port, component ID (bolt/spout name), task ID, timestamp, and update interval (all represented as a TaskInfo object). Metrics Consumers are registered in the Storm topology configuration (using backtype.storm.Config.registerMetricsConsumer(...)) or in Storm’s system config (under the config name topology.metrics.consumer.register). Metrics Consumers must implement backtype.storm.metric.api.IMetricsConsumer.
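To make the shape of a consumer concrete, here is a minimal sketch of one that turns each data point into a log-style line tagged with its task metadata. Everything in it is a hypothetical stand-in (SketchTaskInfo, SketchDataPoint, SketchLoggingConsumer, the field names, and the line format are assumptions for illustration); a real consumer would implement IMetricsConsumer and receive Storm's own TaskInfo and DataPoint objects.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collection;
import java.util.List;

// Hypothetical stand-in for the metadata that accompanies each batch of data points.
class SketchTaskInfo {
    final String workerHost;
    final int workerPort;
    final String componentId;
    final int taskId;
    final long timestamp;
    final int updateIntervalSecs; // carried along, but not printed by this sketch

    SketchTaskInfo(String workerHost, int workerPort, String componentId,
                   int taskId, long timestamp, int updateIntervalSecs) {
        this.workerHost = workerHost;
        this.workerPort = workerPort;
        this.componentId = componentId;
        this.taskId = taskId;
        this.timestamp = timestamp;
        this.updateIntervalSecs = updateIntervalSecs;
    }
}

// Hypothetical stand-in for one reported metric value.
class SketchDataPoint {
    final String name;
    final Object value;

    SketchDataPoint(String name, Object value) {
        this.name = name;
        this.value = value;
    }
}

// Hypothetical consumer: collects formatted lines instead of writing to a real log.
class SketchLoggingConsumer {
    final List<String> lines = new ArrayList<>();

    // Receives the data points one task reported, plus where they came from.
    void handleDataPoints(SketchTaskInfo task, Collection<SketchDataPoint> points) {
        for (SketchDataPoint p : points) {
            lines.add(task.timestamp + " " + task.workerHost + ":" + task.workerPort
                    + " " + task.taskId + ":" + task.componentId
                    + " " + p.name + "=" + p.value);
        }
    }
}

class ConsumerDemo {
    public static void main(String[] args) {
        SketchLoggingConsumer consumer = new SketchLoggingConsumer();
        SketchTaskInfo task = new SketchTaskInfo("host1", 6700, "exclaim1", 4, 1400000000L, 5);
        consumer.handleDataPoints(task, Arrays.asList(
                new SketchDataPoint("execute_count", 12L),
                new SketchDataPoint("word_length", 5.0)));
        for (String line : consumer.lines) {
            System.out.println(line);
        }
    }
}
```

A consumer that reports to an external system would follow the same outline, replacing the line formatting with a call to that system's client.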

Example Usage

To demonstrate how to use the new metrics framework, I will walk through some changes I made to the ExclamationTopology included in storm-starter. These changes will allow us to collect some metrics including:

- A simple count of how many times the execute() method was called per time period (5 sec in this example).

- A count of how many times an individual word was encountered per time period (1 minute in this example).

- The mean length of all words encountered per time period (1 minute in this example).

Adding Metrics to the ExclamationBolt

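Add three new member variables to ExclamationBolt, one per metric: a CountMetric for the execute() count, a MultiCountMetric for the per-word counts, and a ReducedMetric (backed by a MeanReducer) for the mean word length. Notice that they are all declared transient. This is needed because none of these metrics are Serializable, and all non-transient variables in Storm bolts and spouts must be Serializable.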

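The reason for transient can be seen with plain Java serialization, independent of Storm. The sketch below uses hypothetical stand-ins (SketchBolt and SketchNonSerializableMetric are invented for this illustration): the transient field is skipped when the object is serialized, comes back as null, and is re-created in a method that plays the role of prepare().

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;

// Stand-in for a metric object that does not implement Serializable.
class SketchNonSerializableMetric {
    long value = 0;
}

// Stand-in for a bolt: Serializable itself, with a transient metric field.
class SketchBolt implements Serializable {
    private static final long serialVersionUID = 1L;

    String suffix = "!!!";                          // ordinary state, serialized as usual
    transient SketchNonSerializableMetric countMetric; // skipped by serialization

    // Plays the role of prepare(): runs after the object has been deserialized.
    void prepare() {
        countMetric = new SketchNonSerializableMetric();
    }
}

class TransientDemo {
    // Serialize the bolt to bytes and read it back, as if shipping it to a worker.
    static SketchBolt roundTrip(SketchBolt bolt) throws IOException, ClassNotFoundException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(bolt);
        }
        try (ObjectInputStream in =
                 new ObjectInputStream(new ByteArrayInputStream(bytes.toByteArray()))) {
            return (SketchBolt) in.readObject();
        }
    }

    public static void main(String[] args) throws Exception {
        SketchBolt copy = roundTrip(new SketchBolt());
        System.out.println(copy.suffix);              // prints !!!
        System.out.println(copy.countMetric == null); // prints true, the field was skipped
        copy.prepare();
        System.out.println(copy.countMetric != null); // prints true, re-created after the trip
    }
}
```

Had countMetric held an object and not been marked transient, writeObject would have failed with a NotSerializableException, which is why the metric fields are both transient and initialized late.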

Initialize and register these metrics in the bolt’s prepare method. Metrics can only be registered in the prepare method of bolts or the open method of spouts; otherwise an exception is thrown. The registerMetric method takes three arguments: 1) metric name, 2) metric object, and 3) time bucket size in seconds. The time bucket size controls how often the metrics are sent to the Metrics Consumer: here, 5 seconds for the execute() count and 60 seconds for the other two.

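The effect of the time bucket size is easiest to see with a simulated clock. The following sketch is a hypothetical registry, not Storm's TopologyContext: SketchMetricRegistry, its simulate method, and the metric names execute_count, word_count, and word_length are all assumptions made for illustration. It keeps the three-argument shape described above and counts how often each metric would be reported over one minute.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical registry that mimics how the bucket size controls reporting frequency.
class SketchMetricRegistry {
    private final Map<String, Integer> bucketSecs = new LinkedHashMap<>();
    private final Map<String, Integer> flushCounts = new LinkedHashMap<>();

    // Same argument order as in the text: name, metric object, bucket size in seconds.
    // The metric object is ignored here because only the timing is being illustrated.
    void registerMetric(String name, Object metric, int timeBucketSizeInSecs) {
        if (timeBucketSizeInSecs <= 0) {
            throw new IllegalArgumentException("bucket size must be positive");
        }
        if (bucketSecs.containsKey(name)) {
            throw new IllegalArgumentException("metric already registered: " + name);
        }
        bucketSecs.put(name, timeBucketSizeInSecs);
    }

    // Advance a simulated clock one second at a time and count every bucket that ends.
    void simulate(int totalSecs) {
        for (int now = 1; now <= totalSecs; now++) {
            for (Map.Entry<String, Integer> e : bucketSecs.entrySet()) {
                if (now % e.getValue() == 0) {
                    flushCounts.merge(e.getKey(), 1, Integer::sum);
                }
            }
        }
    }

    // How many times the named metric would have been sent to the consumer.
    int flushes(String name) {
        return flushCounts.getOrDefault(name, 0);
    }
}

class BucketDemo {
    public static void main(String[] args) {
        SketchMetricRegistry registry = new SketchMetricRegistry();
        registry.registerMetric("execute_count", new Object(), 5);
        registry.registerMetric("word_count", new Object(), 60);
        registry.registerMetric("word_length", new Object(), 60);

        registry.simulate(60); // one simulated minute
        System.out.println(registry.flushes("execute_count")); // prints 12
        System.out.println(registry.flushes("word_count"));    // prints 1
        System.out.println(registry.flushes("word_length"));   // prints 1
    }
}
```

A short bucket gives finer-grained data at the cost of more traffic to the consumer, so it is worth choosing per metric, as the example topology does.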

Actually increment/update the metrics in the bolt’s execute method. In this example we are just:

- incrementing a counter every time we handle a word.

- incrementing a counter for each specific word encountered.

- updating the mean length of the words we encountered.

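The three updates listed above amount to very little bookkeeping per tuple. As a rough, Storm-free sketch (SketchWordMetrics and its fields are hypothetical stand-ins, with comments noting which metric each one plays the role of), the per-word work looks like this:

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-in for the bolt's metric bookkeeping; not Storm code.
class SketchWordMetrics {
    long executeCount = 0;                                 // role of the CountMetric
    final Map<String, Long> wordCounts = new HashMap<>();  // role of the MultiCountMetric
    private double lengthSum = 0.0;                        // role of the MeanReducer state
    private long lengthSamples = 0;

    // What the bolt would do once per tuple, given the word it carries.
    void update(String word) {
        executeCount++;                         // one more call to execute()
        wordCounts.merge(word, 1L, Long::sum);  // one more sighting of this word
        lengthSum += word.length();             // feed the running mean
        lengthSamples++;
    }

    // Mean word length so far; 0.0 when no words have been seen (sketch choice).
    double meanLength() {
        return lengthSamples == 0 ? 0.0 : lengthSum / lengthSamples;
    }
}

class UpdateDemo {
    public static void main(String[] args) {
        SketchWordMetrics metrics = new SketchWordMetrics();
        for (String word : new String[] {"nathan", "mike", "nathan", "mike"}) {
            metrics.update(word);
        }
        System.out.println(metrics.executeCount);             // prints 4
        System.out.println(metrics.wordCounts.get("nathan")); // prints 2
        System.out.println(metrics.meanLength());             // prints 5.0
    }
}
```

The per-word map is what makes MultiCountMetric convenient: the set of words does not need to be known in advance, because a counter appears the first time a word is seen.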

Collecting/Reporting Metrics

Lastly, we need to enable a Metrics Consumer in order to collect and process these metrics. The Metrics Consumer is meant to be the interface between the Storm metrics framework and some external system (such as statsd, Riemann, etc.). In this example, we are just going to log the metrics using Storm’s built-in LoggingMetricsConsumer. This is accomplished by registering the Metrics Consumer when defining the Storm topology. In this example, we are registering the Metrics Consumer with a parallelism hint of 2.

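The line to add when defining the topology is a single call to registerMetricsConsumer on the topology’s Config object, passing LoggingMetricsConsumer.class as the consumer and 2 as the parallelism hint.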

In the full topology definition, this call sits with the rest of the Config setup, before the topology is submitted.

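After running this topology, you should see log entries in $STORM_HOME/logs/metrics.log, one per data point, each carrying the TaskInfo metadata (timestamp, worker host and port, task ID, component ID) along with the metric name and value.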

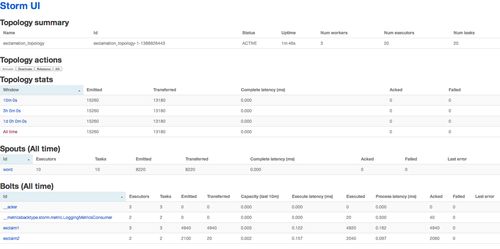

You should also see the LoggingMetricsConsumer show up as a Bolt in the Storm web UI, like this (After clicking the “Show System Stats” button at the bottom of the page):

Summary

- We instrumented the ExclamationBolt to collect some simple metrics. We accomplished this by initializing and registering the metrics in the Bolt’s prepare method and then by incrementing/updating the metrics in the bolt’s execute method.

- We had the metrics framework simply log all the metrics that were gathered using the built-in LoggingMetricsConsumer.

The full code is here as well as posted below. A diff between the original ExclamationTopology and mine is here.

In a future post I hope to present a statsd Metrics Consumer that I am working on, to allow for easy collection of metrics in statsd and then visualization in Graphite.

–@jason_trost
