Linux high memory mapping, part 1

References: Understanding the Linux Kernel (book); Linux source code

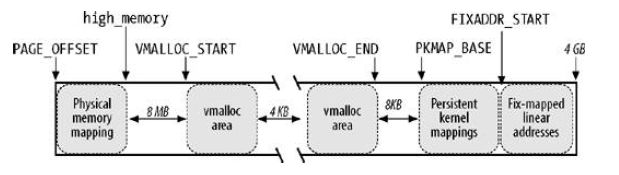

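The linear address that corresponds to the end of the directly mapped physical memory, and therefore to the start of high memory, is stored in the high_memory variable. On 32-bit x86 this boundary corresponds to 896 MB of physical memory; page frames above 896 MB are not mapped in the fourth gigabyte of the kernel linear address space.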
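To make the 896 MB boundary concrete, the arithmetic can be sketched in user-space C. This is only an illustration: it assumes the classic 3G/1G split with the kernel mapping starting at 0xC0000000, and the helper names (`phys_is_lowmem`, `lowmem_phys_to_virt`, `high_memory_assumed`) are hypothetical, not kernel functions.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Assumed values for 32-bit x86 with the default 3G/1G split. */
#define PAGE_OFFSET_ASSUMED  0xC0000000u
#define LOWMEM_BYTES_ASSUMED (896u * 1024u * 1024u)   /* 0x38000000 */

/* Hypothetical helper: true if the physical address lies in the
 * directly mapped (low memory) region. */
static bool phys_is_lowmem(uint64_t phys)
{
    return phys < LOWMEM_BYTES_ASSUMED;
}

/* Hypothetical helper: linear address of a low-memory physical address.
 * Direct mapping is just a constant offset. */
static uint32_t lowmem_phys_to_virt(uint32_t phys)
{
    return phys + PAGE_OFFSET_ASSUMED;
}

/* The value high_memory would hold under the assumptions above. */
static uint32_t high_memory_assumed(void)
{
    return PAGE_OFFSET_ASSUMED + LOWMEM_BYTES_ASSUMED;
}
```

Under these assumptions the boundary lands at linear address 0xF8000000, which leaves the top 128 MB of the address space for vmalloc, permanent mappings and fixed mappings.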

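Suppose the kernel calls __get_free_pages() to allocate a page frame in high memory. If the allocator really did allocate a page frame in high memory, the function cannot return its linear address, because no such address exists. The problem does not arise on x86-64, where the available linear address space is far larger than the amount of RAM that can be installed; on such systems the high memory zone is empty.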

So how is high memory mapped?

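The kernel can use three different mechanisms to map page frames in high memory: permanent kernel mapping, temporary kernel mapping, and noncontiguous memory allocation.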

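Establishing a permanent kernel mapping may block the current process. This happens when no free page table entry exists, that is, when no page table entry is available to serve as a "window" onto a high-memory page frame. For this reason, permanent kernel mappings cannot be used in interrupt handlers or deferrable functions. Establishing a temporary kernel mapping, by contrast, never blocks the current process; its drawback is that only a small number of temporary kernel mappings can exist at the same time.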

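Three regions are involved: the vmalloc area, the persistent kernel mappings region, and the FIX_KMAP region inside the fixed-mapping linear address space. Their mapping mechanisms are, respectively, noncontiguous memory allocation, permanent kernel mapping, and temporary kernel mapping.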

Permanent mapping

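Permanent kernel mappings let the kernel establish long-lasting mappings of high-memory page frames into the kernel address space. They use a dedicated page table in the master kernel page tables, whose address is stored in the pkmap_page_table variable; this was already covered in the earlier notes on page table and zone initialization. The number of entries in the page table is given by the LAST_PKMAP macro. As a result, the kernel can access at most 2 MB or 4 MB of high memory at once through this mechanism.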

The linear addresses mapped by this page table start at PKMAP_BASE. The pkmap_count array holds LAST_PKMAP counters, one for each entry of the pkmap_page_table page table.

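The pkmap_count array records the usage state of every entry in pkmap_page_table: each page table entry gets a counter that tells whether the entry is already being used for a mapping. The counter value falls into one of three cases:

Counter is 0: the page table entry does not map any high-memory page frame and is free to use (a free entry).

Counter is 1: the page table entry is no longer used by any kernel component, but it cannot be reused yet because its TLB entry has not been flushed since the last mapping (a cached entry).

Counter is n (n > 1): the page table entry maps a high-memory page frame, and n-1 kernel components are currently using that mapping (a mapped entry).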
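The three counter cases can be written down as a small classifier. This is a user-space sketch for illustration only; the enum and the function names (`pkmap_classify`, `pkmap_users`) are hypothetical and do not exist in the kernel, which simply compares the raw counter values.

```c
#include <assert.h>

/* Hypothetical names for the three states described above. */
enum pkmap_state {
    PKMAP_STATE_FREE,    /* counter == 0: entry can be handed out       */
    PKMAP_STATE_CACHED,  /* counter == 1: unused, awaiting a TLB flush  */
    PKMAP_STATE_MAPPED   /* counter  > 1: mapped and in use             */
};

/* Classify a pkmap_count[] value. */
static enum pkmap_state pkmap_classify(int count)
{
    if (count == 0)
        return PKMAP_STATE_FREE;
    if (count == 1)
        return PKMAP_STATE_CACHED;
    return PKMAP_STATE_MAPPED;
}

/* Number of kernel components currently using the mapping (n - 1). */
static int pkmap_users(int count)
{
    return count > 1 ? count - 1 : 0;
}
```

The off-by-one between the counter and the number of users is what lets the value 1 act as a distinct "needs flush" state.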

/* As this definition shows, the permanent mapping area is the region (up to 4 MB) just below the fixed mappings */

#define PKMAP_BASE ((FIXADDR_BOOT_START - PAGE_SIZE * (LAST_PKMAP + 1)) \

& PMD_MASK)

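To keep track of the association between high-memory page frames and the linear addresses of the permanent kernel mapping area, the kernel uses the page_address_htable hash table. The table holds one page_address_map structure for each high-memory page frame that is currently mapped. Each structure contains a pointer to the page descriptor and the linear address assigned to that page frame.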

/*

* Hash table bucket

*/

static struct page_address_slot {

struct list_head lh; /* List of page_address_maps */

spinlock_t lock; /* Protect this bucket's list */

} ____cacheline_aligned_in_smp page_address_htable[1<<PA_HASH_ORDER];

/*

* Describes one page->virtual association

*/

struct page_address_map {

struct page *page;

void *virtual;

struct list_head list;

};
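The page-to-address bookkeeping can be mimicked in user space with a tiny chained hash table. This is a simplified sketch, not the kernel code: the names (`hm_map`, `hm_slot`, `hm_set_page_address`, `hm_page_address`) are hypothetical, the hash is a plain shift-and-mask rather than the kernel's hash function, singly linked lists replace list_head, there is no locking, and pool entries are not recycled.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define HM_HASH_ORDER 7
#define HM_BUCKETS    (1u << HM_HASH_ORDER)
#define HM_POOL_SIZE  16

/* One page -> virtual association (compare struct page_address_map). */
struct hm_map {
    const void *page;
    void *virt;
    struct hm_map *next;
};

static struct hm_map *hm_table[HM_BUCKETS];  /* bucket heads          */
static struct hm_map hm_pool[HM_POOL_SIZE];  /* preallocated entries  */
static size_t hm_pool_used;

/* Stand-ins for two page descriptors; only their addresses matter. */
static char hm_page_a, hm_page_b;

/* Assumed hash: low bits of the descriptor address. */
static unsigned hm_slot(const void *page)
{
    return (unsigned)(((uintptr_t)page >> 4) & (HM_BUCKETS - 1));
}

/* virt != NULL adds an association, virt == NULL removes it.
 * Returns 0 on success, -1 if the pool is empty or nothing was found. */
static int hm_set_page_address(const void *page, void *virt)
{
    unsigned s = hm_slot(page);

    if (virt) {
        if (hm_pool_used == HM_POOL_SIZE)
            return -1;
        struct hm_map *m = &hm_pool[hm_pool_used++];
        m->page = page;
        m->virt = virt;
        m->next = hm_table[s];
        hm_table[s] = m;
        return 0;
    }
    for (struct hm_map **pp = &hm_table[s]; *pp; pp = &(*pp)->next) {
        if ((*pp)->page == page) {
            *pp = (*pp)->next;   /* unlink; entry is not returned to the pool */
            return 0;
        }
    }
    return -1;
}

/* Look up the linear address of a page, or NULL if it is not mapped. */
static void *hm_page_address(const void *page)
{
    for (struct hm_map *m = hm_table[hm_slot(page)]; m; m = m->next)
        if (m->page == page)
            return m->virt;
    return NULL;
}
```

A NULL result from the lookup is exactly the signal that tells the mapping code a new linear address has to be assigned.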

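A typical sequence is to obtain a page descriptor in the high memory zone with alloc_page(__GFP_HIGHMEM) and then call kmap(struct page *page) to map it into the permanent kernel mapping area. Note that when the permanent kernel mapping area has no free page table entry, the requesting process is blocked, so permanent kernel mappings must not be requested from interrupt handlers or deferrable functions.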

/**

* page_address - get the mapped virtual address of a page

* @page: &struct page to get the virtual address of

*

* Returns the page's virtual address.

*/

void *page_address(const struct page *page)

{

unsigned long flags;

void *ret;

struct page_address_slot *pas;

if (!PageHighMem(page)) /* the page frame is not in high memory */

/* a linear address always exists: compute the page frame index, convert it to a physical address, then derive the linear address from that physical address */

return lowmem_page_address(page);

/* get the bucket (pas) from the page_address_htable hash table */

pas = page_slot(page);

ret = NULL;

spin_lock_irqsave(&pas->lock, flags);

if (!list_empty(&pas->lh)) {

/* the bucket's list is not empty; it holds page_address_map structures */

struct page_address_map *pam;

list_for_each_entry(pam, &pas->lh, list) {

if (pam->page == page) {

ret = pam->virtual; /* return the linear address */

goto done;

}

}

}

done:

spin_unlock_irqrestore(&pas->lock, flags);

return ret;

}

void *kmap(struct page *page)

{

might_sleep();

if (!PageHighMem(page)) /* the page frame belongs to low memory */

return page_address(page); /* return the page frame's virtual address */

return kmap_high(page);

}

/**

* kmap_high - map a highmem page into memory

* @page: &struct page to map

*

* Returns the page's virtual memory address.

*

* We cannot call this from interrupts, as it may block.

*/

void *kmap_high(struct page *page)

{

unsigned long vaddr;

/*

* For highmem pages, we can't trust "virtual" until

* after we have the lock.

*/

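lock_kmap(); /* take the spinlock to prevent concurrent access on multiprocessor systems */

/* try to get the page's virtual address first; another process may already have mapped this page frame into the permanent kernel mapping area */

vaddr = (unsigned long)page_address(page);

/* if no virtual address exists, call map_new_virtual() to assign one to the page frame and establish the mapping */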

if (!vaddr)

vaddr = map_new_virtual(page);

pkmap_count[PKMAP_NR(vaddr)]++; /* increment the counter of the corresponding page table entry; PKMAP_NR(virt) is defined as ((virt - PKMAP_BASE) >> PAGE_SHIFT) */

BUG_ON(pkmap_count[PKMAP_NR(vaddr)] < 2);

unlock_kmap();

return (void*) vaddr;

}

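map_new_virtual() finds a free entry in the page table of the permanent kernel mapping area and uses it to map the page frame; in other words, it assigns a linear address to the page frame.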

static inline unsigned long map_new_virtual(struct page *page)

{

unsigned long vaddr;

int count;

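/* LAST_PKMAP is the number of page frames the permanent mapping area can map: 512 with PAE enabled, 1024 with PAE disabled,

so through kmap the kernel can map at most 2 MB or 4 MB of high memory at once */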

start:

count = LAST_PKMAP;

/* Find an empty entry */

for (;;) {

/* last_pkmap_nr records where the previous scan of pkmap_count found a free entry, and the scan resumes from there. If no entry with count 0 is found before the end of the array, ANDing with LAST_PKMAP_MASK wraps last_pkmap_nr back to 0 and a second pass starts, because flush_all_zero_pkmaps() may free entries whose mappings were released earlier */

last_pkmap_nr = (last_pkmap_nr + 1) & LAST_PKMAP_MASK;

if (!last_pkmap_nr) { /* last_pkmap_nr wrapped to 0: the first pass found no entry with count 0 */

flush_all_zero_pkmaps();

count = LAST_PKMAP;

}

if (!pkmap_count[last_pkmap_nr]) /* found an entry with count 0, i.e. a free one */

break; /* Found a usable entry */

if (--count)

continue;

/*

* Sleep for somebody else to unmap their entries

*/

/* no entry with count 0 or 1 is left in pkmap_count, i.e. every entry is in use: declare a wait queue entry, add the current process to the wait queue and block it until another process releases a mapping in the KMAP area */

{

DECLARE_WAITQUEUE(wait, current);

__set_current_state(TASK_UNINTERRUPTIBLE);

add_wait_queue(&pkmap_map_wait, &wait); /* wait to be woken, e.g. when kunmap frees a linear address */

unlock_kmap();

schedule();

remove_wait_queue(&pkmap_map_wait, &wait);

lock_kmap();

/* Somebody else might have mapped it while we slept */

if (page_address(page)) /* if so, return the existing virtual address directly */

return (unsigned long)page_address(page);

/* Re-start */

goto start;

}

}

vaddr = PKMAP_ADDR(last_pkmap_nr); /* a free entry was found; take its linear address */

set_pte_at(&init_mm, vaddr, &(pkmap_page_table[last_pkmap_nr]), mk_pte(page, kmap_prot)); /* point the pkmap_page_table entry at the requested page frame, completing the mapping */

/* set pkmap_count[last_pkmap_nr] to 1; on its own 1 means "cached, not usable", but the caller kmap_high() immediately increments it (pkmap_count[PKMAP_NR(vaddr)]++), so the entry ends up marked as mapped */

pkmap_count[last_pkmap_nr] = 1;

set_page_address(page, (void *)vaddr); /* the mapping is complete; record the page and its linear address in the page_address_htable hash table */

return vaddr;

}

#define PKMAP_ADDR(nr) (PKMAP_BASE + ((nr) << PAGE_SHIFT))

#define PKMAP_BASE ((FIXADDR_BOOT_START - PAGE_SIZE * (LAST_PKMAP + 1)) \

& PMD_MASK)

The meaning of these two macros is illustrated in the figure above.
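The address arithmetic behind PKMAP_BASE, PKMAP_ADDR and PKMAP_NR can be checked with a few lines of user-space C. All constants below are assumptions chosen for illustration (4 KB pages, 1024 entries as in the non-PAE case, a 4 MB PMD span, and a made-up FIXADDR_BOOT_START of 0xFFF00000); the real values depend on the kernel version and configuration, and the `demo_` helpers are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define DEMO_PAGE_SHIFT 12u
#define DEMO_PAGE_SIZE  (1u << DEMO_PAGE_SHIFT)
#define DEMO_LAST_PKMAP 1024u           /* assumed: PAE disabled          */
#define DEMO_PMD_MASK   0xFFC00000u     /* assumed: 4 MB alignment        */

/* Same formula as the PKMAP_BASE macro, with the fixmap start passed in. */
static uint32_t demo_pkmap_base(uint32_t fixaddr_boot_start)
{
    return (fixaddr_boot_start - DEMO_PAGE_SIZE * (DEMO_LAST_PKMAP + 1))
           & DEMO_PMD_MASK;
}

/* Entry index -> linear address (compare PKMAP_ADDR). */
static uint32_t demo_pkmap_addr(uint32_t base, uint32_t nr)
{
    return base + (nr << DEMO_PAGE_SHIFT);
}

/* Linear address -> entry index (compare PKMAP_NR). */
static uint32_t demo_pkmap_nr(uint32_t base, uint32_t virt)
{
    return (virt - base) >> DEMO_PAGE_SHIFT;
}

/* Size of the whole window in bytes. */
static uint32_t demo_pkmap_window_bytes(void)
{
    return DEMO_LAST_PKMAP << DEMO_PAGE_SHIFT;
}
```

With the made-up fixmap start, the base rounds down to 0xFF800000, and entry 3 corresponds to linear address 0xFF803000. The two conversions are inverses of each other for page-aligned addresses inside the window.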

flush_all_zero_pkmaps() resets every entry whose counter is 1 to 0, tears down the mappings that are no longer in use, and flushes the TLB.

static void flush_all_zero_pkmaps(void)

{

int i;

int need_flush = 0;

flush_cache_kmaps();

for (i = 0; i < LAST_PKMAP; i++) {

struct page *page;

/*

* zero means we don't have anything to do,

* >1 means that it is still in use. Only

* a count of 1 means that it is free but

* needs to be unmapped

*/

/* reset the counter of entries whose counter is 1 to 0 */

if (pkmap_count[i] != 1)

continue;

pkmap_count[i] = 0;

/* sanity check */

BUG_ON(pte_none(pkmap_page_table[i]));

/*

* Don't need an atomic fetch-and-clear op here;

* no-one has the page mapped, and cannot get at

* its virtual address (and hence PTE) without first

* getting the kmap_lock (which is held here).

* So no dangers, even with speculative execution.

*/

/* tear down the previous mapping and remove the page from the page_address_htable hash table */

page = pte_page(pkmap_page_table[i]);

pte_clear(&init_mm, (unsigned long)page_address(page),

&pkmap_page_table[i]);

set_page_address(page, NULL);

need_flush = 1;

}

if (need_flush) /* flush the TLB */

flush_tlb_kernel_range(PKMAP_ADDR(0), PKMAP_ADDR(LAST_PKMAP));

}
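The interplay between the circular scan and the flush can be reproduced in a small user-space model. This is a sketch under simplifying assumptions: an 8-entry array stands in for pkmap_count, a counter stands in for the TLB flush, and the point where the kernel would sleep is reported as -1. The `scan_` names are hypothetical.

```c
#include <assert.h>

#define SCAN_SLOTS 8                    /* assumed small size for the demo */
#define SCAN_MASK  (SCAN_SLOTS - 1)

static int scan_count[SCAN_SLOTS];      /* models pkmap_count[]            */
static int scan_last_nr;                /* models last_pkmap_nr            */
static int scan_flushes;                /* how often a "TLB flush" ran     */

/* Set every counter to the same value (test helper). */
static void scan_fill(int value)
{
    for (int i = 0; i < SCAN_SLOTS; i++)
        scan_count[i] = value;
}

/* Models flush_all_zero_pkmaps(): cached entries (1) become free (0). */
static void scan_flush_all_zero(void)
{
    int need_flush = 0;

    for (int i = 0; i < SCAN_SLOTS; i++) {
        if (scan_count[i] != 1)
            continue;
        scan_count[i] = 0;
        need_flush = 1;
    }
    if (need_flush)
        scan_flushes++;                 /* stands in for the TLB flush     */
}

/* Models the search loop of map_new_virtual().
 * Returns the chosen slot, or -1 where the kernel would go to sleep. */
static int scan_find_slot(void)
{
    int count = SCAN_SLOTS;

    for (;;) {
        scan_last_nr = (scan_last_nr + 1) & SCAN_MASK;
        if (!scan_last_nr) {            /* wrapped: reclaim cached entries */
            scan_flush_all_zero();
            count = SCAN_SLOTS;
        }
        if (!scan_count[scan_last_nr])
            break;                      /* found a free entry              */
        if (--count)
            continue;
        return -1;                      /* everything is in use            */
    }
    scan_count[scan_last_nr] = 1;       /* the caller increments it to 2   */
    return scan_last_nr;
}
```

The model shows why a cached entry is only reclaimed when the scan wraps around: a single flush then covers every entry that was released since the previous wrap.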

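set_page_address() associates the page with the linear address of its page table entry: it stores the address in the virtual field of a page_address_map entry and adds that entry to the page_address_htable hash table. The hash table tracks every page frame currently mapped in the permanent kernel mapping area; each entry records the address of the page descriptor and the linear address that maps the page frame.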

/**

* set_page_address - set a page's virtual address

* @page: &struct page to set

* @virtual: virtual address to use

*/

void set_page_address(struct page *page, void *virtual)

{

unsigned long flags;

struct page_address_slot *pas;

struct page_address_map *pam;

BUG_ON(!PageHighMem(page));

pas = page_slot(page);

if (virtual) { /* Add */

BUG_ON(list_empty(&page_address_pool));

spin_lock_irqsave(&pool_lock, flags);

pam = list_entry(page_address_pool.next,

struct page_address_map, list); /* take a free page_address_map from page_address_pool */

list_del(&pam->list); /* remove that node from page_address_pool */

spin_unlock_irqrestore(&pool_lock, flags);

pam->page = page;

pam->virtual = virtual;

spin_lock_irqsave(&pas->lock, flags);

list_add_tail(&pam->list, &pas->lh); /* add pam to the hash table */

spin_unlock_irqrestore(&pas->lock, flags);

} else { /* Remove: delete the entry from the hash table, the reverse of the steps above */

spin_lock_irqsave(&pas->lock, flags);

list_for_each_entry(pam, &pas->lh, list) {

if (pam->page == page) {

list_del(&pam->list);

spin_unlock_irqrestore(&pas->lock, flags);

spin_lock_irqsave(&pool_lock, flags);

list_add_tail(&pam->list, &page_address_pool);

spin_unlock_irqrestore(&pool_lock, flags);

goto done;

}

}

spin_unlock_irqrestore(&pas->lock, flags);

}

done:

return;

}

kunmap() tears down a permanent kernel mapping previously established by kmap(). If the page really is in high memory, it calls kunmap_high().

void kunmap(struct page *page)

{

if (in_interrupt())

BUG();

if (!PageHighMem(page))

return;

kunmap_high(page);

}

/**

* kunmap_high - unmap a highmem page into memory

* @page: &struct page to unmap

*

* If ARCH_NEEDS_KMAP_HIGH_GET is not defined then this may be called

* only from user context.

*/

void kunmap_high(struct page *page)

{

unsigned long vaddr;

unsigned long nr;

unsigned long flags;

int need_wakeup;

lock_kmap_any(flags);

vaddr = (unsigned long)page_address(page);

BUG_ON(!vaddr);

nr = PKMAP_NR(vaddr);

/*

* A count must never go down to zero

* without a TLB flush!

*/

need_wakeup = 0;

switch (--pkmap_count[nr]) {

case 0:

BUG();

case 1:

/*

* Avoid an unnecessary wake_up() function call.

* The common case is pkmap_count[] == 1, but

* no waiters.

* The tasks queued in the wait-queue are guarded

* by both the lock in the wait-queue-head and by

* the kmap_lock. As the kmap_lock is held here,

* no need for the wait-queue-head's lock. Simply

* test if the queue is empty.

*/ /* check whether the pkmap_map_wait wait queue has any waiters */

need_wakeup = waitqueue_active(&pkmap_map_wait);

}

unlock_kmap_any(flags);

/* do wake-up, if needed, race-free outside of the spin lock */

if (need_wakeup)

wake_up(&pkmap_map_wait); /* wake up the processes that map_new_virtual() put on the wait queue */

}
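The life cycle of a single counter across kmap and kunmap calls can be traced with a minimal user-space model. It is a sketch only: one integer stands in for one pkmap_count entry, the `life_` functions are hypothetical, and waking sleepers is reduced to a boolean return value.

```c
#include <assert.h>
#include <stdbool.h>

static int life_count;   /* models one pkmap_count[] entry, starts free */

/* Models kmap_high() on this entry: a free entry is first set to 1
 * (as map_new_virtual() does), then the counter is incremented. */
static void life_kmap(void)
{
    if (life_count == 0)
        life_count = 1;
    life_count++;
}

/* Models kunmap_high(): decrement, and report whether sleepers would be
 * woken. The kernel treats a drop to 0 as a bug, so it is asserted here. */
static bool life_kunmap(bool have_waiters)
{
    assert(life_count >= 2);
    life_count--;
    return life_count == 1 && have_waiters;
}

/* Models the effect of flush_all_zero_pkmaps() on this entry. */
static void life_flush(void)
{
    if (life_count == 1)
        life_count = 0;
}
```

The counter therefore never goes from 2 straight to 0; it always passes through 1, and only the flush step turns a cached entry back into a free one.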