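GlusterFS Study Notes, Part 3: Design and Implementation of an Audit Operation xlator for GlusterFS

The previous article covered the basic concepts of GlusterFS and how to set up the environment. This one shows how to add functionality to GlusterFS by writing a translator (xlator).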

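1. Requirements Analysis

We need an xlator that lets the server audit the unlink (file deletion) and rmdir (directory deletion) operations issued by clients. Each operation is recorded in the server brick's log, so the server can trace which client deleted a file, which helps keep the storage secure.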

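2. Design

An xlator that handles unlink and rmdir involves two pieces of work: writing the audit xlator code (which recognizes unlink and rmdir operations) and writing the volfiles. The overall workflow is: write audit.c, build it into audit.so, copy the library to the client and the servers, add the translator to the volfiles, restart the server processes, and mount the volume on the client.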
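To make the idea of "recognizing" an operation more concrete, here is a minimal, self-contained sketch in plain C. It does not use the GlusterFS API: `layer_t`, `audit_unlink_sketch`, `storage_unlink_sketch`, `run_unlink_sketch` and the trace buffer are hypothetical names invented for illustration. It only mimics the shape of the mechanism, where a translator passes a request down to its child and sees the result on the way back up. The real mechanism uses callbacks rather than a plain function return.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical stand-in for a translator: a name, a child, one operation. */
typedef struct layer layer_t;
struct layer {
        const char *name;
        layer_t    *child;
        int       (*unlink_op) (layer_t *self, const char *path,
                                char *trace, size_t len);
};

/* Append one event to the trace; silently truncates if the buffer is full. */
static void
trace_add (char *trace, size_t len, const char *event)
{
        size_t used = strlen (trace);

        if (used < len)
                snprintf (trace + used, len - used, "%s;", event);
}

/* Bottom layer: stands in for the storage translator doing the real work. */
static int
storage_unlink_sketch (layer_t *self, const char *path, char *trace, size_t len)
{
        (void) self;
        (void) path;
        trace_add (trace, len, "storage:unlink");
        return 0; /* pretend the file was removed */
}

/* Audit layer: note the request, call the child, note the result. */
static int
audit_unlink_sketch (layer_t *self, const char *path, char *trace, size_t len)
{
        int ret;

        assert (self->child != NULL);
        trace_add (trace, len, "audit:request");
        ret = self->child->unlink_op (self->child, path, trace, len);
        trace_add (trace, len, ret == 0 ? "audit:ok" : "audit:failed");
        return ret; /* pass the child's result up unchanged */
}

/* Build a two-layer stack and send one unlink through it. */
int
run_unlink_sketch (const char *path, char *trace, size_t len)
{
        layer_t storage = { "storage", NULL, storage_unlink_sketch };
        layer_t audit   = { "audit", &storage, audit_unlink_sketch };

        assert (trace != NULL && len > 0);
        trace[0] = '\0';
        return audit.unlink_op (&audit, path, trace, len);
}
```

Calling `run_unlink_sketch ("/dd", trace, sizeof trace)` records the audit layer's request, the storage layer's work, and the audit layer's view of the result, in that order. The audit layer changes nothing about the operation itself; it only observes it.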

===========================================================================

2.1 Writing audit.c

First create a directory named "addxlator_sample" under the working directory, then create audit.c in it:

audit.c:

/*
 * This translator audits unlink and rmdir operations issued by clients.
 * Each operation can be logged on the server, including the client id,
 * file id and operation, which helps keep the system secure.
 */

#ifndef _CONFIG_H
#define _CONFIG_H
#include "config.h"
#endif

#include <errno.h>

#include "glusterfs.h"
#include "xlator.h"
#include "defaults.h"
#include "logging.h"

/* unlink callback: runs after the child translator has handled the unlink */
int
audit_unlink_cbk (call_frame_t *frame, void *cookie, xlator_t *this,
                  int32_t op_ret, int32_t op_errno, struct iatt *preparent,
                  struct iatt *postparent, dict_t *xdata)
{
        /* informational message, so log at INFO rather than ERROR */
        gf_log (this->name, GF_LOG_INFO, "in audit translator unlink call back");

        /* client info: frame->root->client->client_uid */
        if (frame != NULL && frame->root != NULL &&
            frame->root->client != NULL &&
            frame->root->client->client_uid != NULL) {
                gf_log (this->name, GF_LOG_INFO,
                        "in audit translator unlink call back client = %s.opt=unlink",
                        frame->root->client->client_uid);
        }

        /* hand the result back to the parent translator unchanged */
        STACK_UNWIND_STRICT (unlink, frame, op_ret, op_errno, preparent,
                             postparent, xdata);
        return 0;
}

/* rmdir callback: runs after the child translator has handled the rmdir */
int
audit_rmdir_cbk (call_frame_t *frame, void *cookie, xlator_t *this,
                 int32_t op_ret, int32_t op_errno, struct iatt *preparent,
                 struct iatt *postparent, dict_t *xdata)
{
        /* informational message, so log at INFO rather than ERROR */
        gf_log (this->name, GF_LOG_INFO, "in audit translator rmdir call back");

        if (frame != NULL && frame->root != NULL &&
            frame->root->client != NULL &&
            frame->root->client->client_uid != NULL) {
                gf_log (this->name, GF_LOG_INFO,
                        "in audit translator rmdir call back. client = %s.operation = rmdir",
                        frame->root->client->client_uid);
        }

        /* hand the result back to the parent translator unchanged */
        STACK_UNWIND_STRICT (rmdir, frame, op_ret, op_errno, preparent,
                             postparent, xdata);
        return 0;
}

/* unlink entry point: log the path, then pass the request to the child */
static int
audit_unlink (call_frame_t *frame, xlator_t *this, loc_t *loc, int xflag,
              dict_t *xdata)
{
        gf_log (this->name, GF_LOG_INFO, "in audit unlink path=%s", loc->path);

        STACK_WIND (frame, audit_unlink_cbk, FIRST_CHILD (this),
                    FIRST_CHILD (this)->fops->unlink, loc, xflag, xdata);
        return 0;
}

/* rmdir entry point: log the path, then pass the request to the child */
static int
audit_rmdir (call_frame_t *frame, xlator_t *this, loc_t *loc, int flags,
             dict_t *xdata)
{
        gf_log (this->name, GF_LOG_INFO, "in audit translator rmdir.path= %s",
                loc->path);

        STACK_WIND (frame, audit_rmdir_cbk, FIRST_CHILD (this),
                    FIRST_CHILD (this)->fops->rmdir, loc, flags, xdata);
        return 0;
}

/* no options to reconfigure */
int
reconfigure (xlator_t *this, dict_t *options)
{
        return 0;
}

int
init (xlator_t *this)
{
        /* check "this" before dereferencing it for logging */
        if (!this)
                return -1;

        if (!this->children) {
                gf_log (this->name, GF_LOG_ERROR,
                        "audit translator requires at least one subvolume");
                return -1;
        }

        if (!this->parents) {
                gf_log (this->name, GF_LOG_WARNING,
                        "dangling volume. check volfile");
        }

        /* this translator keeps no private state */
        this->private = NULL;

        gf_log (this->name, GF_LOG_INFO, "audit translator loaded");
        return 0;
}

void
fini (xlator_t *this)
{
        void *conf = NULL;

        if (!this)
                return;

        /* init() stores no private data, so this normally returns here */
        conf = this->private;
        if (!conf)
                return;

        this->private = NULL;
        GF_FREE (conf);

        gf_log (this->name, GF_LOG_INFO, "audit translator unloaded");
}

/* forward every event to the default handler and return its result */
int
notify (xlator_t *this, int32_t event, void *data, ...)
{
        return default_notify (this, event, data);
}

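/* only unlink and rmdir are intercepted; other fops use the defaults */
struct xlator_fops fops = {
        .unlink = audit_unlink, /* audit unlink operations */
        .rmdir  = audit_rmdir,  /* audit rmdir operations */
};

struct xlator_cbks cbks = {
};

/* no options; the NULL key terminates the table */
struct volume_options options[] = {
        { .key = {NULL} },
};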
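One thing the callbacks above do not do is distinguish a successful delete from a failed one: they log the client whatever `op_ret` is. For an audit trail that distinction is probably worth recording. The following is a minimal, self-contained sketch of a formatting helper. `audit_format_record` and its output format are hypothetical, not part of GlusterFS; the only assumption taken from the callbacks is that a negative `op_ret` means failure and `op_errno` carries the reason.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/*
 * Hypothetical helper (not a GlusterFS function): build one audit line from
 * values a callback has at hand. A NULL client or path is written as "-".
 * Returns 0 on success, -1 if the buffer is too small.
 */
int
audit_format_record (char *buf, size_t len, const char *client_uid,
                     const char *op, const char *path,
                     int op_ret, int op_errno)
{
        int n;

        assert (buf != NULL && op != NULL);

        if (op_ret < 0) {
                /* failed operation: keep the errno so the reason is visible */
                n = snprintf (buf, len,
                              "audit client=%s op=%s path=%s result=failed errno=%d (%s)",
                              client_uid ? client_uid : "-", op,
                              path ? path : "-", op_errno,
                              strerror (op_errno));
        } else {
                n = snprintf (buf, len,
                              "audit client=%s op=%s path=%s result=ok",
                              client_uid ? client_uid : "-", op,
                              path ? path : "-");
        }

        /* snprintf reports the length it wanted; treat truncation as an error */
        return (n < 0 || (size_t) n >= len) ? -1 : 0;
}
```

Note that the callbacks do not receive the path, only the entry points do. A real translator would have to carry it from the request to the callback, for instance through `frame->local`; that part is left out of this sketch.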

Makefile:

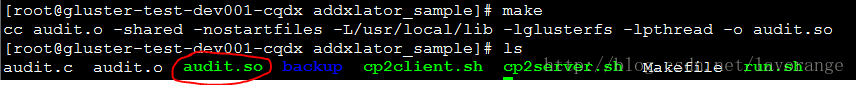

# add the audit (client file operation) translator to the glusterfs volume file
# email:[email protected]
# blog:http://blog.csdn.net/lavorange

TARGET = audit.so
OBJECTS = audit.o

GLUSTERFS_SRC = /root/gluster3.6
GLUSTERFS_LIB = /usr/local/lib
HOST_OS = GF_LINUX_HOST_OS

CFLAGS = -fPIC -Wall -O0 -g \
	-DHAVE_CONFIG_H -D_FILE_OFFSET_BITS=64 -D_GNU_SOURCE \
	-D$(HOST_OS) -I$(GLUSTERFS_SRC) -I$(GLUSTERFS_SRC)/libglusterfs/src -I$(GLUSTERFS_SRC)/contrib/uuid

LDFLAGS = -shared -nostartfiles -L$(GLUSTERFS_LIB) -lglusterfs -lpthread

$(TARGET): $(OBJECTS)
	$(CC) $(OBJECTS) $(LDFLAGS) -o $(TARGET)

clean:
	rm -rf $(TARGET) $(OBJECTS)

Running make produces audit.so.
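Before copying the library around, it can be useful to check that it exports the symbols audit.c defines. The sketch below is a small standalone checker, not a GlusterFS tool: `count_missing_symbols` is a hypothetical name, and the symbol list is simply the set of global names from audit.c (`init`, `fini`, `fops`, `cbks`, `options`). Opening the library also requires that libglusterfs can be found by the dynamic loader, and on older glibc versions the program may need to be linked with -ldl.

```c
#include <dlfcn.h>
#include <stdio.h>

/*
 * Count how many of the expected symbols are missing from a shared object.
 * Returns -1 if the library cannot be opened at all.
 */
int
count_missing_symbols (const char *so_path)
{
        /* global names defined in audit.c */
        static const char *required[] = {
                "init", "fini", "fops", "cbks", "options"
        };
        size_t i;
        int    missing = 0;
        void  *handle = dlopen (so_path, RTLD_LAZY);

        if (handle == NULL) {
                fprintf (stderr, "dlopen failed: %s\n", dlerror ());
                return -1;
        }

        for (i = 0; i < sizeof required / sizeof required[0]; i++) {
                if (dlsym (handle, required[i]) == NULL) {
                        fprintf (stderr, "missing symbol: %s\n", required[i]);
                        missing++;
                }
        }

        dlclose (handle);
        return missing;
}
```

A return value of 0 for `count_missing_symbols ("./audit.so")` would mean all five names were found; anything else is worth looking into before restarting the servers.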

Copy audit.so to /usr/local/lib/glusterfs/xlator/debug/ on both the client and the servers (the exact path varies between versions) so that GlusterFS loads the module at run time. Two scripts do the copying:

cp2client.sh:

#!/bin/bash
cp audit.so /usr/local/lib/glusterfs/xlator/debug

cp2server.sh:

#!/bin/bash
scp audit.so [email protected]:/usr/local/lib/glusterfs/3.6.3_iqiyi_1/xlator/debug/
scp audit.so [email protected]:/usr/local/lib/glusterfs/3.6.3_iqiyi_1/xlator/debug/

As the scripts show:

On the client, the .so is copied to /usr/local/lib/glusterfs/xlator/debug

On the servers, the .so is copied to /usr/local/lib/glusterfs/3.6.3_iqiyi_1/xlator/debug

2.3 Editing the volfiles

Client-side img.tcp-fuse.vol:

volume img-client-0

type protocol/client

option send-gids true

option transport-type tcp

option remote-subvolume /data/gluster

option remote-host 10.23.85.48

option ping-timeout 42

end-volume

volume img-client-1

type protocol/client

option send-gids true

option transport-type tcp

option remote-subvolume /data/gluster

option remote-host 10.23.85.49

option ping-timeout 42

end-volume

volume img-replicate-0

type cluster/replicate

subvolumes img-client-0 img-client-1

end-volume

volume img-dht

type cluster/distribute

subvolumes img-replicate-0

end-volume

volume img-write-behind

type performance/write-behind

subvolumes img-dht

end-volume

volume img-read-ahead

type performance/read-ahead

subvolumes img-write-behind

end-volume

volume img-io-cache

type performance/io-cache

subvolumes img-read-ahead

end-volume

volume img-quick-read

type performance/quick-read

subvolumes img-io-cache

end-volume

volume img-open-behind

type performance/open-behind

subvolumes img-quick-read

end-volume

volume img-md-cache

type performance/md-cache

subvolumes img-open-behind

end-volume

volume testvol-audit

type debug/audit

subvolumes img-md-cache

end-volume

volume testvol-trace

type debug/trace

subvolumes testvol-audit

end-volume

volume img

type debug/io-stats

option count-fop-hits off

option latency-measurement off

subvolumes testvol-trace

end-volume


Server-side img.10.23.85.48.data-gluster.vol (make the same change to the volfile on every server):

volume img-posix

type storage/posix

option volume-id dd4193c5-f059-443d-8b76-f089f1bd9d61

option directory /data/gluster

end-volume

volume img-changelog

type features/changelog

option changelog-barrier-timeout 120

option changelog-dir /data/gluster/.glusterfs/changelogs

option changelog-brick /data/gluster

subvolumes img-posix

end-volume

volume img-access-control

type features/access-control

subvolumes img-changelog

end-volume

volume img-locks

type features/locks

subvolumes img-access-control

end-volume

volume img-io-threads

type performance/io-threads

subvolumes img-locks

end-volume

volume img-barrier

type features/barrier

option barrier-timeout 120

option barrier disable

subvolumes img-io-threads

end-volume

volume img-index

type features/index

option index-base /data/gluster/.glusterfs/indices

subvolumes img-barrier

end-volume

volume img-marker

type features/marker

option quota off

option gsync-force-xtime off

option xtime off

option timestamp-file /var/lib/glusterd/vols/img/marker.tstamp

option volume-uuid dd4193c5-f059-443d-8b76-f089f1bd9d61

subvolumes img-index

end-volume

volume img-quota

type features/quota

option deem-statfs off

option timeout 0

option server-quota off

option volume-uuid img

subvolumes img-marker

end-volume

volume testvol-audit

type debug/audit

subvolumes img-quota

end-volume

volume testvol-trace

type debug/trace

subvolumes testvol-audit

end-volume

volume /data/gluster

type debug/io-stats

option count-fop-hits off

option latency-measurement off

subvolumes testvol-trace

end-volume

volume img-server

type protocol/server

option auth.addr./data/gluster.allow *

option auth.login.cb2802fa-094e-47d2-9737-8206e9705a1e.password bcf08562-9c21-462b-8852-ac968cc51dae

option auth.login./data/gluster.allow cb2802fa-094e-47d2-9737-8206e9705a1e

option transport-type tcp

subvolumes /data/gluster

end-volume
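Hand-editing volfiles makes it easy to point a `subvolumes` line at a name that does not exist, for example after renaming testvol-audit. Both volfiles above list each volume before the volume that uses it, so a quick sanity check is possible. The sketch below assumes that this ordering is required; `volfile_count_bad_refs` and the size limits are hypothetical, and it only understands the `volume` and `subvolumes` keywords shown above.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

#define MAX_VOLUMES 64   /* arbitrary limit for this sketch */
#define MAX_NAME    128  /* must stay in sync with the %127s conversions */

/* Return 1 if name is among the first count entries of seen. */
static int
name_seen (char seen[][MAX_NAME], int count, const char *name)
{
        int i;

        for (i = 0; i < count; i++)
                if (strcmp (seen[i], name) == 0)
                        return 1;
        return 0;
}

/*
 * Count "subvolumes" references to volumes not declared earlier in the text.
 * Returns -1 if the text declares more than MAX_VOLUMES volumes.
 */
int
volfile_count_bad_refs (const char *text)
{
        char        seen[MAX_VOLUMES][MAX_NAME];
        char        word[MAX_NAME];
        char        line[512];
        int         count = 0, bad = 0, used = 0;
        const char *p = text;

        assert (text != NULL);

        while (*p != '\0') {
                /* copy one line (truncated if overlong) and step past it */
                size_t      linelen = strcspn (p, "\n");
                size_t      copy = linelen < sizeof line - 1
                                   ? linelen : sizeof line - 1;
                const char *q = line;

                memcpy (line, p, copy);
                line[copy] = '\0';
                p += linelen;
                if (*p == '\n')
                        p++;

                /* first word decides what kind of line this is */
                if (sscanf (q, " %127s%n", word, &used) != 1)
                        continue; /* blank line */
                q += used;

                if (strcmp (word, "volume") == 0) {
                        if (sscanf (q, " %127s", word) == 1) {
                                if (count == MAX_VOLUMES)
                                        return -1;
                                strcpy (seen[count++], word);
                        }
                } else if (strcmp (word, "subvolumes") == 0) {
                        /* every remaining word names a child volume */
                        while (sscanf (q, " %127s%n", word, &used) == 1) {
                                if (!name_seen (seen, count, word))
                                        bad++;
                                q += used;
                        }
                }
        }
        return bad;
}
```

Reading a volfile into a string and passing it to `volfile_count_bad_refs` should return 0 for both files above; a non-zero count points at a misspelled or misplaced subvolume name.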


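2.4 Restarting the server processes

Restart the server process on every server with glusterfsd so that it loads the new .so file and parses the modified volfile.

First kill the glusterfsd process, then start the server process again (this must be done on every server).

Note: the server-side glusterfsd process starts running once the volume has been started (gluster volume start img).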

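2.5 Mounting and running on the client

Mount the volume on the client in debug mode:

glusterfsd -s 10.23.85.48 --volfile-id=img /mnt --debug

At the client's mount point /mnt, perform two delete operations, unlink and rmdir (deleting a file and a directory):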

![Figure 4](http://img.e-com-net.com/image/info5/34381f33975942de9ecd707c7b6898da.png)

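We deleted the file dd and the directory EE at the mount point, then checked the server-side log (/var/log/glusterfs/bricks/data-gluster.log).

The log shows that the audit translator captured the path of each deleted entry and the client that performed the operation, so the audit function works as intended.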
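Once the audit lines are in the brick log, pulling the client id out of them is simple string work. The sketch below relies only on the message formats written by the two callbacks in audit.c ("client = %s.opt=unlink" and "client = %s.operation = rmdir"); `audit_parse_client` is a hypothetical helper, and whatever precedes the message on the line (timestamp, log level and so on) is ignored rather than assumed.

```c
#include <assert.h>
#include <string.h>

/*
 * Extract the client id from a log line written by the audit callbacks.
 * Returns 0 and fills out on success, -1 if the line is not an audit line
 * or out is too small.
 */
int
audit_parse_client (const char *line, char *out, size_t len)
{
        /* suffixes that follow the client id in the two callback messages */
        static const char *tails[] = { ".opt=unlink", ".operation = rmdir" };
        const char *key = "client = ";
        const char *start;
        const char *end = NULL;
        size_t      i, n;

        assert (line != NULL && out != NULL);

        start = strstr (line, key);
        if (start == NULL)
                return -1;
        start += strlen (key);

        /* the client id ends where one of the known suffixes begins */
        for (i = 0; i < sizeof tails / sizeof tails[0] && end == NULL; i++)
                end = strstr (start, tails[i]);
        if (end == NULL)
                return -1;

        n = (size_t) (end - start);
        if (n >= len)
                return -1;

        memcpy (out, start, n);
        out[n] = '\0';
        return 0;
}
```

Feeding each line of data-gluster.log through this function and skipping the lines that return -1 would give a list of the clients that performed deletes.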

At this point the audit xlator is complete and working.


Author: 忆之独秀

Email: [email protected]

Please credit the source when reposting: http://blog.csdn.net/lavorange/article/details/44902541

![Figure 1](http://img.e-com-net.com/image/info5/16e1e03682d0402ea5b08229a23a5a94.png)

![Figure 2](http://img.e-com-net.com/image/info5/b51b39374bca4d6a89db120c77357a81.png)

![Figure 3](http://img.e-com-net.com/image/info5/c8d26adfb8a54eb6800e9077a37a9067.jpg)

![Figure 5](http://img.e-com-net.com/image/info5/59d9b2dbae53423a861ba31aaa5d8112.jpg)