WebSocket Official Documentation Translation: HTML5 Web Sockets: A Quantum Leap in Scalability for the Web

HTML5 Web Sockets: A Quantum Leap in Scalability for the Web

By Peter Lubbers & Frank Greco, Kaazing Corporation

(This article has also been translated into Bulgarian.)

Lately there has been a lot of buzz around HTML5 Web Sockets, which defines a full-duplex communication channel that operates through a single socket over the Web. HTML5 Web Sockets is not just another incremental enhancement to conventional HTTP communications; it represents a colossal advance, especially for real-time, event-driven web applications.

HTML5 Web Sockets provides such a dramatic improvement over the old, convoluted "hacks" used to simulate a full-duplex connection in a browser that it prompted Google's Ian Hickson, the HTML5 specification lead, to say:

"Reducing kilobytes of data to 2 bytes…and reducing latency from 150ms to 50ms is far more than marginal. In fact, these two factors alone are enough to make Web Sockets seriously interesting to Google."

Let's take a look at how HTML5 Web Sockets can offer such an incredibly dramatic reduction of unnecessary network traffic and latency by comparing it to conventional solutions.

Polling, Long-Polling, and Streaming—Headache 2.0

Normally when a browser visits a web page, an HTTP request is sent to the web server that hosts that page. The web server acknowledges this request and sends back the response. In many cases—for example, for stock prices, news reports, ticket sales, traffic patterns, medical device readings, and so on—the response could be stale by the time the browser renders the page. If you want to get the most up-to-date "real-time" information, you can constantly refresh that page manually, but that's obviously not a great solution.

Current attempts to provide real-time web applications largely revolve around polling and other server-side push technologies, the most notable of which is Comet, which delays the completion of an HTTP response to deliver messages to the client. Comet-based push is generally implemented in JavaScript and uses connection strategies such as long-polling or streaming.

With polling, the browser sends HTTP requests at regular intervals and immediately receives a response. This technique was the first attempt to deliver real-time information to the browser. It is a good solution if the exact interval of message delivery is known, because you can synchronize the client request to occur only when information is available on the server. However, real-time data is often not that predictable, so unnecessary requests are inevitable, and many connections are opened and closed needlessly in low-message-rate situations.
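The cost of unpredictable data can be illustrated with a small simulation. This is only a sketch: the function name `count_polls`, the message arrival times, and the interval are hypothetical values chosen for illustration, not measurements from the article.

```python
def count_polls(message_times, poll_interval, duration):
    """Simulate fixed-interval polling.

    Returns (useful_polls, empty_polls): how many polls found at least one
    new message and how many came back with nothing.
    """
    pending = sorted(message_times)
    next_msg = 0
    useful = empty = 0
    total_polls = int(duration / poll_interval)
    for i in range(1, total_polls + 1):
        now = i * poll_interval
        delivered = 0
        # Every message that arrived since the previous poll is picked up now.
        while next_msg < len(pending) and pending[next_msg] <= now:
            next_msg += 1
            delivered += 1
        if delivered:
            useful += 1
        else:
            empty += 1
    return useful, empty


if __name__ == "__main__":
    # Two messages in ten seconds, polled once per second (illustrative values).
    print(count_polls([2.5, 7.2], poll_interval=1, duration=10))
```

With a low message rate, most polls in this model return nothing, yet each one still pays the full cost of an HTTP request and response.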

With long-polling, the browser sends a request to the server and the server keeps the request open for a set period. If a notification is received within that period, a response containing the message is sent to the client. If a notification is not received within the set time period, the server sends a response to terminate the open request. It is important to understand, however, that when you have a high message volume, long-polling does not provide any substantial performance improvements over traditional polling. In fact, it could be worse, because the long-polling might spin out of control into an unthrottled, continuous loop of immediate polls.
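The hold-then-respond behavior can be sketched on the server side with Python's `asyncio`. This is an assumption-laden illustration rather than how any particular Comet server is built: `long_poll`, `demo`, the queue standing in for the notification source, and the timing values are all hypothetical.

```python
import asyncio


async def long_poll(queue, hold_seconds):
    """Hold one request open until a message arrives or the hold period ends."""
    try:
        # Wait for a notification, but only for the set period.
        return await asyncio.wait_for(queue.get(), timeout=hold_seconds)
    except asyncio.TimeoutError:
        # No notification in time: answer with an empty response so the
        # client can issue its next request.
        return None


async def demo():
    queue = asyncio.Queue()
    # First request: nothing arrives, so it is terminated empty.
    first = await long_poll(queue, hold_seconds=0.05)
    # Second request: a message arrives while the request is being held.
    asyncio.get_running_loop().call_later(0.01, queue.put_nowait, "stock update")
    second = await long_poll(queue, hold_seconds=1.0)
    return first, second


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

When messages arrive faster than requests can be re-issued, every held request returns almost immediately, which is the unthrottled loop of immediate polls described above.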

With streaming, the browser sends a complete request, but the server sends and maintains an open response that is continuously updated and kept open indefinitely (or for a set period of time). The response is then updated whenever a message is ready to be sent, but the server never signals to complete the response, thus keeping the connection open to deliver future messages. However, since streaming is still encapsulated in HTTP, intervening firewalls and proxy servers may choose to buffer the response, increasing the latency of the message delivery. Therefore, many streaming Comet solutions fall back to long-polling in case a buffering proxy server is detected. Alternatively, TLS (SSL) connections can be used to shield the response from being buffered, but in that case the setup and tear down of each connection taxes the available server resources more heavily.

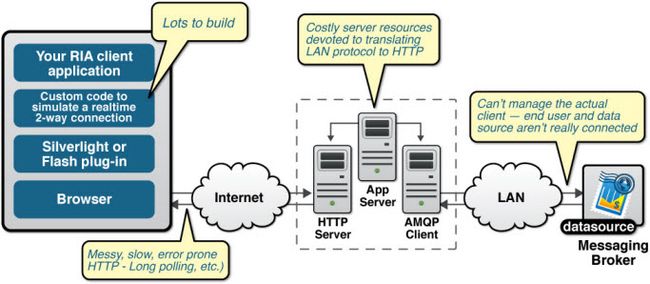

Ultimately, all of these methods for providing real-time data involve HTTP request and response headers, which contain lots of additional, unnecessary header data and introduce latency. On top of that, full-duplex connectivity requires more than just the downstream connection from server to client. In an effort to simulate full-duplex communication over half-duplex HTTP, many of today's solutions use two connections: one for the downstream and one for the upstream. The maintenance and coordination of these two connections introduces significant overhead in terms of resource consumption and adds lots of complexity. Simply put, HTTP wasn't designed for real-time, full-duplex communication. The following figure shows the complexities associated with building a Comet web application that displays real-time data from a back-end data source using a publish/subscribe model over half-duplex HTTP.

Figure 1—The complexity of Comet applications

It gets even worse when you try to scale out those Comet solutions to the masses. Simulating bi-directional browser communication over HTTP is error-prone and complex and all that complexity does not scale. Even though your end users might be enjoying something that looks like a real-time web application, this "real-time" experience has an outrageously high price tag. It's a price that you will pay in additional latency, unnecessary network traffic and a drag on CPU performance.

HTML5 Web Sockets to the Rescue!

Defined in the Communications section of the HTML5 specification, HTML5 Web Sockets represents the next evolution of web communications—a full-duplex, bidirectional communications channel that operates through a single socket over the Web. HTML5 Web Sockets provides a true standard that you can use to build scalable, real-time web applications. In addition, since it provides a socket that is native to the browser, it eliminates many of the problems Comet solutions are prone to. Web Sockets removes the overhead and dramatically reduces complexity.

To establish a WebSocket connection, the client and server upgrade from the HTTP protocol to the WebSocket protocol during their initial handshake, as shown in the following example:

Example 1—The WebSocket handshake (browser request and server response)

    GET /text HTTP/1.1\r\n
    Upgrade: WebSocket\r\n
    Connection: Upgrade\r\n
    Host: www.websocket.org\r\n
    …\r\n

    HTTP/1.1 101 WebSocket Protocol Handshake\r\n
    Upgrade: WebSocket\r\n
    Connection: Upgrade\r\n
    …\r\n
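As a rough illustration of what a client checks in the server's reply, the sketch below inspects only the status code and the two headers visible in Example 1. The function name `is_websocket_upgrade` is hypothetical, and the headers elided with "…" in the example are deliberately not modeled; the handshake in the final WebSocket standard (RFC 6455) carries additional required headers that this sketch does not verify.

```python
def is_websocket_upgrade(response: str) -> bool:
    """Return True if a raw HTTP response looks like the upgrade in Example 1.

    Only the 101 status code and the Upgrade/Connection headers are checked.
    """
    head = response.split("\r\n\r\n", 1)[0]
    lines = head.split("\r\n")
    status_line, header_lines = lines[0], lines[1:]

    parts = status_line.split(" ", 2)
    if len(parts) < 2 or parts[1] != "101":
        return False

    headers = {}
    for line in header_lines:
        name, sep, value = line.partition(":")
        if sep:
            # Header names are case-insensitive, so normalize before comparing.
            headers[name.strip().lower()] = value.strip().lower()

    return (headers.get("upgrade") == "websocket"
            and headers.get("connection") == "upgrade")


if __name__ == "__main__":
    reply = ("HTTP/1.1 101 WebSocket Protocol Handshake\r\n"
             "Upgrade: WebSocket\r\n"
             "Connection: Upgrade\r\n\r\n")
    print(is_websocket_upgrade(reply))
```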

Once established, WebSocket data frames can be sent back and forth between the client and the server in full-duplex mode. Both text and binary frames can be sent full-duplex, in either direction at the same time. The data is minimally framed with just two bytes. In the case of text frames, each frame starts with a 0x00 byte, ends with a 0xFF byte, and contains UTF-8 data in between. WebSocket text frames use a terminator, while binary frames use a length prefix.
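The text framing described here is simple enough to sketch in a few lines. Note the assumptions: this models only the 0x00 ... 0xFF text frame of the early protocol draft this article describes, the function names are hypothetical, and the framing later standardized in RFC 6455 uses a different layout.

```python
def encode_text_frame(text: str) -> bytes:
    """Wrap UTF-8 text in the two framing bytes: 0x00 before, 0xFF after."""
    return b"\x00" + text.encode("utf-8") + b"\xff"


def decode_text_frame(frame: bytes) -> str:
    """Strip the two framing bytes and decode the UTF-8 payload."""
    if len(frame) < 2 or frame[0] != 0x00 or frame[-1] != 0xFF:
        raise ValueError("not a 0x00 ... 0xFF text frame")
    return frame[1:-1].decode("utf-8")


if __name__ == "__main__":
    frame = encode_text_frame("hello")
    # Overhead is the frame length minus the payload length.
    print(len(frame) - len("hello".encode("utf-8")))
    print(decode_text_frame(frame))
```

The terminator approach works for text because the byte 0xFF never occurs in valid UTF-8, so the end of a frame is unambiguous.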

Note: although the Web Sockets protocol is ready to support a diverse set of clients, it cannot deliver raw binary data to JavaScript, because JavaScript does not support a byte type. Therefore, binary data is ignored if the client is JavaScript—but it can be delivered to other clients that support it.

The Showdown: Comet vs. HTML5 Web Sockets

So how dramatic is that reduction in unnecessary network traffic and latency? Let's compare a polling application and a WebSocket application side by side.

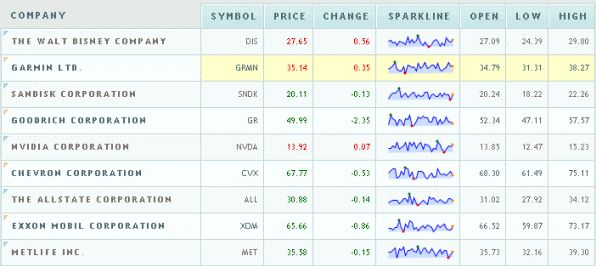

For the polling example, I created a simple web application in which a web page requests real-time stock data from a RabbitMQ message broker using a traditional publish/subscribe model. It does this by polling a Java Servlet that is hosted on a web server. The RabbitMQ message broker receives data from a fictitious stock price feed with continuously updating prices. The web page connects and subscribes to a specific stock channel (a topic on the message broker) and uses an XMLHttpRequest to poll for updates once per second. When updates are received, some calculations are performed and the stock data is displayed in a table, as shown in the following image.

Figure 2—A JavaScript stock ticker application

Note: The back-end stock feed produces many stock price updates per second, so polling at one-second intervals is actually more prudent than using a Comet long-polling solution, which would result in a series of continuous polls. Polling effectively throttles the incoming updates here.

It all looks great, but a look under the hood reveals there are some serious issues with this application. For example, in Mozilla Firefox with Firebug (a Firefox add-on that allows you to debug web pages and monitor the time it takes to load pages and execute scripts), you can see that GET requests hammer the server at one-second intervals. Turning on Live HTTP Headers (another Firefox add-on that shows live HTTP header traffic) reveals the shocking amount of header overhead that is associated with each request. The following two examples show the HTTP header data for just a single request and response.

Example 2—HTTP request header

    GET /PollingStock//PollingStock HTTP/1.1
    Host: localhost:8080
    User-Agent: Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.9.1.5) Gecko/20091102 Firefox/3.5.5
    Accept: text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8
    Accept-Language: en-us
    Accept-Encoding: gzip,deflate
    Accept-Charset: ISO-8859-1,utf-8;q=0.7,*;q=0.7
    Keep-Alive: 300
    Connection: keep-alive
    Referer: http://www.example.com/PollingStock/
    Cookie: showInheritedConstant=false; showInheritedProtectedConstant=false; showInheritedProperty=false; showInheritedProtectedProperty=false; showInheritedMethod=false; showInheritedProtectedMethod=false; showInheritedEvent=false; showInheritedStyle=false; showInheritedEffect=false

Example 3—HTTP response header

    HTTP/1.x 200 OK
    X-Powered-By: Servlet/2.5
    Server: Sun Java System Application Server 9.1_02
    Content-Type: text/html;charset=UTF-8
    Content-Length: 21
    Date: Sat, 07 Nov 2009 00:32:46 GMT

Just for fun, I counted all the characters. The total HTTP request and response header information overhead contains 871 bytes and that does not even include any data! Of course, this is just an example and you can have less than 871 bytes of header data, but I have also seen cases where the header data exceeded 2000 bytes. In this example application, the data for a typical stock topic message is only about 20 characters long. As you can see, it is effectively drowned out by the excessive header information, which was not even required in the first place!
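The same kind of count can be done mechanically. The sketch below is only illustrative: the helper names are hypothetical, and the exact total you get depends on how line endings and whitespace in the captured headers are counted, so it is not guaranteed to reproduce the 871 figure from the examples above.

```python
def header_overhead(request_headers: str, response_headers: str) -> int:
    """Total size in bytes of one request's and one response's header text."""
    return (len(request_headers.encode("utf-8"))
            + len(response_headers.encode("utf-8")))


def payload_share(payload: str, overhead_bytes: int) -> float:
    """Fraction of the bytes on the wire that is actual message data."""
    size = len(payload.encode("utf-8"))
    return size / (size + overhead_bytes)


if __name__ == "__main__":
    # A 20-character message against the article's 871 bytes of headers.
    print(round(payload_share("x" * 20, 871), 3))
```

Under these assumptions, a 20-character message accounts for only about 2% of the bytes exchanged per poll.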

So, what happens when you deploy this application to a large number of users? Let's take a look at the network throughput for just the HTTP request and response header data associated with this polling application in three different use cases.

- Use case A: 1,000 clients polling every second: Network throughput is (871 x 1,000) = 871,000 bytes = 6,968,000 bits per second (6.6 Mbps)
- Use case B: 10,000 clients polling every second: Network throughput is (871 x 10,000) = 8,710,000 bytes = 69,680,000 bits per second (66 Mbps)
- Use case C: 100,000 clients polling every second: Network throughput is (871 x 100,000) = 87,100,000 bytes = 696,800,000 bits per second (665 Mbps)

That's an enormous amount of unnecessary network throughput! If only we could just get the essential data over the wire. Well, guess what? You can with HTML5 Web Sockets! I rebuilt the application to use HTML5 Web Sockets, adding an event handler to the web page to asynchronously listen for stock update messages from the message broker (check out the many how-tos and tutorials on tech.kaazing.com/documentation/ for more information on how to build a WebSocket application). Each of these messages is a WebSocket frame that has just two bytes of overhead (instead of 871)! Take a look at how that affects the network throughput overhead in our three use cases.

- Use case A: 1,000 clients receive 1 message per second: Network throughput is (2 x 1,000) = 2,000 bytes = 16,000 bits per second (0.015 Mbps)
- Use case B: 10,000 clients receive 1 message per second: Network throughput is (2 x 10,000) = 20,000 bytes = 160,000 bits per second (0.153 Mbps)
- Use case C: 100,000 clients receive 1 message per second: Network throughput is (2 x 100,000) = 200,000 bytes = 1,600,000 bits per second (1.526 Mbps)
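The arithmetic behind both sets of use cases can be checked with a short script. The function and constant names are hypothetical; the one non-obvious assumption is the megabit size: the article's rounded Mbps figures match a divisor of 2**20 bits rather than 10**6.

```python
BITS_PER_BYTE = 8
BITS_PER_MBIT = 1024 * 1024  # assumption: matches the article's rounded Mbps values


def overhead_bits_per_second(overhead_bytes, clients, messages_per_second=1):
    """Overhead traffic for all clients combined, in bits per second."""
    return overhead_bytes * clients * messages_per_second * BITS_PER_BYTE


def to_mbps(bits_per_second):
    """Convert bits per second to Mbps using the divisor assumed above."""
    return bits_per_second / BITS_PER_MBIT


if __name__ == "__main__":
    for clients in (1_000, 10_000, 100_000):
        polling = overhead_bits_per_second(871, clients)   # HTTP headers per poll
        websocket = overhead_bits_per_second(2, clients)   # two framing bytes
        print(clients, polling, websocket)
```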

As you can see in the following figure, HTML5 Web Sockets provides a dramatic reduction of unnecessary network traffic compared to the polling solution.

Figure 3—Comparison of the unnecessary network throughput overhead between the polling and the WebSocket applications

And what about the reduction in latency? Take a look at the following figure. In the top half, you can see the latency of the half-duplex polling solution. If we assume, for this example, that it takes 50 milliseconds for a message to travel from the server to the browser, then the polling application introduces a lot of extra latency, because a new request has to be sent to the server when the response is complete. This new request takes another 50ms and during this time the server cannot send any messages to the browser, resulting in additional server memory consumption.

In the bottom half of the figure, you see the reduction in latency provided by the WebSocket solution. Once the connection is upgraded to WebSocket, messages can flow from the server to the browser the moment they arrive. It still takes 50 ms for messages to travel from the server to the browser, but the WebSocket connection remains open so there is no need to send another request to the server.
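One way to read the two halves of the comparison is as a simple worst-case model. This is an interpretation, not a measurement: it assumes a message becomes ready on the server just as the previous polling response leaves, and that each one-way trip takes the same time. The function names are hypothetical.

```python
def polling_delivery_ms(one_way_ms: int) -> int:
    """Worst-case delay before a new message reaches the browser when polling."""
    response_in_flight = one_way_ms  # previous response is still travelling
    next_request = one_way_ms        # browser's new request travels to the server
    reply = one_way_ms               # response carrying the message travels back
    return response_in_flight + next_request + reply


def websocket_delivery_ms(one_way_ms: int) -> int:
    """The connection is already open, so only one server-to-browser trip."""
    return one_way_ms


if __name__ == "__main__":
    print(polling_delivery_ms(50), websocket_delivery_ms(50))
```

With the 50 ms one-way time used in this example, the model gives 150 ms for polling against 50 ms for WebSocket.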

Figure 4—Latency comparison between the polling and WebSocket applications

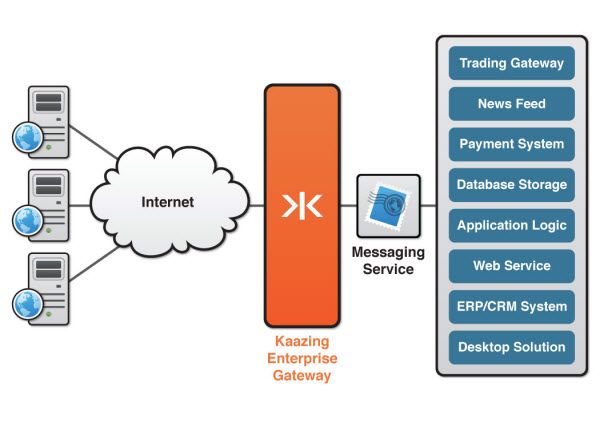

HTML5 Web Sockets and the Kaazing WebSocket Gateway

Today, only Google's Chrome browser supports HTML5 Web Sockets natively, but other browsers will soon follow. To work around that limitation, however, Kaazing WebSocket Gateway provides complete WebSocket emulation for all the older browsers (Internet Explorer 5.5+, Firefox 1.5+, Safari 3.0+, and Opera 9.5+), so you can start using the HTML5 WebSocket APIs today.

WebSocket is great, but what you can do once you have a full-duplex socket connection available in your browser is even greater. To leverage the full power of HTML5 Web Sockets, Kaazing provides a ByteSocket library for binary communication and higher-level libraries for protocols like Stomp, AMQP, XMPP, IRC and more, built on top of WebSocket.

Figure 5—Kaazing WebSocket Gateway extends TCP-based messaging to the browser with ultra high performance

Summary

HTML5 Web Sockets provides an enormous step forward in the scalability of the real-time web. As you have seen in this article, HTML5 Web Sockets can provide a 500:1 or—depending on the size of the HTTP headers—even a 1000:1 reduction in unnecessary HTTP header traffic and 3:1 reduction in latency. That is not just an incremental improvement; that is a revolutionary jump—a quantum leap!
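The header-traffic ratios follow directly from the per-message overhead figures used earlier in the article. In this sketch the function name is hypothetical, and the inputs are the article's own numbers: 871 bytes for the example headers, 2,000 bytes for the larger headers mentioned, and 2 bytes of WebSocket framing.

```python
def reduction_ratio(http_overhead_bytes: int, websocket_overhead_bytes: int = 2) -> float:
    """How many times smaller the per-message overhead is with WebSocket framing."""
    return http_overhead_bytes / websocket_overhead_bytes


if __name__ == "__main__":
    print(reduction_ratio(871))    # the example request/response headers
    print(reduction_ratio(2000))   # the larger headers mentioned in the article
```

The example headers give a ratio of roughly 435:1, which is the same order of magnitude as the rounded 500:1 figure, and 2,000-byte headers give 1000:1.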

Kaazing WebSocket Gateway makes HTML5 WebSocket code work in all the browsers today, while providing additional protocol libraries that allow you to harness the full power of the full-duplex socket connection that HTML5 Web Sockets provides and communicate directly to back-end services. For more information about Kaazing WebSocket Gateway, visit kaazing.com and the Kaazing technology network at tech.kaazing.com.
