MapReduce Exercise (2)


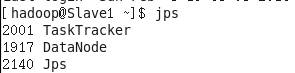

1. Start the hadoop-1.2.1 cluster:

Master:

Slave:

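2. Task requirements:

There is a batch of phone call records, each recording a call placed by user A to user B.

Build an inverted index that lists, for each user B, all the users A who called B.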

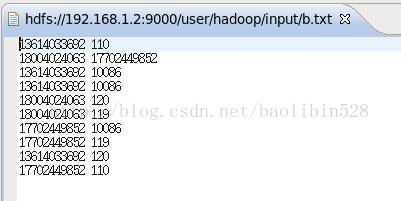
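The inverted index described above can be sketched in plain Java, without Hadoop, to make the expected grouping concrete. This is a minimal illustration and not the job itself: the class name `InvertedIndexSketch`, the method `build`, and the sample records are assumptions made for this example, and the input format (caller, a single space, callee) is inferred from the `split(" ")` call in the Map function later in this post.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical helper class for illustration; it is not part of the original job.
class InvertedIndexSketch {

    // Each record is assumed to be "caller callee", separated by a single space.
    static Map<String, List<String>> build(List<String> records) {
        // A TreeMap keeps the callees sorted, much like reducer keys arrive in sorted order.
        Map<String, List<String>> index = new TreeMap<>();
        for (String record : records) {
            String[] parts = record.split(" ");
            if (parts.length < 2) {
                continue; // skip malformed records
            }
            String caller = parts[0];
            String callee = parts[1];
            // Group by callee: this is the "inversion" of the call record.
            index.computeIfAbsent(callee, k -> new ArrayList<>()).add(caller);
        }
        return index;
    }

    public static void main(String[] args) {
        // Made-up sample records, for illustration only.
        List<String> records = Arrays.asList(
                "13614033692 10086",
                "17702449852 10086",
                "18004024063 119");
        System.out.println(build(records));
    }
}
```

In the MapReduce version, the map step emits the (callee, caller) pairs and the framework's shuffle does the grouping that `computeIfAbsent` does here.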

3. Upload the data to be processed to HDFS:

4. MapReduce code:

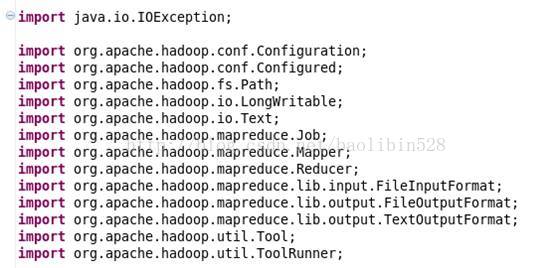

Imported packages:

Counter for skipped (malformed) lines:

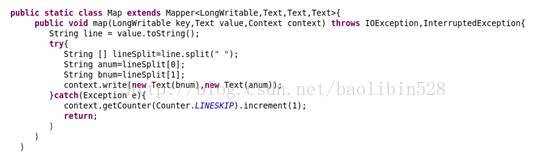

Map method:

Reduce method:

Run method:

Main method:

5. Output of the job:

6. Job execution log:

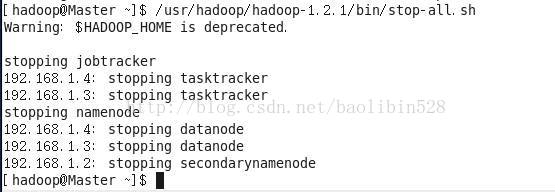

7. Shut down the cluster:

8. Appendix: selected code and results:

Map function:

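public static class Map extends Mapper<LongWritable, Text, Text, Text> {
    @Override
    public void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        String line = value.toString();
        try {
            // Each record is "A B": caller A, then callee B, separated by a space
            String[] lineSplit = line.split(" ");
            String anum = lineSplit[0];
            String bnum = lineSplit[1];
            // Emit (B, A) so that the reducer receives every caller of B
            context.write(new Text(bnum), new Text(anum));
        } catch (Exception e) {
            // Malformed line: count it and skip it
            context.getCounter(Counter.LINESKIP).increment(1);
            return;
        }
    }
}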
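To show which lines end up in the `LINESKIP` counter, the parsing logic of the map step can be tried outside Hadoop. The following is only a sketch under assumptions: `LineSkipSketch`, its `pairs` list, and its `skipped` field are hypothetical stand-ins for the `Context` and the Hadoop counter, and the sample lines are made up.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical stand-in for the mapper; plain fields replace Context and the Hadoop counter.
class LineSkipSketch {
    final List<String[]> pairs = new ArrayList<>(); // emitted (bnum, anum) pairs
    long skipped = 0;                               // plays the role of Counter.LINESKIP

    void mapAll(List<String> lines) {
        for (String line : lines) {
            try {
                String[] lineSplit = line.split(" ");
                String anum = lineSplit[0];
                String bnum = lineSplit[1]; // throws ArrayIndexOutOfBoundsException if the field is missing
                pairs.add(new String[] {bnum, anum});
            } catch (ArrayIndexOutOfBoundsException e) {
                skipped++; // count the malformed line and move on
            }
        }
    }

    public static void main(String[] args) {
        LineSkipSketch sketch = new LineSkipSketch();
        // One well-formed record, one record with a single field, one empty line.
        sketch.mapAll(Arrays.asList("13614033692 10086", "garbage", ""));
        System.out.println("emitted=" + sketch.pairs.size() + ", skipped=" + sketch.skipped);
    }
}
```

A line with only one field, or an empty line, has no second element after `split(" ")`, so accessing index 1 fails and the line is counted as skipped rather than aborting the task.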

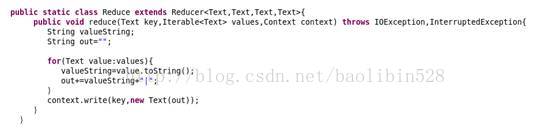

Reduce function:

public static class Reduce extends Reducer<Text, Text, Text, Text> {
    @Override
    public void reduce(Text key, Iterable<Text> values, Context context) throws IOException, InterruptedException {
        String valueString;
        String out = "";
        // Concatenate every caller of this number, each followed by "|"
        for (Text value : values) {
            valueString = value.toString();
            out += valueString + "|";
        }
        context.write(key, new Text(out));
    }
}
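Because the reducer appends "|" after every value, each output line ends with a trailing "|". The sketch below reproduces that concatenation in plain Java and, for comparison, shows `String.join`, which puts the separator only between values. The class `JoinCallersSketch` and both method names are hypothetical, and the second variant is an alternative, not what the original reducer does.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical helper for illustration; it is not part of the original job.
class JoinCallersSketch {

    // Mirrors the reducer: every value is followed by "|", so a trailing "|" remains.
    static String withTrailingBar(List<String> callers) {
        StringBuilder out = new StringBuilder();
        for (String caller : callers) {
            out.append(caller).append('|');
        }
        return out.toString();
    }

    // Alternative, not used by the original reducer: separator only between values.
    static String withoutTrailingBar(List<String> callers) {
        return String.join("|", callers);
    }

    public static void main(String[] args) {
        List<String> callers = Arrays.asList("18004024063", "17702449852");
        System.out.println(withTrailingBar(callers));
        System.out.println(withoutTrailingBar(callers));
    }
}
```

Using a `StringBuilder` also avoids creating a new `String` on every loop iteration, which `out += ...` does.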

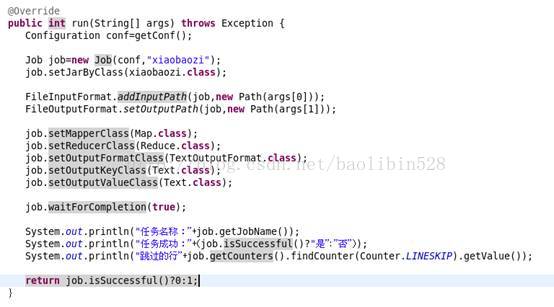

Run method:

public int run(String[] args) throws Exception {
    Configuration conf = getConf();
    Job job = new Job(conf, "xiaobaozi");
    job.setJarByClass(xiaobaozi.class);
    // args[0] is the input path, args[1] is the output path
    FileInputFormat.addInputPath(job, new Path(args[0]));
    FileOutputFormat.setOutputPath(job, new Path(args[1]));
    job.setMapperClass(Map.class);
    job.setReducerClass(Reduce.class);
    job.setOutputFormatClass(TextOutputFormat.class);
    job.setOutputKeyClass(Text.class);
    job.setOutputValueClass(Text.class);
    job.waitForCompletion(true);
    System.out.println("Job name: " + job.getJobName());
    System.out.println("Job succeeded: " + (job.isSuccessful() ? "yes" : "no"));
    System.out.println("Skipped lines: " + job.getCounters().findCounter(Counter.LINESKIP).getValue());
    return job.isSuccessful() ? 0 : 1;
}

enum Counter {
    LINESKIP, // lines that failed to parse
}

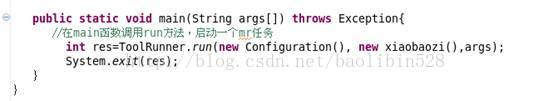

public static void main(String[] args) throws Exception {
    // Call run() from main via ToolRunner to start the MapReduce job
    int res = ToolRunner.run(new Configuration(), new xiaobaozi(), args);
    System.exit(res);
}

10086 13614033692|13614033692|17702449852|

110 13614033692|17702449852|

119 18004024063|17702449852|

120 18004024063|13614033692|

17702449852 18004024063|