Hadoop: Finding the Maximum and Minimum Values (BiggestSmallest)

1. Source code

package com.dtspark.hadoop.hellomapreduce;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.util.GenericOptionsParser;

public class BiggestSmallest {

    /*
     * Parses each input line as a long and emits it under one fixed key,
     * so that every value ends up in the same reduce group.
     */
    public static class BiggestSmallestMapper
            extends Mapper<Object, Text, Text, LongWritable> {

        private final LongWritable data = new LongWritable(0);
        private final Text keyFoReducer = new Text("keyFoReducer");

        @Override
        public void map(Object key, Text value, Context context)
                throws IOException, InterruptedException {
            System.out.println("Map Method Invoked!");
            data.set(Long.parseLong(value.toString()));
            System.out.println(data);
            context.write(keyFoReducer, data);
        }
    }

    /*
     * Tracks the largest and smallest value seen so far and writes both.
     * maxValue and minValue are instance fields, so they carry over from one
     * reduce() call to the next; the incoming key is ignored.
     */
    public static class BiggestSmallestReducer
            extends Reducer<Text, LongWritable, Text, LongWritable> {

        private long maxValue = Long.MIN_VALUE;
        private long minValue = Long.MAX_VALUE;

        @Override
        public void reduce(Text key, Iterable<LongWritable> values, Context context)
                throws IOException, InterruptedException {
            System.out.println("Reduce Method Invoked!");
            for (LongWritable item : values) {
                if (item.get() > maxValue) {
                    maxValue = item.get();
                }
                if (item.get() < minValue) {
                    minValue = item.get();
                }
            }
            context.write(new Text("maxValue"), new LongWritable(maxValue));
            context.write(new Text("minValue"), new LongWritable(minValue));
        }
    }

    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs();
        if (otherArgs.length < 2) {
            System.err.println("Usage: BiggestSmallest <in> [<in>...] <out>");
            System.exit(2);
        }

        Job job = Job.getInstance(conf, "BiggestSmallest");
        job.setJarByClass(BiggestSmallest.class);
        job.setMapperClass(BiggestSmallestMapper.class);
        // Meant as a speed-up. The reducer emits the keys "maxValue" and "minValue",
        // so reusing it as the combiner changes what the reduce phase receives.
        job.setCombinerClass(BiggestSmallestReducer.class);
        job.setReducerClass(BiggestSmallestReducer.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(LongWritable.class);

        // Every argument except the last is an input path; the last is the output path.
        for (int i = 0; i < otherArgs.length - 1; ++i) {
            FileInputFormat.addInputPath(job, new Path(otherArgs[i]));
        }
        FileOutputFormat.setOutputPath(job,
                new Path(otherArgs[otherArgs.length - 1]));

        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
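
The mapper passes each raw line straight to Long.parseLong, which throws NumberFormatException on a blank line or on a number padded with whitespace, and that would fail the map task. Below is a minimal sketch of a more tolerant helper the mapper could call instead. The class name LineParser and the method parseLine are hypothetical and not part of the original job, and the sketch assumes Java 8 or later for OptionalLong.

```java
import java.util.OptionalLong;

public class LineParser {

    /**
     * Hypothetical helper: returns the number on the line, or an empty
     * OptionalLong when the line is null, blank, or not a valid long.
     */
    static OptionalLong parseLine(String line) {
        if (line == null) {
            return OptionalLong.empty();
        }
        String trimmed = line.trim();
        if (trimmed.isEmpty()) {
            return OptionalLong.empty();
        }
        try {
            return OptionalLong.of(Long.parseLong(trimmed));
        } catch (NumberFormatException e) {
            // Not a number: let the caller skip the line instead of failing.
            return OptionalLong.empty();
        }
    }

    public static void main(String[] args) {
        String[] samples = {"33", " 5555555 ", "", "abc"};
        for (String s : samples) {
            System.out.println("[" + s + "] -> " + parseLine(s));
        }
    }
}
```

Inside map(), the result would be checked with isPresent() and the record written only when a value was parsed. Whether silently skipping bad lines is acceptable depends on the data, so counting the skipped lines may be the safer choice.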

2. Data file

[root@master IMFdatatest]#pwd

/usr/local/IMFdatatest

[root@master IMFdatatest]#cat dataBiggestsmallest.txt

1

33

55

66

77

45

34567

50

88776

345

5555555

23

32

3. Upload to the cluster

[root@master IMFdatatest]#hadoop dfs -put dataBiggestsmallest.txt /library

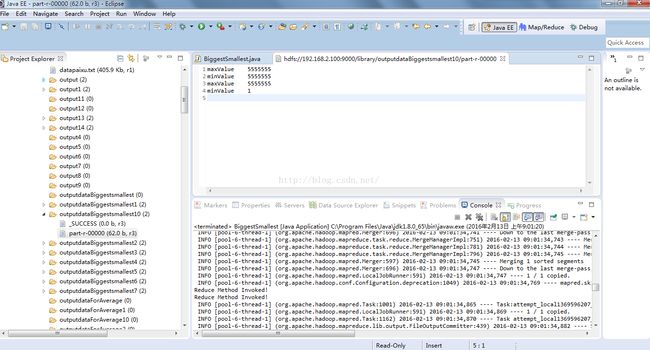

4. Run arguments

hdfs://192.168.2.100:9000/library/dataBiggestsmallest.txt

hdfs://192.168.2.100:9000/library/outputdataBiggestsmallest10

5. Run result

[root@master IMFdatatest]#hadoop dfs -cat /library/outputdataBiggestsmallest10/part-r-00000

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

16/02/12 20:03:12 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

maxValue 5555555

minValue 5555555

maxValue 5555555

minValue 1

[root@master IMFdatatest]#

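Something looks odd here: when the reducer writes only the maximum, or only the minimum, the output is correct and has a single line. When it writes both, the lines are duplicated.

The cause is that BiggestSmallestReducer is also registered as the combiner. The combiner receives every value under the single key keyFoReducer and emits two records with new keys, maxValue 5555555 and minValue 1. The reduce phase therefore sees two groups and calls reduce() twice, and each call writes both lines. The fields maxValue and minValue are kept between calls: the first call (key maxValue, value 5555555) sets both fields to 5555555, and the second call (key minValue, value 1) lowers the minimum to 1. That gives exactly the four lines above. Writing only one of the two values leaves a single group, so only one line appears.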
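
The four output lines can be reproduced without a cluster. The sketch below is plain Java, not Hadoop code: the class CombinerSimulation, its methods, and the sorted TreeMap standing in for the shuffle are all illustrative assumptions. It also assumes the combiner runs exactly once, which Hadoop does not guarantee, and it joins key and value with a space as in the listing above.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class CombinerSimulation {

    /** Mimics BiggestSmallestReducer: the fields live as long as the instance. */
    static class MinMaxReducer {
        private long maxValue = Long.MIN_VALUE;
        private long minValue = Long.MAX_VALUE;

        /** Ignores the key and always emits two records, like the original reduce(). */
        void reduce(String key, List<Long> values, List<String[]> out) {
            for (long v : values) {
                if (v > maxValue) maxValue = v;
                if (v < minValue) minValue = v;
            }
            out.add(new String[] {"maxValue", Long.toString(maxValue)});
            out.add(new String[] {"minValue", Long.toString(minValue)});
        }
    }

    /** Groups records by key in sorted order, then reduces each group in turn. */
    static List<String[]> runPhase(List<String[]> records) {
        Map<String, List<Long>> groups = new TreeMap<>();
        for (String[] r : records) {
            groups.computeIfAbsent(r[0], k -> new ArrayList<>()).add(Long.parseLong(r[1]));
        }
        MinMaxReducer reducer = new MinMaxReducer(); // one instance per phase
        List<String[]> out = new ArrayList<>();
        for (Map.Entry<String, List<Long>> e : groups.entrySet()) {
            reducer.reduce(e.getKey(), e.getValue(), out);
        }
        return out;
    }

    /** Map step, optional combine step, reduce step; returns the output lines. */
    static List<String> simulate(long[] data, boolean useCombiner) {
        List<String[]> mapOut = new ArrayList<>();
        for (long d : data) {
            mapOut.add(new String[] {"keyFoReducer", Long.toString(d)});
        }
        List<String[]> reduceIn = useCombiner ? runPhase(mapOut) : mapOut;
        List<String> lines = new ArrayList<>();
        for (String[] r : runPhase(reduceIn)) {
            lines.add(r[0] + " " + r[1]);
        }
        return lines;
    }

    public static void main(String[] args) {
        long[] data = {1, 33, 55, 66, 77, 45, 34567, 50, 88776, 345, 5555555, 23, 32};
        System.out.println("With combiner:");
        for (String line : simulate(data, true)) System.out.println(line);
        System.out.println("Without combiner:");
        for (String line : simulate(data, false)) System.out.println(line);
    }
}
```

With the combiner step the simulation yields the same four lines as the job; without it, only maxValue 5555555 and minValue 1 remain. In the real job, one way to get that result is probably to drop the job.setCombinerClass line. A combiner is only safe when it emits records of the same key and shape that the reducer expects as input.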