[Flume] [Source Code Analysis] A Deep Dive into flume-ng's Three Core Components: source, channel, sink

Overview

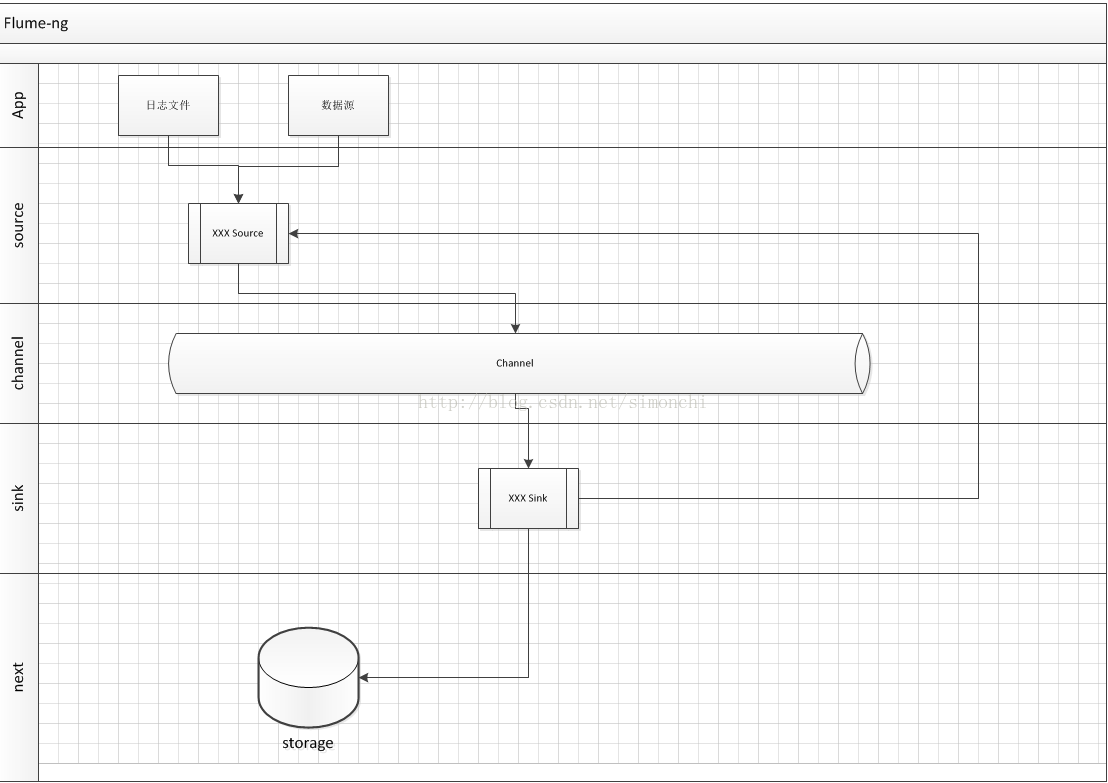

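The three core components of flume-ng are the source, the channel, and the sink.

The source collects data from the origin and produces events.

The channel buffers events until a downstream consumer takes them.

The sink consumes events from the channel and writes them to the final destination.

The diagram above shows the overall structure. Now let's look inside each component: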

1. Source

The Source interface is defined as follows:

@InterfaceAudience.Public

@InterfaceStability.Stable

public interface Source extends LifecycleAware, NamedComponent {

/**

* Specifies which channel processor will handle this source's events.

*

* @param channelProcessor

*/

public void setChannelProcessor(ChannelProcessor channelProcessor);

/**

* Returns the channel processor that will handle this source's events.

*/

public ChannelProcessor getChannelProcessor();

}

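A source generates events and calls methods on its configured ChannelProcessor to keep storing those events in the configured channels.

The ChannelProcessor holds a channel selector and an interceptor chain; this processing sits between the source receiving data and the events being put into the channel.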
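To make the "interceptors first, then the selector" order concrete, here is a minimal sketch in plain Java. It does not use Flume's classes: `SketchEvent`, `SketchChannel` and `SketchChannelProcessor` are hypothetical names invented for illustration, and the real ChannelProcessor also wraps each put in a channel transaction, which is left out here.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Function;
import java.util.function.UnaryOperator;

/** Hypothetical stand-in for a Flume event: just a string body. */
final class SketchEvent {
    final String body;
    SketchEvent(String body) { this.body = body; }
}

/** Hypothetical stand-in for a channel: an in-memory list of events. */
final class SketchChannel {
    final String name;
    final List<SketchEvent> events = new ArrayList<>();
    SketchChannel(String name) { this.name = name; }
}

/** Simplified model: run the interceptor chain, then ask the selector where to put the event. */
final class SketchChannelProcessor {
    private final List<UnaryOperator<SketchEvent>> interceptors;
    private final Function<SketchEvent, List<SketchChannel>> selector;

    SketchChannelProcessor(List<UnaryOperator<SketchEvent>> interceptors,
                           Function<SketchEvent, List<SketchChannel>> selector) {
        this.interceptors = interceptors;
        this.selector = selector;
    }

    /** Returns true if the event reached at least one channel. */
    boolean processEvent(SketchEvent event) {
        SketchEvent current = event;
        for (UnaryOperator<SketchEvent> interceptor : interceptors) {
            current = interceptor.apply(current);
            if (current == null) {
                return false; // an interceptor dropped the event
            }
        }
        List<SketchChannel> targets = selector.apply(current);
        for (SketchChannel channel : targets) {
            channel.events.add(current);
        }
        return !targets.isEmpty();
    }
}
```

In this model an interceptor can rewrite an event or drop it by returning null, and the selector decides which channels receive whatever survives the chain.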

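Let's follow the concrete workflow of one source: ExecSource

The Source interface extends two interfaces

1. NamedComponent

Gives each component a unique identifier, its name, which comes from our configuration

2. LifecycleAware

Handles starting and stopping the component and maintaining its state

The direct implementation of the Source interface is the abstract class AbstractSource

That class defines the channel processor

It also holds a lifecycle state, which is an enum type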

public enum LifecycleState {

IDLE, START, STOP, ERROR;

public static final LifecycleState[] START_OR_ERROR = new LifecycleState[] {

START, ERROR };

public static final LifecycleState[] STOP_OR_ERROR = new LifecycleState[] {

STOP, ERROR };

}

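This defines the four states a component can be in

AbstractSource implements the interface's start and stop methods, but their bodies only assign the state. For a real implementation, let's look at a concrete Source: ExecSource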
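The "start and stop only assign the state" pattern can be sketched roughly as follows. This is a simplified model, not Flume's AbstractSource: the class names `SketchAbstractSource` and `SketchCountingSource` and the `startedWorkers` field are made up for illustration.

```java
/** Mirrors the four states shown in the enum above. */
enum SketchLifecycleState { IDLE, START, STOP, ERROR }

/** Hypothetical base class: start/stop do nothing except record the state. */
abstract class SketchAbstractSource {
    private volatile SketchLifecycleState state = SketchLifecycleState.IDLE;

    public synchronized void start() { state = SketchLifecycleState.START; }

    public synchronized void stop() { state = SketchLifecycleState.STOP; }

    public SketchLifecycleState getLifecycleState() { return state; }
}

/** A concrete source does its real work first and calls super.start() last. */
final class SketchCountingSource extends SketchAbstractSource {
    int startedWorkers = 0;

    @Override
    public synchronized void start() {
        startedWorkers++;   // stand-in for "create the executor, submit the runner"
        super.start();      // only now mark the component as started
    }
}
```

Calling `super.start()` at the end means the state flips to START only after the subclass's own setup has succeeded.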

Reading the configuration is straightforward, so we skip it here and look at the start method

public void start() {

logger.info("Exec source starting with command:{}", command);

executor = Executors.newSingleThreadExecutor();

runner = new ExecRunnable(shell, command, getChannelProcessor(), sourceCounter,

restart, restartThrottle, logStderr, bufferCount, batchTimeout, charset);

// FIXME: Use a callback-like executor / future to signal us upon failure.

runnerFuture = executor.submit(runner);

/*

* NB: This comes at the end rather than the beginning of the method because

* it sets our state to running. We want to make sure the executor is alive

* and well first.

*/

sourceCounter.start();

super.start();

logger.debug("Exec source started");

}

Internally, this method starts a thread that runs the shell command we configured
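The shape of that start method (a single-thread executor running one long-lived Runnable that reads lines and pushes them downstream in batches) can be sketched like this. It is an illustration under assumptions: `SketchLineRunner` and `SketchExecSource` are hypothetical names, the input is any `Reader` rather than the stdout of a spawned process, and restart handling and the batch timeout are left out.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.function.Consumer;

/** Hypothetical runner: reads lines and hands them downstream in batches. */
final class SketchLineRunner implements Runnable {
    private final Reader input;
    private final int batchSize;
    private final Consumer<List<String>> downstream;

    SketchLineRunner(Reader input, int batchSize, Consumer<List<String>> downstream) {
        this.input = input;
        this.batchSize = batchSize;
        this.downstream = downstream;
    }

    @Override
    public void run() {
        List<String> batch = new ArrayList<>();
        try (BufferedReader reader = new BufferedReader(input)) {
            String line;
            while ((line = reader.readLine()) != null) {
                batch.add(line);
                if (batch.size() >= batchSize) {
                    downstream.accept(new ArrayList<>(batch));
                    batch.clear();
                }
            }
            if (!batch.isEmpty()) {
                downstream.accept(new ArrayList<>(batch)); // flush the final partial batch
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}

final class SketchExecSource {
    /** Runs the runner on a single worker thread and waits for it to finish. */
    static List<List<String>> runToCompletion(Reader input, int batchSize) {
        List<List<String>> batches = Collections.synchronizedList(new ArrayList<>());
        ExecutorService executor = Executors.newSingleThreadExecutor();
        try {
            Future<?> future = executor.submit(new SketchLineRunner(input, batchSize, batches::add));
            future.get(); // surfaces any failure from the worker thread
        } catch (Exception e) {
            throw new IllegalStateException("runner failed", e);
        } finally {
            executor.shutdown();
        }
        return batches;
    }
}
```

Unlike this sketch, the start method shown above does not wait on the future; it keeps `runnerFuture` around and returns immediately.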

An earlier article traced how this start method gets called from the entry point, and how often: http://blog.csdn.net/simonchi/article/details/42970373

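2. Channel

For a channel, the most important job is looking after events. Flume's core purpose is to relay these events, so they must not be lost or corrupted along the way

The Channel interface is defined as follows: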

@InterfaceAudience.Public

@InterfaceStability.Stable

public interface Channel extends LifecycleAware, NamedComponent {

/**

* <p>Puts the given event into the channel.</p>

* <p><strong>Note</strong>: This method must be invoked within an active

* {@link Transaction} boundary. Failure to do so can lead to unpredictable

* results.</p>

* @param event the event to transport.

* @throws ChannelException in case this operation fails.

* @see org.apache.flume.Transaction#begin()

*/

public void put(Event event) throws ChannelException;

/**

* <p>Returns the next event from the channel if available. If the channel

* does not have any events available, this method must return {@code null}.

* </p>

* <p><strong>Note</strong>: This method must be invoked within an active

* {@link Transaction} boundary. Failure to do so can lead to unpredictable

* results.</p>

* @return the next available event or {@code null} if no events are

* available.

* @throws ChannelException in case this operation fails.

* @see org.apache.flume.Transaction#begin()

*/

public Event take() throws ChannelException;

/**

* @return the transaction instance associated with this channel.

*/

public Transaction getTransaction();

}

Every event in a channel is managed within a transaction

First, let's look at how this transaction is defined

public interface Transaction {

public enum TransactionState {Started, Committed, RolledBack, Closed };

/**

* <p>Starts a transaction boundary for the current channel operation. If a

* transaction is already in progress, this method will join that transaction

* using reference counting.</p>

* <p><strong>Note</strong>: For every invocation of this method there must

* be a corresponding invocation of {@linkplain #close()} method. Failure

* to ensure this can lead to dangling transactions and unpredictable results.

* </p>

*/

public void begin();

/**

* Indicates that the transaction can be successfully committed. It is

* required that a transaction be in progress when this method is invoked.

*/

public void commit();

/**

* Indicates that the transaction can must be aborted. It is

* required that a transaction be in progress when this method is invoked.

*/

public void rollback();

/**

* <p>Ends a transaction boundary for the current channel operation. If a

* transaction is already in progress, this method will join that transaction

* using reference counting. The transaction is completed only if there

* are no more references left for this transaction.</p>

* <p><strong>Note</strong>: For every invocation of this method there must

* be a corresponding invocation of {@linkplain #begin()} method. Failure

* to ensure this can lead to dangling transactions and unpredictable results.

* </p>

*/

public void close();

}

As you would expect, this is just a set of standard transaction methods plus an enum of transaction states
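The Javadoc above implies a fixed calling pattern: begin, then either commit or rollback, and always close. Here is a small self-contained sketch of that idiom. `SketchTxChannel` and its nested `Tx` class are hypothetical, in-memory stand-ins and not Flume's Channel or Transaction; the only point is the try / catch / finally shape.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

/** Hypothetical in-memory channel whose puts become visible only on commit. */
final class SketchTxChannel {
    private final Deque<String> committed = new ArrayDeque<>();

    final class Tx {
        private final List<String> pending = new ArrayList<>();
        private boolean open = false;

        void begin() { open = true; }

        void put(String event) {
            if (!open) throw new IllegalStateException("put outside a transaction");
            pending.add(event);
        }

        void commit() { committed.addAll(pending); pending.clear(); }

        void rollback() { pending.clear(); }

        void close() { open = false; }
    }

    Tx getTransaction() { return new Tx(); }

    int size() { return committed.size(); }

    /** The begin / commit-or-rollback / close idiom. Returns true if committed. */
    boolean putAll(List<String> events) {
        Tx tx = getTransaction();
        tx.begin();
        try {
            for (String e : events) {
                if (e == null) throw new IllegalArgumentException("null event");
                tx.put(e);
            }
            tx.commit();
            return true;
        } catch (RuntimeException ex) {
            tx.rollback(); // nothing from this batch becomes visible
            return false;
        } finally {
            tx.close();    // every begin() is paired with a close()
        }
    }
}
```

With this model, a batch that fails halfway leaves the channel exactly as it was before `begin()`.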

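The abstract class BasicTransactionSemantics implements the Transaction interface and supplies the basic transaction semantics

That class defines two fields

state and initialThreadId. initialThreadId records the ID of the thread that created the transaction and is used to check that later calls come from that same thread

The constructor sets the state to NEW and stores the current thread's ID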

Let's focus on how the following methods are implemented:

protected void doBegin() throws InterruptedException {}

protected abstract void doPut(Event event) throws InterruptedException;

protected abstract Event doTake() throws InterruptedException;

protected abstract void doCommit() throws InterruptedException;

protected abstract void doRollback() throws InterruptedException;

protected void doClose() {}

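1. doBegin

There is not much to it: begin() checks that the state is NEW and that the calling thread's ID matches, calls doBegin() (empty by default), and then sets the state to OPEN to mark the transaction as open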
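The check-then-delegate structure just described is a template method. A simplified sketch, assuming nothing beyond what the text says, might look like the following; `SketchTransaction` and `SketchNoopTransaction` are hypothetical names, and the exception type and messages are illustrative choices rather than quotes from Flume.

```java
/** Hypothetical, simplified model of the state and thread checks around doBegin(). */
abstract class SketchTransaction {
    enum State { NEW, OPEN, COMPLETED, CLOSED }

    private State state = State.NEW;
    private final long initialThreadId = Thread.currentThread().getId();

    /** Template method: validate, call the hook, then flip the state. */
    public final void begin() {
        if (Thread.currentThread().getId() != initialThreadId) {
            throw new IllegalStateException("begin() called from a different thread");
        }
        if (state != State.NEW) {
            throw new IllegalStateException("begin() called when transaction is " + state);
        }
        doBegin();
        state = State.OPEN;
    }

    /** Hook for subclasses; a no-op by default, as in the listing above. */
    protected void doBegin() {}

    public State getState() { return state; }
}

/** Smallest possible subclass: inherits the empty doBegin(). */
final class SketchNoopTransaction extends SketchTransaction {}
```

Keeping the checks in the final `begin()` means a channel implementation only has to fill in the `doXxx` hooks and cannot skip the validation by accident.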

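2. doPut

takeList and putList hold the events that a transaction has taken or put but not yet committed

put() checks that the thread ID matches, that the transaction is open, and that the event is not null, since putting a null event would be meaningless

The key question is how the put is actually carried out

FileChannel has a static nested class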

static class FileBackedTransaction extends BasicTransactionSemantics {

private final LinkedBlockingDeque<FlumeEventPointer> takeList;

private final LinkedBlockingDeque<FlumeEventPointer> putList;

// ... remaining fields and methods omitted

}

These are two double-ended queues, one for takes and one for puts

protected void doPut(Event event) throws InterruptedException {

channelCounter.incrementEventPutAttemptCount();

if(putList.remainingCapacity() == 0) {

throw new ChannelException("Put queue for FileBackedTransaction " +

"of capacity " + putList.size() + " full, consider " +

"committing more frequently, increasing capacity or " +

"increasing thread count. " + channelNameDescriptor);

}

// this does not need to be in the critical section as it does not

// modify the structure of the log or queue.

if(!queueRemaining.tryAcquire(keepAlive, TimeUnit.SECONDS)) {

throw new ChannelFullException("The channel has reached it's capacity. "

+ "This might be the result of a sink on the channel having too "

+ "low of batch size, a downstream system running slower than "

+ "normal, or that the channel capacity is just too low. "

+ channelNameDescriptor);

}

boolean success = false;

log.lockShared();

try {

FlumeEventPointer ptr = log.put(transactionID, event);

Preconditions.checkState(putList.offer(ptr), "putList offer failed "

+ channelNameDescriptor);

queue.addWithoutCommit(ptr, transactionID);

success = true;

} catch (IOException e) {

throw new ChannelException("Put failed due to IO error "

+ channelNameDescriptor, e);

} finally {

log.unlockShared();

if(!success) {

// release slot obtained in the case

// the put fails for any reason

queueRemaining.release();

}

}

}

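The first line relates to monitoring metrics: it increments the count of events about to be put into the channel. For monitoring metrics, see: http://blog.csdn.net/simonchi/article/details/43270461

1. Check the remaining capacity of putList

2. Try to acquire one permit from the queueRemaining semaphore within keepAlive seconds. The semaphore counts the free slots left in the channel, so this reserves a slot for the event; it does not make puts mutually exclusive

If no permit is acquired in that time, the put fails with a ChannelFullException

3. Write the event to the log under the shared lock. Because this is the file channel, every operation is logged, and a FlumeEventPointer refers to the event's record in that log

(1) log.put records the event together with the transaction ID and returns a pointer

(2) The pointer is appended to the tail of putList

(3) The channel's event queue, FlumeEventQueue, records the pointer as uncommitted, tagged with the transaction ID

4. Release the shared log lock; the semaphore permit is given back only if the put failed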
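The slot reservation in step 2 and the "release only on failure" rule in step 4 can be tried out in isolation. The sketch below uses the real `java.util.concurrent.Semaphore` API, but everything else is an assumption for illustration: `SketchBoundedChannel` is a hypothetical class, it counts events instead of storing them, it returns false where the real code throws ChannelFullException, and its timeout is in milliseconds (the listing above uses seconds) so that a demo finishes quickly.

```java
import java.util.concurrent.Semaphore;
import java.util.concurrent.TimeUnit;

/** Hypothetical bounded buffer that reserves a slot before each put. */
final class SketchBoundedChannel {
    private final Semaphore queueRemaining;
    private final long keepAliveMillis;
    private int stored = 0;

    SketchBoundedChannel(int capacity, long keepAliveMillis) {
        this.queueRemaining = new Semaphore(capacity);
        this.keepAliveMillis = keepAliveMillis;
    }

    /** Returns false if no slot became free within the keep-alive window. */
    boolean put(String event) {
        try {
            if (!queueRemaining.tryAcquire(keepAliveMillis, TimeUnit.MILLISECONDS)) {
                return false; // channel full
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
        boolean success = false;
        try {
            if (event == null) throw new IllegalArgumentException("null event");
            synchronized (this) { stored++; }
            success = true;
        } finally {
            if (!success) queueRemaining.release(); // give the slot back on failure
        }
        return true;
    }

    /** Taking an event frees one slot for future puts. */
    synchronized boolean take() {
        if (stored == 0) return false;
        stored--;
        queueRemaining.release();
        return true;
    }

    int remaining() { return queueRemaining.availablePermits(); }
}
```

Note that several threads can hold permits at the same time, which is why the semaphore limits capacity rather than serializing puts.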

3. doTake

protected Event doTake() throws InterruptedException {

channelCounter.incrementEventTakeAttemptCount();

if(takeList.remainingCapacity() == 0) {

throw new ChannelException("Take list for FileBackedTransaction, capacity " +

takeList.size() + " full, consider committing more frequently, " +

"increasing capacity, or increasing thread count. "

+ channelNameDescriptor);

}

log.lockShared();

/*

* 1. Take an event which is in the queue.

* 2. If getting that event does not throw NoopRecordException,

* then return it.

* 3. Else try to retrieve the next event from the queue

* 4. Repeat 2 and 3 until queue is empty or an event is returned.

*/

try {

while (true) {

FlumeEventPointer ptr = queue.removeHead(transactionID);

if (ptr == null) {

return null;

} else {

try {

// first add to takeList so that if write to disk

// fails rollback actually does it's work

Preconditions.checkState(takeList.offer(ptr),

"takeList offer failed "

+ channelNameDescriptor);

log.take(transactionID, ptr); // write take to disk

Event event = log.get(ptr);

return event;

} catch (IOException e) {

throw new ChannelException("Take failed due to IO error "

+ channelNameDescriptor, e);

} catch (NoopRecordException e) {

LOG.warn("Corrupt record replaced by File Channel Integrity " +

"tool found. Will retrieve next event", e);

takeList.remove(ptr);

} catch (CorruptEventException ex) {

if (fsyncPerTransaction) {

throw new ChannelException(ex);

}

LOG.warn("Corrupt record found. Event will be " +

"skipped, and next event will be read.", ex);

takeList.remove(ptr);

}

}

}

} finally {

log.unlockShared();

}

}

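1. Check the remaining capacity of takeList

2. Under the shared log lock, remove a pointer from the head of the queue, add it to takeList, record the take in the log, and read the event back

3. If the record turns out to be a no-op record or is corrupt, remove its pointer from takeList and move on to the next event (a corrupt record is skipped only when fsyncPerTransaction is false; otherwise a ChannelException is thrown)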

4. doCommit

protected void doCommit() throws InterruptedException {

int puts = putList.size();

int takes = takeList.size();

if(puts > 0) {

Preconditions.checkState(takes == 0, "nonzero puts and takes "

+ channelNameDescriptor);

log.lockShared();

try {

log.commitPut(transactionID);

channelCounter.addToEventPutSuccessCount(puts);

synchronized (queue) {

while(!putList.isEmpty()) {

if(!queue.addTail(putList.removeFirst())) {

StringBuilder msg = new StringBuilder();

msg.append("Queue add failed, this shouldn't be able to ");

msg.append("happen. A portion of the transaction has been ");

msg.append("added to the queue but the remaining portion ");

msg.append("cannot be added. Those messages will be consumed ");

msg.append("despite this transaction failing. Please report.");

msg.append(channelNameDescriptor);

LOG.error(msg.toString());

Preconditions.checkState(false, msg.toString());

}

}

queue.completeTransaction(transactionID);

}

} catch (IOException e) {

throw new ChannelException("Commit failed due to IO error "

+ channelNameDescriptor, e);

} finally {

log.unlockShared();

}

} else if (takes > 0) {

log.lockShared();

try {

log.commitTake(transactionID);

queue.completeTransaction(transactionID);

channelCounter.addToEventTakeSuccessCount(takes);

} catch (IOException e) {

throw new ChannelException("Commit failed due to IO error "

+ channelNameDescriptor, e);

} finally {

log.unlockShared();

}

queueRemaining.release(takes);

}

putList.clear();

takeList.clear();

channelCounter.setChannelSize(queue.getSize());

}

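1. If putList is not empty, the puts are committed: the pointers move to the tail of the queue and the put-success counter grows by the number of events put

2. If takeList is not empty, the takes are committed: the take-success counter grows by the number of events taken, and their slots are released back to queueRemaining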

5. doRollback

protected void doRollback() throws InterruptedException {

int puts = putList.size();

int takes = takeList.size();

log.lockShared();

try {

if(takes > 0) {

Preconditions.checkState(puts == 0, "nonzero puts and takes "

+ channelNameDescriptor);

synchronized (queue) {

while (!takeList.isEmpty()) {

Preconditions.checkState(queue.addHead(takeList.removeLast()),

"Queue add failed, this shouldn't be able to happen "

+ channelNameDescriptor);

}

}

}

putList.clear();

takeList.clear();

queue.completeTransaction(transactionID);

channelCounter.setChannelSize(queue.getSize());

log.rollback(transactionID);

} catch (IOException e) {

throw new ChannelException("Commit failed due to IO error "

+ channelNameDescriptor, e);

} finally {

log.unlockShared();

// since rollback is being called, puts will never make it on

// to the queue and we need to be sure to release the resources

queueRemaining.release(puts);

}

}

Up to this point neither putList nor takeList has been cleared, so the rollback can work directly on these two deques
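One detail in the rollback listing is easy to miss: taken events are returned with `removeLast()` from takeList and `addHead` on the queue. Undoing the takes in reverse is what restores the original order. The sketch below shows this with plain `ArrayDeque`s; `SketchRollbackDemo` is a hypothetical class and the strings stand in for event pointers.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Deque;
import java.util.List;

final class SketchRollbackDemo {
    /** Takes up to n events from the queue head, then rolls the takes back. */
    static List<String> takeThenRollback(List<String> initial, int n) {
        Deque<String> queue = new ArrayDeque<>(initial);
        Deque<String> takeList = new ArrayDeque<>();

        for (int i = 0; i < n && !queue.isEmpty(); i++) {
            takeList.addLast(queue.removeFirst());
        }
        // Undo in reverse: the most recently taken event goes back first,
        // so the earliest taken event ends up at the head again.
        while (!takeList.isEmpty()) {
            queue.addFirst(takeList.removeLast());
        }
        return new ArrayList<>(queue);
    }

    public static void main(String[] args) {
        // prints [e1, e2, e3, e4]
        System.out.println(takeThenRollback(Arrays.asList("e1", "e2", "e3", "e4"), 3));
    }
}
```

Had the rollback used `removeFirst()` instead, the three taken events would come back in reversed order.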

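That covers the file channel. The memory channel has no log or checkpoint records, so there is no on-disk state to maintain and the implementation is much simpler.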

3. Sink

The Sink interface is defined as follows:

@InterfaceAudience.Public

@InterfaceStability.Stable

public interface Sink extends LifecycleAware, NamedComponent {

/**

* <p>Sets the channel the sink will consume from</p>

* @param channel The channel to be polled

*/

public void setChannel(Channel channel);

/**

* @return the channel associated with this sink

*/

public Channel getChannel();

/**

* <p>Requests the sink to attempt to consume data from attached channel</p>

* <p><strong>Note</strong>: This method should be consuming from the channel

* within the bounds of a Transaction. On successful delivery, the transaction

* should be committed, and on failure it should be rolled back.

* @return READY if 1 or more Events were successfully delivered, BACKOFF if

* no data could be retrieved from the channel feeding this sink

* @throws EventDeliveryException In case of any kind of failure to

* deliver data to the next hop destination.

*/

public Status process() throws EventDeliveryException;

public static enum Status {

READY, BACKOFF

}

}

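A sink is attached to one channel, consumes the events in it, and sends them to a configured destination

It works much like a source, and likewise has an AbstractSink base class

Again, let's look at one concrete implementation, HDFSEventSink

Here is its process method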

public Status process() throws EventDeliveryException {

Channel channel = getChannel();

Transaction transaction = channel.getTransaction();

List<BucketWriter> writers = Lists.newArrayList();

transaction.begin();

…………………………

transaction.commit();

if (txnEventCount < 1) {

return Status.BACKOFF;

} else {

sinkCounter.addToEventDrainSuccessCount(txnEventCount);

return Status.READY;

}

} catch (IOException eIO) {

transaction.rollback();

LOG.warn("HDFS IO error", eIO);

return Status.BACKOFF;

} catch (Throwable th) {

transaction.rollback();

LOG.error("process failed", th);

if (th instanceof Error) {

throw (Error) th;

} else {

throw new EventDeliveryException(th);

}

} finally {

transaction.close();

}

}

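As this shows, flume-ng applies transaction control on the sink side

The transaction begins when events are taken from the channel and ends once they have been written to the next destination

Any failure along the way rolls the transaction back, which keeps the data consistent.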
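To see the take side of that transaction end to end, here is a rough, self-contained model of a sink's process loop. It is a sketch under assumptions and not HDFSEventSink: `SketchSink` is a hypothetical class, a `Deque` stands in for the channel, a `Consumer` stands in for the destination, and every failure maps to BACKOFF (the real sink rethrows non-IO failures as EventDeliveryException).

```java
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;
import java.util.function.Consumer;

final class SketchSink {
    enum Status { READY, BACKOFF }

    private final Deque<String> channel;         // stand-in for the channel
    private final Consumer<String> destination;  // stand-in for the output system
    private final int batchSize;

    SketchSink(Deque<String> channel, Consumer<String> destination, int batchSize) {
        this.channel = channel;
        this.destination = destination;
        this.batchSize = batchSize;
    }

    Status process() {
        List<String> taken = new ArrayList<>();  // plays the role of takeList
        try {
            while (taken.size() < batchSize && !channel.isEmpty()) {
                String event = channel.removeFirst();
                taken.add(event);
                destination.accept(event);       // may throw
            }
            // "commit": the taken events are gone from the channel for good
            return taken.isEmpty() ? Status.BACKOFF : Status.READY;
        } catch (RuntimeException e) {
            // "rollback": put the events back at the head in their original order
            for (int i = taken.size() - 1; i >= 0; i--) {
                channel.addFirst(taken.get(i));
            }
            return Status.BACKOFF;
        }
    }
}
```

In this model a rollback restores the channel but does not undo writes that already reached the destination, so a retried batch can deliver some events twice.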