hadoop例子

目录[-]

算法是程序的精髓所在,算法也是一个人是否适合做软件开发的衡量标准。当然算法不是衡量一个人是否聪明的标准,熟练掌握以下几种,做到触类旁通即可。

以下几个例子测试环境:伪分布式, IP 为 localhost ,集群和 eclipse 在同一个系统内。1.排序:

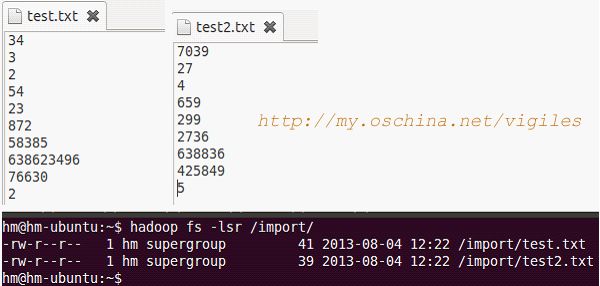

1)数据:

hadoop fs -mkdir /import

创建一个或者多个文本,上传

hadoop fs -put test.txt /import/

创建一个或者多个文本,上传

hadoop fs -put test.txt /import/

2)代码:package com.cuiweiyou.sort;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

//hadoop默认排序:

//如果k2、v2类型是Text-文本,结果是按照字典顺序

//如果k2、v2类型是LongWritable-数字,结果是按照数字大小顺序

public class SortTest {

/**

* 内部类:映射器 Mapper<KEY_IN, VALUE_IN, KEY_OUT, VALUE_OUT>

*/

public static class MyMapper extends Mapper<LongWritable, Text, LongWritable, NullWritable> {

/**

* 重写map方法

*/

public void map(LongWritable k1, Text v1, Context context) throws IOException, InterruptedException {

//这里v1转为k2-数字类型,舍弃k1。null为v2

context.write(new LongWritable(Long.parseLong(v1.toString())), NullWritable.get());

//因为v1可能重复,这时,k2也是可能有重复的

}

}

/**

* 内部类:拆分器 Reducer<KEY_IN, VALUE_IN, KEY_OUT, VALUE_OUT>

*/

public static class MyReducer extends Reducer<LongWritable, NullWritable, LongWritable, NullWritable> {

/**

* 重写reduce方法

* 在此方法执行前,有个shuffle过程,会根据k2将对应的v2归并为v2[...]

*/

protected void reduce(LongWritable k2, Iterable<NullWritable> v2, Reducer<LongWritable, Context context) throws IOException, InterruptedException {

//k2=>k3, v2[...]舍弃。null => v3

context.write(k2, NullWritable.get());

//此时,k3如果发生重复,根据默认算法会发生覆盖,即最终仅保存一个k3

}

}

public static void main(String[] args) throws Exception {

// 声明配置信息

Configuration conf = new Configuration();

conf.set("fs.default.name", "hdfs://localhost:9000");

// 创建作业

Job job = new Job(conf, "SortTest");

job.setJarByClass(SortTest.class);

// 设置mr

job.setMapperClass(MyMapper.class);

job.setReducerClass(MyReducer.class);

// 设置输出类型,和Context上下文对象write的参数类型一致

job.setOutputKeyClass(LongWritable.class);

job.setOutputValueClass(NullWritable.class);

// 设置输入输出路径

FileInputFormat.setInputPaths(job, new Path("/import/"));

FileOutputFormat.setOutputPath(job, new Path("/out"));

// 执行

System.exit(job.waitForCompletion(true) ? 0 : 1);

}

}

?

3)测试:

可以看到,不仅排序而且去重了。

2.去重:

需求:查取手机号有哪些。这里的思路和上面排序算法的思路是一致的,仅仅多了分割出手机号这一步骤。

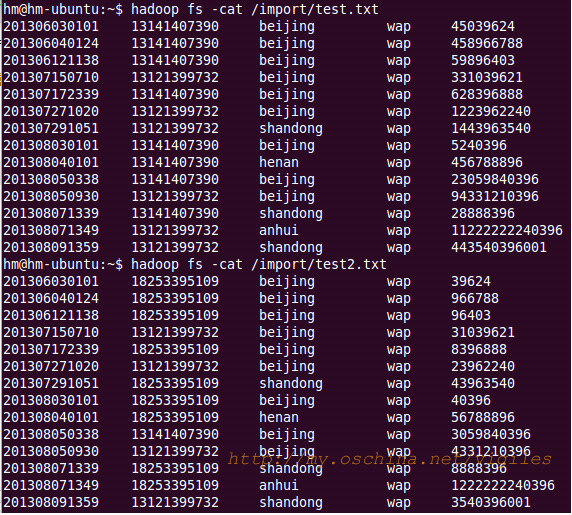

1)数据:

创建两个文本,手动输入一些测试内容。每个字段用制表符隔开。日期,电话,地址,方式,数据量。

2)代码:

(1)map和reduce:

/**

* 映射器 Mapper<KEY_IN, VALUE_IN, KEY_OUT, VALUE_OUT>

*/

public static class MyMapper extends Mapper<LongWritable, Text, Text, NullWritable> {

/**

* 重写map方法

*/

protected void map(LongWritable k1, Text v1, Context context) throws IOException ,InterruptedException {

//按照制表符进行分割

String[] tels = v1.toString().split("\t");

//k1 => k2-第2列手机号,null => v2

context.write(new Text(tels[1]), NullWritable.get());

}

}

/************************************************************

* 在map后,reduce前,有个shuffle过程,会根据k2将对应的v2归并为v2[...]

***********************************************************/

/**

* 拆分器 Reducer<KEY_IN, VALUE_IN, KEY_OUT, VALUE_OUT>

*/

public static class MyReducer extends Reducer<Text, NullWritable, Text, NullWritable> {

/**

* 重写reduce方法

*/

protected void reduce(Text k2, Iterable<NullWritable> v2, Context context) throws IOException ,InterruptedException {

//此时,k3如果发生重复,根据默认算法会发生覆盖,即最终仅保存一个k3,达到去重到效果

context.write(k2, NullWritable.get());

}

}

?

(2)配置输出:

?

job.setOutputKeyClass(Text.class); job.setOutputValueClass(NullWritable.class);

3)测试:

3.过滤:

需求:查询在北京地区发生的上网记录。思路同上,当写出 k2 、 v2 时加一个判断即可。1)数据:

同上。2)代码:

(1)map和reduce:

/**

* 内部类:映射器 Mapper<KEY_IN, VALUE_IN, KEY_OUT, VALUE_OUT>

*/

publicstatic class MyMapper extendsMapper<LongWritable, Text, Text, NullWritable> {

/**

* 重写map方法

*/

protectedvoid map(LongWritable k1, Text v1, Context context) throwsIOException ,InterruptedException {

//按照制表符进行分割

finalString[] adds = v1.toString().split("\t");

//地址在第3列

//k1 => k2-地址,null => v2

if(adds[2].equals("beijing")){

context.write(newText(v1.toString()), NullWritable.get());

}

}

}

/**

* 内部类:拆分器 Reducer<KEY_IN, VALUE_IN, KEY_OUT, VALUE_OUT>

*/

publicstatic class MyReducer extendsReducer<Text, NullWritable, Text, NullWritable> {

/**

* 重写reduce方法

*/

protectedvoid reduce(Text k2, Iterable<NullWritable> v2, Context context) throwsIOException ,InterruptedException {

context.write(k2, NullWritable.get());

}

}

?

/**

* 内部类:映射器 Mapper<KEY_IN, VALUE_IN, KEY_OUT, VALUE_OUT>

*/

publicstatic class MyMapper extendsMapper<LongWritable, Text, Text, NullWritable> {

/**

* 重写map方法

*/

protectedvoid map(LongWritable k1, Text v1, Context context) throwsIOException ,InterruptedException {

//按照制表符进行分割

finalString[] adds = v1.toString().split("\t");

//地址在第3列

//k1 => k2-地址,null => v2

if(adds[2].equals("beijing")){

context.write(newText(v1.toString()), NullWritable.get());

}

}

}

/**

* 内部类:拆分器 Reducer<KEY_IN, VALUE_IN, KEY_OUT, VALUE_OUT>

*/

publicstatic class MyReducer extendsReducer<Text, NullWritable, Text, NullWritable> {

/**

* 重写reduce方法

*/

protectedvoid reduce(Text k2, Iterable<NullWritable> v2, Context context) throwsIOException ,InterruptedException {

context.write(k2, NullWritable.get());

}

}

(2)配置输出:

job.setOutputKeyClass(Text.class); job.setOutputValueClass(NullWritable.class);

?

job.setOutputKeyClass(Text.class); job.setOutputValueClass(NullWritable.class);

3)测试:

4.TopN:

这个算法非常经典,面试必问。实现这个效果的算法也很多。下面是个简单的示例。

需求:找到流量最大值;找出前5个最大值。

1)数据:

同上。

2)代码1-最大值:

(1)map和reduce:

//map

publicstatic class MyMapper extendsMapper<LongWritable, Text, LongWritable, NullWritable> {

//首先创建一个临时变量,保存一个可存储的最小值:Long.MIN_VALUE=-9223372036854775808

longtemp = Long.MIN_VALUE;

//找出最大值

protectedvoid map(LongWritable k1, Text v1, Context context) throwsIOException ,InterruptedException {

//按照制表符进行分割

finalString[] flows = v1.toString().split("\t");

//将文本转数值

finallong val = Long.parseLong(flows[4]);

//如果v1比临时变量大,则保存v1的值

if(temp<val){

temp = val;

}

}

/** ---此方法在全部的map任务结束后执行一次。这时仅输出临时变量到最大值--- **/

protectedvoid cleanup(Context context) throwsIOException ,InterruptedException {

context.write(newLongWritable(temp), NullWritable.get());

System.out.println("文件读取完毕");

}

}

//reduce

publicstatic class MyReducer extendsReducer<LongWritable, NullWritable, LongWritable, NullWritable> {

//临时变量

Long temp = Long.MIN_VALUE;

//因为一个文件得到一个最大值,再次将这些值比对,得到最大的

protectedvoid reduce(LongWritable k2, Iterable<NullWritable> v2, Context context) throwsIOException ,InterruptedException {

longlong1 = Long.parseLong(k2.toString());

//如果k2比临时变量大,则保存k2的值

if(temp<long1){

temp = long1;

}

}

/** !!!此方法在全部的reduce任务结束后执行一次。这时仅输出临时变量到最大值!!! **/

protectedvoid cleanup(Context context) throwsIOException, InterruptedException {

context.write(newLongWritable(temp), NullWritable.get());

}

}

?

(2)配置输出:

?

job.setOutputKeyClass(LongWritable.class); job.setOutputValueClass(NullWritable.class);

3)测试1:

4)代码2-TopN:

(1)map和reduce:

?

//map

publicstatic class MyMapper extendsMapper<LongWritable, Text, LongWritable, NullWritable> {

//首先创建一个临时变量,保存一个可存储的最小值:Long.MIN_VALUE=-9223372036854775808

longtemp = Long.MIN_VALUE;

//Top5存储空间

long[] tops;

/** 次方法在run中调用,在全部map之前执行一次 **/

protectedvoid setup(Context context) {

//初始化数组长度为5

tops = newlong[5];

}

//找出最大值

protectedvoid map(LongWritable k1, Text v1, Context context) throwsIOException ,InterruptedException {

//按照制表符进行分割

finalString[] flows = v1.toString().split("\t");

//将文本转数值

finallong val = Long.parseLong(flows[4]);

//保存在0索引

tops[0] = val;

//排序后最大值在最后一个索引,这样从后到前依次减小

Arrays.sort(tops);

}

/** ---此方法在全部到map任务结束后执行一次。这时仅输出临时变量到最大值--- **/

protectedvoid cleanup(Context context) throwsIOException ,InterruptedException {

//保存前5条数据

for(inti = 0; i < tops.length; i++) {

context.write(newLongWritable(tops[i]), NullWritable.get());

}

}

}

//reduce

publicstatic class MyReducer extendsReducer<LongWritable, NullWritable, LongWritable, NullWritable> {

//临时变量

Long temp = Long.MIN_VALUE;

//Top5存储空间

long[] tops;

/** 次方法在run中调用,在全部map之前执行一次 **/

protectedvoid setup(Context context) {

//初始化长度为5

tops = newlong[5];

}

//因为每个文件都得到5个值,再次将这些值比对,得到最大的

protectedvoid reduce(LongWritable k2, Iterable<NullWritable> v2, Context context) throwsIOException ,InterruptedException {

longtop = Long.parseLong(k2.toString());

//

tops[0] = top;

//

Arrays.sort(tops);

}

/** ---此方法在全部到reduce任务结束后执行一次。输出前5个最大值--- **/

protectedvoid cleanup(Context context) throwsIOException, InterruptedException {

//保存前5条数据

for(inti = 0; i < tops.length; i++) {

context.write(newLongWritable(tops[i]), NullWritable.get());

}

}

}

(2)配置输出:

job.setOutputKeyClass(LongWritable.class); job.setOutputValueClass(NullWritable.class);

?

5)测试2:

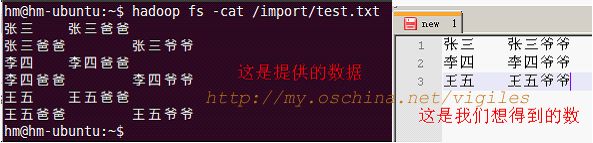

5.单表关联:

本例中的单表实际就是一个文本文件。

1)数据:

2)代码:

(1)map和reduce:

?

//map

publicstatic class MyMapper extendsMapper<LongWritable, Text, Text, Text> {

//拆分原始数据

protectedvoid map(LongWritable k1, Text v1, Context context) throwsIOException ,InterruptedException {

//按制表符拆分记录

String[] splits = v1.toString().split("\t");

//一条k2v2记录:把孙辈作为k2;祖辈加下划线区分,作为v2

context.write(newText(splits[0]),newText("_"+splits[1]));

//一条k2v2记录:把祖辈作为k2;孙辈作为v2。就是把原两个单词调换位置保存

context.write(newText(splits[1]),newText(splits[0]));

}

/**

张三 _张三爸爸

张三爸爸 张三

张三爸爸 _张三爷爷

张三爷爷 张三爸爸

**/

}

//reduce

publicstatic class MyReducer extendsReducer<Text, Text, Text, Text> {

//拆分k2v2[...]数据

protectedvoid reduce(Text k2, Iterable<Text> v2, Context context) throwsIOException ,InterruptedException {

String grandchild = "";//孙辈

String grandfather = ""; //祖辈

/**

张三爸爸 [_张三爷爷,张三]

**/

//从迭代中遍历v2[...]

for(Text man : v2) {

String p = man.toString();

//如果单词是以下划线开始的

if(p.startsWith("_")){

//从索引1开始截取字符串,保存到祖辈变量

grandfather = p.substring(1);

}

//如果单词没有下划线起始

else{

//直接赋值给孙辈变量

grandchild = p;

}

}

//在得到有效数据的情况下

if( grandchild!=""&& grandfather!=""){

//写出得到的结果。

context.write(newText(grandchild), newText(grandfather));

}

/**

k3=张三,v3=张三爷爷

**/

}

}

(2)配置输出:

?

job.setOutputKeyClass(Text.class); job.setOutputValueClass(Text.class);

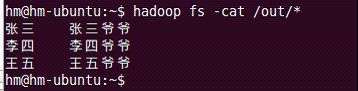

3)测试:

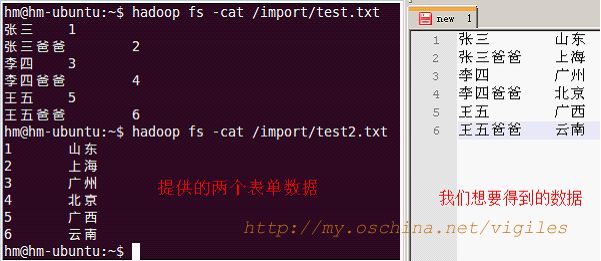

6.双表关联:

本例中仍简单采用两个文本文件。

1)数据:

2)代码:

(1)map和reduce:

?

//map

publicstatic class MyMapper extendsMapper<LongWritable, Text, Text, Text> {

//拆分原始数据

protectedvoid map(LongWritable k1, Text v1, Context context) throwsIOException ,InterruptedException {

//拆分记录

String[] splited = v1.toString().split("\t");

//如果第一列是数字(使用正则判断),就是地址表

if(splited[0].matches("^[-+]?(([0-9]+)([.]([0-9]+))?|([.]([0-9]+))?)$")){

String addreId = splited[0];

String address = splited[1];

//k2,v2-加两条下划线作为前缀标识为地址

context.write(newText(addreId), newText("__"+address));

}

//否则就是人员表

else{

String personId = splited[1];

String persName = splited[0];

//k2,v2-加两条横线作为前缀标识为人员

context.write(newText(personId), newText("--"+persName));

}

/**

1 __北京

1 --张三

**/

}

}

//reduce

publicstatic class MyReducer extendsReducer<Text, Text, Text, Text> {

//拆分k2v2[...]数据

protectedvoid reduce(Text k2, Iterable<Text> v2, Context context) throwsIOException ,InterruptedException {

String address = ""; //地址

String person = ""; //人员

/**

1, [__北京,--张三]

**/

//迭代的是address或者person

for(Text text : v2) {

String tmp = text.toString();

if(tmp.startsWith("__")){

//如果是__开头的是address

address = tmp.substring(2);//从索引2开始截取字符串

}

if(tmp.startsWith("--")){

//如果是--开头的是person

person = tmp.substring(2);

}

}

context.write(newText(person), newText(address));

}

/**

k3=张三,v3=北京

**/

}

(2)配置输出:

?

job.setOutputKeyClass(Text.class); job.setOutputValueClass(Text.class);

3)测试: