MongoDB, as some of you may know, has a process-wide write lock. This has caused some degree of ridicule from database purists when they discover such a primitive locking model. Now per-database and per-collection locking is on the roadmap for MongoDB, but it's not here yet. What was announced in MongoDB version 2.0 was locking-with-yield. I was curious about the performance impact of the write lock and the improvement of lock-with-yield, so I decided to do a little benchmark, MongoDB 1.8 versus MongoDB 2.0.

PAGE FAULTS AND WORKING SETS

MongoDB uses the operating system's virtual memory system to handle writing data back to disk. The way this works is that MongoDB maps the entire database into the OS's virtual memory and lets the OS decide which pages are 'hot' and need to be in physical memory, as well as which pages are 'cold' and can be swapped out to disk. MongoDB also has a periodic process that makes sure that the database on disk is sync'd up with the database in memory. (MongoDB also includes a journalling system to make sure you don't lose data, but it's beyond the scope of this article.)
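To make the memory-mapping idea concrete, here is a minimal sketch using Python's standard `mmap` module. It is not MongoDB's code; the `mapped_write` and `demo` helpers and the one-page scratch file are made up for illustration. It shows only the general pattern: a write is a plain memory store into the mapping, and a separate flush asks the OS to push dirty pages back to the file.

```python
import mmap
import os
import tempfile


def mapped_write(path, offset, payload):
    """Write payload into a file through a memory mapping, then sync it."""
    with open(path, "r+b") as f:
        # Length 0 maps the whole (non-empty) file into virtual memory.
        with mmap.mmap(f.fileno(), 0) as mm:
            # This is an ordinary memory write; the OS decides when the
            # underlying page actually goes back to disk...
            mm[offset:offset + len(payload)] = payload
            # ...unless we explicitly ask for it, as a periodic sync would.
            mm.flush()


def demo():
    """Create a one-page scratch file, write through the mapping, read back."""
    fd, path = tempfile.mkstemp()
    try:
        os.write(fd, b"\0" * mmap.PAGESIZE)
        os.close(fd)
        mapped_write(path, 16, b"hello")
        with open(path, "rb") as f:
            return f.read()[16:21]
    finally:
        os.remove(path)
```

Calling `demo()` reads the bytes back through ordinary file I/O, confirming that the write made through the mapping reached the file.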

What this means is that you have very different behavior depending on whether the particular page you're reading or writing is in memory or not. If it is not in memory, this is called a page fault and the query or update has to wait for the very slow operation of loading the necessary data from disk. If the data is in memory (the OS has decided it's a 'hot' page), then an update is literally just writing to system RAM, a very fast operation. This is why 10gen (the makers of MongoDB) recommend that, if at all possible, you have sufficient RAM on your server to keep the entire working set (the set of data you access frequently) in memory at all times.

If you are able to do this, it turns out that the global write lock really doesn't affect you. Blocking reads for a few nanoseconds while a write completes turns out to be a non-issue. (I have not measured this, but I suspect that the acquisition of the global write lock takes significantly longer than the actual write.) But this is all kind of hand-waving at this point. I did end up measuring what happens to your query rate as you do more and more (non-faulting) writes and found very little effect.

2.0 TO THE RESCUE?

So the real problem happens when you're doing a write, you acquire the lock, and then you try to write and fault while holding the lock. Since a page fault can take around 40,000 times longer than a non-faulting memory operation, this obviously causes problems. In MongoDB version 2.0 and higher, this is addressed by detecting the likelihood of a page fault and releasing the lock before faulting. This allows other reads and writes to proceed while the data is brought in from disk. This is what I'm really trying to measure here: what is the effect of a so-called "write lock with yield" as opposed to a plain old "write lock"?
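The difference between the two locking strategies can be sketched with a toy model. This is not how MongoDB implements it; the `ToyStore` class, its `resident` set (standing in for the OS page cache), and `_load_page` (standing in for a slow disk read) are all hypothetical. The point is only where the slow load happens relative to the lock.

```python
import threading


class ToyStore:
    """Toy model of a store guarded by a single global write lock."""

    def __init__(self):
        self.lock = threading.Lock()
        self.resident = set()       # pages currently "in RAM" (assumption)
        self.data = {}
        self.faults_under_lock = 0  # slow loads taken while holding the lock

    def _load_page(self, page):
        # Stand-in for a page fault. In this single-threaded demo,
        # lock.locked() tells us whether the caller still holds the lock.
        if self.lock.locked():
            self.faults_under_lock += 1
        self.resident.add(page)

    def write_plain(self, page, value):
        # Plain write lock: a fault stalls everyone waiting on the lock.
        with self.lock:
            if page not in self.resident:
                self._load_page(page)
            self.data[page] = value

    def write_with_yield(self, page, value):
        # Lock with yield: if the page looks cold, drop the lock, fault the
        # page in, then retry the write once it is resident.
        while True:
            with self.lock:
                if page in self.resident:
                    self.data[page] = value
                    return
            self._load_page(page)  # lock is released here
```

With a fresh store, `write_plain(1, "a")` increments `faults_under_lock`, while `write_with_yield(2, "b")` leaves it unchanged, because the simulated fault happens with the lock released.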

BENCHMARK SETUP

First off, I didn't want everything I normally run on my laptop getting in the way, and I wanted the benchmark to be as reproducible as possible, so I decided to run it on Amazon EC2. (I have made the code I used available here - particularly fill_data.py, lib.py, and combined_benchmark.py if you'd like to try to replicate the results. Or you can just grab my image ami-63ca1e0a off AWS.) I used an m1.large instance running Ubuntu Server, with ephemeral storage used for the database store. I also reformatted the ephemeral disk from ext3 to ext4 and mounted it with noatime for performance purposes. MongoDB is installed from the 10gen .deb repositories as version 1.8.4 (mongodb18-10gen) and 2.0.2 (mongodb-10gen).

The database used in the test contains one collection of 150 million 112-byte documents. This was chosen to give a total size significantly in excess of the 7.5 GB of physical RAM allocated to the m1.large instances. (The collection with its index takes up 21.67 GB in virtual memory.) Journalling has been turned *off* in both 1.8 and 2.0.
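As a quick sanity check on those sizes, the arithmetic can be worked through as below. This sketch assumes decimal gigabytes throughout (the exact units behind the quoted figures are not stated), so treat the ratios as approximate. The `size_summary` helper is just for illustration.

```python
DOCS = 150_000_000   # documents in the collection
DOC_BYTES = 112      # bytes per document
RAM_GB = 7.5         # physical RAM on the m1.large
REPORTED_GB = 21.67  # collection plus index, as reported in virtual memory


def size_summary():
    """Return raw data size and how far each figure exceeds physical RAM."""
    raw_gb = DOCS * DOC_BYTES / 1e9  # document bytes only, in decimal GB
    return {
        "raw_gb": raw_gb,
        "raw_over_ram": raw_gb / RAM_GB,
        "reported_over_ram": REPORTED_GB / RAM_GB,
    }


if __name__ == "__main__":
    for name, value in size_summary().items():
        print(f"{name}: {value:.2f}")
```

The raw documents alone come to 16.8 GB, a bit over twice the available RAM, and the reported 21.67 GB is close to three times it, so a write to a uniformly random document will usually land on a page that is not in memory.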

What I wanted to measure is the effect of writes on reads that are occurring simultaneously. In order to simulate such a load, the Python benchmark code uses the gevent library to create two greenlets. One is reading randomly from its working set of 10,000 documents as fast as possible, intended to show non-faulting read performance. The second greenlet attempts to write to a random document of the 150 million total documents at a specified rate. I then measure the achieved write and read rate and create a scatter plot of the results.
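The shape of that load generator can be sketched as follows. This is not the actual combined_benchmark.py: to keep it runnable without extra dependencies, it swaps gevent greenlets for standard-library threads and replaces MongoDB with a hypothetical `FakeCollection` that only counts operations. The names `reader`, `writer`, and `run` are likewise made up for illustration.

```python
import random
import threading
import time

WORKING_SET = 10_000      # documents the reader touches
TOTAL_DOCS = 150_000_000  # documents the writer may touch


class FakeCollection:
    """Hypothetical stand-in for a MongoDB collection; counts calls only."""

    def __init__(self):
        self.reads = 0
        self.writes = 0

    def read(self, doc_id):
        self.reads += 1

    def write(self, doc_id):
        self.writes += 1


def reader(coll, stop, rng):
    # Read random documents from the small working set as fast as possible.
    while not stop.is_set():
        coll.read(rng.randrange(WORKING_SET))


def writer(coll, stop, rng, writes_per_sec, write_range):
    # Write to a random document at (at most) the requested rate.
    interval = 1.0 / writes_per_sec
    next_due = time.monotonic()
    while not stop.is_set():
        coll.write(rng.randrange(write_range))
        next_due += interval
        stop.wait(max(0.0, next_due - time.monotonic()))


def run(writes_per_sec, duration=0.5, write_range=TOTAL_DOCS):
    """Run both loops for `duration` seconds; return (reads/s, writes/s)."""
    coll = FakeCollection()
    stop = threading.Event()
    workers = [
        threading.Thread(target=reader, args=(coll, stop, random.Random(1))),
        threading.Thread(
            target=writer,
            args=(coll, stop, random.Random(2), writes_per_sec, write_range),
        ),
    ]
    start = time.monotonic()
    for w in workers:
        w.start()
    time.sleep(duration)
    stop.set()
    for w in workers:
        w.join()
    elapsed = time.monotonic() - start
    return coll.reads / elapsed, coll.writes / elapsed
```

Calling `run(writes_per_sec=20)` returns one (read rate, write rate) pair, which would be one point on the scatter plot. Passing `write_range=WORKING_SET` restricts writes to the documents being read.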

RESULTS

First, I wanted to show the effect of non-faulting writes on non-faulting reads. For this, I restrict writes to occur only in the first 10,000 documents (the same set that's being read). As expected, the write lock doesn't degrade performance much, and there's little difference between 1.8 and 2.0 in this case.

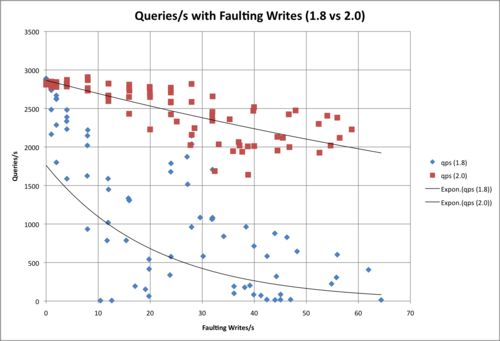

The next test shows the difference between 1.8 and 2.0 in fairly stark contrast. In 1.8, we can see an immediate drop-off in read performance in the presence of faulting writes, with virtually no reads getting through when we have 48 (!) writes per second. In contrast, 2.0 is able to maintain around 60% of its peak performance at that write level. (Going much beyond 48 faulting writes per second makes things difficult to measure, as you're using a significant percentage of the disk's total capacity just to service your page faults.)

CONCLUSION

The global write lock in MongoDB will certainly not be mourned when it's gone, but as I've shown here, its effect on MongoDB's performance is significantly less than you might expect. In the case of non-faulting reads and writes (the working set fits in RAM), the write lock degrades performance only slightly as the number of writes increases, and in the presence of faulting writes, the lock-with-yield approach of 2.0 mitigates most of the performance impact of the occasional faulting write.