Speech Synthesis on iOS

I. Abstract

Today I'd like to introduce a built-in system class for speech synthesis that most developers rarely use: AVSpeechSynthesizer.

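II. History

First, some background. Speech synthesis has been on the platform for quite a while: starting with iOS 5, the system already had it built into Siri, but at that time everything was private API. iOS 7 added a simple public API that works without a network connection and supports multiple languages.

You are probably eager to see how it is used. Before that, here is what the final result looks like, which should make the rest more interesting.

The demo has sound, but a GIF cannot capture audio, so all you can see is the text and the language switching. If you are interested, download the code, run it yourself, and listen to the result. Now let's look at the implementation.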

III. Implementation

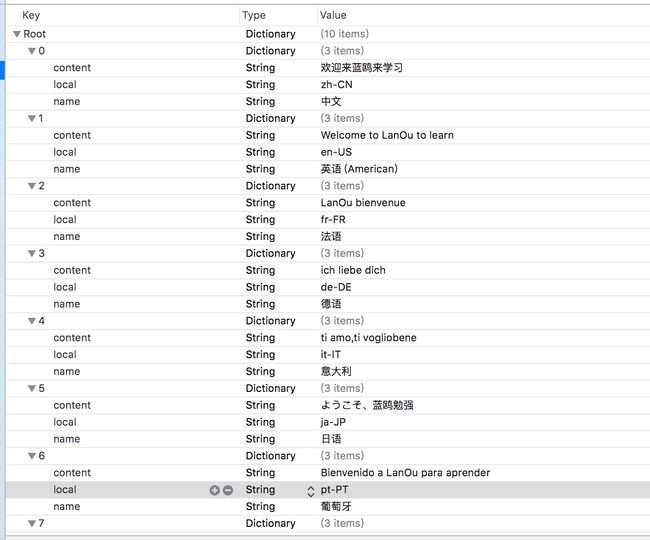

1.首先我们需要准备一个plist文件,里面包含语言类型,内容,国家.如图所示
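For reference, the shape of the data can be sketched as a dictionary literal. The inner keys `local`, `content`, and `name`, and the top-level keys "0", "1", and so on, are the ones this article's code reads; the sample values (language codes and text) are assumptions for illustration, not the contents of the original plist, and the function name `SampleLanguageEntries` is hypothetical.

```objc
#import <Foundation/Foundation.h>

// Hypothetical sample data mirroring the shape of Language.plist:
// top-level keys "0", "1", ... each map to one entry dictionary.
static NSDictionary<NSString *, NSDictionary<NSString *, NSString *> *> *SampleLanguageEntries(void) {
    return @{
        @"0": @{ @"local": @"zh-CN", @"name": @"Chinese",  @"content": @"你好,世界" },
        @"1": @{ @"local": @"en-US", @"name": @"English",  @"content": @"Hello, world" },
        @"2": @{ @"local": @"ja-JP", @"name": @"Japanese", @"content": @"こんにちは、世界" }
    };
}
```

An entry can then be looked up by its index string, for example `SampleLanguageEntries()[@"1"][@"local"]`.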

2. Read the plist file

    NSString *plistPath = [[NSBundle mainBundle] pathForResource:@"Language" ofType:@"plist"];
    NSDictionary *dictionary = [[NSDictionary alloc] initWithContentsOfFile:plistPath];

3. Import the AVFoundation framework (AVFoundation/AVFoundation.h)

Note: all of the speech synthesis APIs are declared in the AVFoundation framework.

    #import <AVFoundation/AVFoundation.h>

4. Define a member variable to hold the speech synthesizer, AVSpeechSynthesizer

    AVSpeechSynthesizer *av = [[AVSpeechSynthesizer alloc] init];

5. Define the voice object, AVSpeechSynthesisVoice

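The language type used here is read from the plist file.

    // dic is one entry of the plist dictionary
    AVSpeechSynthesisVoice *voice = [AVSpeechSynthesisVoice voiceWithLanguage:[dic objectForKey:@"local"]];

6. Instantiate the utterance object, AVSpeechUtterance, with the text to read aloud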

    AVSpeechUtterance *utterance = [[AVSpeechUtterance alloc] initWithString:[dic objectForKey:@"content"]];

7. Set the voice and the speaking rate

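Speaking rate for Chinese: about 0.1 is still acceptable.

Speaking rate for English: about 0.3 works.

The snippet below does not set an absolute value; it scales the utterance's default rate by 0.7.

    utterance.voice = voice;
    utterance.rate *= 0.7;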
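The `rate` property is only meaningful between `AVSpeechUtteranceMinimumSpeechRate` and `AVSpeechUtteranceMaximumSpeechRate`. One possible way to keep a scaled value inside that range is sketched below; the helper name `ScaledSpeechRate` is hypothetical and is not part of AVFoundation.

```objc
#import <AVFoundation/AVFoundation.h>
#import <math.h>

// Hypothetical helper: scales the system default rate by `scale` and clamps
// the result to the range that AVSpeechUtterance accepts.
static float ScaledSpeechRate(float scale) {
    float rate = AVSpeechUtteranceDefaultSpeechRate * scale;
    return fminf(fmaxf(rate, AVSpeechUtteranceMinimumSpeechRate),
                 AVSpeechUtteranceMaximumSpeechRate);
}
```

With this helper, the assignment could be written as `utterance.rate = ScaledSpeechRate(0.7f);`.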

8. Start speaking

    [av speakUtterance:utterance];

As you can see, the API is very simple to use. Here is the complete code.

    //
    //  ViewController.m
    //  ReadDiffrentLanguages
    //
    //  Created by Apple on 16/5/22.
    //  Copyright © 2016 liuYuGang. All rights reserved.
    //

    #import "ViewController.h"
    #import <AVFoundation/AVFoundation.h>

    @interface ViewController ()

    @property (weak, nonatomic) IBOutlet UILabel *labL;   // shows the language name
    @property (weak, nonatomic) IBOutlet UILabel *textL;  // shows the text being read
    // Held strongly so the synthesizer stays alive while it is speaking.
    @property (strong, nonatomic) AVSpeechSynthesizer *av;

    @end

    @implementation ViewController

    - (void)viewDidLoad {
        [super viewDidLoad];
        self.view.backgroundColor = [UIColor colorWithPatternImage:[UIImage imageNamed:@"f66129f9-6.jpg"]];
        self.av = [[AVSpeechSynthesizer alloc] init];
    }

    - (IBAction)speak:(id)sender {
        // Load the entries; the top-level keys are "0", "1", "2", ...
        NSString *plistPath = [[NSBundle mainBundle] pathForResource:@"Language" ofType:@"plist"];
        NSDictionary *dictionary = [[NSDictionary alloc] initWithContentsOfFile:plistPath];

        // Run the loop off the main thread so the sleep below does not block the UI.
        dispatch_async(dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_DEFAULT, 0), ^{
            for (NSUInteger i = 0; i < [dictionary count]; i++) {
                NSDictionary *dic = [dictionary objectForKey:[NSString stringWithFormat:@"%lu", (unsigned long)i]];

                AVSpeechSynthesisVoice *voice = [AVSpeechSynthesisVoice voiceWithLanguage:[dic objectForKey:@"local"]];
                AVSpeechUtterance *utterance = [[AVSpeechUtterance alloc] initWithString:[dic objectForKey:@"content"]];
                utterance.voice = voice;
                utterance.rate *= 0.7;
                [self.av speakUtterance:utterance];

                // UIKit must be updated on the main thread.
                dispatch_async(dispatch_get_main_queue(), ^{
                    self.labL.text = [dic objectForKey:@"name"];
                    self.textL.text = [dic objectForKey:@"content"];
                });

                // Crude pacing: wait a fixed 3.5 seconds before the next entry.
                [NSThread sleepForTimeInterval:3.5];
            }
        });
    }

    - (void)didReceiveMemoryWarning {
        [super didReceiveMemoryWarning];
        // Dispose of any resources that can be recreated.
    }

    @end

IV. Results

V. Conclusion

Quite simple, isn't it? Download the project and run it yourself to see and hear the result.
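The complete listing paces the loop with a fixed `[NSThread sleepForTimeInterval:3.5]`. A possible alternative, sketched here rather than taken from the original project, is to let `AVSpeechSynthesizerDelegate` report when each utterance finishes and only then start the next one. The class name `SequentialSpeaker`, its `willSpeakEntry` block, and the assumption that the entries have been collected into an array are all hypothetical; the keys `local` and `content` are the ones used above.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper class (not from the original project): speaks a list of
// entries one after another, advancing when the synthesizer reports completion.
@interface SequentialSpeaker : NSObject <AVSpeechSynthesizerDelegate>
// Scheduled on the main queue for each entry as it is handed to the synthesizer.
@property (copy, nonatomic) void (^willSpeakEntry)(NSDictionary *entry);
- (void)speakEntries:(NSArray<NSDictionary *> *)entries;
@end

@implementation SequentialSpeaker {
    AVSpeechSynthesizer *_synthesizer;   // kept alive for the whole sequence
    NSArray<NSDictionary *> *_entries;
    NSUInteger _nextIndex;
}

- (instancetype)init {
    if ((self = [super init])) {
        _synthesizer = [[AVSpeechSynthesizer alloc] init];
        _synthesizer.delegate = self;
    }
    return self;
}

- (void)speakEntries:(NSArray<NSDictionary *> *)entries {
    _entries = [entries copy];
    _nextIndex = 0;
    [self speakNext];
}

- (void)speakNext {
    if (_nextIndex >= _entries.count) { return; }   // nothing left to read
    NSDictionary *entry = _entries[_nextIndex++];

    AVSpeechUtterance *utterance = [[AVSpeechUtterance alloc] initWithString:entry[@"content"]];
    utterance.voice = [AVSpeechSynthesisVoice voiceWithLanguage:entry[@"local"]];
    utterance.rate *= 0.7;

    void (^notify)(NSDictionary *) = self.willSpeakEntry;
    if (notify) {
        dispatch_async(dispatch_get_main_queue(), ^{ notify(entry); });
    }
    [_synthesizer speakUtterance:utterance];
}

// AVSpeechSynthesizerDelegate: the current utterance has finished, so move on.
- (void)speechSynthesizer:(AVSpeechSynthesizer *)synthesizer
 didFinishSpeechUtterance:(AVSpeechUtterance *)utterance {
    [self speakNext];
}

@end
```

The owner would need to keep a strong reference to the `SequentialSpeaker` (for example in a property), because the synthesizer's `delegate` is a weak reference, and could update the two labels inside `willSpeakEntry`.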

Source code:

http://download.csdn.net/detail/baihuaxiu123/9528396
