SparkStreaming之Output Operations

Output Operation On DStream

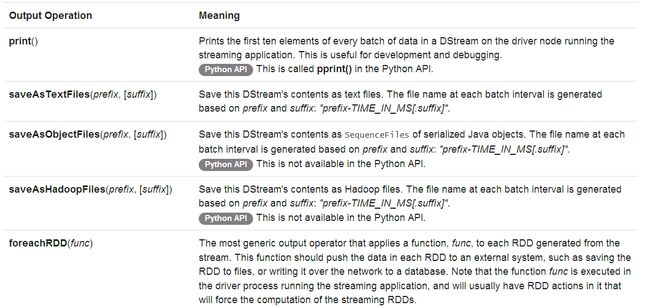

输出操作允许DStream的数据保存在外部系统中,像数据库或者文件系统。下面是官网给的说明:

1、print函数

/**

* Print the first ten elements of each RDD generated in this DStream. This is an output

* operator, so this DStream will be registered as an output stream and there materialized.

*/

def print(): Unit = ssc.withScope {

print(10)

}

/**

* Print the first num elements of each RDD generated in this DStream. This is an output

* operator, so this DStream will be registered as an output stream and there materialized.

*/

def print(num: Int): Unit = ssc.withScope {

def foreachFunc: (RDD[T], Time) => Unit = {

(rdd: RDD[T], time: Time) => {

val firstNum = rdd.take(num + 1)

// scalastyle:off println

println("-------------------------------------------")

println(s"Time: $time")

println("-------------------------------------------")

firstNum.take(num).foreach(println)

if (firstNum.length > num) println("...")

println()

// scalastyle:on println

}

}

foreachRDD(context.sparkContext.clean(foreachFunc), displayInnerRDDOps = false)

}从源码可以看错,输入print()不加参数是默认输入10行,如下就是默认输出的。

2、saveAsTextFiles函数

下面是我书写的保存形式:

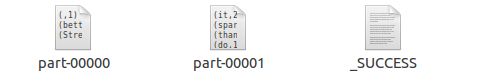

data.saveAsTextFiles("file:///root/application/test","txt")保存下来是 一个一个的文件夹,每个batch interval一个文件:

打开进去看是:

3、saveAsObjectFiles函数

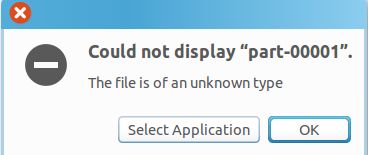

保存和saveAsTextFiles一样,但是不可以打开

原因是,先看看对這个函数的说明:

保存DStream的内容为一个序列化的文件SequenceFile。每一个批间隔的文件的文件名基于prefix和suffix生成。"prefix-TIME_IN_MS[.suffix]",在Python API中不可用。

就是说保存下来的是一个序列化的文件SequenceFile文件。

import org.apache.log4j.{Level, Logger}

import org.apache.spark.streaming.{Seconds, StreamingContext}

import org.apache.spark.{SparkConf, SparkContext}

object scalaOutput {

def main(args: Array[String]) {

Logger.getLogger("org.apache.spark").setLevel(Level.ERROR)

Logger.getLogger("org.eclipse.jetty.Server").setLevel(Level.OFF)

val conf = new SparkConf().setAppName("scalaOutput test").setMaster("local[4]")

val sc = new SparkContext(conf)

val ssc = new StreamingContext(sc,Seconds(2))

//set the Checkpoint directory

ssc.checkpoint("./Res")

//get the socket Streaming data

val socketStreaming = ssc.socketTextStream("master",9999)

val data = socketStreaming.map(x => (x, 1)).reduceByKeyAndWindow(_+_,

(a,b) => a+b*0

,Seconds(6),Seconds(2))

data.saveAsTextFiles("file:///root/application/test","txt")

//data.saveAsObjectFiles("file:///root/application/test","txt")

//data.saveAsHadoopFiles("file:///root/application/test","txt")

//data.saveAsHadoopFiles("hdfs://master:9000/examples/test-","txt")

/*data.map(evt =>{

val str = new ArrayBuffer[String]()//StringBuffer(String)

("string received: "+ str)

}

).saveAsTextFiles("file:///root/application/test","txt")*/

data.print()

ssc.start()

ssc.awaitTermination()

}

}