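Hadoop 2.4.1: a simple WordCount MapReduce program

I am new to Hadoop myself, and this series of posts simply records what I learn along the way. Today I wrote my own wordCount program.

1. Environment: CentOS 6.5 (32-bit), developing directly on Linux.

2. The core code is as follows:

2.1 The Mapper class.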

    package com.npf.hadoop;

    import java.io.IOException;

    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.util.StringUtils;

    public class WordCountMapper extends Mapper<LongWritable, Text, Text, LongWritable> {

        // Called once per map task, before any record is processed.
        @Override
        protected void setup(Mapper<LongWritable, Text, Text, LongWritable>.Context context) throws IOException, InterruptedException {
            System.out.println("WordCountMapper.setup()");
        }

        // Called once per input line: key is the byte offset of the line, value is its text.
        @Override
        protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            String[] words = StringUtils.split(value.toString(), ' ');
            for (String word : words) {
                // Emit (word, 1) for every word on the line.
                context.write(new Text(word), new LongWritable(1L));
            }
        }

        // Called once per map task, after the last record has been processed.
        @Override
        protected void cleanup(Mapper<LongWritable, Text, Text, LongWritable>.Context context) throws IOException, InterruptedException {
            System.out.println("WordCountMapper.cleanup()");
        }
    }
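
To make it easier to see what the map step emits, here is a minimal plain-Java sketch that runs without a cluster. `MapStepSketch` and its `map` method are hypothetical names I made up for illustration; they are not part of Hadoop. The sketch also skips empty tokens, which may differ from what `StringUtils.split` returns when a line contains consecutive spaces.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical helper for illustration only; not part of Hadoop.
class MapStepSketch {

    // Mirrors the idea of WordCountMapper.map(): split one line on spaces
    // and produce a (word, 1) pair for each non-empty token.
    static List<Map.Entry<String, Long>> map(String line) {
        List<Map.Entry<String, Long>> pairs = new ArrayList<>();
        for (String word : line.split(" ")) {
            if (!word.isEmpty()) { // assumption of this sketch: ignore empty tokens
                pairs.add(new SimpleEntry<>(word, 1L));
            }
        }
        return pairs;
    }

    public static void main(String[] args) {
        // Print each emitted pair as word<TAB>1.
        for (Map.Entry<String, Long> pair : map("hello world hello")) {
            System.out.println(pair.getKey() + "\t" + pair.getValue());
        }
    }
}
```

Note that the map step does no counting at all: a word that appears twice is simply emitted twice, and the summing is left to the reducer.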

2.2 The Reducer class.

    package com.npf.hadoop;

    import java.io.IOException;

    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    public class WordCountReducer extends Reducer<Text, LongWritable, Text, LongWritable> {

        // Called once per reduce task, before any key is processed.
        @Override
        protected void setup(Reducer<Text, LongWritable, Text, LongWritable>.Context context) throws IOException, InterruptedException {
            System.out.println("WordCountReducer.setup()");
        }

        // Called once per distinct word, with all the counts emitted for that word.
        @Override
        protected void reduce(Text word, Iterable<LongWritable> counts, Context context) throws IOException, InterruptedException {
            long count = 0L;
            for (LongWritable element : counts) {
                count += element.get();
            }
            // Emit (word, total).
            context.write(word, new LongWritable(count));
        }

        // Called once per reduce task, after the last key has been processed.
        @Override
        protected void cleanup(Reducer<Text, LongWritable, Text, LongWritable>.Context context) throws IOException, InterruptedException {
            System.out.println("WordCountReducer.cleanup()");
        }
    }
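
The reduce step can be tried out locally in the same way. The following is only a rough sketch: `ReduceStepSketch`, `reduce`, and `countWords` are hypothetical names, and the `TreeMap` grouping is my stand-in for the shuffle phase that Hadoop performs between map and reduce, not how Hadoop implements it.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical helper for illustration only; not part of Hadoop.
class ReduceStepSketch {

    // Mirrors the idea of WordCountReducer.reduce(): sum all counts for one word.
    static long reduce(String word, Iterable<Long> counts) {
        long total = 0L;
        for (long count : counts) {
            total += count;
        }
        return total;
    }

    // Stand-in for the shuffle: group a 1 under each word, then reduce every group.
    static Map<String, Long> countWords(List<String> words) {
        Map<String, List<Long>> grouped = new TreeMap<>();
        for (String word : words) {
            grouped.computeIfAbsent(word, k -> new ArrayList<>()).add(1L);
        }
        Map<String, Long> result = new TreeMap<>();
        for (Map.Entry<String, List<Long>> entry : grouped.entrySet()) {
            result.put(entry.getKey(), reduce(entry.getKey(), entry.getValue()));
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(countWords(Arrays.asList("hello", "world", "hello")));
    }
}
```

I used a `TreeMap` so the groups are visited in sorted key order, which is roughly what a single reducer sees on a real cluster.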

2.3 The runner, the main entry point of the program.

    package com.npf.hadoop;

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    /**
     * Entry point that configures the WordCount job and submits it to the cluster.
     *
     * @author root
     */
    public class WordCountRunner {

        public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
            Configuration conf = new Configuration();
            conf.set("fs.defaultFS", "hdfs://devcitibank:9000");

            Job job = Job.getInstance(conf);
            job.setJarByClass(WordCountRunner.class);

            // mapper
            job.setMapperClass(WordCountMapper.class);
            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(LongWritable.class);

            // reducer
            job.setReducerClass(WordCountReducer.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(LongWritable.class);

            // Input directory and output directory in HDFS.
            // The output directory must not exist before the job runs.
            FileInputFormat.setInputPaths(job, "hdfs://devcitibank:9000/wordcount/srcdata");
            FileOutputFormat.setOutputPath(job, new Path("hdfs://devcitibank:9000/wordcount/outputdata"));

            // Submit the job, wait for it to finish, and report success through the exit code.
            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }
    }

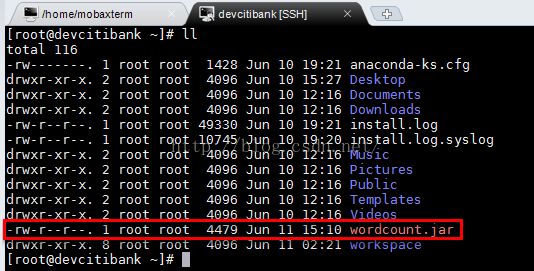

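3. Using Eclipse, we export the program as a Jar into the /root directory and name it wordcount.jar.

4. OK, let's check that the Jar really exists under /root/.

5. Check that the Hadoop cluster is running.

6. Check that the files we want to process are in the /wordcount/srcdata/ directory on the cluster.

7. Submit wordcount.jar to the Hadoop cluster for processing.

8. Once the job has finished successfully, we look at the result on the Hadoop cluster.

9. We can also check the running status of the Job in the web UI.

10. The source code is hosted on GitHub: wordcount.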
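
As a small follow-up to step 8: with the default text output format, each line of a result file such as part-r-00000 holds a word and its count separated by a tab. Below is a minimal sketch, under that assumption, of reading such lines back into a map. `OutputLineSketch` and `parse` are hypothetical names, and the sample lines in `main` are made up for illustration; they are not the actual output of my job.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper for illustration only; not part of Hadoop.
class OutputLineSketch {

    // Parse lines of the form word<TAB>count into a map, keeping file order.
    static Map<String, Long> parse(List<String> lines) {
        Map<String, Long> counts = new LinkedHashMap<>();
        for (String line : lines) {
            // The count is the last tab-separated field on the line.
            int tab = line.lastIndexOf('\t');
            if (tab < 0) {
                continue; // skip lines that do not look like word<TAB>count
            }
            String word = line.substring(0, tab);
            long count = Long.parseLong(line.substring(tab + 1).trim());
            counts.put(word, count);
        }
        return counts;
    }

    public static void main(String[] args) {
        // Made-up sample lines, not real job output.
        List<String> sample = Arrays.asList("hello\t2", "world\t1");
        System.out.println(parse(sample));
    }
}
```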