Installing and Configuring Hive with a MySQL Metastore (apache-hive-2.0.1-bin.tar.gz)

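Introduction: Hive is a powerful data warehouse tool with an SQL-like query language. This article describes how to set up a Hive development and test environment.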

1. What is Hive?

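Hive is a data warehouse tool built on Hadoop. It maps structured data files to database tables, provides simple SQL query functionality, and translates SQL statements into MapReduce jobs for execution. Its main advantage is a low learning curve: simple MapReduce-style statistics can be written as SQL-like statements instead of dedicated MapReduce applications, which makes Hive well suited to statistical analysis in a data warehouse.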

2. Prerequisites for installing Hive

2.1 A Hadoop cluster is already installed.

2.2 This article uses CentOS 6 as the development environment.

3. Installation steps

3.1 Download the Hive package: apache-hive-2.0.1-bin.tar.gz

3.2 Extract it into the /opt/my directory

tar xzvf apache-hive-2.0.1-bin.tar.gz -C /opt/my

3.3 The extracted directory name is long, so shorten it to hive

# cd /opt/my
# mv apache-hive-2.0.1-bin hive
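Steps 3.2 and 3.3 can also be combined into one small shell function. This is a minimal sketch, not part of the original procedure: the function name `install_hive_tarball` is hypothetical, and it assumes the archive unpacks into a single top-level directory named after the file (apache-hive-2.0.1-bin), which is how the Apache binary release is laid out.

```shell
# Hypothetical helper: unpack a Hive binary tarball into a target directory
# and rename the unpacked folder to a shorter name (default: hive).
install_hive_tarball() {
    local tarball="$1"              # e.g. apache-hive-2.0.1-bin.tar.gz
    local target_dir="$2"           # e.g. /opt/my
    local short_name="${3:-hive}"   # final directory name
    # Assumption: the archive's top-level folder equals the file name minus .tar.gz
    local unpacked
    unpacked="$(basename "$tarball" .tar.gz)"

    # Refuse to continue if the short name is taken; mv would otherwise
    # move the new folder *inside* the existing one.
    if [ -e "$target_dir/$short_name" ]; then
        echo "$target_dir/$short_name already exists" >&2
        return 1
    fi
    mkdir -p "$target_dir" || return 1
    tar xzf "$tarball" -C "$target_dir" || return 1
    mv "$target_dir/$unpacked" "$target_dir/$short_name"
}

# Usage (as root):
# install_hive_tarball apache-hive-2.0.1-bin.tar.gz /opt/my
```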

3.4 Set the environment variables

export HIVE_HOME=/opt/my/hive

export PATH=$PATH:$HIVE_HOME/bin

export CLASSPATH=$CLASSPATH:$HIVE_HOME/bin
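`export` only affects the current shell session, so these variables are usually also written to a profile script. A minimal sketch, assuming /etc/profile.d/hive.sh as the target file (a common CentOS location, not one the original setup specifies); `write_hive_profile` is a hypothetical name.

```shell
# Hypothetical helper: persist the Hive variables from step 3.4 in a profile
# script so that new login shells pick them up.
write_hive_profile() {
    local profile="$1"      # e.g. /etc/profile.d/hive.sh (assumed location)
    local hive_home="$2"    # e.g. /opt/my/hive
    # HIVE_HOME is written with its concrete value ...
    printf 'export HIVE_HOME=%s\n' "$hive_home" > "$profile" || return 1
    # ... while the quoted heredoc keeps $PATH, $CLASSPATH and $HIVE_HOME
    # literal, so they are expanded each time the profile is sourced.
    cat >> "$profile" <<'EOF'
export PATH=$PATH:$HIVE_HOME/bin
export CLASSPATH=$CLASSPATH:$HIVE_HOME/bin
EOF
}

# Usage:
# write_hive_profile /etc/profile.d/hive.sh /opt/my/hive
# source /etc/profile.d/hive.sh
```

After sourcing the file (or logging in again), `echo $HIVE_HOME` should print /opt/my/hive.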

3.5 Edit hive-env.sh. In $HIVE_HOME/conf, create it from the template first: mv hive-env.sh.template hive-env.sh. Then set the following:

# Set HADOOP_HOME to point to a specific hadoop install directory

HADOOP_HOME=/opt/my/hadoop

# Hive Configuration Directory can be controlled by:

export HIVE_CONF_DIR=/opt/my/hive/conf
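The hive-env.sh edit can be scripted as well. A minimal sketch with a hypothetical function name; it assumes the template sits in $HIVE_HOME/conf as hive-env.sh.template, and it copies rather than renames it so the template remains available.

```shell
# Hypothetical helper: create conf/hive-env.sh from the shipped template and
# append the two settings used in step 3.5.
configure_hive_env() {
    local conf_dir="$1"      # e.g. /opt/my/hive/conf
    local hadoop_home="$2"   # e.g. /opt/my/hadoop
    local env_file="$conf_dir/hive-env.sh"

    # cp instead of mv keeps the template around for later reference.
    if [ ! -f "$env_file" ]; then
        cp "$conf_dir/hive-env.sh.template" "$env_file" || return 1
    fi
    {
        echo "HADOOP_HOME=$hadoop_home"
        echo "export HIVE_CONF_DIR=$conf_dir"
    } >> "$env_file"
}

# Usage:
# configure_hive_env /opt/my/hive/conf /opt/my/hadoop
```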

3.6 By default the Hadoop heap size used by Hive is 1 GB. It can be increased if you have special requirements; the default is sufficient here.
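The heap size is controlled by HADOOP_HEAPSIZE (in megabytes) in hive-env.sh. The template ships it as a commented-out `# export HADOOP_HEAPSIZE=1024` line; to change it, uncomment that line and set a new value. The 2048 below is only an illustration, not a recommendation.

```shell
# In $HIVE_HOME/conf/hive-env.sh:
# heap size (in MB) of the JVM started by the hive script.
# The template ships this line commented out with a value of 1024;
# 2048 is only an example of a larger setting.
export HADOOP_HEAPSIZE=2048
```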

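3.7 Edit hive-site.xml, mainly to change the database connection settings. Create it from the template first: mv hive-default.xml.template hive-site.xml. Then set the following properties: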

<property>
  <name>hive.metastore.local</name>
  <value>true</value>
</property>

<property>
  <name>javax.jdo.option.ConnectionURL</name>
  <value>jdbc:mysql://192.168.1.100:3306/hive?characterEncoding=UTF-8</value>
  <description>JDBC connect string for a JDBC metastore</description>
</property>

<property>
  <name>javax.jdo.option.ConnectionDriverName</name>
  <value>com.mysql.jdbc.Driver</value>
  <description>Driver class name for a JDBC metastore</description>
</property>

<property>
  <name>javax.jdo.option.ConnectionUserName</name>
  <value>root</value>
  <description>username to use against metastore database</description>
</property>

<property>
  <name>javax.jdo.option.ConnectionPassword</name>
  <value>hadoop</value>
  <description>password to use against metastore database</description>
</property>

Note: Hive 0.10 and later no longer read hive.metastore.local; the metastore runs inside the Hive process whenever hive.metastore.uris is empty, so the first property is harmless but can be omitted.

Save and exit.
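Since hive-site.xml is edited by hand, one unclosed tag (for example a `<value>` missing its `/`) is enough to make Hive fail while loading its configuration. A quick well-formedness check after saving can catch this early. A minimal sketch, assuming `xmllint` from the libxml2 package is installed; `check_hive_xml` is a hypothetical name.

```shell
# Hypothetical helper: check that a hand-edited Hive config file is
# well-formed XML before starting Hive. Requires xmllint (libxml2).
check_hive_xml() {
    local xml_file="$1"    # e.g. /opt/my/hive/conf/hive-site.xml
    # --noout: parse only, do not print the document; the exit status is
    # non-zero (and errors go to stderr) if the file is malformed.
    if xmllint --noout "$xml_file"; then
        echo "OK: $xml_file is well-formed"
    else
        echo "ERROR: $xml_file is not well-formed XML" >&2
        return 1
    fi
}

# Usage:
# check_hive_xml /opt/my/hive/conf/hive-site.xml
```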

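3.8 Install MySQL as Hive's metastore database

For installing MySQL, see my other article: http://blog.csdn.net/nengyu/article/details/51615836

In addition, the MySQL JDBC driver jar must be copied into $HIVE_HOME/lib (here /opt/my/hive/lib).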
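A minimal sketch of that copy step with a basic sanity check. The function name is hypothetical, and the jar name in the usage line (mysql-connector-java-5.1.39-bin.jar) is only an example; use whichever Connector/J 5.1 jar you downloaded, since that series provides the com.mysql.jdbc.Driver class configured in hive-site.xml.

```shell
# Hypothetical helper: copy a MySQL Connector/J jar into $HIVE_HOME/lib
# so the metastore can load the JDBC driver.
install_mysql_driver() {
    local jar="$1"          # path to the Connector/J jar
    local hive_home="$2"    # e.g. /opt/my/hive
    if [ ! -f "$jar" ]; then
        echo "driver jar not found: $jar" >&2
        return 1
    fi
    cp "$jar" "$hive_home/lib/" || return 1
    # Print the matching jar(s) now in lib/; grep fails if none is there.
    ls "$hive_home/lib/" | grep -i 'mysql-connector'
}

# Usage (example jar name):
# install_mysql_driver mysql-connector-java-5.1.39-bin.jar /opt/my/hive
```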

3.9 Some online guides I consulted end at this point and go straight to starting Hive, but doing so fails with the following error:

Exception in thread "main" java.lang.RuntimeException: Hive metastore database is not initialized. Please use schematool (e.g. ./schematool -initSchema -dbType ...) to create the schema. If needed, don't forget to include the option to auto-create the underlying database in your JDBC connection string (e.g. ?createDatabaseIfNotExist=true for mysql)

3.10 I fixed it by initializing the metastore schema:

Run: # schematool -dbType mysql -initSchema
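The initialization can be paired with a status check. A minimal sketch with a hypothetical function name, assuming $HIVE_HOME/bin is on the PATH as set in 3.4: `schematool -info` prints the metastore connection settings and the schema version it finds, which confirms that -initSchema actually reached the MySQL database.

```shell
# Hypothetical helper: create the metastore schema in MySQL, then print the
# schema information as a confirmation. Assumes $HIVE_HOME/bin is on PATH.
init_hive_metastore() {
    # -initSchema creates the metastore tables, connecting with the JDBC
    # URL, driver, user name and password configured in hive-site.xml.
    schematool -dbType mysql -initSchema || return 1
    # -info prints the connection settings and the schema version it finds.
    schematool -dbType mysql -info
}
```

Run it once against an empty database; -initSchema is not meant to be repeated on an already initialized metastore.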

3.11 After that, starting Hive still fails, this time with the following error:

Exception in thread "main" java.lang.IllegalArgumentException: java.net.URISyntaxException: Relative path in absolute URI: ${system:java.io.tmpdir%7D/$%7Bsystem:user.name%7D
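The URI in this message is the unexpanded placeholder `${system:java.io.tmpdir}/${system:user.name}` that hive-default.xml.template uses as the default for several local directory properties. Besides editing the affected properties one by one, a widely used shortcut is to substitute the placeholders throughout hive-site.xml. A minimal sketch, assuming GNU sed (as on CentOS) and /opt/my/hive/iotmp as the target directory; `fix_hive_tmp_paths` is a hypothetical name, and unlike a per-property edit it rewrites every property that uses these placeholders.

```shell
# Hypothetical helper: replace the ${system:...} placeholders that trigger
# "Relative path in absolute URI" with concrete values, in place.
fix_hive_tmp_paths() {
    local site_xml="$1"                 # e.g. /opt/my/hive/conf/hive-site.xml
    local tmp_dir="$2"                  # e.g. /opt/my/hive/iotmp
    local user_name="${3:-$(id -un)}"   # defaults to the current user

    mkdir -p "$tmp_dir" || return 1
    cp "$site_xml" "$site_xml.bak" || return 1   # backup before editing in place
    # '|' is the sed delimiter because the replacement values contain '/'.
    # The single-quoted parts reach sed unchanged; \$ matches a literal '$'.
    sed -i \
        -e 's|\${system:java\.io\.tmpdir}|'"$tmp_dir"'|g' \
        -e 's|\${system:user\.name}|'"$user_name"'|g' \
        "$site_xml"
}

# Usage:
# fix_hive_tmp_paths /opt/my/hive/conf/hive-site.xml /opt/my/hive/iotmp
```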

3.12 Don't panic. Create an iotmp directory under the hive directory (mkdir /opt/my/hive/iotmp), then change the following properties in hive-site.xml so that they point to it:

<property>
  <name>hive.querylog.location</name>
  <value>/opt/my/hive/iotmp</value>
  <description>Location of Hive run time structured log file</description>
</property>

<property>
  <name>hive.exec.local.scratchdir</name>
  <value>/opt/my/hive/iotmp</value>
  <description>Local scratch space for Hive jobs</description>
</property>

<property>
  <name>hive.downloaded.resources.dir</name>
  <value>/opt/my/hive/iotmp</value>
  <description>Temporary local directory for added resources in the remote file system.</description>
</property>

Save and exit, then start Hive:

[root@Master bin]# ./hive
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/opt/my/hive/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/opt/my/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Logging initialized using configuration in jar:file:/opt/my/hive/lib/hive-common-2.0.1.jar!/hive-log4j2.properties
Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. tez, spark) or using Hive 1.X releases.
hive>

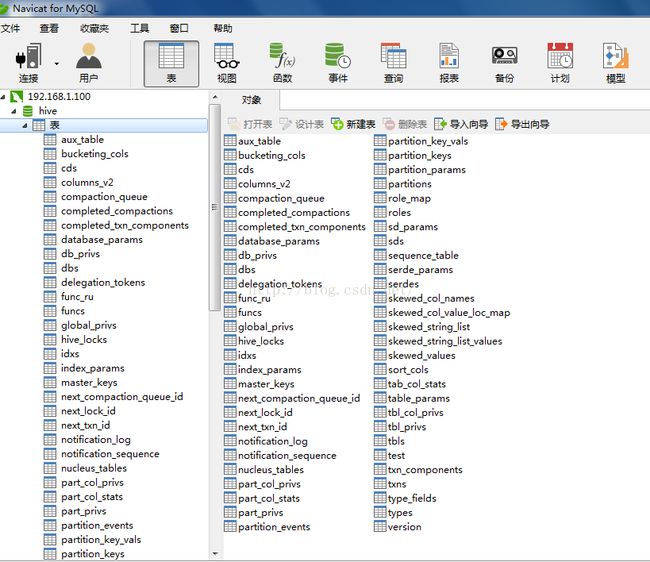
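Besides starting the interactive shell, the installation can be checked non-interactively, which is handy in scripts. A minimal sketch with a hypothetical function name: `hive -S -e` runs one statement in silent mode, and a freshly initialized metastore should list the built-in `default` database.

```shell
# Hypothetical helper: non-interactive check that the Hive CLI starts and
# can reach the metastore.
hive_smoke_test() {
    local out
    # -S (silent) suppresses informational messages; -e runs the quoted
    # statement and exits.
    out="$(hive -S -e 'SHOW DATABASES;')" || return 1
    # A freshly initialized metastore contains the "default" database.
    printf '%s\n' "$out" | grep -qw 'default'
}

# Usage:
# hive_smoke_test && echo "Hive is up"
```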

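3.13 Check the Hive metastore in MySQL

Log in with a MySQL client tool using user root and password hadoop. I had created the hive database in MySQL; after logging in, it looks like the screenshot below:

Pretty neat!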
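The same check can be done from the mysql command line: after schematool has run, the hive database should contain metastore tables such as DBS, TBLS and VERSION. A minimal sketch with a hypothetical function name, defaulting to the host and user from the hive-site.xml settings in 3.7; `-p` without a value makes mysql prompt for the password instead of exposing it on the command line.

```shell
# Hypothetical helper: list the tables schematool created in the metastore DB.
show_metastore_tables() {
    local host="${1:-192.168.1.100}"   # MySQL host from the JDBC URL
    local user="${2:-root}"            # metastore user from hive-site.xml
    # -p with no value prompts for the password interactively;
    # -e runs the given statements and exits.
    mysql -h "$host" -P 3306 -u "$user" -p -e 'USE hive; SHOW TABLES;'
}

# Usage:
# show_metastore_tables
```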