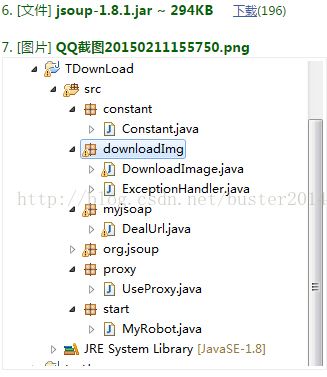

webcrawler: a multithreaded Jsoup crawler for photos of beautiful women

Source: http://www.oschina.net/code/snippet_1447924_45939

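The multithreading part is adapted from code found online. I added the Jsoup module and proxy support myself. It depends on jsoup-1.8.1.jar (the required jar can be downloaded online).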
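The proxy support mentioned above is implemented further down by the UseProxy class (section 4), which sets the JVM-wide `http.proxyHost` and `http.proxyPort` system properties. As a possible alternative, here is a minimal sketch that attaches the proxy to a single connection through the standard `java.net.Proxy` class. It is not part of the original code: the class name `ProxySketch`, the helpers `httpProxy` and `prepare`, and the address `proxy.example.com` are all hypothetical.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Proxy;
import java.net.URL;
import java.net.URLConnection;

// Hypothetical helper class, not part of the original project.
class ProxySketch {

    // Builds an HTTP proxy descriptor without touching JVM-wide system properties.
    static Proxy httpProxy(String host, int port) {
        // createUnresolved defers the DNS lookup until a connection is actually made.
        return new Proxy(Proxy.Type.HTTP, InetSocketAddress.createUnresolved(host, port));
    }

    // Prepares (but does not open) a connection routed through the given proxy,
    // with the same timeout applied to both connecting and reading.
    static URLConnection prepare(URL url, Proxy proxy, int timeoutMillis) throws IOException {
        URLConnection conn = url.openConnection(proxy);
        conn.setConnectTimeout(timeoutMillis);
        conn.setReadTimeout(timeoutMillis);
        return conn;
    }

    public static void main(String[] args) throws IOException {
        Proxy proxy = httpProxy("proxy.example.com", 8080); // assumed proxy address
        URLConnection conn = prepare(new URL("http://example.com/a.jpg"), proxy, 12000);
        // No network traffic happens until conn.getInputStream() is called.
        System.out.println(proxy.type() + " timeout=" + conn.getReadTimeout());
    }
}
```

With this approach only the connections that need the proxy use it, and other code running in the same JVM is unaffected.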

1. Java code:

package constant;

public class Constant {

public static final String proxyHost = "*.*.*.*";// proxy IP address

public static final String proxyPort = "8080";// proxy port

public static final String AGENT = "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/21.0.1180.79";

public static final String IMAGE_PATH = "D:\\IMAGE";// directory where images are saved

}

2. Java code:

package downloadImg;

import java.io.BufferedInputStream;

import java.io.BufferedOutputStream;

import java.io.File;

import java.io.FileNotFoundException;

import java.io.FileOutputStream;

import java.io.IOException;

import java.net.MalformedURLException;

import java.net.URL;

import java.text.SimpleDateFormat;

import java.util.List;

import constant.Constant;

import proxy.UseProxy;

public class DownloadImage implements Runnable {

private int imageCount = 0;

private File image = null;

private URL imageUrl = null;

private List<String> images = null;

private BufferedInputStream inputStream = null;

private BufferedOutputStream outputStream = null;

public DownloadImage(List<String> image) {

this.images = image;

}

@Override

public void run() {

// TODO Auto-generated method stub

SimpleDateFormat dateFormat = new SimpleDateFormat("yyyy-MM-dd_HHmmssSSS");

try {

while (!images.isEmpty()) {

new UseProxy();

imageUrl = new URL(images.remove(0));

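// Configure and read from a single connection; the timeouts only apply to the connection they are set on.

java.net.URLConnection connection = imageUrl.openConnection();

connection.setConnectTimeout(12000);

connection.setReadTimeout(12000);

inputStream = new BufferedInputStream(connection.getInputStream());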

image = new File(Constant.IMAGE_PATH + "\\" /*+ dateFormat.format(new Date())*/+ getFileName(imageUrl));

if (!image.getParentFile().exists()) {

image.getParentFile().mkdirs();

}

outputStream = new BufferedOutputStream(new FileOutputStream(image));

byte[] buf = new byte[2048];

int length = inputStream.read(buf);

while (length != -1) {

outputStream.write(buf, 0, length);

length = inputStream.read(buf);

}

next();

}

// wait();

} catch (MalformedURLException e) {

e.printStackTrace();

} catch (FileNotFoundException e) {

e.printStackTrace();

} catch (IOException e) {

System.out.println("Failed to resolve link---" + imageUrl);

e.printStackTrace();

} finally {

try {

next();

} catch (IOException e) {

e.printStackTrace();

}

}

}

private String getFileName(URL url) {

String fileName = url.getFile();

return fileName.substring(fileName.lastIndexOf('/') + 1);

}

public void next() throws IOException {

if (inputStream != null) {

inputStream.close();

}

if (outputStream != null) {

outputStream.close();

}

image = null;

// images = null;

imageUrl = null;

inputStream = null;

outputStream = null;

System.gc();

System.out.println("DownloadImage >>> " + ++imageCount);

}

}

3. Java code:

package myjsoap;

import java.util.List;

import org.jsoup.Connection;

import org.jsoup.Jsoup;

import org.jsoup.nodes.Document;

import org.jsoup.nodes.Element;

import org.jsoup.select.Elements;

import proxy.UseProxy;

import constant.Constant;

import downloadImg.DownloadImage;

public class DealUrl implements Runnable{

// queue of URLs that have already been parsed

private List<String> visited = null;

// queue of URLs that have not been parsed yet

private List<String> hrefs = null;

// queue of image links

private List<String> images = null;

// number of links parsed so far

private int analyze = 0;

private int count = 0;

public DealUrl(List<String> hrefs, List<String> visited, List<String> images) {

this.hrefs = hrefs;

this.visited = visited;

this.images = images;

}

public void run() {

while (!hrefs.isEmpty()) {

// move the URL that is about to be parsed from hrefs to visited

String urlTmp = hrefs.remove(0);

if (visited.indexOf(urlTmp) != -1)

continue;

visited.add(urlTmp);

// Use urlTmp directly: another crawler thread may already have appended to visited.

Document doc = getUrlDoc(urlTmp);

if (doc == null)

continue;

System.out.println("Parsed link " + ++analyze + " ... " + urlTmp);

Elements hrefLinks = doc.select("a[href]");

Elements imgLinks = doc.select("img[src]");

if (hrefLinks != null)

for (Element link : hrefLinks) {

String newUrl = link.attr("abs:href");

if (newUrl.indexOf("ququ") != -1)

hrefs.add(newUrl);

// System.out.println(++count + " >>> " +

// link.attr("abs:href"));

}

if (imgLinks == null)

continue;

for (Element link : imgLinks) {

String temImgUrl = link.attr("abs:src");

if (temImgUrl.indexOf(".jpg") != -1 && images.indexOf(temImgUrl) == -1) {

images.add(link.attr("abs:src"));

System.out.println("img:"+link.attr("abs:src"));

}

}

new Thread(new DownloadImage(images)).start();

}

System.gc();

}

public Document getUrlDoc(String url){

Document doc = null;

try {

new UseProxy();// comment this out if you are not behind a proxy

Connection connection = Jsoup.connect(url);

connection.userAgent(Constant.AGENT);

doc = connection.get();

} catch (Exception e) {

System.out.println("Connection failed: " + url);

return null;

}

return doc;

}

}
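DealUrl checks `visited.indexOf(urlTmp)` and then calls `visited.add(urlTmp)` as two separate steps, and likewise tests `hrefs.isEmpty()` before `hrefs.remove(0)`. With two crawler threads sharing the lists, another thread can act between the two steps even though each list is synchronized. A minimal sketch of one way to make both operations atomic, using a concurrent set and queue from `java.util.concurrent`, is shown below. The class `Frontier` and its methods `offer` and `poll` are hypothetical names, not part of the original code.

```java
import java.util.Queue;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentLinkedQueue;

// Hypothetical replacement for the hrefs/visited pair of lists.
class Frontier {

    // URLs waiting to be crawled.
    private final Queue<String> pending = new ConcurrentLinkedQueue<>();

    // Every URL that has ever been offered, crawled or not.
    private final Set<String> seen = ConcurrentHashMap.newKeySet();

    // Enqueues a URL only the first time it is offered.
    // Set.add on a concurrent key set is atomic, so two threads cannot both win.
    boolean offer(String url) {
        if (seen.add(url)) {
            pending.add(url);
            return true;
        }
        return false;
    }

    // Returns the next URL to crawl, or null when nothing is pending right now.
    String poll() {
        return pending.poll();
    }

    public static void main(String[] args) {
        Frontier frontier = new Frontier();
        frontier.offer("http://example.com/");
        frontier.offer("http://example.com/"); // duplicate, ignored
        String next;
        while ((next = frontier.poll()) != null) {
            System.out.println("would crawl " + next);
        }
    }
}
```

A crawler loop written against this sketch would call `poll()` and stop on `null` instead of combining `isEmpty()` with `remove(0)`, and the set lookup avoids the linear scan that `indexOf` performs on a list.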

4. Java code:

package proxy;

import java.util.Properties;

import constant.Constant;

public class UseProxy {

public UseProxy() {

Properties prop = System.getProperties();

prop.setProperty("http.proxyHost", Constant.proxyHost);

prop.setProperty("http.proxyPort", Constant.proxyPort);

}

}

5. Java code:

package start;

import java.util.ArrayList;

import java.util.Collections;

import java.util.List;

import java.util.concurrent.ExecutorService;

import java.util.concurrent.Executors;

import myjsoap.DealUrl;

public class MyRobot {

private List<String> hrefs = Collections.synchronizedList(new ArrayList<String>());

private List<String> visited = Collections.synchronizedList(new ArrayList<String>());

private List<String> images = Collections.synchronizedList(new ArrayList<String>());

public MyRobot(String href) {

hrefs.add(href);

}

public void run() throws InterruptedException {

ExecutorService pool = Executors.newFixedThreadPool(2);

pool.execute(new DealUrl(hrefs, visited, images));

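// Presumably gives the first crawler time to queue some links; a second crawler that finds hrefs empty exits at once.

Thread.sleep(8000);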

pool.execute(new DealUrl(hrefs, visited, images));

pool.shutdown();

}

public static void main(String[] args) throws InterruptedException {

MyRobot robot = new MyRobot("http://500ququ.com/");

robot.run();

}

}
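MyRobot runs its two crawlers on a fixed pool, but DealUrl starts a new `Thread` for DownloadImage after every parsed page, so the number of download threads is unbounded, and `run()` returns right after `pool.shutdown()` without waiting for the work to finish. The sketch below shows the general pattern of a bounded pool that is shut down and then awaited. It assumes placeholder tasks instead of real downloads, and `PoolSketch` and `runAll` are hypothetical names.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical demonstration class, not part of the original project.
class PoolSketch {

    // Runs `tasks` placeholder jobs on at most `threads` workers
    // and returns how many of them completed.
    static int runAll(int tasks, int threads) throws InterruptedException {
        AtomicInteger done = new AtomicInteger();
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        for (int i = 0; i < tasks; i++) {
            // A real crawler would submit a download job here.
            pool.execute(() -> done.incrementAndGet());
        }
        pool.shutdown(); // stop accepting new jobs; queued jobs still run
        if (!pool.awaitTermination(30, TimeUnit.SECONDS)) {
            pool.shutdownNow(); // give up on jobs that are still running
        }
        return done.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("completed " + runAll(10, 2) + " jobs");
    }
}
```

Submitting downloads to a shared fixed pool like this caps concurrency at the pool size, and `awaitTermination` lets the caller know when all queued work has actually finished.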