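Big data keeps getting more popular, and I have been working in this area for quite a while. Drawing on my own experience, this post shows you how to use MapReduce. Below, MapReduce is abbreviated as MR.

This post explains each MR concept through practical examples.

![Understanding and Applying MapReduce in Hadoop, figure 1](http://img.e-com-net.com/image/info5/53d60d625beb480e8cf752e55ad0dc30.png)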

| 3. How to write the map() and reduce() functions in MR |

![Understanding and Applying MapReduce in Hadoop, figure 2](http://img.e-com-net.com/image/info5/6bab8258b0cc472697fdad8fb55ba4ce.png)

下面将用一个具体的电信业务说明MR最基本的编写过程:

实验所用数据:

![[置顶] 深入理解与应用Hadoop中的MapReduce_第3张图片](http://img.e-com-net.com/image/info5/4a148cb1e6e347ea8c8bf62b17fb37e8.jpg)

具体字段描述:

![[置顶] 深入理解与应用Hadoop中的MapReduce_第4张图片](http://img.e-com-net.com/image/info5/f959ae5f5e6e4f25bfff6d8920db463b.jpg)

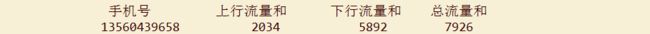

业务要求:统计同一个用户的上行总流量和,下行总流量和以及上下总流量和

例如:

代码示例:

```java
package com.appache.celephone3;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

public class FlowCount
{
    public static String path1 = "hdfs://hadoop:9000/dir/flowdata.txt";  // input file
    public static String path2 = "hdfs://hadoop:9000/dirout/";           // output directory

    public static void main(String[] args) throws Exception
    {
        Configuration conf = new Configuration();
        // "fs.default.name" is the Hadoop 1.x key; Hadoop 2+ calls it "fs.defaultFS"
        conf.set("fs.default.name", "hdfs://hadoop:9000/");
        FileSystem fileSystem = FileSystem.get(conf);
        // The job fails if the output directory already exists, so delete it first
        if (fileSystem.exists(new Path(path2)))
        {
            fileSystem.delete(new Path(path2), true);
        }
        Job job = new Job(conf, "FlowCount");  // Job.getInstance(conf, "FlowCount") on Hadoop 2+
        job.setJarByClass(FlowCount.class);
        // Driver configuration
        FileInputFormat.setInputPaths(job, new Path(path1));
        job.setInputFormatClass(TextInputFormat.class);
        job.setMapperClass(MyMapper.class);
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(Text.class);
        // Shuffle phase (performed by the framework)
        job.setReducerClass(MyReducer.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(Text.class);
        job.setOutputFormatClass(TextOutputFormat.class);
        FileOutputFormat.setOutputPath(job, new Path(path2));
        // Submit the job (to the JobTracker on Hadoop 1.x) and wait; stop on failure
        if (!job.waitForCompletion(true))
        {
            System.exit(1);
        }
        // Print the result file written by the single reduce task
        FSDataInputStream fr = fileSystem.open(new Path("hdfs://hadoop:9000/dirout/part-r-00000"));
        IOUtils.copyBytes(fr, System.out, 1024, true);
    }
}
```

```java
package com.appache.celephone3;

import java.io.IOException;

import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

// <k1, v1> = <byte offset of the line, line text>  ->  <k2, v2> = <phone number, "up\tdown\tsum">
public class MyMapper extends Mapper<LongWritable, Text, Text, Text>
{
    @Override
    protected void map(LongWritable k1, Text v1, Context context) throws IOException, InterruptedException
    {
        String line = v1.toString();
        String[] splited = line.split("\t");
        String msisdn = splited[1];    // phone number
        String upFlow = splited[8];    // upstream traffic
        String downFlow = splited[9];  // downstream traffic
        long flowsum = Long.parseLong(upFlow) + Long.parseLong(downFlow);
        context.write(new Text(msisdn), new Text(upFlow + "\t" + downFlow + "\t" + String.valueOf(flowsum)));
    }
}
```
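MyMapper assumes every line has at least ten tab-separated columns with numeric traffic values in columns 8 and 9; a single malformed line throws an exception and fails the map task. The plain-Java sketch below shows one defensive way to parse a line under those same column assumptions. `FlowLineParser` and `parse()` are hypothetical names, not part of Hadoop or the original code; inside `map()` you would call it and simply `return` when it yields `null`.

```java
// Hypothetical defensive parser using the same column layout as MyMapper.
class FlowLineParser {

    // Returns {msisdn, up, down, up + down}, or null if the line cannot be parsed
    static String[] parse(String line) {
        String[] f = line.split("\t");
        if (f.length < 10) {
            return null; // too few columns to read indices 1, 8 and 9
        }
        try {
            long up = Long.parseLong(f[8].trim());
            long down = Long.parseLong(f[9].trim());
            return new String[] {f[1], Long.toString(up), Long.toString(down), Long.toString(up + down)};
        } catch (NumberFormatException e) {
            return null; // e.g. a header line or a non-numeric traffic field
        }
    }

    public static void main(String[] args) {
        String good = "t\t13800000001\tx\tx\tx\tx\t1\t1\t100\t200";
        String shortLine = "t\t13800000001\tx";
        System.out.println(String.join("\t", parse(good)));
        System.out.println(parse(shortLine)); // null: the mapper would skip this line
    }
}
```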

```java
package com.appache.celephone3;

import java.io.IOException;

import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

// <k2, v2s> = <phone number, all "up\tdown\tsum" values>  ->  <k3, v3> = <phone number, summed totals>
public class MyReducer extends Reducer<Text, Text, Text, Text>
{
    @Override
    protected void reduce(Text k2, Iterable<Text> v2s, Context context) throws IOException, InterruptedException
    {
        long upFlowSum = 0L;
        long downFlowSum = 0L;
        long flowSum = 0L;
        for (Text v2 : v2s)
        {
            String[] splited = v2.toString().split("\t");
            upFlowSum += Long.parseLong(splited[0]);
            downFlowSum += Long.parseLong(splited[1]);
            flowSum += Long.parseLong(splited[2]);
        }
        String data = upFlowSum + "\t" + downFlowSum + "\t" + flowSum;
        context.write(k2, new Text(data));
    }
}
```
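To see what MyMapper and MyReducer compute without a cluster, the following minimal sketch simulates map, shuffle and reduce in memory with plain Java. It is not Hadoop code: `FlowCountSimulation` and the sample records are hypothetical, and it assumes the same tab-separated layout the mapper reads (phone number in column 1, upstream and downstream traffic in columns 8 and 9). A `TreeMap` stands in for the shuffle, which groups values by key and sorts the keys.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// In-memory simulation of the FlowCount job; no Hadoop classes are used.
class FlowCountSimulation {

    // Mirrors MyMapper.map(): column 1 = phone number, columns 8/9 = upstream/downstream traffic
    static String[] map(String line) {
        String[] f = line.split("\t");
        long up = Long.parseLong(f[8]);
        long down = Long.parseLong(f[9]);
        return new String[] {f[1], up + "\t" + down + "\t" + (up + down)};
    }

    // Mirrors MyReducer.reduce(): adds up each of the three columns for one key
    static String reduce(List<String> values) {
        long up = 0, down = 0, sum = 0;
        for (String v : values) {
            String[] f = v.split("\t");
            up += Long.parseLong(f[0]);
            down += Long.parseLong(f[1]);
            sum += Long.parseLong(f[2]);
        }
        return up + "\t" + down + "\t" + sum;
    }

    // map -> shuffle (group values by key, keys sorted) -> reduce
    static Map<String, String> run(List<String> lines) {
        Map<String, List<String>> shuffled = new TreeMap<>();
        for (String line : lines) {
            String[] kv = map(line);
            shuffled.computeIfAbsent(kv[0], k -> new ArrayList<>()).add(kv[1]);
        }
        Map<String, String> output = new TreeMap<>();
        for (Map.Entry<String, List<String>> e : shuffled.entrySet()) {
            output.put(e.getKey(), reduce(e.getValue()));
        }
        return output;
    }

    public static void main(String[] args) {
        // Hypothetical records: only columns 1, 8 and 9 are meaningful, the rest are placeholders
        List<String> lines = List.of(
            "t\t13800000001\tx\tx\tx\tx\t1\t1\t100\t200",
            "t\t13800000001\tx\tx\tx\tx\t1\t1\t50\t25",
            "t\t12345678\tx\tx\tx\tx\t1\t1\t10\t20");
        run(lines).forEach((phone, totals) -> System.out.println(phone + "\t" + totals));
    }
}
```

Running `main()` prints one line per phone number in the same `phone \t up \t down \t total` shape the real job writes to `part-r-00000`.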

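Output:

![Understanding and Applying MapReduce in Hadoop, figure 5](http://img.e-com-net.com/image/info5/8c8d8778a8774d5ea2f44b9c9795f30a.png)

![Understanding and Applying MapReduce in Hadoop, figure 6](http://img.e-com-net.com/image/info5/d5885fa5f6db40979c379fccd6558efe.jpg)

Requirement: for the telecom data above, compute each user's total upstream traffic, total downstream traffic, and combined total as before, but write the records of mobile numbers (11 digits) to one file and the records of non-mobile numbers (8 digits) to another.

Code example: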

```java
package com.appache.partitioner;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

public class FlowCount
{
    public static String path1 = "hdfs://hadoop:9000/dir/flowdata.txt";
    public static String path2 = "hdfs://hadoop:9000/dirout/";

    public static void main(String[] args) throws Exception
    {
        Configuration conf = new Configuration();
        conf.set("fs.default.name", "hdfs://hadoop:9000/");  // "fs.defaultFS" on Hadoop 2+
        FileSystem fileSystem = FileSystem.get(conf);
        if (fileSystem.exists(new Path(path2)))
        {
            fileSystem.delete(new Path(path2), true);
        }
        Job job = new Job(conf, "FlowCount");
        job.setJarByClass(FlowCount.class);
        FileInputFormat.setInputPaths(job, new Path(path1));
        job.setInputFormatClass(TextInputFormat.class);
        job.setMapperClass(MyMapper.class);
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(FlowBean.class);
        // Shuffle phase: partitioning, sorting, grouping, local combining
        job.setPartitionerClass(MyPartitioner.class);
        // MyPartitioner returns 0 or 1, so the job needs two reduce tasks (two output files)
        job.setNumReduceTasks(2);
        // <k2, v2s>
        job.setReducerClass(MyReducer.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(FlowBean.class);
        job.setOutputFormatClass(TextOutputFormat.class);
        FileOutputFormat.setOutputPath(job, new Path(path2));
        // Submit the job and wait; exit with a non-zero status on failure
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
```

```java
package com.appache.partitioner;

import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;

import org.apache.hadoop.io.Writable;

// Custom value type: Hadoop serializes it with write() and rebuilds it with readFields()
public class FlowBean implements Writable
{
    long upFlow;
    long downFlow;
    long flowSum;

    // Hadoop creates instances by reflection, so a no-arg constructor is required
    public FlowBean() {}

    public FlowBean(String upFlow, String downFlow)
    {
        this.upFlow = Long.parseLong(upFlow);
        this.downFlow = Long.parseLong(downFlow);
        this.flowSum = this.upFlow + this.downFlow;
    }

    public long getupFlow() { return upFlow; }

    public long getdownFlow() { return downFlow; }

    public long getflowSum() { return flowSum; }

    @Override
    public void write(DataOutput out) throws IOException
    {
        out.writeLong(upFlow);
        out.writeLong(downFlow);
        out.writeLong(flowSum);
    }

    // Fields must be read back in exactly the order write() wrote them
    @Override
    public void readFields(DataInput in) throws IOException
    {
        upFlow = in.readLong();
        downFlow = in.readLong();
        flowSum = in.readLong();
    }

    // TextOutputFormat writes non-Text values using toString()
    @Override
    public String toString()
    {
        return upFlow + "\t" + downFlow + "\t" + flowSum;
    }
}
```
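FlowBean's `write()` and `readFields()` must handle the fields in the same order, because between the map and reduce sides Hadoop only sees a stream of bytes. The sketch below copies those two method bodies into a plain-JDK class (`FlowBeanRoundTrip`, a hypothetical name that does not implement Hadoop's `Writable`) and sends one object through a byte array and back, roughly mirroring what happens to map output values during the shuffle.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

// Plain-JDK copy of FlowBean's serialization logic (does not implement Hadoop's Writable).
class FlowBeanRoundTrip {
    long upFlow;
    long downFlow;
    long flowSum;

    FlowBeanRoundTrip() {}

    FlowBeanRoundTrip(long upFlow, long downFlow) {
        this.upFlow = upFlow;
        this.downFlow = downFlow;
        this.flowSum = upFlow + downFlow;
    }

    // Same body as FlowBean.write(): three longs, 8 bytes each
    void write(DataOutput out) throws IOException {
        out.writeLong(upFlow);
        out.writeLong(downFlow);
        out.writeLong(flowSum);
    }

    // Same body as FlowBean.readFields(): reads the longs back in the order they were written
    void readFields(DataInput in) throws IOException {
        upFlow = in.readLong();
        downFlow = in.readLong();
        flowSum = in.readLong();
    }

    // Serialize to bytes, then rebuild a fresh object created with the no-arg constructor
    static FlowBeanRoundTrip roundTrip(FlowBeanRoundTrip bean) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            DataOutputStream out = new DataOutputStream(bytes);
            bean.write(out);
            out.flush();
            FlowBeanRoundTrip copy = new FlowBeanRoundTrip();
            copy.readFields(new DataInputStream(new ByteArrayInputStream(bytes.toByteArray())));
            return copy;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    @Override
    public String toString() {
        return upFlow + "\t" + downFlow + "\t" + flowSum;
    }

    public static void main(String[] args) {
        System.out.println(roundTrip(new FlowBeanRoundTrip(100, 200)));
    }
}
```

Swapping the order of two `readLong()` calls in `readFields()` would silently exchange the values, which is why the two methods are usually written side by side.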

```java
package com.appache.partitioner;

import java.io.IOException;

import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

// Emits <phone number, FlowBean(up, down)> for each input line
public class MyMapper extends Mapper<LongWritable, Text, Text, FlowBean>
{
    @Override
    protected void map(LongWritable k1, Text v1, Context context) throws IOException, InterruptedException
    {
        String line = v1.toString();
        String[] splited = line.split("\t");
        String msisdn = splited[1];                                // phone number
        FlowBean flowData = new FlowBean(splited[8], splited[9]);  // upstream, downstream traffic
        context.write(new Text(msisdn), flowData);
    }
}
```

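```java
package com.appache.partitioner;

import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Partitioner;

// Partitions on the map output key, e.g. <18330267966, {100, 200}>
public class MyPartitioner extends Partitioner<Text, FlowBean>
{
    // 11-digit mobile numbers go to reduce task 0, everything else (e.g. 8-digit numbers) to task 1.
    // The returned value must be smaller than numPartitions, hence setNumReduceTasks(2) in the driver.
    @Override
    public int getPartition(Text k2, FlowBean v2, int numPartitions)
    {
        String tele = k2.toString();
        if (tele.length() == 11)
            return 0;
        else
            return 1;
    }
}
```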
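To compare MyPartitioner with Hadoop's default behaviour, the sketch below places its rule next to the formula used by the default `HashPartitioner`, `(hashCode & Integer.MAX_VALUE) % numReduceTasks`, and shows which `part-r-NNNNN` file each partition ends up in. `PartitionDemo` is a hypothetical plain-Java class; `String.hashCode()` stands in for `Text.hashCode()`, so the hash-based partition numbers it prints are only illustrative.

```java
// Hypothetical plain-Java comparison of the custom partition rule and the hash-based default.
class PartitionDemo {

    // Same rule as MyPartitioner.getPartition(): 11-digit mobile numbers -> 0, others -> 1
    static int customPartition(String msisdn) {
        return msisdn.length() == 11 ? 0 : 1;
    }

    // HashPartitioner's formula: clear the sign bit, then take the remainder
    static int hashPartition(String key, int numReduceTasks) {
        return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;
    }

    // Reduce task i writes its results to part-r-0000i (new-API file naming)
    static String outputFileFor(int partition) {
        return String.format("part-r-%05d", partition);
    }

    public static void main(String[] args) {
        for (String msisdn : new String[] {"13800000001", "12345678"}) {
            int p = customPartition(msisdn);
            System.out.println(msisdn + " -> partition " + p + " -> " + outputFileFor(p)
                + " (a hash partitioner would pick " + hashPartition(msisdn, 2) + ")");
        }
    }
}
```

With the custom rule the split between the two files is decided by the number's length, whereas the hash rule only spreads keys evenly without any business meaning.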

```java
package com.appache.partitioner;

import java.io.IOException;

import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

public class MyReducer extends Reducer<Text, FlowBean, Text, FlowBean>
{
    @Override
    protected void reduce(Text k2, Iterable<FlowBean> v2s, Context context) throws IOException, InterruptedException
    {
        long upFlow = 0L;
        long downFlow = 0L;
        for (FlowBean v2 : v2s)
        {
            upFlow += v2.getupFlow();
            downFlow += v2.getdownFlow();
        }
        // The FlowBean constructor recomputes the total as upFlow + downFlow
        context.write(k2, new FlowBean(String.valueOf(upFlow), String.valueOf(downFlow)));
    }
}
```

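Output:

![Understanding and Applying MapReduce in Hadoop, figure 7](http://img.e-com-net.com/image/info5/1d86d6ee993d4529aebdb91610a5bd11.jpg)

![Understanding and Applying MapReduce in Hadoop, figure 8](http://img.e-com-net.com/image/info5/903b805ec55b4685928abfd8cf303bff.png)

Requirement:

Take the statistics produced by the job above:

![Understanding and Applying MapReduce in Hadoop, figure 9](http://img.e-com-net.com/image/info5/52e9d4cbbfd6431c96bc13ac3e6b9805.png)

Sort them by total traffic from low to high; when two users have the same total, sort them by total downstream traffic from low to high.

Example code: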

```java
package com.appache.sort;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

public class FlowCount
{
    // Input: the per-user totals produced above, one "msisdn\tup\tdown\tsum" line per user
    public static String path1 = "hdfs://hadoop:9000/flowCount.txt";
    public static String path2 = "hdfs://hadoop:9000/dirout/";

    public static void main(String[] args) throws Exception
    {
        Configuration conf = new Configuration();
        conf.set("fs.default.name", "hdfs://hadoop:9000/");  // "fs.defaultFS" on Hadoop 2+
        FileSystem fileSystem = FileSystem.get(conf);
        if (fileSystem.exists(new Path(path2)))
        {
            fileSystem.delete(new Path(path2), true);
        }
        Job job = new Job(conf, "FlowCount");
        job.setJarByClass(FlowCount.class);
        // Driver configuration
        FileInputFormat.setInputPaths(job, new Path(path1));
        job.setInputFormatClass(TextInputFormat.class);
        job.setMapperClass(MyMapper.class);
        // The whole record is the key, so the shuffle sorts it with FlowBean.compareTo()
        job.setMapOutputKeyClass(FlowBean.class);
        job.setMapOutputValueClass(NullWritable.class);
        // Shuffle phase; the default single reduce task keeps the result in one globally sorted file
        job.setReducerClass(MyReducer.class);
        job.setOutputKeyClass(FlowBean.class);
        job.setOutputValueClass(NullWritable.class);
        job.setOutputFormatClass(TextOutputFormat.class);
        FileOutputFormat.setOutputPath(job, new Path(path2));
        if (!job.waitForCompletion(true))
        {
            System.exit(1);
        }
        // Print the result
        FSDataInputStream fr = fileSystem.open(new Path("hdfs://hadoop:9000/dirout/part-r-00000"));
        IOUtils.copyBytes(fr, System.out, 1024, true);
    }
}
```

```java
package com.appache.sort;

import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;

import org.apache.hadoop.io.WritableComparable;

// Used as the map output key, so it must be both serializable and comparable
public class FlowBean implements WritableComparable<FlowBean>
{
    private String msisdn;
    private long upFlow;
    private long downFlow;
    private long flowSum;

    // Required: Hadoop instantiates keys by reflection before calling readFields()
    public FlowBean() {}

    public FlowBean(String msisdn, String upFlow, String downFlow, String flowSum)
    {
        this.msisdn = msisdn;
        this.upFlow = Long.parseLong(upFlow);
        this.downFlow = Long.parseLong(downFlow);
        this.flowSum = Long.parseLong(flowSum);
    }

    public String getMsisdn() { return msisdn; }

    public long getUpFlow() { return upFlow; }

    public long getDownFlow() { return downFlow; }

    public long getFlowSum() { return flowSum; }

    @Override
    public void write(DataOutput out) throws IOException
    {
        out.writeUTF(msisdn);
        out.writeLong(upFlow);
        out.writeLong(downFlow);
        out.writeLong(flowSum);
    }

    @Override
    public void readFields(DataInput in) throws IOException
    {
        this.msisdn = in.readUTF();
        this.upFlow = in.readLong();
        this.downFlow = in.readLong();
        this.flowSum = in.readLong();
    }

    // Total traffic low to high; on a tie, downstream traffic low to high (as the requirement states).
    // Long.compare avoids the overflow of casting a long difference to int.
    @Override
    public int compareTo(FlowBean obj)
    {
        if (this.flowSum != obj.flowSum)
            return Long.compare(this.flowSum, obj.flowSum);
        return Long.compare(this.downFlow, obj.downFlow);
    }

    // TextOutputFormat writes the key using toString()
    @Override
    public String toString()
    {
        return this.msisdn + "\t" + this.upFlow + "\t" + this.downFlow + "\t" + this.flowSum;
    }
}
```
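Two details of `compareTo()` are worth checking in isolation: casting a `long` difference to `int` can flip its sign once the difference exceeds the `int` range, and the tie-break on downstream traffic must point the same way as the requirement (low to high). The plain-Java sketch below demonstrates both; `FlowOrder` is a hypothetical class that holds only the fields the ordering uses.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical plain-Java class holding only the fields the sort order uses.
class FlowOrder implements Comparable<FlowOrder> {
    final String msisdn;
    final long downFlow;
    final long flowSum;

    FlowOrder(String msisdn, long downFlow, long flowSum) {
        this.msisdn = msisdn;
        this.downFlow = downFlow;
        this.flowSum = flowSum;
    }

    // Total traffic ascending, then downstream traffic ascending
    @Override
    public int compareTo(FlowOrder o) {
        int byTotal = Long.compare(this.flowSum, o.flowSum);
        return byTotal != 0 ? byTotal : Long.compare(this.downFlow, o.downFlow);
    }

    // The cast-based style: the sign is wrong once a - b does not fit in an int
    static int castCompare(long a, long b) {
        return (int) (a - b);
    }

    // Sorts a copy of the list and returns the phone numbers in order
    static List<String> sortedPhones(List<FlowOrder> beans) {
        List<FlowOrder> copy = new ArrayList<>(beans);
        Collections.sort(copy);
        List<String> phones = new ArrayList<>();
        for (FlowOrder b : copy) {
            phones.add(b.msisdn);
        }
        return phones;
    }

    public static void main(String[] args) {
        System.out.println(castCompare(3_000_000_000L, 0L));  // negative: claims 3e9 < 0
        System.out.println(Long.compare(3_000_000_000L, 0L)); // 1: correct sign
        System.out.println(sortedPhones(List.of(
            new FlowOrder("A", 50, 300), new FlowOrder("B", 10, 300), new FlowOrder("C", 5, 100))));
    }
}
```

The last line prints `[C, B, A]`: C has the smallest total, and B comes before A because, with equal totals, its downstream traffic is lower.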

```java
package com.appache.sort;

import java.io.IOException;

import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

// Each input line is "msisdn\tup\tdown\tsum"; the whole record becomes the key so the shuffle sorts it
public class MyMapper extends Mapper<LongWritable, Text, FlowBean, NullWritable>
{
    @Override
    protected void map(LongWritable k1, Text v1, Context context) throws IOException, InterruptedException
    {
        String line = v1.toString();
        String[] splited = line.split("\t");
        FlowBean flowdata = new FlowBean(splited[0], splited[1], splited[2], splited[3]);
        context.write(flowdata, NullWritable.get());
    }
}
```

```java
package com.appache.sort;

import java.io.IOException;

import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.mapreduce.Reducer;

public class MyReducer extends Reducer<FlowBean, NullWritable, FlowBean, NullWritable>
{
    // Keys that compare as equal arrive in one call; writing once per value keeps every record
    @Override
    protected void reduce(FlowBean k2, Iterable<NullWritable> v2s, Context context) throws IOException, InterruptedException
    {
        for (NullWritable v2 : v2s)
        {
            context.write(k2, NullWritable.get());
        }
    }
}
```
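The loop in MyReducer matters: records whose keys compare as equal (same total and same downstream traffic) arrive in a single `reduce()` call, and Hadoop refreshes the key object as the values are iterated, so writing `k2` once per value emits every original record. The plain-Java simulation below (`GroupingDemo` and `Rec` are hypothetical names) sorts records with the same ordering, groups equal neighbours the way the reduce side does, and emits once per value.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Hypothetical simulation of reduce-side grouping for the sort job.
class GroupingDemo {

    static final class Rec {
        final String msisdn;
        final long down;
        final long sum;

        Rec(String msisdn, long down, long sum) {
            this.msisdn = msisdn;
            this.down = down;
            this.sum = sum;
        }
    }

    // Same ordering as the sort job's FlowBean: total ascending, then downstream ascending
    static final Comparator<Rec> ORDER =
        Comparator.comparingLong((Rec r) -> r.sum).thenComparingLong(r -> r.down);

    // Returns the groups the reducer would see; each inner list is one reduce() call
    static List<List<Rec>> group(List<Rec> records) {
        List<Rec> sorted = new ArrayList<>(records);
        sorted.sort(ORDER);
        List<List<Rec>> groups = new ArrayList<>();
        for (Rec r : sorted) {
            List<Rec> last = groups.isEmpty() ? null : groups.get(groups.size() - 1);
            if (last != null && ORDER.compare(last.get(0), r) == 0) {
                last.add(r);          // equal key: same reduce() call
            } else {
                List<Rec> g = new ArrayList<>();
                g.add(r);
                groups.add(g);        // new key: new reduce() call
            }
        }
        return groups;
    }

    // Writing once per value (not once per group) keeps tied records in the output
    static List<String> emitPerValue(List<List<Rec>> groups) {
        List<String> out = new ArrayList<>();
        for (List<Rec> g : groups) {
            for (Rec r : g) {
                out.add(r.msisdn);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        List<List<Rec>> groups = group(List.of(
            new Rec("A", 10, 300), new Rec("B", 10, 300), new Rec("C", 5, 100)));
        System.out.println(groups.size() + " reduce() calls, emitted " + emitPerValue(groups));
    }
}
```

Here A and B tie on both fields, so they share one group; writing once per group instead would have dropped one of them.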

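Output:

![Understanding and Applying MapReduce in Hadoop, figure 10](http://img.e-com-net.com/image/info5/ffff5a314bbb44b695ea72a979abd6d0.jpg)

![Understanding and Applying MapReduce in Hadoop, figure 11](http://img.e-com-net.com/image/info5/1c7ee9f558c64dd5960d388f9ca73239.png)

| 7. MR optimization technique 3: local aggregation with a Combiner |

Requirement: for the telecom data above, compute each user's total upstream traffic, total downstream traffic, and combined total, and add the local aggregation (Combiner) optimization to the code.

Code example: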

```java
package com.appache.celephone3;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

public class FlowCount
{
    public static String path1 = "hdfs://hadoop:9000/dir/flowdata.txt";
    public static String path2 = "hdfs://hadoop:9000/dirout/";

    public static void main(String[] args) throws Exception
    {
        Configuration conf = new Configuration();
        conf.set("fs.default.name", "hdfs://hadoop:9000/");  // "fs.defaultFS" on Hadoop 2+
        FileSystem fileSystem = FileSystem.get(conf);
        if (fileSystem.exists(new Path(path2)))
        {
            fileSystem.delete(new Path(path2), true);
        }
        Job job = new Job(conf, "FlowCount");
        job.setJarByClass(FlowCount.class);
        // Driver configuration
        FileInputFormat.setInputPaths(job, new Path(path1));
        job.setInputFormatClass(TextInputFormat.class);
        job.setMapperClass(MyMapper.class);
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(Text.class);
        // Local aggregation: run MyReducer on each map task's output before the shuffle.
        // Safe here because summing is associative and MyReducer reads and writes <Text, Text>.
        job.setCombinerClass(MyReducer.class);
        job.setNumReduceTasks(2);
        // Shuffle phase
        job.setReducerClass(MyReducer.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(Text.class);
        job.setOutputFormatClass(TextOutputFormat.class);
        FileOutputFormat.setOutputPath(job, new Path(path2));
        // Submit the job (to the JobTracker on Hadoop 1.x) and wait; stop on failure
        if (!job.waitForCompletion(true))
        {
            System.exit(1);
        }
        // With two reduce tasks the results are split across part-r-00000 and part-r-00001
        for (FileStatus part : fileSystem.globStatus(new Path(path2 + "part-r-*")))
        {
            FSDataInputStream fr = fileSystem.open(part.getPath());
            IOUtils.copyBytes(fr, System.out, 1024, false);  // false: keep System.out open
            fr.close();
        }
    }
}
```

```java
package com.appache.celephone3;

import java.io.IOException;

import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

public class MyMapper extends Mapper<LongWritable, Text, Text, Text>
{
    @Override
    protected void map(LongWritable k1, Text v1, Context context) throws IOException, InterruptedException
    {
        String line = v1.toString();
        String[] splited = line.split("\t");
        String msisdn = splited[1];
        String upFlow = splited[8];
        String downFlow = splited[9];
        long flowsum = Long.parseLong(upFlow) + Long.parseLong(downFlow);
        context.write(new Text(msisdn), new Text(upFlow + "\t" + downFlow + "\t" + String.valueOf(flowsum)));
    }
}
```

```java
package com.appache.celephone3;

import java.io.IOException;

import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

public class MyReducer extends Reducer<Text, Text, Text, Text>
{
    @Override
    protected void reduce(Text k2, Iterable<Text> v2s, Context context) throws IOException, InterruptedException
    {
        long upFlowSum = 0L;
        long downFlowSum = 0L;
        long flowSum = 0L;
        for (Text v2 : v2s)
        {
            String[] splited = v2.toString().split("\t");
            upFlowSum += Long.parseLong(splited[0]);
            downFlowSum += Long.parseLong(splited[1]);
            flowSum += Long.parseLong(splited[2]);
        }
        String data = upFlowSum + "\t" + downFlowSum + "\t" + flowSum;
        context.write(k2, new Text(data));
    }
}
```
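Reusing MyReducer as the combiner works because adding partial sums gives the same totals as adding everything at once, and the combiner's output (`up\tdown\tsum` as `Text`) has the same shape as the mapper output it replaces. Hadoop may run a combiner zero, one or several times, so the job has to be correct either way. The plain-Java sketch below (`CombinerCheck` is a hypothetical name) checks the sum case and shows, as a counter-example, that an average of averages is generally not the overall average, which is why a reducer that computes a mean could not be reused as a combiner unchanged.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical check that MyReducer's arithmetic gives the same result with or without a combiner.
class CombinerCheck {

    // Same arithmetic as MyReducer.reduce()
    static String reduce(List<String> values) {
        long up = 0, down = 0, sum = 0;
        for (String v : values) {
            String[] f = v.split("\t");
            up += Long.parseLong(f[0]);
            down += Long.parseLong(f[1]);
            sum += Long.parseLong(f[2]);
        }
        return up + "\t" + down + "\t" + sum;
    }

    // Combine each map task's values locally, then reduce the partial results
    static String withCombiner(List<List<String>> perMapTask) {
        List<String> partials = new ArrayList<>();
        for (List<String> chunk : perMapTask) {
            partials.add(reduce(chunk));
        }
        return reduce(partials);
    }

    // Counter-example helper: plain arithmetic mean
    static double mean(List<Double> xs) {
        double s = 0;
        for (double x : xs) {
            s += x;
        }
        return s / xs.size();
    }

    public static void main(String[] args) {
        List<String> task1 = List.of("100\t200\t300", "50\t25\t75");
        List<String> task2 = List.of("10\t20\t30");
        List<String> all = new ArrayList<>(task1);
        all.addAll(task2);
        System.out.println(reduce(all) + "  vs  " + withCombiner(List.of(task1, task2)));
        System.out.println(mean(List.of(1.0, 2.0, 6.0)) + "  vs  "
            + mean(List.of(mean(List.of(1.0, 2.0)), mean(List.of(6.0)))));
    }
}
```

The first line shows identical totals; the second shows 3.0 against 3.75, so averaging is not safe to pre-aggregate this way.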

Output:

![Understanding and Applying MapReduce in Hadoop, figure 12](http://img.e-com-net.com/image/info5/7f74008c21764731bc8f7328219b5338.jpg)

![Understanding and Applying MapReduce in Hadoop, figure 13](http://img.e-com-net.com/image/info5/3beb2fd7c52647de9a5fe0dc8f9fb770.png)

If you spot any problems in this article, please leave a comment and let me know!
