Flink Basics (12): Custom eventTime

Kafka messages carry their own timestamp, but sometimes you need to define the eventTime yourself. Here is the code:

final StreamExecutionEnvironment env = StreamExecutionEnvironment.createLocalEnvironment();

env.setParallelism(2);

// Use event time as the time characteristic

env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);

// Consume messages from Kafka

Properties propsConsumer = new Properties();

propsConsumer.setProperty("bootstrap.servers", KafkaConfig.KAFKA_BROKER_LIST);

propsConsumer.setProperty("group.id", "trafficwisdom-streaming");

// Set values as strings so that Properties.getProperty() can read them back
propsConsumer.setProperty("enable.auto.commit", "false");

propsConsumer.setProperty("max.poll.records", "1000");

FlinkKafkaConsumer011<String> consumer = new FlinkKafkaConsumer011<>("topic-test", new SimpleStringSchema(), propsConsumer);

consumer.setStartFromLatest();

DataStream<String> stream = env.addSource(consumer);

stream.print();

// Custom event time: use the dateTime field of the message as the eventTime

DataStream<String> streamResult = stream.assignTimestampsAndWatermarks(new AscendingTimestampExtractor<String>() {

@Override

public long extractAscendingTimestamp(String element) {

JSONObject jsonObject = JSON.parseObject(element);

Long dateTime = jsonObject.getLong("dateTime");

return dateTime;

}

});

// Test the window: map each message to (key, 1)

DataStream<Tuple2<String, Integer>> finalResult = streamResult.map(new MapFunction<String, Tuple2<String, Integer>>() {

@Override

public Tuple2<String, Integer> map(String value) throws Exception {

JSONObject jsonObject = JSON.parseObject(value);

String key=jsonObject.getString("key");

return Tuple2.of(key,1);

}

});

DataStream<Tuple2<String, Integer>> aggResult = finalResult.keyBy(0).window(SlidingEventTimeWindows.of(Time.seconds(60*60), Time.seconds(30)))

.allowedLateness(Time.seconds(10))

.sum(1);

aggResult.print();

env.execute("WaterMarkDemo");
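The AscendingTimestampExtractor used above derives its watermark from the largest timestamp seen so far, minus 1 ms. The sketch below is a plain-Java model of that rule only, not Flink's class; `AscendingWatermarkModel` and its method names are made up for illustration.

```java
// Plain-Java model of the ascending-timestamp watermark rule (not Flink's class).
class AscendingWatermarkModel {
    // Largest event timestamp seen so far; MIN_VALUE means "nothing seen yet".
    private long currentTimestamp = Long.MIN_VALUE;

    // Record an element's event time; only a larger-or-equal timestamp advances the state.
    long extractTimestamp(long elementTimestamp) {
        if (elementTimestamp >= currentTimestamp) {
            currentTimestamp = elementTimestamp;
        }
        return elementTimestamp;
    }

    // Watermark = largest timestamp seen so far minus 1 ms.
    long currentWatermark() {
        return currentTimestamp == Long.MIN_VALUE ? Long.MIN_VALUE : currentTimestamp - 1;
    }

    public static void main(String[] args) {
        AscendingWatermarkModel model = new AscendingWatermarkModel();
        model.extractTimestamp(1558925900000L); // 10:58:20
        model.extractTimestamp(1558925920000L); // 10:58:40
        System.out.println(model.currentWatermark());
    }
}
```

Running `main` prints 1558925919999, that is, the second timestamp minus 1 ms. This is the value that decides which windows fire in the tests that follow.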

The tests below show how this behaves. First, send two messages:

{"dateTime":1558925900000,"key":"test1"} //2019-05-27 10:58:20

{"dateTime":1558925920000,"key":"test1"} //2019-05-27 10:58:40

Result:

1> {"dateTime":1558925900000,"key":"test1"}

1> {"dateTime":1558925920000,"key":"test1"}

1> (test1,1)

Then send one more message:

{"dateTime":1558925960000,"key":"test5"} //2019-05-27 10:59:20

The output now shows:

1> {"dateTime":1558925960000,"key":"test5"}

1> (test1,2)

Why is the first result not (test1,2)?

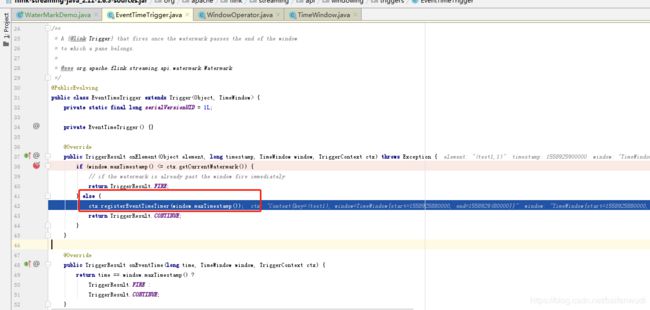

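The answer is in org.apache.flink.streaming.api.windowing.triggers.EventTimeTrigger.

It calls ctx.registerEventTimeTimer(window.maxTimestamp()), and maxTimestamp() is the window end minus 1 ms. The second message moves the watermark to 1558925920000-1, which is only enough to fire the window ending at 10:58:30 (max timestamp 1558925909999). That window contains the first message but not the second (10:58:40), so the second message is not counted.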
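To make the window arithmetic concrete, here is a minimal sketch that lists the sliding windows an element falls into and checks which of them have fired at a given watermark. It assumes a window offset of 0 and non-negative timestamps, and it only models the rule "a window fires when the watermark reaches end - 1"; `SlidingWindowModel` and its methods are hypothetical names, not Flink API.

```java
import java.util.ArrayList;
import java.util.List;

class SlidingWindowModel {
    // End timestamps (exclusive) of all sliding windows containing `timestamp`, latest first.
    static List<Long> windowEnds(long timestamp, long size, long slide) {
        List<Long> ends = new ArrayList<>();
        long lastStart = timestamp - (timestamp % slide); // latest window start at or before the element
        for (long start = lastStart; start > timestamp - size; start -= slide) {
            ends.add(start + size);
        }
        return ends;
    }

    // A window has fired once the watermark has reached its max timestamp (end - 1).
    static List<Long> firedWindowEnds(long timestamp, long size, long slide, long watermark) {
        List<Long> fired = new ArrayList<>();
        for (long end : windowEnds(timestamp, size, slide)) {
            if (end - 1 <= watermark) {
                fired.add(end);
            }
        }
        return fired;
    }

    public static void main(String[] args) {
        long size = 60 * 60 * 1000L; // 1-hour window, as in the demo
        long slide = 30 * 1000L;     // 30-second slide
        long watermark = 1558925920000L - 1; // after the second message
        System.out.println(firedWindowEnds(1558925900000L, size, slide, watermark)); // first message
        System.out.println(firedWindowEnds(1558925920000L, size, slide, watermark)); // second message
    }
}
```

For the first message this prints a single window end, 1558925910000 (10:58:30); for the second message the list is empty, because its earliest window ends at 10:59:00.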

Restart the demo and send two messages:

{"dateTime":1558925900000,"key":"test1"} //2019-05-27 10:58:20

{"dateTime":1558925960000,"key":"test1"} //2019-05-27 10:59:20

Result:

1> {"dateTime":1558925900000,"key":"test1"}

1> {"dateTime":1558925960000,"key":"test1"}

1> (test1,1)

1> (test1,1)

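Two results appear because the window slides every 30 seconds: the windows ending at 10:58:30 and 10:59:00 have both fired, and each contains only the first message.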

Restart the demo again and send:

{"dateTime":1558925900000,"key":"test1"} //2019-05-27 10:58:20

{"dateTime":1558926140000,"key":"test1"} //2019-05-27 11:02:20

Result:

1> {"dateTime":1558925900000,"key":"test1"}

1> {"dateTime":1558926140000,"key":"test1"}

1> (test1,1)

1> (test1,1)

1> (test1,1)

1> (test1,1)

1> (test1,1)

1> (test1,1)

1> (test1,1)

1> (test1,1)

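The window slides every 30 seconds, so the first message is emitted once for every window that has fired. A 5-minute window would hold each message in exactly 10 windows (300 s / 30 s); at this watermark the eight windows ending from 10:58:30 through 11:02:00 have fired, which matches the eight results above.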
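The number of results in each run can also be checked with a closed-form count instead of listing every window. This is a sketch under stated assumptions: window offset 0, a window size that is a multiple of the slide, and a watermark equal to the largest timestamp minus 1 ms. `FiredWindowCount` is a hypothetical helper, not part of Flink.

```java
class FiredWindowCount {
    // Number of sliding windows containing `timestamp` whose max timestamp (end - 1)
    // is at or below `watermark`. Assumes offset 0 and size being a multiple of slide.
    static long count(long timestamp, long size, long slide, long watermark) {
        // Earliest window end after the element (window ends are aligned to the slide).
        long firstEnd = timestamp - (timestamp % slide) + slide;
        // Latest slide boundary the watermark has already passed.
        long lastFiredEnd = (watermark + 1) - ((watermark + 1) % slide);
        if (lastFiredEnd < firstEnd) {
            return 0;
        }
        long fired = (lastFiredEnd - firstEnd) / slide + 1;
        // An element never belongs to more than size / slide windows.
        return Math.min(fired, size / slide);
    }

    public static void main(String[] args) {
        long size = 60 * 60 * 1000L;
        long slide = 30 * 1000L;
        long first = 1558925900000L; // 10:58:20
        System.out.println(count(first, size, slide, 1558925920000L - 1)); // next message at 10:58:40
        System.out.println(count(first, size, slide, 1558925960000L - 1)); // next message at 10:59:20
        System.out.println(count(first, size, slide, 1558926140000L - 1)); // next message at 11:02:20
    }
}
```

For the three runs above this gives 1, 2 and 8 fired windows for the first message. With a 5-minute window the cap `size / slide` is 10, which would only be reached once the watermark has passed the last window containing the message.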