kafka + sparkStreaming 学习笔记

Kafka

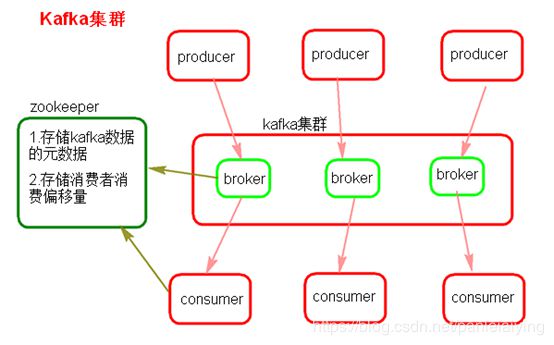

kafka是一个高吞吐的分布式消息队列系统。特点是生产者消费者模式,先进先出(FIFO)保证顺序,自己不丢数据,默认每隔7天清理数据。消息列队常见场景:系统之间解耦合、峰值压力缓冲、异步通信。

-

producer : 消息生产者

-

consumer : 消息消费之

-

broker : kafka集群的server,负责处理消息读、写请求,存储消息,在kafka cluster这一层这里,其实里面是有很多个broker

-

topic : 消息队列/分类相当于队列,里面有生产者和消费者模型

-

zookeeper : 元数据信息存在zookeeper中,包括:存储消费偏移量,topic话题信息,partition信息

-

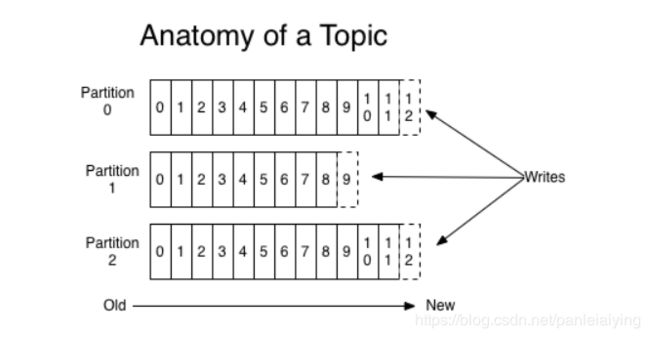

1、一个topic分成多个partition

-

2、每个partition内部消息强有序, 其中的每个消息都有一个序号交offset

-

3、一个partition 只对应一个broker, 一个broker 可以管理多个partition

-

4、 消息直接写入文件,并不保存在内存中

-

5、按照时间策略, 默认一周删除, 而不是消息消费完就删除

-

6、producer自己决定网那个partition写消息,可以是轮询的负载均衡,或者是基于hash的partition策略

kafka 的消息消费模型

- consumer 自己维护消费到哪个offset

- 每个consumer都有对应的group

- group 内是queue消费模型

– 各个consumer消费不同的partition

– 一个消息在group内只消费一次 - 各个group各自独立消费,互不影响

kafka 特点

- 生存者消费模型:FIFO; partition内部是FIFO的, partition之间不是FIFO

- 高性能:单节点支持上千个客户端,百MB/s 吞吐

- 持久性:直接持久在普通的磁盘上,性能比较好; 直接append 方式追加到磁盘,数据不会丢

- 分布式:数据副本冗余,流量负载均衡、可扩展; 数据副本,也就是同一份数据可以到不同的broker上面去,也就是当一份数据, 磁盘坏掉,数据不亏丢失

- 很灵活: 消息长时间持久化+Cilent维护消费状态; 1、持久花时间长,可以是一周、一天,2、可以自定义消息偏移量

kafka 安装

- https://www.apache.org/dyn/closer.cgi?path=/kafka/2.0.1/kafka_2.11-2.0.1.tgz

下载 - 解压压缩包,修改config 文件夹下 server.properties

// 节点编号:(不同节点按0,1,2,3整数来配置)

broker.id = 0

// 数据存放目录

log.dirs = /log

// zookeeper 集群配置

zookeeper.connect=node1:2181,node2:2181,node3:2181

-

启动

bin/kafka-server-start.sh config/server.properties可以单独配置一个启动文件

vim start-kafka.shnohup bin/kafka-server-start.sh config/server.properties > kafka.log 2>&1 &

授权 chmod 755 start-kafka.sh

kafka基础命令

创建topic./kafka-topics.sh --zookeeper node1:2181,node2:2181,node3:2181 --create --topic t0315 --partitions 3 --replication-factor 3

查看topic: ./kafka-topics.sh --zookeeper node1:2181,node2:2181,node3:2181 --list

生产者:./kafka-console-producer.sh --topic t0315 --broker-list node1:9092,node2:9092,node3:9092

消费者:./kafka-console-consumer.sh --bootstrap-server node1:9092,node2:9092,node3:9092 --topic t0315

获取描述: ./kafka-topics.sh --describe --zookeeper node1:2181,node2:2181,node3:2181 --topic t0315

kafka中有一个被称为优先副本(preferred replicas)的概念。如果一个分区有3个副本,且这3个副本的优先级别分别为0,1,2,根据优先副本的概念,0会作为leader 。当0节点的broker挂掉时,会启动1这个节点broker当做leader。当0节点的broker再次启动后,会自动恢复为此partition的leader。不会导致负载不均衡和资源浪费,这就是leader的均衡机制。

在配置文件conf/ server.properties中配置开启(默认就是开启):auto.leader.rebalance.enable true

Code 部分

sparkStreaming 的direact 方式

2.2.0

junit

junit

4.11

test

org.apache.spark

spark-streaming-kafka-0-8_2.11

${spark.version}

org.apache.spark

spark-streaming_2.11

${spark.version}

org.apache.spark

spark-core_2.11

${spark.version}

org.apache.spark

spark-hive_2.11

${spark.version}

org.apache.spark

spark-sql_2.11

${spark.version}

producer 部分:

import kafka.serializer.StringEncoder;

import org.apache.kafka.clients.producer.ProducerRecord;

import java.util.Properties;

/** *@Author PL *@Date 2018/12/27 10:59 *@Description TODO **/

public class KafkaProducer {

public static void main(String[] args) throws InterruptedException {

Properties pro = new Properties();

pro.put("bootstrap.servers","node1:9092,node2:9092,node3:9092");

pro.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");

pro.put("value.serializer","org.apache.kafka.common.serialization.StringSerializer");

//Producer producer = new Producer(new ProducerConfig(pro));

//org.apache.kafka.clients.producer.KafkaProducer producer1 = new Kafka

org.apache.kafka.clients.producer.KafkaProducer<String,String> producer = new org.apache.kafka.clients.producer.KafkaProducer<String, String>(pro);

System.out.println("11");

String topic = "t0315";

String msg = "hello word";

for (int i =0 ;i <100;i++) {

producer.send(new ProducerRecord<String, String>(topic, "hello", msg));

System.out.println(msg);

}

producer.close();

}

}

customer

import kafka.serializer.StringDecoder;

import org.apache.spark.SparkConf;

import org.apache.spark.api.java.function.FlatMapFunction;

import org.apache.spark.api.java.function.Function2;

import org.apache.spark.api.java.function.PairFunction;

import org.apache.spark.streaming.Durations;

import org.apache.spark.streaming.api.java.JavaDStream;

import org.apache.spark.streaming.api.java.JavaPairDStream;

import org.apache.spark.streaming.api.java.JavaPairInputDStream;

import org.apache.spark.streaming.api.java.JavaStreamingContext;

import org.apache.spark.streaming.kafka.KafkaUtils;

import scala.Tuple2;

import java.util.*;

/** *@Author PL *@Date 2018/12/26 13:28 *@Description TODO **/

public class SparkStreamingForkafka {

public static void main(String[] args) throws InterruptedException {

SparkConf sc = new SparkConf().setMaster("local[2]").setAppName("test");

JavaStreamingContext jsc = new JavaStreamingContext(sc, Durations.seconds(5));

Map<String,String> kafkaParam = new HashMap<>();

kafkaParam.put("metadata.broker.list","node1:9092,node2:9092,node3:9092");

//kafkaParam.put("t0315",1);

HashSet<String> topic = new HashSet<>();

topic.add("t0315");

//JavaPairInputDStream line = KafkaUtils.createStream(jsc,"node1:9092,node2:9092,node3:9092","wordcountGrop",kafkaParam);

JavaPairInputDStream<String, String> line = KafkaUtils.createDirectStream(jsc, String.class, String.class, StringDecoder.class, StringDecoder.class, kafkaParam, topic);

JavaDStream<String> flatLine = line.flatMap(new FlatMapFunction<Tuple2<String, String>, String>() {

@Override

public Iterator<String> call(Tuple2<String, String> tuple2) throws Exception {

return Arrays.asList(tuple2._2.split(" ")).iterator();

}

});

JavaPairDStream<String, Integer> pair = flatLine.mapToPair(new PairFunction<String, String, Integer>() {

@Override

public Tuple2<String, Integer> call(String s) throws Exception {

return new Tuple2<String, Integer>(s, 1);

}

});

JavaPairDStream<String, Integer> count = pair.reduceByKey(new Function2<Integer, Integer, Integer>() {

@Override

public Integer call(Integer integer, Integer integer2) throws Exception {

return integer + integer2;

}

});

count.print();

jsc.start();

jsc.awaitTermination();

jsc.close();;

}

}

上述方式为一个SparkStreaming 的消费者, direct方式就是把kafka当成一个存储数据的库,spark 自己维护offset。假设,driver 端宕机了, 之后再重启,会从offset 那一部分开始取?

所以我们需要将kafka 的offset 保存在文件中, 宕机之后在启动时去恢复文件中的offset 读取数据。

import kafka.serializer.StringDecoder;

import org.apache.spark.SparkConf;

import org.apache.spark.api.java.function.FlatMapFunction;

import org.apache.spark.api.java.function.Function0;

import org.apache.spark.api.java.function.Function2;

import org.apache.spark.api.java.function.PairFunction;

import org.apache.spark.streaming.Durations;

import org.apache.spark.streaming.api.java.JavaDStream;

import org.apache.spark.streaming.api.java.JavaPairDStream;

import org.apache.spark.streaming.api.java.JavaPairInputDStream;

import org.apache.spark.streaming.api.java.JavaStreamingContext;

import org.apache.spark.streaming.kafka.KafkaUtils;

import scala.Tuple2;

import java.util.*;

/** *@Author PL *@Date 2018/12/26 13:28 *@Description TODO **/

public class KafkaCheckPoint {

public static void main(String[] args) throws InterruptedException {

final String checkPoint = "./checkPoint";

Function0<JavaStreamingContext> scFunction = new Function0<JavaStreamingContext>() {

@Override

public JavaStreamingContext call() throws Exception {

return createJavaStreamingContext();

}

};

// 如果存在checkport 就恢复数据,不存在就直接运行

JavaStreamingContext jsc = JavaStreamingContext.getOrCreate(checkPoint, scFunction);

jsc.start();

jsc.awaitTermination();

jsc.close();;

}

public static JavaStreamingContext createJavaStreamingContext(){

System.out.println("初始化"); // 第一次会执行,宕机之后重启执行数据恢复时不执行

final SparkConf sc = new SparkConf().setMaster("local").setAppName("test");

JavaStreamingContext jsc = new JavaStreamingContext(sc, Durations.seconds(5));

/** * checkpoint 保存 * 1、 配置信息 * 2、Dstream 执行逻辑 * 3、Job 的执行进度 * 4、offset */

jsc.checkpoint("./checkPoint");

Map<String,String> kafkaParam = new HashMap<>();

kafkaParam.put("metadata.broker.list","node1:9092,node2:9092,node3:9092");

HashSet<String> topic = new HashSet<>();

topic.add("t0315");

JavaPairInputDStream<String, String> line = KafkaUtils.createDirectStream(jsc, String.class, String.class, StringDecoder.class, StringDecoder.class, kafkaParam, topic);

JavaDStream<String> flatLine = line.flatMap(new FlatMapFunction<Tuple2<String, String>, String>() {

@Override

public Iterator<String> call(Tuple2<String, String> tuple2) throws Exception {

return Arrays.asList(tuple2._2.split(" ")).iterator();

}

});

JavaPairDStream<String, Integer> pair = flatLine.mapToPair(new PairFunction<String, String, Integer>() {

@Override

public Tuple2<String, Integer> call(String s) throws Exception {

return new Tuple2<String, Integer>(s, 1);

}

});

JavaPairDStream<String, Integer> count = pair.reduceByKey(new Function2<Integer, Integer, Integer>() {

@Override

public Integer call(Integer integer, Integer integer2) throws Exception {

return integer + integer2;

}

});

count.print();

return jsc;

}

}

这次我们启动的时候会发现先从checkpoint中恢复数据, 从上次宕机的数据开始读取并执行。但是,当我们更改功能时,发现新修改的部分没有执行, 还是执行的上次保存的代码。。。。。。。

这时候可以把offset 保存至zookeeper中

主方法

import com.pl.data.offset.getoffset.GetTopicOffsetFromKafkaBroker;

import com.pl.data.offset.getoffset.GetTopicOffsetFromZookeeper;

import kafka.common.TopicAndPartition;

import org.apache.log4j.Logger;

import org.apache.spark.streaming.api.java.JavaStreamingContext;

import java.util.Map;

public class UseZookeeperManageOffset {

/** * 使用log4j打印日志,“UseZookeeper.class” 设置日志的产生类 */

static final Logger logger = Logger.getLogger(UseZookeeperManageOffset.class);

public static void main(String[] args) throws InterruptedException {

/** * 从kafka集群中得到topic每个分区中生产消息的最大偏移量位置 */

Map<TopicAndPartition, Long> topicOffsets = GetTopicOffsetFromKafkaBroker.getTopicOffsets("node1:9092,node2:9092,node3:9092", "t0315");

/** * 从zookeeper中获取当前topic每个分区 consumer 消费的offset位置 */

Map<TopicAndPartition, Long> consumerOffsets =

GetTopicOffsetFromZookeeper.getConsumerOffsets("node1:2181,node2:2181,node3:2181","pl","t0315");

/** * 合并以上得到的两个offset , * 思路是: * 如果zookeeper中读取到consumer的消费者偏移量,那么就zookeeper中当前的offset为准。 * 否则,如果在zookeeper中读取不到当前消费者组消费当前topic的offset,就是当前消费者组第一次消费当前的topic, * offset设置为topic中消息的最大位置。 */

if(null!=consumerOffsets && consumerOffsets.size()>0){

topicOffsets.putAll(consumerOffsets);

}

/** * 如果将下面的代码解开,是将topicOffset 中当前topic对应的每个partition中消费的消息设置为0,就是从头开始。 */

/*for(Map.Entry item:topicOffsets.entrySet()){ item.setValue(0l); }*/

/** * 构建SparkStreaming程序,从当前的offset消费消息 */

JavaStreamingContext jsc = SparkStreamingDirect.getStreamingContext(topicOffsets,"pl");

jsc.start();

jsc.awaitTermination();

jsc.close();

}

}

获取kafka中当前的offset 偏移量(kafka API)

import kafka.api.PartitionOffsetRequestInfo;

import kafka.cluster.Broker;

import kafka.common.TopicAndPartition;

import kafka.javaapi.OffsetRequest;

import kafka.javaapi.OffsetResponse;

import kafka.javaapi.PartitionMetadata;

import kafka.javaapi.TopicMetadata;

import kafka.javaapi.TopicMetadataRequest;

import kafka.javaapi.TopicMetadataResponse;

import kafka.javaapi.consumer.SimpleConsumer;

/** * 测试之前需要启动kafka * @author root * */

public class GetTopicOffsetFromKafkaBroker {

public static void main(String[] args) {

Map<TopicAndPartition, Long> topicOffsets = getTopicOffsets("node1:9092,node2:9092,node3:9092", "t0315");

Set<Entry<TopicAndPartition, Long>> entrySet = topicOffsets.entrySet();

for(Entry<TopicAndPartition, Long> entry : entrySet) {

TopicAndPartition topicAndPartition = entry.getKey();

Long offset = entry.getValue();

String topic = topicAndPartition.topic();

int partition = topicAndPartition.partition();

System.out.println("topic = "+topic+",partition = "+partition+",offset = "+offset);

}

}

/** * 从kafka集群中得到当前topic,生产者在每个分区中生产消息的偏移量位置 * @param KafkaBrokerServer * @param topic * @return */

public static Map<TopicAndPartition,Long> getTopicOffsets(String KafkaBrokerServer, String topic){

Map<TopicAndPartition,Long> retVals = new HashMap<TopicAndPartition,Long>();

// 遍历kafka集群,并拆分

for(String broker:KafkaBrokerServer.split(",")){

SimpleConsumer simpleConsumer = new SimpleConsumer(broker.split(":")[0],Integer.valueOf(broker.split(":")[1]), 64*10000,1024,"consumer");

TopicMetadataRequest topicMetadataRequest = new TopicMetadataRequest(Arrays.asList(topic));

TopicMetadataResponse topicMetadataResponse = simpleConsumer.send(topicMetadataRequest);

List<TopicMetadata> topicMetadataList = topicMetadataResponse.topicsMetadata();

// 遍历每个topic下的元数据

for (TopicMetadata metadata : topicMetadataList) {

// 遍历元数据下的分区

for (PartitionMetadata part : metadata.partitionsMetadata()) {

Broker leader = part.leader();

if (leader != null) {

TopicAndPartition topicAndPartition = new TopicAndPartition(topic, part.partitionId());

PartitionOffsetRequestInfo partitionOffsetRequestInfo = new PartitionOffsetRequestInfo(kafka.api.OffsetRequest.LatestTime(), 10000);

OffsetRequest offsetRequest = new OffsetRequest(ImmutableMap.of(topicAndPartition, partitionOffsetRequestInfo), kafka.api.OffsetRequest.CurrentVersion(), simpleConsumer.clientId());

OffsetResponse offsetResponse = simpleConsumer.getOffsetsBefore(offsetRequest);

if (!offsetResponse.hasError()) {

long[] offsets = offsetResponse.offsets(topic, part.partitionId());

retVals.put(topicAndPartition, offsets[0]);

}

}

}

}

simpleConsumer.close();

}

return retVals;

}

}

获取zookeeper中上次的消费的offset

import java.util.HashMap;

import java.util.List;

import java.util.Map;

import java.util.Map.Entry;

import java.util.Set;

import org.apache.curator.framework.CuratorFramework;

import org.apache.curator.framework.CuratorFrameworkFactory;

import org.apache.curator.retry.RetryUntilElapsed;

import com.fasterxml.jackson.databind.ObjectMapper;

import kafka.common.TopicAndPartition;

public class GetTopicOffsetFromZookeeper {

public static Map<TopicAndPartition,Long> getConsumerOffsets(String zkServers,String groupID, String topic) {

Map<TopicAndPartition,Long> retVals = new HashMap<TopicAndPartition,Long>();

// 连接 zookeeper

ObjectMapper objectMapper = new ObjectMapper();

CuratorFramework curatorFramework = CuratorFrameworkFactory.builder()

.connectString(zkServers).connectionTimeoutMs(1000)

.sessionTimeoutMs(10000).retryPolicy(new RetryUntilElapsed(1000, 1000)).build();

curatorFramework.start();

try{

String nodePath = "/consumers/"+groupID+"/offsets/" + topic;

if(curatorFramework.checkExists().forPath(nodePath)!=null){

List<String> partitions=curatorFramework.getChildren().forPath(nodePath);

for(String partiton:partitions){

int partitionL=Integer.valueOf(partiton);

Long offset=objectMapper.readValue(curatorFramework.getData().forPath(nodePath+"/"+partiton),Long.class);

TopicAndPartition topicAndPartition=new TopicAndPartition(topic,partitionL);

retVals.put(topicAndPartition, offset);

}

}

}catch(Exception e){

e.printStackTrace();

}

curatorFramework.close();

return retVals;

}

public static void main(String[] args) {

Map<TopicAndPartition, Long> consumerOffsets = getConsumerOffsets("node1:2181,node2:2181,node3:2181","pl","t0315");

Set<Entry<TopicAndPartition, Long>> entrySet = consumerOffsets.entrySet();

for(Entry<TopicAndPartition, Long> entry : entrySet) {

TopicAndPartition topicAndPartition = entry.getKey();

String topic = topicAndPartition.topic();

int partition = topicAndPartition.partition();

Long offset = entry.getValue();

System.out.println("topic = "+topic+",partition = "+partition+",offset = "+offset);

}

}

}

读取kafka中指定offset开始的消息

import com.fasterxml.jackson.databind.ObjectMapper;

import kafka.common.TopicAndPartition;

import kafka.message.MessageAndMetadata;

import kafka.serializer.StringDecoder;

import org.apache.curator.framework.CuratorFramework;

import org.apache.curator.framework.CuratorFrameworkFactory;

import org.apache.curator.retry.RetryUntilElapsed;

import org.apache.spark.SparkConf;

import org.apache.spark.api.java.JavaRDD;

import org.apache.spark.api.java.function.Function;

import org.apache.spark.api.java.function.VoidFunction;

import org.apache.spark.streaming.Durations;

import org.apache.spark.streaming.api.java.JavaDStream;

import org.apache.spark.streaming.api.java.JavaInputDStream;

import org.apache.spark.streaming.api.java.JavaStreamingContext;

import org.apache.spark.streaming.kafka.HasOffsetRanges;

import org.apache.spark.streaming.kafka.KafkaUtils;

import org.apache.spark.streaming.kafka.OffsetRange;

import java.util.HashMap;

import java.util.Map;

import java.util.concurrent.atomic.AtomicReference;

public class SparkStreamingDirect {

public static JavaStreamingContext getStreamingContext(Map<TopicAndPartition, Long> topicOffsets,final String groupID){

SparkConf conf = new SparkConf().setMaster("local[*]").setAppName("SparkStreamingOnKafkaDirect");

conf.set("spark.streaming.kafka.maxRatePerPartition", "10");

JavaStreamingContext jsc = new JavaStreamingContext(conf, Durations.seconds(5));

// jsc.checkpoint("/checkpoint");

Map<String, String> kafkaParams = new HashMap<String, String>();

kafkaParams.put("metadata.broker.list","node1:9092,node2:9092,node3:9092");

// kafkaParams.put("group.id","MyFirstConsumerGroup");

for(Map.Entry<TopicAndPartition,Long> entry:topicOffsets.entrySet()){

System.out.println(entry.getKey().topic()+"\t"+entry.getKey().partition()+"\t"+entry.getValue());

}

JavaInputDStream<String> message = KafkaUtils.createDirectStream(

jsc,

String.class,

String.class,

StringDecoder.class,

StringDecoder.class,

String.class,

kafkaParams,

topicOffsets,

new Function<MessageAndMetadata<String,String>,String>() {

private static final long serialVersionUID = 1L;

public String call(MessageAndMetadata<String, String> v1)throws Exception {

return v1.message();

}

}

);

final AtomicReference<OffsetRange[]> offsetRanges = new AtomicReference<>();

JavaDStream<String> lines = message.transform(new Function<JavaRDD<String>, JavaRDD<String>>() {

private static final long serialVersionUID = 1L;

@Override

public JavaRDD<String> call(JavaRDD<String> rdd) throws Exception {

OffsetRange[] offsets = ((HasOffsetRanges) rdd.rdd()).offsetRanges();

offsetRanges.set(offsets);

return rdd;

}

}

);

message.foreachRDD(new VoidFunction<JavaRDD<String>>(){

/** * */

private static final long serialVersionUID = 1L;

@Override

public void call(JavaRDD<String> t) throws Exception {

ObjectMapper objectMapper = new ObjectMapper();

CuratorFramework curatorFramework = CuratorFrameworkFactory.builder()

.connectString("node1:2181,node2:2181,node3:2181").connectionTimeoutMs(1000)

.sessionTimeoutMs(10000).retryPolicy(new RetryUntilElapsed(1000, 1000)).build();

curatorFramework.start();

for (OffsetRange offsetRange : offsetRanges.get()) {

long fromOffset = offsetRange.fromOffset();

long untilOffset = offsetRange.untilOffset();

final byte[] offsetBytes = objectMapper.writeValueAsBytes(offsetRange.untilOffset());

String nodePath = "/consumers/"+groupID+"/offsets/" + offsetRange.topic()+ "/" + offsetRange.partition();

System.out.println("nodePath = "+nodePath);

System.out.println("fromOffset = "+fromOffset+",untilOffset="+untilOffset);

if(curatorFramework.checkExists().forPath(nodePath)!=null){

curatorFramework.setData().forPath(nodePath,offsetBytes);

}else{

curatorFramework.create().creatingParentsIfNeeded().forPath(nodePath, offsetBytes);

}

}

curatorFramework.close();

}

});

lines.print();

return jsc;

}

}