A neural network built in Python: 98% recognition rate on MNIST handwritten digits

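Over the weekend, following Tariq Rashid's guidance, I wrote a three-layer neural network in plain Python, without frameworks such as TensorFlow, and applied it to MNIST handwritten digit recognition. After tuning several parameters, the recognition rate reached 98%. Tuning is difficult and the experience is valuable, so I am recording it here before I forget it.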

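Contents

1. Loading the required libraries

2. Defining the neural network class

3. Creating the network object and training it on the MNIST training set

4. Measuring accuracy on the test set

5. Notes on parameter tuning

6. Testing recognition of my own hand-drawn digit images

7. Extra optimization: additional training on rotated images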

Detailed walkthrough

1. Loading the required libraries

```python
# Code for a 3-layer neural network, and code for learning the MNIST dataset
# 2018.8, studying how to write a neural network in Python
# license is GPLv2

import numpy
# scipy.special for the sigmoid function expit()
import scipy.special
import matplotlib.pyplot
# ensure the plots are inside this Jupyter notebook, not an external window
%matplotlib inline
# helper to load data from PNG image files
import imageio
# glob helps select multiple files using patterns
import glob
```

2. Defining the neural network class
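
The `train` method below performs one step of gradient descent. Its hidden-to-output update multiplies `output_errors * final_outputs * (1.0 - final_outputs)` by the transposed hidden outputs. That product is the negative gradient of the squared output error. The `final_outputs * (1.0 - final_outputs)` factor is the sigmoid derivative σ'(x) = σ(x)(1 − σ(x)). The sketch below checks this claim numerically on small random values. It assumes the loss is half the squared error; any other constant factor would only rescale the learning rate. The names `sigmoid` and `loss` are illustrative and are not part of the original code. `scipy.special.expit`, which the class uses, computes the same logistic function.

```python
import numpy as np


def sigmoid(x):
    # logistic function 1 / (1 + e^-x), the same values scipy.special.expit returns
    return 1.0 / (1.0 + np.exp(-x))


def loss(who, hidden_outputs, targets):
    # assumed loss: half the squared error of the output layer
    final_outputs = sigmoid(who @ hidden_outputs)
    return 0.5 * np.sum((targets - final_outputs) ** 2)


rng = np.random.default_rng(0)
hidden_outputs = rng.uniform(0.01, 0.99, size=(4, 1))  # toy hidden-layer outputs (column vector)
targets = np.array([[0.01], [0.99], [0.01]])           # toy targets for 3 output nodes
who = rng.normal(0.0, 0.5, size=(3, 4))                # toy hidden->output weights

# the term train() multiplies by the learning rate when updating self.who
final_outputs = sigmoid(who @ hidden_outputs)
output_errors = targets - final_outputs
update_direction = np.dot(output_errors * final_outputs * (1.0 - final_outputs), hidden_outputs.T)

# central-difference estimate of d(loss)/d(who), one weight at a time
eps = 1e-6
numeric_grad = np.zeros_like(who)
for j in range(who.shape[0]):
    for k in range(who.shape[1]):
        w_plus = who.copy()
        w_plus[j, k] += eps
        w_minus = who.copy()
        w_minus[j, k] -= eps
        numeric_grad[j, k] = (loss(w_plus, hidden_outputs, targets)
                              - loss(w_minus, hidden_outputs, targets)) / (2 * eps)

# update_direction should equal -gradient, so `who += lr * update_direction` is gradient descent
print(np.max(np.abs(update_direction + numeric_grad)))
```

The same reasoning applies to the input-to-hidden update. The only difference is that the hidden-layer error is the output error propagated back through `who.T`.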

```python
# neural network class definition (3 layers)
class neuralNetwork:

    # initialise the neural network
    def __init__(self, inputnodes, hiddennodes, outputnodes, learningrate):
        # set number of nodes in each input, hidden, output layer
        self.inodes = inputnodes
        self.hnodes = hiddennodes
        self.onodes = outputnodes

        # learning rate
        self.lr = learningrate

        # link weight matrices, wih and who
        # weights inside the arrays are w_i_j, where link is from node i to node j in the next layer
        # w11 w21
        # w12 w22 etc
        self.wih = numpy.random.normal(0.0, pow(self.hnodes, -0.5), (self.hnodes, self.inodes))
        self.who = numpy.random.normal(0.0, pow(self.onodes, -0.5), (self.onodes, self.hnodes))

        # activation function is the sigmoid function
        self.activation_function = lambda x: scipy.special.expit(x)

    # train the neural network
    def train(self, inputs_list, targets_list):
        # convert inputs list to 2d array (column vectors)
        inputs = numpy.array(inputs_list, ndmin=2).T
        targets = numpy.array(targets_list, ndmin=2).T

        # calculate signals into hidden layer
        hidden_inputs = numpy.dot(self.wih, inputs)
        # calculate the signals emerging from hidden layer
        hidden_outputs = self.activation_function(hidden_inputs)

        # calculate signals into final output layer
        final_inputs = numpy.dot(self.who, hidden_outputs)
        # calculate the signals emerging from final output layer
        final_outputs = self.activation_function(final_inputs)

        # output layer error is the (target - actual)
        output_errors = targets - final_outputs
        # hidden layer error is the output_errors, split by weights, recombined at hidden nodes
        hidden_errors = numpy.dot(self.who.T, output_errors)

        # update the weights for the links between the hidden and output layers
        self.who += self.lr * numpy.dot((output_errors * final_outputs * (1.0 - final_outputs)), numpy.transpose(hidden_outputs))
        # update the weights for the links between the input and hidden layers
        self.wih += self.lr * numpy.dot((hidden_errors * hidden_outputs * (1.0 - hidden_outputs)), numpy.transpose(inputs))

    # query the neural network
    def query(self, inputs_list):
        # convert inputs list to 2d array (column vector)
        inputs = numpy.array(inputs_list, ndmin=2).T

        # calculate signals into hidden layer
        hidden_inputs = numpy.dot(self.wih, inputs)
        # calculate the signals emerging from hidden layer
        hidden_outputs = self.activation_function(hidden_inputs)

        # calculate signals into final output layer
        final_inputs = numpy.dot(self.who, hidden_outputs)
        # calculate the signals emerging from final output layer
        final_outputs = self.activation_function(final_inputs)

        return final_outputs
```

3. Creating the network object and training it on the MNIST training set
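
Each line of `mnist_train.csv` holds the label followed by 784 pixel values from 0 to 255. The training loop below does two things with each line:

- It rescales the pixels to the range 0.01 to 1.0.
- It builds a 10-element target vector filled with 0.01, with 0.99 at the correct digit.

The standalone sketch below isolates that encoding so it can be checked without the dataset. `encode_record` is a hypothetical helper name. `numpy.asarray(..., dtype=float)` replaces `numpy.asfarray`, which was removed in NumPy 2.0.

```python
import numpy as np

OUTPUT_NODES = 10  # one output node per digit 0..9


def encode_record(record, output_nodes=OUTPUT_NODES):
    """Turn one 'label,p1,...,p784' CSV line into (inputs, targets) for the network."""
    all_values = record.strip().split(',')
    # pixels 0..255 -> 0.01..1.0: no input is exactly 0, since a zero input
    # would make the update for its weights zero
    inputs = np.asarray(all_values[1:], dtype=float) / 255.0 * 0.99 + 0.01
    # targets of 0.01 / 0.99 rather than 0 / 1: the sigmoid never actually reaches 0 or 1
    targets = np.zeros(output_nodes) + 0.01
    targets[int(all_values[0])] = 0.99
    return inputs, targets


# a made-up record: label 7, one fully inked pixel, 783 blank pixels
fake_record = '7,' + ','.join(['255'] + ['0'] * 783) + '\n'
inputs, targets = encode_record(fake_record)
print(inputs.shape, inputs.min(), inputs.max(), targets.argmax())
```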

```python
# number of input, hidden and output nodes
# 28 * 28 = 784
input_nodes = 784
hidden_nodes = 200
output_nodes = 10

# learning rate is 0.1
learning_rate = 0.1

# create instance of neural network
n = neuralNetwork(input_nodes, hidden_nodes, output_nodes, learning_rate)

# load the mnist training data csv file into a list
with open("mnist_dataset/mnist_train.csv", 'r') as training_data_file:
    training_data_list = training_data_file.readlines()

# train the neural network
# epochs is the number of times the training data set is used for training
epochs = 5

for e in range(epochs):
    # go through all records in the training data set
    for record in training_data_list:
        all_values = record.split(',')
        # scale and shift the inputs from 0..255 to 0.01..1.0
        # (numpy.asarray with dtype=float replaces numpy.asfarray, removed in NumPy 2.0)
        inputs = (numpy.asarray(all_values[1:], dtype=float) / 255.0 * 0.99) + 0.01
        # create the target output values (all 0.01, except the desired label which is 0.99)
        targets = numpy.zeros(output_nodes) + 0.01
        # all_values[0] is the target label for this record
        targets[int(all_values[0])] = 0.99
        n.train(inputs, targets)
```

4. Measuring accuracy on the test set

# test the neural network

# load the mnist test data csv file to a list

test_data_file = open("mnist_dataset/mnist_test.csv",'r')

test_data_list = test_data_file.readlines()

test_data_file.close()

# scorecard for how well the network performs,initially empty

scorecard = []

# go through all records in the test data set

for record in test_data_list:

all_values = record.split(',')

# correct answer is first value

correct_label = int(all_values[0])

# scale and shift the inputs

inputs = (numpy.asfarray(all_values[1:]) / 255.0 * 0.99) + 0.01

# query the network

outputs = n.query(inputs)

# the index of the highest value corresponds to the label

label = numpy.argmax(outputs)

# print("Answer label is:",correct_label," ; ",label," is network's answer")

# append correct or incorrect to list

if(label == correct_label):

# network's answer matches correct answer, add 1 to scorecard

scorecard.append(1)

else:

scorecard.append(0)

pass

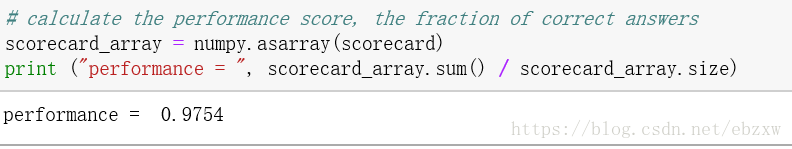

# calculate the performance score ,the fraction of correct answers

scorecard_array = numpy.asarray(scorecard)

print("performance = ", scorecard_array.sum() / scorecard_array.size )
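
The loop above queries the network one record at a time. Since `query` is just two matrix products, the whole test set can be scored in one pass by stacking the scaled images as columns. The sketch below makes these assumptions:

- The images are already in an `(n_samples, 784)` array, scaled like the training data.
- The labels are in an integer array.
- A plain NumPy sigmoid stands in for `scipy.special.expit`.

`batch_accuracy` is a hypothetical helper that takes the trained network's `wih` and `who` matrices. The toy check compares its output with per-record queries, using random weights and data in place of MNIST.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def batch_accuracy(wih, who, images, labels):
    """Score a 3-layer sigmoid network on a whole batch at once.

    images: (n_samples, 784) array, already scaled to 0.01..1.0
    labels: (n_samples,) array of correct digits
    """
    hidden_outputs = sigmoid(wih @ images.T)        # (hidden_nodes, n_samples)
    final_outputs = sigmoid(who @ hidden_outputs)   # (output_nodes, n_samples)
    predictions = np.argmax(final_outputs, axis=0)  # strongest output node per sample
    return np.mean(predictions == labels), predictions


# toy check: random weights and random "images" instead of MNIST
rng = np.random.default_rng(1)
wih = rng.normal(0.0, 200 ** -0.5, size=(200, 784))
who = rng.normal(0.0, 10 ** -0.5, size=(10, 200))
images = rng.uniform(0.01, 1.0, size=(50, 784))
labels = rng.integers(0, 10, size=50)

accuracy, predictions = batch_accuracy(wih, who, images, labels)

# the same predictions, one record at a time, as the loop above computes them
per_record = [int(np.argmax(sigmoid(who @ sigmoid(wih @ x.reshape(784, 1))))) for x in images]
print(accuracy, predictions.tolist() == per_record)
```

With the trained network it would be called as `batch_accuracy(n.wih, n.who, test_images, test_labels)`. Here `test_images` and `test_labels` are hypothetical arrays built from `test_data_list`.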

5. Notes on parameter tuning

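The code's input and output value ranges were designed around the S-shaped (sigmoid) activation function: inputs are scaled to 0.01 to 1.0, and targets are 0.01 and 0.99. For that reason, tuning left the activation function unchanged.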

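Parameter changes that made a noticeable difference:

| Learning rate | Epochs | Hidden nodes | Test accuracy | Notes |
|---|---|---|---|---|
| 0.3 | 1 | 100 | 0.9473 | Initial rule-of-thumb setting; already works fairly well. |
| 0.6 | 1 | 100 | 0.9047 | Raising the learning rate to 0.6 lowers test accuracy. The large learning rate seems to make gradient descent bounce back and forth and overshoot. |
| 0.1 | 1 | 100 | 0.9502 | Lowering the learning rate to 0.1 improves accuracy. |
| 0.01 | 1 | 100 | 0.9241 | An even lower learning rate is worse. The step size is probably too small, which slows gradient descent. |
| 0.2 | 1 | 100 | 0.9515 | 0.2 is the best learning rate for a single epoch. |
| 0.2 | 5 | 100 | 0.9611 | 5 to 7 epochs is a good rule of thumb; test accuracy rises to 96.11%. |
| 0.1 | 5 | 100 | 0.9653 | With more epochs, the learning rate can be lowered somewhat, and the network performs better. |
| 0.1 | 5 | 200 | 0.9723 | More hidden nodes give the network more learning capacity. |
| 0.1 | 5 | 500 | 0.9751 | This result is already very good! |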
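
The learning-rate rows above show both failure modes: 0.6 did worse than 0.1 to 0.3, and so did 0.01. A one-dimensional toy makes the pattern concrete. The sketch below runs plain gradient descent on f(w) = w², whose gradient is 2w. This parabola is an assumed stand-in, not the network's actual loss. Each step computes w ← (1 − 2·lr)·w, which gives four regimes:

- A tiny rate shrinks w slowly.
- A moderate rate converges quickly.
- A rate above 0.5 makes w flip sign on every step (overshoot).
- A rate above 1 diverges.

These thresholds belong to this parabola only and do not carry over to the MNIST network.

```python
def gradient_descent(learning_rate, steps=20, w0=1.0):
    """Minimise f(w) = w**2 (gradient 2*w) from w0 and return every iterate."""
    w = w0
    path = [w]
    for _ in range(steps):
        w = w - learning_rate * 2.0 * w  # one gradient-descent step
        path.append(w)
    return path


for lr in (0.01, 0.4, 0.9, 1.1):
    path = gradient_descent(lr)
    # count steps where w jumped over the minimum at 0
    flips = sum(1 for a, b in zip(path, path[1:]) if a * b < 0)
    print(f"lr={lr}: |w| after 20 steps = {abs(path[-1]):.3g}, sign flips = {flips}")
```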

6. Testing recognition of my own hand-drawn digit images (28×28)

```python
# test whether the network can recognize my own hand-drawn 28*28 PNG images

# our own image test data set
our_own_dataset = []

# load the png image data as test data set
# (sorted so that the file order, and therefore `item` below, is reproducible)
for image_file_name in sorted(glob.glob('my_own_images/2828_my_own_?.png')):
    # use the filename to set the correct label (the digit just before ".png")
    label = int(image_file_name[-5:-4])
    # load image data from png files into an array
    print("loading ... ", image_file_name)
    img_array = imageio.imread(image_file_name, as_gray=True)
    # reshape from 28x28 to list of 784 values, invert values
    img_data = 255.0 - img_array.reshape(784)
    # then scale data to range from 0.01 to 1.0
    img_data = (img_data / 255.0 * 0.99) + 0.01
    print(numpy.min(img_data))
    print(numpy.max(img_data))
    # append label and image data to test data set
    record = numpy.append(label, img_data)
    our_own_dataset.append(record)

# test the neural network with our own images
# record to test
item = 2

# plot image
matplotlib.pyplot.imshow(our_own_dataset[item][1:].reshape(28, 28), cmap='Greys', interpolation='none')

# correct answer is first value
correct_label = our_own_dataset[item][0]
# data is remaining values
inputs = our_own_dataset[item][1:]

# query the network
outputs = n.query(inputs)
print(outputs)

# the index of the highest value corresponds to the label
label = numpy.argmax(outputs)
print("network says ", label)

# check whether the network's answer is correct
if label == correct_label:
    print("Good, match!")
else:
    print("no match!")
```

The result looks like this:

With everything above in place, the best accuracy reached 97.5%, which is quite good!
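
The preprocessing applied to the hand-drawn images above has one step that the MNIST CSV files do not need: inversion. MNIST digits are light strokes (high values) on a black (0) background. A drawing saved from a paint program is usually dark ink on white. The code therefore subtracts each pixel from 255 before applying the same 0.01 to 1.0 scaling as the training data. The sketch below isolates both steps, plus the filename-to-label rule, as standalone functions that can be checked on a synthetic 28×28 array. The helper names are hypothetical. As in the original code, the input must already be a 28×28 greyscale image.

```python
import numpy as np


def label_from_filename(filename):
    """'my_own_images/2828_my_own_3.png' -> 3: the single character before '.png'."""
    return int(filename[-5:-4])


def preprocess_drawing(img_array):
    """28x28 greyscale drawing (dark ink on white, values 0..255) -> 784 network inputs."""
    # invert: MNIST stores ink as high values on a 0 (black) background
    img_data = 255.0 - np.asarray(img_array, dtype=float).reshape(784)
    # same scaling as the training data: 0..255 -> 0.01..1.0
    return img_data / 255.0 * 0.99 + 0.01


# synthetic drawing: white page with a black vertical stroke in column 14
drawing = np.full((28, 28), 255, dtype=np.uint8)
drawing[4:24, 14] = 0
vec = preprocess_drawing(drawing)
print(label_from_filename('my_own_images/2828_my_own_3.png'), vec.shape, vec.min(), vec.max())
```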

7. Extra optimization: additional training on rotated images (as a rule of thumb, rotated 10 degrees to the left and to the right)

Add training on rotated copies of each image to the training step, as in the second half of the loop below:

```python
# make sure scipy.ndimage (which provides rotate) is imported
import scipy.ndimage

# train the neural network
# epochs is the number of times the training data set is used for training
epochs = 10

for e in range(epochs):
    # go through all records in the training data set
    for record in training_data_list:
        # split the record by the ',' commas
        all_values = record.split(',')
        # scale and shift the inputs
        inputs = (numpy.asarray(all_values[1:], dtype=float) / 255.0 * 0.99) + 0.01
        # create the target output values (all 0.01, except the desired label which is 0.99)
        targets = numpy.zeros(output_nodes) + 0.01
        # all_values[0] is the target label for this record
        targets[int(all_values[0])] = 0.99
        n.train(inputs, targets)

        ## create rotated variations
        # rotated anticlockwise by 10 degrees
        inputs_plusx_img = scipy.ndimage.rotate(inputs.reshape(28, 28), 10, cval=0.01, order=1, reshape=False)
        n.train(inputs_plusx_img.reshape(784), targets)
        # rotated clockwise by 10 degrees
        inputs_minusx_img = scipy.ndimage.rotate(inputs.reshape(28, 28), -10, cval=0.01, order=1, reshape=False)
        n.train(inputs_minusx_img.reshape(784), targets)
```

After increasing the number of epochs to 10 and adding training on the rotated images, the network reached 97.9% accuracy on the test set, which is very good!
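
The augmentation step can be pulled out into a small helper to check what the rotated copies look like before spending 10 epochs on training. The sketch below uses `scipy.ndimage.rotate`; the `scipy.ndimage.interpolation` path used in older code is deprecated. It uses the same settings as the training loop:

- `cval=0.01` fills the corners exposed by rotation with the blank input value.
- `order=1` selects linear interpolation.
- `reshape=False` keeps the output at 28×28.

`rotated_variants` is a hypothetical name.

```python
import numpy as np
import scipy.ndimage


def rotated_variants(inputs, angle=10.0):
    """Return copies of a 784-value input vector rotated by +angle and -angle degrees."""
    img = inputs.reshape(28, 28)
    # cval=0.01: blank value for exposed corners; order=1: linear interpolation;
    # reshape=False: keep the output at 28x28
    plus = scipy.ndimage.rotate(img, angle, cval=0.01, order=1, reshape=False)
    minus = scipy.ndimage.rotate(img, -angle, cval=0.01, order=1, reshape=False)
    return plus.reshape(784), minus.reshape(784)


# synthetic input: blank background (0.01) with a horizontal bar through the centre
img = np.full((28, 28), 0.01)
img[13:15, 4:24] = 1.0
inputs = img.reshape(784)

plus, minus = rotated_variants(inputs)
print(plus.shape, minus.shape, plus.min(), plus.max())
```

Linear interpolation only blends neighbouring values, so the rotated pixels stay within the 0.01 to 1.0 range the network was designed for. Higher spline orders can overshoot that range slightly.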