TensorFlow activation functions: elu, exponential

```python
#!/usr/bin/env python3
# -*- coding:UTF-8 -*-
import numpy as np
import tensorflow as tf

# Optional: wrap the Keras session in the TF debugger CLI
# from tensorflow.python import debug as tf_debug
# tf.keras.backend.set_session(tf_debug.LocalCLIDebugWrapperSession(tf.Session()))

# XOR-style toy data: the label is 1 when exactly one input is 1
x_train = np.array([[1, 1], [0, 0], [0, 1], [1, 0]])
y_train = np.array([0, 0, 1, 1])

# A stack of Dense layers, each using a different activation function
model = tf.keras.models.Sequential([
    tf.keras.layers.Dense(2, input_shape=(2,), activation='sigmoid', use_bias=True),
    tf.keras.layers.Dense(3, activation='relu'),
    tf.keras.layers.Dense(3, activation='elu'),
    tf.keras.layers.Dense(3, activation='softplus'),
    tf.keras.layers.Dense(3, activation='linear'),
    tf.keras.layers.Dense(3, activation='exponential'),
    tf.keras.layers.Dense(3, activation='selu'),
    tf.keras.layers.Dense(1, activation='tanh', use_bias=True),
])

model.compile(optimizer='adam',
              loss='mean_squared_error',
              metrics=['binary_accuracy'])

model.fit(x_train, y_train, epochs=5000)

# Save to HDF5, then reload to check that the model round-trips
model.save('/home/a/my_minis.h5')
new_model = tf.keras.models.load_model('/home/a/my_minis.h5')

print(new_model.predict(x_train))          # raw tanh outputs
print(new_model.predict_classes(x_train))  # TF 1.x Keras API; removed in later TF 2.x releases
```

Output:

```
loss: 3.1563e-05 - binary_accuracy: 1.0000
Epoch 5000/5000
4/4 [==============================] - 0s 808us/sample - loss: 3.1545e-05 - binary_accuracy: 1.0000
[[2.9830632e-04]
 [1.9936262e-04]
 [9.9207312e-01]
 [9.9205357e-01]]
[[0]
 [0]
 [1]
 [1]]
```
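The printed scores are raw `tanh` outputs, and `predict_classes` turns them into labels. As far as I know, for a single-unit output the TF 1.x Keras `Sequential.predict_classes` returns 1 where the score is above 0.5. The `binary_accuracy` metric compares the rounded score with the label. Below is a pure-Python sketch of that logic applied to the numbers printed above. It is not the Keras source, and `to_classes` and `binary_accuracy` are hypothetical helpers. `predict_classes` was removed in later TF 2.x releases, where `(model.predict(x) > 0.5).astype("int32")` is the usual replacement.

```python
# Sketch (assumption): mimics predict_classes / binary_accuracy for a 1-unit output.
# The scores are copied from the printed predictions above.
predictions = [2.9830632e-04, 1.9936262e-04, 9.9207312e-01, 9.9205357e-01]
y_true = [0, 0, 1, 1]


def to_classes(scores, threshold=0.5):
    """Hypothetical helper: label a score 1 if it exceeds the threshold, else 0."""
    return [1 if s > threshold else 0 for s in scores]


def binary_accuracy(labels, scores):
    """Hypothetical helper: fraction of samples whose rounded score equals the label."""
    hits = sum(1 for t, s in zip(labels, scores) if t == round(s))
    return hits / len(labels)


classes = to_classes(predictions)
print(classes)                               # [0, 0, 1, 1]
print(binary_accuracy(y_true, predictions))  # 1.0
```

A tanh output can be negative, so the threshold test (rather than rounding alone) is what keeps every label at 0 or 1.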

--------------------------------------------------------------

https://www.tensorflow.org/api_docs/python/tf/keras/activations

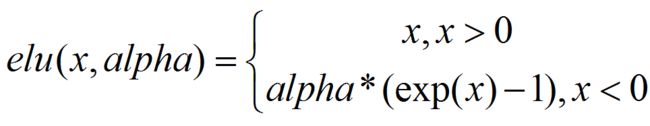

tf.keras.activations.elu

```python
tf.keras.activations.elu(
    x,
    alpha=1.0
)
```
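To make the role of `alpha` in the signature concrete, here is a scalar pure-Python sketch of ELU. It returns `x` for positive inputs and `alpha * (exp(x) - 1)` otherwise, so the negative branch saturates at `-alpha`. This is an illustration, not the TensorFlow implementation. Using `math.expm1` instead of `exp(x) - 1` is my own choice, because it stays accurate for inputs near 0.

```python
import math


def elu(x, alpha=1.0):
    """Scalar ELU: x for x > 0, alpha * (exp(x) - 1) otherwise."""
    if x > 0:
        return x
    # math.expm1(x) computes exp(x) - 1 without cancellation error near x = 0
    return alpha * math.expm1(x)


print(elu(1.3))               # 1.3 (positive inputs pass through unchanged)
print(elu(-1.3))              # same value as math.exp(-1.3) - 1, up to rounding
print(elu(-50.0, alpha=2.0))  # very negative inputs approach -alpha
```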

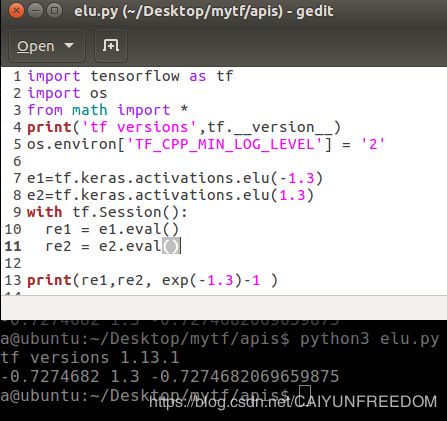

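```python
import os
# Hide TF C++ INFO/WARNING logs; this must be set before TensorFlow is imported
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

from math import exp
import tensorflow as tf

print('tf versions', tf.__version__)

e1 = tf.keras.activations.elu(-1.3)  # x < 0: alpha * (exp(x) - 1), alpha defaults to 1.0
e2 = tf.keras.activations.elu(1.3)   # x > 0: returned unchanged

# TF 1.x graph mode: tensors are evaluated inside a Session
with tf.Session():
    re1 = e1.eval()
    re2 = e2.eval()

print(re1, re2, exp(-1.3) - 1)  # re1 matches exp(-1.3) - 1 up to float32 precision
```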
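The model at the top also uses `'selu'`, which builds directly on ELU. My understanding is that Keras computes `selu(x)` as `scale * elu(x, alpha)` with the two fixed constants below. The pure-Python sketch follows that assumption; it is not the library code.

```python
import math

# Constants assumed to match those used by Keras's selu
SELU_ALPHA = 1.6732632423543772848170429916717
SELU_SCALE = 1.0507009873554804934193349852946


def elu(x, alpha=1.0):
    """Scalar ELU: x for x > 0, alpha * (exp(x) - 1) otherwise."""
    return x if x > 0 else alpha * math.expm1(x)


def selu(x):
    """Scaled ELU: SELU_SCALE * elu(x, SELU_ALPHA)."""
    return SELU_SCALE * elu(x, SELU_ALPHA)


for v in (-1.3, 1.3):
    print(v, elu(v), selu(v))
```

Compared with plain ELU, SELU stretches the positive branch by `scale` and lowers the negative floor to `-scale * alpha`.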

```python
import os
# Hide TF C++ INFO/WARNING logs; this must be set before TensorFlow is imported
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

from math import exp
import tensorflow as tf

print('tf versions', tf.__version__)  # tf versions 1.13.1

# exponential(x) = e**x
e1 = tf.keras.activations.exponential(1.3)
e2 = tf.keras.activations.exponential(0.1)

with tf.Session():
    re1 = e1.eval()
    re2 = e2.eval()

print(re1, re2, exp(1.3), exp(0.1))  # 3.6692965 1.105171 3.6692966676192444 1.1051709180756477
```
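The small gap between `3.6692965` and `3.6692966676192444` comes from TensorFlow computing in float32, the default Keras dtype. The same limited range means `exponential` overflows quickly. Once e**x exceeds the largest finite float32 value, the result becomes `inf`, and that happens for inputs above roughly 88.72. The sketch below computes that threshold in pure Python by decoding the float32 maximum from its bit pattern. The `exponential` function here is a stand-in, not the Keras function.

```python
import math
import struct


def exponential(x):
    """Scalar stand-in for the 'exponential' activation: e**x (float64 here)."""
    return math.exp(x)


# Largest finite float32 value, decoded from its IEEE 754 bit pattern 0x7F7FFFFF
FLOAT32_MAX = struct.unpack('<f', struct.pack('<I', 0x7F7FFFFF))[0]

# Inputs above this value overflow to inf once e**x is stored as float32
overflow_at = math.log(FLOAT32_MAX)

print(exponential(1.3), exponential(0.1))
print(overflow_at)  # roughly 88.72
```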

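```python
import os
# Hide TF C++ INFO/WARNING logs; this must be set before TensorFlow is imported
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

import tensorflow as tf

print('tf versions', tf.__version__)  # tf versions 1.13.1

# linear is the identity: it returns its input as-is, so no Session is needed here
e1 = tf.keras.activations.linear(1.3)
e2 = tf.keras.activations.linear(0.1)

print(e1, e2)  # 1.3 0.1
```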
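Because `linear` is the identity, a `Dense` layer with `activation='linear'` is just an affine map `W @ x + b`. Two such layers in a row, with no nonlinearity between them, collapse into a single affine layer with `W = W2 @ W1` and `b = W2 @ b1 + b2`. The pure-Python sketch below checks this on small integer matrices. `dense`, `matmul` and `matvec` are hypothetical helpers, not Keras APIs. In the model above, the linear layer sits between `softplus` and `exponential`, so it does not collapse that way.

```python
def linear(x):
    """The 'linear' activation: returns its input unchanged."""
    return x


def dense(W, b, x, activation=linear):
    """Hypothetical helper: activation(W @ x + b) with list-based matrices."""
    return [activation(sum(w * xi for w, xi in zip(row, x)) + bi)
            for row, bi in zip(W, b)]


def matmul(A, B):
    """Hypothetical helper: matrix product A @ B."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def matvec(A, v):
    """Hypothetical helper: matrix-vector product A @ v."""
    return [sum(a * vi for a, vi in zip(row, v)) for row in A]


W1, b1 = [[1, 2], [3, 4], [5, 6]], [1, 0, -1]  # layer 1: 2 inputs -> 3 units
W2, b2 = [[1, 0, 2], [0, 1, 1]], [0, 1]        # layer 2: 3 inputs -> 2 units

x = [2, -1]
two_layers = dense(W2, b2, dense(W1, b1, x))

# One equivalent layer: W = W2 @ W1, b = W2 @ b1 + b2
W = matmul(W2, W1)
b = [u + v for u, v in zip(matvec(W2, b1), b2)]
one_layer = dense(W, b, x)

print(two_layers, one_layer)  # [7, 6] [7, 6]
```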

The code is hosted on GitHub:

https://github.com/sofiathefirst/AIcode