Spark SQL简单操作演示(含导出表)

还没装spark看这:spark搭建

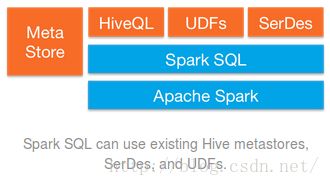

Spark SQL前身是Shark,由于Shark对于Hive的太多依赖制约了Spark的发展,Spark SQL由此产生。

Spark SQL只要在编译的时候引入Hive支持,就可以支持Hive表访问,UDF,SerDe,以及HiveQL/HQL

启动spark-sql

guo@drguo:/opt/spark-1.6.1-bin-hadoop2.6/bin$ spark-sql

16/05/15 21:20:55 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

spark-sql可以像hive一样把写好的sql直接source执行

spark-sql> source /home/guo/1.sql

但速度比hive快的多:Spark SQL与Hive On MapReduce速度比较

1.sql

drop table if exists cainiao;

create external table cainiao(dater bigint, item_id bigint, store_code bigint, qty_alipay_njhs bigint)

row format delimited fields terminated by ','

location '/cainiao';

create table predict as select item_id, store_code, sum(qty_alipay_njhs) as target

from cainiao where dater>=20141228 and dater<=20150110 group by item_id, store_code;

drop table if exists cainiaoq;

create external table cainiaoq(dater bigint, item_id bigint, qty_alipay_njhs bigint)

row format delimited fields terminated by ','

location '/cainiaoq';

create table predictq as select item_id, "all" as store_code, sum(qty_alipay_njhs) as target

from cainiaoq where dater>=20141228 and dater<=20150110 group by item_id;

spark-sql> show tables;

cainiao false

cainiaoq false

predict false

predictq falsespark-sql> insert overwrite local directory '/home/guo/cainiaodiqu'

> row format delimited

> fields terminated by ','

> select * from predict;

Error in query:

Unsupported language features in query: insert overwrite local directory '/home/guo/cainiaodiqu'

row format delimited

fields terminated by ','

select * from predict官方文档:https://spark.apache.org/docs/latest/sql-programming-guide.html

第一个办法(2016.5.26刚想到的)

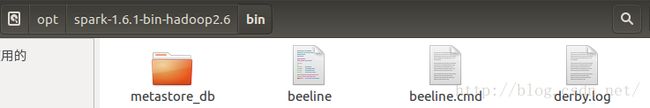

因为hive如果什么都没配,也会用自带的derby存储,也是在哪启动的就存在哪,所以只要在相同目录下启动,在spark-sql里创建的表,hive里当然也有了,当然就可以用上面spark-sql不支持的语句导出了!真是机智如我!!!2016.10.26补充:看一下cdh或者hdp自动部署的配置文件就会发现,spark sql和hive往往会配置使用同一个元数据库。

guo@drguo:/opt/spark-1.6.1-bin-hadoop2.6/bin$ hive

hive> show tables;

OK

cainiao

cainiaoq

ijcai

ijcaitest

ijpredict

predict

predictq

Time taken: 2.136 seconds, Fetched: 7 row(s)第二个办法

启动spark-shell(scala)

guo@drguo:/opt/spark-1.6.1-bin-hadoop2.6/bin$ spark-shell

16/05/15 20:30:07 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/___/ .__/\_,_/_/ /_/\_\ version 1.6.1

/_/

Using Scala version 2.10.5 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_73)

Type in expressions to have them evaluated.

Type :help for more information.

Spark context available as sc.

SQL context available as sqlContext.

scala> sqlContext.sql("show tables").show

+---------+-----------+

|tableName|isTemporary|

+---------+-----------+

| cainiao| false|

| cainiaoq| false|

| predict| false|

| predictq| false|

+---------+-----------+

scala> sqlContext.sql("select * from predict limit 10").show

+-------+----------+------+

|item_id|store_code|target|

+-------+----------+------+

| 33| 2| 1|

| 33| 3| 0|

| 33| 4| 4|

| 33| 5| 1|

| 132| 1| 0|

| 132| 2| 1|

| 132| 3| 1|

| 330| 5| 1|

| 549| 1| 3|

| 549| 2| 2|

+-------+----------+------+

存在hdfs上(/user/guo/下),好像只能存为这三种格式,我试了下txt和csv都不行,如果还能存别的格式请告诉我。

scala> sqlContext.sql("select * from predict ").write.format("json").save("predictj")

scala> sqlContext.sql("select * from predict ").write.format("parquet").save("predictp")

scala> sqlContext.sql("select * from predict ").write.format("orc").save("predicto")