PySpark learning and work notes


DataFrame operations in PySpark

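Understanding Spark SQL: it is a higher-level layer built on top of Spark Core. In practice, that means wrapping a SQLContext around an existing SparkContext. Spark SQL's RDD was called SchemaRDD (it has been named DataFrame since Spark 1.3).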

from pyspark.sql import SQLContext, Row

sqlCtx = SQLContext(sc)

Full official documentation

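- Set up an application

spark = SparkSession.builder.master("local").appName("world num").config(conf = SparkConf()).getOrCreate()

Here master sets the cluster URL to connect to ("local" runs Spark on the local machine) and appName sets the application name.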

- Reading files

- Read a CSV from the local file system (not on the cluster):

spark.read.csv(path, header=True, sep=',')

spark_2 = SparkSession.builder.master("local").appName("read citycode").config(conf = SparkConf()).getOrCreate()

spark_2.read.csv('file:///home/hadoop/xxx/project_0509_khjl/行政区划代码_2018_02_民政部.csv', header=True, sep=',')

4. Read a local CSV file (on the cluster):

Reference document 1

4. Read a local txt file

from pyspark.sql import SparkSession

from pyspark import SparkConf

spark_2 = SparkSession.builder.master("local").appName("read citycode").config(conf = SparkConf()).getOrCreate()

ctcode_df = spark_2.read.csv(r'E:\xxx\Proj\task1_20180509\process\行政区划代码_2018_02_民政部.csv', header=True, sep=',')

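5. Upload a local CSV to the cluster. With no destination given, the author's guess was that it lands in the root directory; normally it goes to the user's HDFS home directory: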

hadoop fs -put /home/hadoop/xxx/project_0509_khjl/xz.csv

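6. Save a PySpark DataFrame to the local file system

# to_csv is a pandas method: if df1 is still a Spark DataFrame, convert it first with df1 = df1.toPandas()

df1.to_csv('/home/hadoop/xxx/project_0509_khjl/mydt_pddf.csv', encoding='utf-8')

7. Save a file to the cluster

df.repartition(20).write.csv("cc_out.csv", sep='|') # the argument to repartition is the number of partitions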

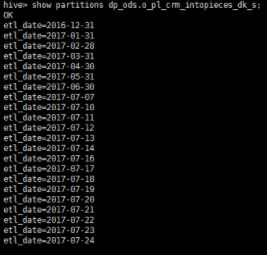

8. Select data from a partition

- sql_1 = "select %s from big_table.sheet1 WHERE partition_name='2018-05-10'" % (key_item) # read the rows in partition '2018-05-10' of table big_table.sheet1

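- Add

- Append rows (row-wise merge)

df.union(df1) # append the rows of df1 to df; union matches columns by position, so df and df1 need the same columns in the same order

- Join DataFrames

df.join(df1, on=[cond1, cond2])

wordVecs.join(sourceDF, wordVecs.name == sourceDF.name).drop(sourceDF.name)

- Several conditions can be passed as a list: [df1.a == df2.a, df1.b == df2.b]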

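- Delete

- Remove rows that are duplicated in every column: use .dropDuplicates()

- Remove rows that repeat a value in one column: use .dropDuplicates(subset=[column_name])

- Drop one or more columns

df.drop('age') # drop one column

df.drop('a','b','c') # drop several columns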

- Modify

- Rename columns

df.selectExpr("col1 as new_col1", "col2 as new_col2")

df.select(col("col1").alias("new_col1"), col("col2").alias("new_col2")) # col comes from pyspark.sql.functions

- Subtract

- Sort

- Sorting:

df.orderBy()

- Sort by a column

pd.DataFrame(rdd3_ls.sort('time').take(5), columns=rdd3_ls.columns)

pd.DataFrame(rdd3_ls.sort(asc('time')).take(5), columns=rdd3_ls.columns)

- Grouped statistics

- Grouping

df.groupBy("key").count().orderBy("key").show()

- Unique values and deduplication: distinct(), dropDuplicates()

df.distinct()

df.dropDuplicates(['staff_id']).orderBy('staff_id').limit(10).show()

- The agg() method:

df.agg({"role_id":"max"}).collect()

>> [Row(max(role_id)=372)]

- Functions

- Convert a function to a UDF:

```

from pyspark.sql.functions import udf

from pyspark.sql.types import *

# split_sentence is assumed to be a user-defined function that returns a list of strings

ss = udf(split_sentence, ArrayType(StringType()))

documentDF.select(ss("text").alias("text_array")).show()

```

9. StructType in PySpark:

```

StructType([StructField('first', IntegerType()), StructField('Second', StringType()), StructField('Third', FloatType())])

# a UDF declared with this return type returns a matching tuple, e.g. return (1, '2', 3.0)

```

10. Change a column's type with astype():

`df.select(df.xzqhdm.astype(IntegerType()).alias('xzqhdm')).show()`

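- Detecting and handling null values

- Testing for null and non-null values in a PySpark DataFrame

test1.business_code.isNotNull()

intopieces_merge_PBC['id_no'].isNotNull()

- Handling time

- Preferred form: `select from_unixtime(1519818348, 'yyyy-MM-dd HH:mm:ss')` (HH is the 24-hour clock; hh would give the 12-hour clock)

- Common forms:

- `from_unixtime(timestamp, 'yyyy-MM-dd HH:mm:ss')`

- `from_unixtime(timestamp, 'yyyy-MM-dd')`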
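The pattern letters are easy to get wrong, so here is a plain-Python sketch of the same conversion that runs without Spark. It rests on assumptions: format_unixtime is a made-up helper, strftime codes stand in for the Java-style pattern letters (%H for HH, %I for hh), and UTC is used for reproducibility, whereas from_unixtime formats in the session or system time zone.

```python
from datetime import datetime, timezone

def format_unixtime(ts, fmt="%Y-%m-%d %H:%M:%S"):
    """Hypothetical helper: format a Unix timestamp (in seconds) in UTC."""
    return datetime.fromtimestamp(ts, tz=timezone.utc).strftime(fmt)

ts = 1519818348
full = format_unixtime(ts)                   # counterpart of 'yyyy-MM-dd HH:mm:ss'
date_only = format_unixtime(ts, "%Y-%m-%d")  # counterpart of 'yyyy-MM-dd'

# Six hours later the 24-hour (%H) and 12-hour (%I) fields no longer agree.
h24 = format_unixtime(ts + 6 * 3600, "%H:%M:%S")
h12 = format_unixtime(ts + 6 * 3600, "%I:%M:%S")
print(full, date_only, h24, h12)
```

The afternoon timestamp is where a 12-hour pattern silently loses information unless an AM/PM marker is also printed.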

- Column names and types

df.printSchema()

- Show the contents

df.show()

Spark Cheatsheet

References:

(1) Common functions http://www.cnblogs.com/redhat0019/p/8665491.html

(2) Cheat sheet https://s3.amazonaws.com/assets.datacamp.com/blog_assets/PySpark_Cheat_Sheet_Python.pdf

I. Initializing Spark

1. Get a SparkSession

spark = SparkSession.builder.config(conf = SparkConf()).getOrCreate()

2. Get a SparkContext

- Get a SparkSession: se = SparkSession.builder.config(conf = SparkConf()).getOrCreate()

- Get the SparkContext: sc = se.sparkContext

- Get the SQL entry point (a SparkSession takes over the role of SQLContext): sq = SparkSession.builder.getOrCreate()

- Get a DataFrame: df = sq.createDataFrame(userRows)

from pyspark import SparkContext

from pyspark import SparkConf

import pyspark

# Spark initialization settings

my_app = "my_spark1"

master = "local"

conf = SparkConf().setAppName(my_app).setMaster(master)

# sc = SparkContext.getOrCreate(conf)

sc = SparkContext(conf=conf)

# Stop Spark (otherwise: Cannot run multiple SparkContexts at once;)

# sc.stop() ??? it still would not stop

II. Loading data

# Create from in-memory data

rdd = sc.parallelize([('a',1),('a',2),('b',2)])

rdd2 = sc.parallelize(range(100))

rdd4 = sc.parallelize([("a",["x","y","z"]),

("b",["p","r"])])

# Read from external files

textFile = sc.textFile('mytext.txt')

textFile2 = sc.wholeTextFiles('my_directory/')

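III. Getting information about an RDD

## Basic RDD information

rdd.getNumPartitions() # number of partitions

rdd.count() # number of items in the RDD

rdd.countByKey() # number of items per key

rdd.countByValue() # number of occurrences of each distinct item

rdd.collectAsMap() # return the key-value pairs as a dict

rdd2.sum() # sum of the RDD's elements

sc.parallelize([0]).isEmpty() # check whether the RDD is empty

print(rdd) # print the object itself

type(rdd) # get the object's type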

## Summary statistics

rdd2.max()

rdd2.min()

rdd2.mean()

rdd2.stdev()

rdd2.variance()

rdd2.histogram(3) # counts the values in 3 evenly spaced buckets; returns (bucket boundaries, counts)

ParallelCollectionRDD[31] at parallelize at PythonRDD.scala:175

([0, 33, 66, 99], [33, 33, 34])
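The histogram output above is easier to read next to a plain-Python sketch of the bucketing. This is not Spark's implementation, only an approximation that assumes an integer bucket count and max > min: boundaries are spaced evenly between the minimum and maximum, every bucket is half-open except the last, which also includes the maximum.

```python
def histogram(values, buckets):
    """Pure-Python sketch of rdd.histogram(n) for n evenly spaced buckets."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / buckets
    # n buckets need n + 1 boundaries; the last boundary is the maximum itself
    bounds = [lo + i * width for i in range(buckets)] + [hi]
    counts = [0] * buckets
    for v in values:
        # buckets are [left, right), except the last one, which also holds hi
        idx = min(int((v - lo) / width), buckets - 1)
        counts[idx] += 1
    return bounds, counts

bounds, counts = histogram(list(range(100)), 3)
print(bounds, counts)
```

For range(100) this gives boundaries 0, 33, 66, 99 and counts 33, 33, 34; the extra item in the last bucket is the value 99.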

IV. Applying functions

# Apply a function to every element

rdd.map(lambda x: x+(x[1],x[0])).collect()

# Apply a function to every element, then flatten the results

rdd5 = rdd.flatMap(lambda x: x+(x[1],x[0]))

rdd5.collect()

# Apply a function to the value of each key-value pair and flatten the result, keeping the key

rdd4.flatMapValues(lambda x: x).collect()

[('a', 'x'), ('a', 'y'), ('a', 'z'), ('b', 'p'), ('b', 'r')]
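The difference between the three calls in this section can be reproduced on ordinary lists. The sketch below is plain Python rather than Spark code; it reuses the same sample data as rdd and rdd4 and mimics what map, flatMap and flatMapValues return after collect().

```python
from itertools import chain

data = [('a', 1), ('a', 2), ('b', 2)]        # same contents as rdd
f = lambda x: x + (x[1], x[0])

# like rdd.map(f): one output item per input item
mapped = [f(x) for x in data]

# like rdd.flatMap(f): each result is unpacked into the output
flat = list(chain.from_iterable(f(x) for x in data))

pairs = [("a", ["x", "y", "z"]), ("b", ["p", "r"])]   # same contents as rdd4
# like rdd4.flatMapValues(lambda x: x): one (key, value) pair per element of the value
flat_values = [(k, v) for k, vs in pairs for v in vs]

print(mapped)
print(flat)
print(flat_values)
```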

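V. Selecting data

# Retrieve

rdd.collect() # return all RDD elements as a list

rdd.take(2) # take the first 2 elements

rdd.first() # take the first element

rdd.top(2) # take the 2 largest elements

rdd.takeOrdered(1) # sort in ascending order and take the first 1 element

# Sample

rdd2.sample(False, fraction=0.15, seed=81).collect() # sample without replacement, fraction 0.15, random seed 81

# Filter

rdd.filter(lambda x: "a" in x).collect() # keep the elements that contain "a"

rdd5.distinct().collect() # remove duplicates

rdd.keys().collect() # return the keys of the key-value pairs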

['a', 'a', 'b']

VI. Iterating

# Apply a function with foreach

def g(x):
    print(x)

rdd.foreach(g)

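VII. Reshaping data

# Reduce

rdd.reduceByKey(lambda x,y: x+y).collect() # merge the values of each key

rdd.reduce(lambda x,y: x+y) # merge all elements; the elements here are tuples, so + concatenates them

# Group

rdd2.groupBy(lambda x: x%2).mapValues(list).collect()

rdd.groupByKey().mapValues(list).collect() # group by key

rdd2_g = rdd2.groupBy(lambda x: x<50) # split into 2 groups according to x < 50

# to access one group, collect into a dict first: dict(rdd2_g.mapValues(list).collect())[True]

# Aggregate https://blog.csdn.net/qingyang0320/article/details/51603243, https://blog.csdn.net/u011011025/article/details/76206335

seqop = (lambda x,y: (x[0]+y,x[1]+1))

combop = (lambda x,y: (x[0]+y[0],x[1]+y[1]))

rdd2.aggregate((0,0),seqOp=seqop,combOp=combop) # hard to grasp at first: seqOp folds each element into a per-partition (sum, count), combOp merges those pairs

rdd2.fold(0, lambda x,y: x+y) # fold needs a zero value and a function

rdd.foldByKey(0, lambda x,y: x+y).collect() # foldByKey needs a key-value RDD, so rdd is used instead of rdd2

rdd2.keyBy(lambda x: x%2).collect() # keyBy needs a function that computes the key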
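Since aggregate is the hardest call in this section, a plain-Python sketch of its two phases may help. This is not Spark code: the aggregate function below, and the choice of four partitions of 25 items, are assumptions made for illustration. The sequence function runs inside each partition and the combine function merges the per-partition results.

```python
from functools import reduce

def aggregate(partitions, zero, seq_op, comb_op):
    """Sketch of rdd.aggregate over a list of partitions (plain lists)."""
    # phase 1: fold every partition's elements into an accumulator starting at zero
    partials = [reduce(seq_op, part, zero) for part in partitions]
    # phase 2: merge the per-partition accumulators
    return reduce(comb_op, partials, zero)

seqop = lambda acc, x: (acc[0] + x, acc[1] + 1)      # acc is (sum, count), x is one element
combop = lambda a, b: (a[0] + b[0], a[1] + b[1])     # both arguments are (sum, count) pairs

data = list(range(100))                               # same contents as rdd2
partitions = [data[i:i + 25] for i in range(0, 100, 25)]

total, count = aggregate(partitions, (0, 0), seqop, combop)
mean = total / count

# fold is the special case where one function plays both roles
add = lambda a, b: a + b
fold_sum = aggregate(partitions, 0, add, add)
print(total, count, mean, fold_sum)
```

The accumulator type (a pair) differs from the element type (an int), which is why aggregate takes two functions where fold and reduce take one.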

IX. Arithmetic operations

X. Sorting

rdd.sortBy(lambda x:x[1]).collect() # sort by a function

# rdd.sortByKey().collect() # sort key-value pairs by key

[('a', 1), ('a', 2), ('b', 2)]

XI. Repartitioning

rdd.repartition(4) # redistribute into 4 partitions

rdd.coalesce(1) # reduce the number of partitions to 1

CoalescedRDD[297] at coalesce at NativeMethodAccessorImpl.java:0

XII. Saving files

# Save as text

rdd.saveAsTextFile('rdd.txt') # or e.g. "hdfs://192.168.88.128:9000/data/result.txt"; writes the result out as files

# Save as a Hadoop file; see also http://aducode.github.io/posts/2016-08-02/write2hdfsinpyspark.html

rdd.saveAsHadoopFile("hdfs://namenodehost/parent/child", 'org.apache.hadoop.mapred.TextOutputFormat')

# Stop the SparkContext

sc.stop()

help(rdd2.sample)

Help on method sample in module pyspark.rdd:

sample(withReplacement, fraction, seed=None) method of pyspark.rdd.PipelinedRDD instance

Return a sampled subset of this RDD.

:param withReplacement: can elements be sampled multiple times (replaced when sampled out)

:param fraction: expected size of the sample as a fraction of this RDD's size

without replacement: probability that each element is chosen; fraction must be [0, 1]

with replacement: expected number of times each element is chosen; fraction must be >= 0

:param seed: seed for the random number generator

.. note:: This is not guaranteed to provide exactly the fraction specified of the total

count of the given :class:`DataFrame`.

>>> rdd = sc.parallelize(range(100), 4)

>>> 6 <= rdd.sample(False, 0.1, 81).count() <= 14

True

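Getting started with PySpark

Reference links:

(1) Introduction https://blog.csdn.net/cymy001/article/details/78483723

1. Read a local file and initialize it as a DataFrame

The core PySpark module is SparkContext (sc for short); the main data carrier is the RDD.

Creating an RDD:

Method 1: create directly from memory

rdd = sc.parallelize([1,2,3,4,5])

This creates an RDD from a list. sc.parallelize can turn a Python list, NumPy array, or Pandas Series into a Spark RDD; for a Pandas DataFrame, pass its rows (for example df.values.tolist()), because iterating over a DataFrame yields only its column labels.

Method 2: create from external files (text, tables??, Hive?, Spark SQL?)

file_path # path to the text file

sc.textFile(file_path) # read text; each line is one item

Note: when a directory is read with sc.wholeTextFiles, Spark treats each file in it as one item; with sc.textFile, every line of every file is an item. In HDFS the default block size is 128 MB, and it can be configured.

textFile() supports directories, compressed files, and wildcards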

from pyspark import SparkContext

from pyspark import SparkConf

import pyspark

# from pyspark.sql import

logFile = r"D:\TEST\jupyter\spark\test_spark.txt"

conf = SparkConf().setAppName("miniProject").setMaster("local[*]")

sc = SparkContext.getOrCreate(conf)

# rdd = sc.parallelize([1,2,3,4,5])

rdd = sc.textFile(logFile)

print(rdd.collect())

rdd.map(lambda s: len(s)).reduce(lambda a,b:a+b)

937909

rdd.take(3)

['Spark’s primary abstraction is a distributed collection of items called a Dataset. Datasets can be created from Hadoop InputFormats (such as HDFS files) or by transforming other Datasets. Due to Python’s dynamic nature, we don’t need the Dataset to be strongly-typed in Python. As a result, all Datasets in Python are Dataset[Row], and we call it DataFrame to be consistent with the data frame concept in Pandas and R. Let’s make a new DataFrame from the text of the README file in the Spark source directory:',

'[英文小说有声读物.-.教父].Godfather.doc',

'']

Example 1: count and print

rdd.count() # number of lines (items) in the RDD

rdd.first() # first line of the RDD

# sample

x = sc.parallelize(range(7))

ylist = [x.sample(withReplacement=False, fraction=0.5) for i in range(5)] # call 'sample' 5 times

print('x = ' + str(x.collect()))

for cnt,y in zip(range(len(ylist)), ylist):
    print('sample:' + str(cnt) + ' y = ' + str(y.collect()))

sample:0 y = [2, 3, 5, 6]

sample:1 y = [3, 4, 6]

sample:2 y = [0, 2, 3, 4, 5, 6]

sample:3 y = [0, 2, 6]

sample:4 y = [0, 1, 3, 4]

I do not fully understand this part. ??

x.sample(withReplacement=False, fraction=0.5, seed=123).collect()

x.sample(withReplacement=False, fraction=.6, seed=123).collect()
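The varying sample sizes above follow from how sampling without replacement is described in the help text: fraction is the probability that each element is chosen, not an exact share. A plain-Python sketch of that idea follows. It is not Spark's sampler, bernoulli_sample is a made-up name, and Python's random generator will not reproduce Spark's values even for the same seed.

```python
import random

def bernoulli_sample(values, fraction, seed=None):
    """Sketch: keep each element independently with probability `fraction`."""
    rng = random.Random(seed)
    return [v for v in values if rng.random() < fraction]

x = list(range(7))

# five unseeded calls: every call draws afresh, so the sizes usually differ
runs = [bernoulli_sample(x, 0.5) for _ in range(5)]
for cnt, y in enumerate(runs):
    print('sample:' + str(cnt) + ' y = ' + str(y))

# a fixed seed makes the draw repeatable
a = bernoulli_sample(x, 0.5, seed=123)
b = bernoulli_sample(x, 0.5, seed=123)
print(a == b)
```

With 7 elements and fraction 0.5 the expected size is 3.5, but any size from 0 to 7 is possible, which matches the spread seen in the output above.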

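**Two ways to operate on an RDD:**

- Apply the same operation to every item, returning one result per item: rdd.map()

- Apply the same operation to every item, then flatten the per-item lists into one new list: rdd.flatMap()

wordrdd = rdd.flatMap(lambda sentence: sentence.split(" "))

# wordrdd2 = rdd.map(lambda sentence: sentence.split(" ")) # the map version, for comparison with flatMap: each item would be a list of words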

print(wordrdd.collect())

wordrdd.count()

['Spark’s', 'primary', 'abstraction', 'is', 'a', 'distributed', 'collection', 'of', 'items', 'called', 'a', 'Dataset.', 'Datasets', 'can', 'be', 'created', 'from', 'Hadoop', 'InputFormats', '(such', 'as', 'HDFS', 'files)', 'the', 'README', 'file', 'in', 'the', 'Spark', 'source', 'directory:', '[英文小说有声读物.-.教父].Godfather.doc', '', 'For', 'Anthony', 'Cleri', '', 'Book', 'One', '', 'of', 'Carlo', 'Rizzi,', 'she', 'said', 'the', 'necessary', 'prayers', 'for', 'the', 'soul', 'of', 'Michael', 'Corleone.', '', '', '', '']

174901

wordrdd.cache()

PythonRDD[83] at collect at :2

wordrdd.count()

174901

rdd.first()

'Spark’s primary abstraction is a distributed collection of items called a Dataset. Datasets can be created from Hadoop InputFormats (such as HDFS files) or by transforming other Datasets. Due to Python’s dynamic nature, we don’t need the Dataset to be strongly-typed in Python. As a result, all Datasets in Python are Dataset[Row], and we call it DataFrame to be consistent with the data frame concept in Pandas and R. Let’s make a new DataFrame from the text of the README file in the Spark source directory:'

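Example 2: count and manipulate data through SQL.

Use filter() to pick out the items that satisfy a condition; going through SparkSession makes this more convenient.

from pyspark.sql import SparkSession

SparkSession is essentially a combination of SQLContext and HiveContext: the APIs available on SQLContext and HiveContext can also be used on SparkSession.

SparkSession wraps a SparkContext internally, so the computation is actually carried out by the SparkContext.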

from pyspark.sql import SparkSession

logFile = "test_spark.txt" # Should be some file on your system

a = SparkSession.builder.appName("SimpleApp")

spark = a.getOrCreate()

logData = spark.read.text(logFile).cache()

numAs = logData.filter(logData.value.contains('spark')).count()

numBs = logData.filter(logData.value.contains('great')).count()

print("Lines with 'spark': %i, lines with 'great': %i" % (numAs, numBs))

spark.stop()

2. Supported data types and how to think about them:

Java key-value pairs, RDD

pyspark SequenceFile

Python arrays.

When an array is written out, it has to be converted to a Java type.

linelenghts = rdd.map(lambda s: len(s)*10)

totallenght = linelenghts.reduce(lambda a, b: a+b)

totallenght

9379090

If linelenghts will be used again later, call linelenghts.persist() so that it is kept in memory after it is first computed.

linelenghts.persist()

PythonRDD[27] at RDD at PythonRDD.scala:48

linelenghts.reduce(lambda a,b: a+b)

60.108565196536254

3. Understanding the cluster

from pyspark import SparkContext

from pyspark import SparkConf

import pyspark

conf = SparkConf().setAppName("miniProject").setMaster("local[*]")

# sc = sc.getOrCreate(conf)

sc = SparkContext(conf=conf)

counter = 0

rdd = sc.parallelize(range(10))

# Wrong: Don't do this!!

def increment_counter(x):
    global counter
    counter += x

rdd.foreach(increment_counter)

print("Counter value: ", counter)
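The counter example above goes wrong because each executor works on its own serialized copy of the function's closure, so the driver's counter is never updated and the print typically shows 0. Spark's accumulators (sc.accumulator) are the supported way to sum values across tasks. The following is a plain-Python sketch of that copy semantics, not Spark code: run_on_executor and the dict-based counter are made up for illustration, and pickle stands in for Spark's closure serialization.

```python
import pickle

counter = {"value": 0}            # driver-side state (stand-in for the global counter)

def run_on_executor(state_bytes, partition):
    """Pretend executor: it only ever sees a deserialized copy of the state."""
    state = pickle.loads(state_bytes)
    for x in partition:
        state["value"] += x       # updates the copy, not the driver's dict
    return state["value"]         # the partial result an accumulator would report back

shipped = pickle.dumps(counter)
partitions = [[0, 1, 2, 3, 4], [5, 6, 7, 8, 9]]
partials = [run_on_executor(shipped, part) for part in partitions]

driver_value = counter["value"]   # unchanged, because only copies were modified
merged = sum(partials)            # merging partial results, as an accumulator does
print("Counter value: ", driver_value, "merged partial sums: ", merged)
```

Returning the partial sums and merging them on the driver is the same shape as rdd.sum() or rdd.reduce(), which are usually simpler than a shared counter.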

4. Key-value pairs

pairs = rdd.map(lambda s: (s, 1))

counts = pairs.reduceByKey(lambda a, b: a + b)
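The two lines above are the classic word-count pattern: turn every item into an (item, 1) pair, then add up the values that share a key. A plain-Python sketch of what reduceByKey computes, with a made-up reduce_by_key helper and a made-up input sentence:

```python
def reduce_by_key(pairs, func):
    """Sketch of reduceByKey: combine all values that share a key with func."""
    result = {}
    for key, value in pairs:
        # first value for a key is stored as is; later ones are merged with func
        result[key] = func(result[key], value) if key in result else value
    return sorted(result.items())

words = "to be or not to be".split()          # hypothetical input
pairs = [(w, 1) for w in words]                # like rdd.map(lambda s: (s, 1))
counts = reduce_by_key(pairs, lambda a, b: a + b)
print(counts)
```

In Spark the merge function should be associative and commutative, because values are combined within partitions first and across partitions afterwards, in no fixed order.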

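Other topics

I. Other small exercises

- Extracting a substring in PySpark, combined with a conditional filter

pyspark.sql.functions.substring(str, pos, len)

rdd15_unique = rdd15.distinct()

rdd15_unique.where(substring(rdd15_unique.id_no, 1, 14)=='**************').count()

- Select the rows whose value in a column belongs to a given list

df.where(df['column_name'].isin([list]))

testf_ok2 = testf.where(testf['presona2'].isin(['A网点', 'B网点', 'c网点']))

testf_ok2.count(), testf.count()

- Regular expressions and string handling in PySpark (reference)

II. Other things to note:

- Notes on the ArrayType (array) type in PySpark

All elements must have the same type.

- The MapType() type in PySpark https://blog.csdn.net/zdy0_2004/article/details/49592589

Make sure the function's return type is dict and that all keys have the same type, for example:

MapType(StringType(), IntegerType())

- Fields in a PySpark select

- Using partitions makes it quick to extract data from a table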