Hadoop(七)之Yarm 集群

目录

1、Yarn产生的原因

1.1、MapreduceV1中,jobtracker存在瓶颈:

1.2、将jobtracker的职责划分成两个部分:

2、Yarn的架构

2.1、ResourceManager ----> master node,可配多个RM实现HA机制,

2.2、NodeManager ----> slave nodes,每台机器上一个

2.3、ApplicationMaster ----> 特定运算框架自己实现,接口为统一的AppMaster

3、Yarn运行application的流程

4、MapReduce程序向yarn提交执行的流程分析

5、application生命周期

6、资源请求

7、任务调度--capacity scheduler / fair scheduler

7.1、Scheduler概述

7.2、Capacity Scheduler配置

7.3、Fair Scheduler配置

1、Yarn产生的原因

1.1、MapreduceV1中,jobtracker存在瓶颈:

- 集群上运行的所有mr程序都有jobtracker来调度

- SPOF单点故障

- 职责划分不清晰

1.2、将jobtracker的职责划分成两个部分:

- 资源调度与管理:由统一的资源调度平台(集群)来实现(yarn)

- 任务监控与管理:

A、每一个application运行时拥有一个自己的任务监控管理进程AppMaster

B、AppMaster的生命周期:application提交给yarn集群之后,yarn负责启动该application的AppMaster,随后任务的执行监控调度等工作都交由AppMaster,待这个application运行完毕后,AppMaster向yarn注销自己。

C、AppMaster的具体实现由application所使用的分布式运算框架自己负责,比如Mapreduce类型的application有MrAppMaster实现类。Spark DAG应用则有SparkOnYarn的SparkContext实现。

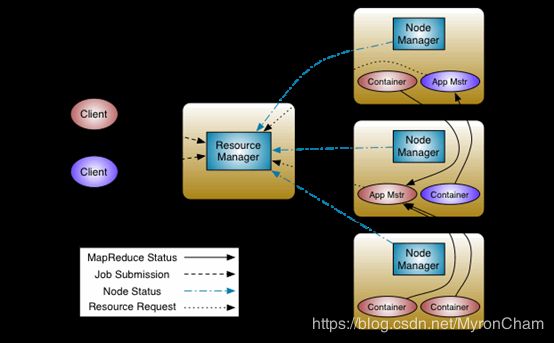

2、Yarn的架构

2.1、ResourceManager ----> master node,可配多个RM实现HA机制,

由两个核心组件构成:

Scheduler 和ApplicationsManager;

- Scheduler:负责资源调度,调度策略可插拔(内置实现 CapacityScheduler / FairScheduler ),不提供对application运行的监控;

- ApplicationsManager:负责响应任务提交请求,协商applicationMaster运行的container,重启失败的applicationMaster

2.2、NodeManager ----> slave nodes,每台机器上一个

职责:加载containers,监控各container的资源使用情况,并向Resourcemanager/Scheduler汇报

2.3、ApplicationMaster ----> 特定运算框架自己实现,接口为统一的AppMaster

职责:向Scheduler请求适当的资源,跟踪任务的执行,监控任务执行进度、状态等

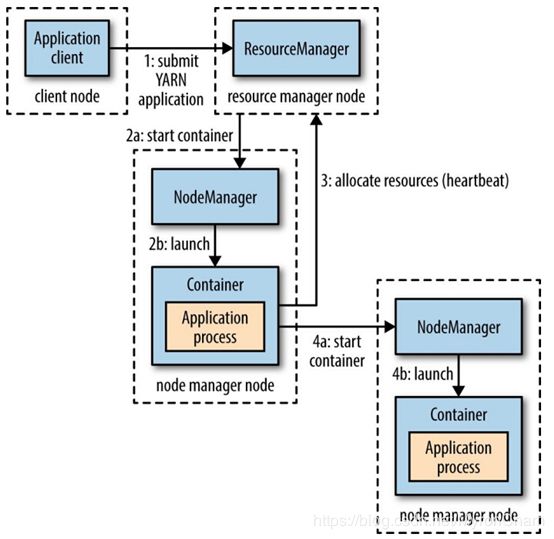

3、Yarn运行application的流程

详细参见《yarn运行application的流程图》

*Job提交流程详解

流程简述

源码跟踪:注重客户端与resourcemanager之间的交互

4、MapReduce程序向yarn提交执行的流程分析

- Job.waitForCompletion()

- 创建 yarnrunner

- 向resourcemanager提交请求,获取application id

- Yarnrunner提交job

5、application生命周期

Yarn支持短周期和长周期应用

- MR:短周期应用,用户的每一个job为一个application

- Spark:短周期应用,但比上一种效率要高,它是将一个工作流(DAG)转化为一个application,这样在job之间可以重用container及中间结果数据可以不用落地。

- Storm:long-running应用,应用为多用户共享,降低了资源调度的前期消耗,从而可以为用户提供低时延响应。

6、资源请求

资源请求由Container对象描述,支持数据本地性约束,如处理hdfs上的数据,则container优先分配在block所在的datanode,如该datanode资源不满足要求,则优选同机架,还不能满足则随机分配。

Application可以在其生命周期的任何阶段请求资源,可以在一开始就请求所需的所有资源,也可以在运行过程中动态请求资源;如spark,采用第一种策略;而MR则分两个阶段,map task的资源是在一开始一次性请求,而reduce task的资源则是在运行过程中动态请求;并且,任务失败后,还可以重新请求资源进行重试

7、任务调度--capacity scheduler / fair scheduler

由于集群资源有限,当无法满足众多application的资源请求时,yarn需要适当的策略对application的资源请求进行调度;

7.1、Scheduler概述

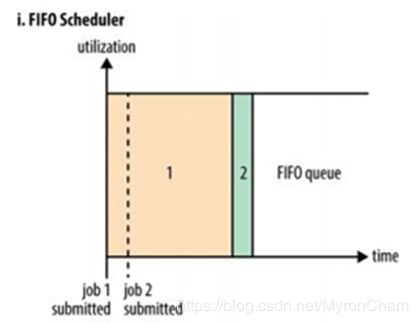

Yarn中实现的调度策略有三种:FIFO/Capacity/Fair Schedulers

(1)FIFO Scheduler:

将所有application按提交的顺序排队,先进先出

优点---->简单易懂且不用任何配置

缺点---->不适合于shared clusters;大的应用会将集群资源占满从而导致大量应用等待

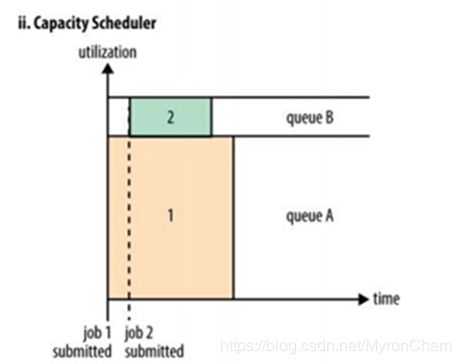

(2)Capacity Scheduler

将application划分为多条任务队列,每条队列拥有相应的资源

在队列的内部,资源分配遵循FIFO策略

队列资源支持弹性调整:一个队列的空闲资源可以分配给“饥饿”队列(注意:一旦之前的空闲队列需求增长,因为不支持“先占”,不能强制kill资源container,则需要等待其他队列释放资源;为防止这种状况的出现,可以配置队列最大资源进行限制)

任务队列支持继承结构

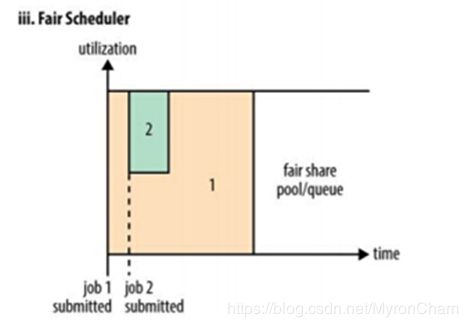

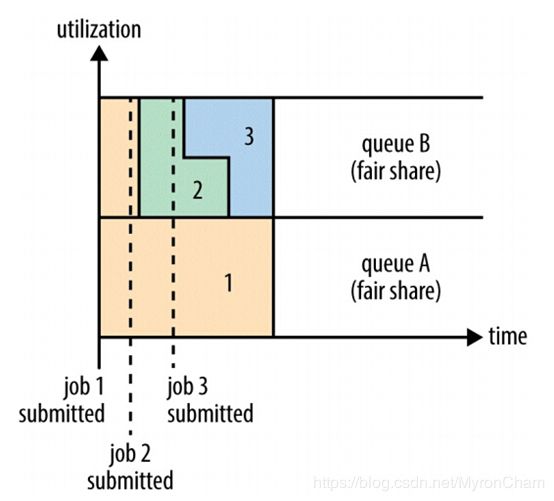

(3)Fair Scheduler

不需要为特定small application保留资源,而是在需要执行时进行动态公平分配;

动态资源分配有一个延后,因为需要等待large job释放一部分资源;

Small job资源使用完毕后,large job可以再次获得全部资源;

Fair Scheduler也支持在application queue之间进行调度。

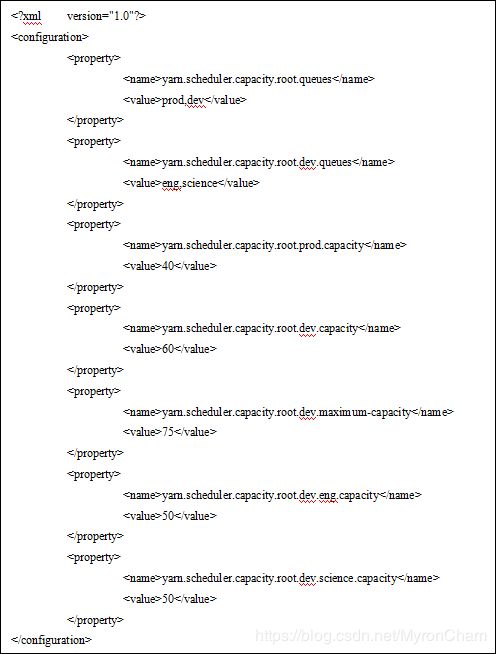

7.2、Capacity Scheduler配置

yarn的Scheduler机制,由yarn-site.xml中的配置参数指定:

默认值为:

修改为:

CapacityScheduler的配置文件则位于:etc/hadoop/capacity-scheduler.xml

capacity-scheduler .xml示例

如果修改了capacity-scheduler.xml(比如添加了新的queue),只需要执行:

yarn rmadmin -refreshQueues即可生效

application中指定所属的queue使用配置参数:

mapreduce.job.queuename

在示例配置中,此处queuename即为prod或dev或science

如果给定的queue name不存在,则在submission阶段报错

如果没有指定queue name,则会被列入default queue

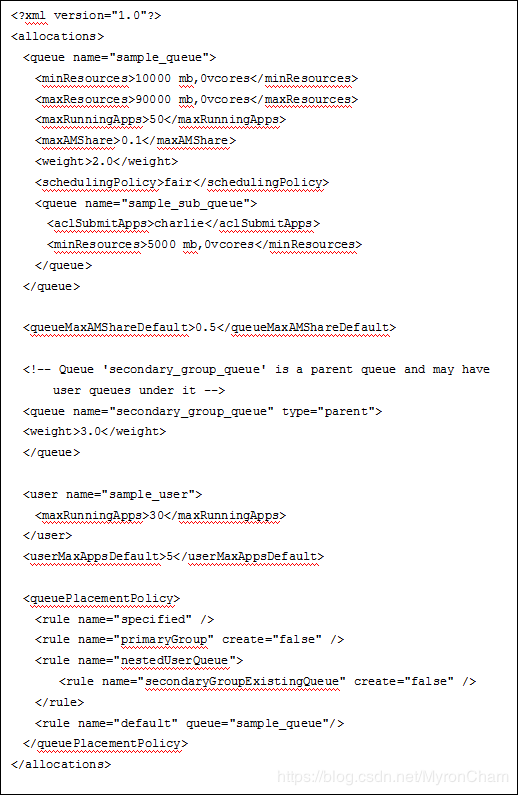

7.3、Fair Scheduler配置

Fair Scheduler工作机制:

启用Fair Scheduler,在yarn-site.xml中

yarn.resourcemanager.scheduler.class org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler 配置参数--参考官网:

http://hadoop.apache.org/docs/current/hadoop-yarn/hadoop-yarn-site/FairScheduler.html

一部分位于yarn-site.xml

| yarn.scheduler.fair.allocation.file |

Path to allocation file. An allocation file is an XML manifest describing queues and their properties, in addition to certain policy defaults. This file must be in the XML format described in the next section. If a relative path is given, the file is searched for on the classpath (which typically includes the Hadoop conf directory). Defaults to fair-scheduler.xml. |

| yarn.scheduler.fair.user-as-default-queue |

Whether to use the username associated with the allocation as the default queue name, in the event that a queue name is not specified. If this is set to “false” or unset, all jobs have a shared default queue, named “default”. Defaults to true. If a queue placement policy is given in the allocations file, this property is ignored. |

| yarn.scheduler.fair.preemption |

Whether to use preemption. Defaults to false. |

| yarn.scheduler.fair.preemption.cluster-utilization-threshold |

The utilization threshold after which preemption kicks in. The utilization is computed as the maximum ratio of usage to capacity among all resources. Defaults to 0.8f. |

| yarn.scheduler.fair.sizebasedweight |

Whether to assign shares to individual apps based on their size, rather than providing an equal share to all apps regardless of size. When set to true, apps are weighted by the natural logarithm of one plus the app’s total requested memory, divided by the natural logarithm of 2. Defaults to false. |

| yarn.scheduler.fair.assignmultiple |

Whether to allow multiple container assignments in one heartbeat. Defaults to false. |

| yarn.scheduler.fair.max.assign |

If assignmultiple is true, the maximum amount of containers that can be assigned in one heartbeat. Defaults to -1, which sets no limit. |

| yarn.scheduler.fair.locality.threshold.node |

For applications that request containers on particular nodes, the number of scheduling opportunities since the last container assignment to wait before accepting a placement on another node. Expressed as a float between 0 and 1, which, as a fraction of the cluster size, is the number of scheduling opportunities to pass up. The default value of -1.0 means don’t pass up any scheduling opportunities. |

| yarn.scheduler.fair.locality.threshold.rack |

For applications that request containers on particular racks, the number of scheduling opportunities since the last container assignment to wait before accepting a placement on another rack. Expressed as a float between 0 and 1, which, as a fraction of the cluster size, is the number of scheduling opportunities to pass up. The default value of -1.0 means don’t pass up any scheduling opportunities. |

| yarn.scheduler.fair.allow-undeclared-pools |

If this is true, new queues can be created at application submission time, whether because they are specified as the application’s queue by the submitter or because they are placed there by the user-as-default-queue property. If this is false, any time an app would be placed in a queue that is not specified in the allocations file, it is placed in the “default” queue instead. Defaults to true. If a queue placement policy is given in the allocations file, this property is ignored. |

另外还可以制定一个allocation file来描述application queue