Kaggle Titanic (Titanic: Machine Learning from Disaster) Data Analysis (Part 1)

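This is my second introductory Kaggle project: predicting whether passengers survived the Titanic disaster. As before, I am learning from one of the most upvoted kernels. This time the kernel is Introduction to Ensembling/Stacking in Python.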

1. Preparation

Import the libraries:

# Load in our libraries

import pandas as pd

import numpy as np

import re

import sklearn

import xgboost as xgb

import seaborn as sns

import matplotlib.pyplot as plt

%matplotlib inline

import plotly.offline as py

py.init_notebook_mode(connected=True)

import plotly.graph_objs as go

import plotly.tools as tls

import warnings

warnings.filterwarnings('ignore')

#Going to use these 5 base models for the stacking

from sklearn.ensemble import (RandomForestClassifier, AdaBoostClassifier,

GradientBoostingClassifier, ExtraTreesClassifier)

from sklearn.svm import SVC

from sklearn.model_selection import KFold

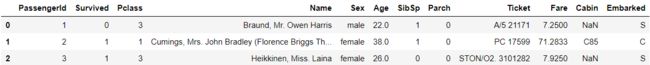

Read the datasets and display the first three rows:

#Load in the train and test datasets

train = pd.read_csv('data/train.csv')

test = pd.read_csv('data/test.csv')

#Store our passenger ID for easy access

PassengerId = test['PassengerId']

train.head(3)

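Meaning of the variables in the dataset:

(1) Survived: whether the passenger survived; 1 means yes.

(2) Pclass: ticket class (1 = 1st, 2 = 2nd, 3 = 3rd), a proxy for socio-economic status.

(3) SibSp: number of siblings (including stepsiblings) and spouses aboard.

(4) Parch: number of parents and children (including stepchildren) aboard.

(5) Ticket: ticket number.

(6) Fare: ticket fare.

(7) Cabin: cabin number.

(8) Embarked: port of embarkation, C = Cherbourg, Q = Queenstown, S = Southampton.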

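2. Feature Engineering

Put the training and test sets in one list so both can be processed together, then add two variables: the length of the name and whether the passenger had a cabin: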

full_data = [train, test]

#Some features of my own that I have added in

#Gives the length of the name

train['Name_length'] = train['Name'].apply(len)

test['Name_length'] = test['Name'].apply(len)

#Feature that tells whether a passenger had a cabin on the Titanic

train['Has_Cabin'] = train["Cabin"].apply(lambda x: 0 if type(x) == float else 1)

test['Has_Cabin'] = test["Cabin"].apply(lambda x: 0 if type(x) == float else 1)

Add two more variables: the total number of family members aboard and whether the passenger was travelling alone:

#Feature engineering steps taken from Sina

#Create new feature FamilySize as a combination of SibSp and Parch

for dataset in full_data:

    dataset['FamilySize'] = dataset['SibSp'] + dataset['Parch'] + 1

#Create new feature IsAlone from FamilySize

for dataset in full_data:

    dataset['IsAlone'] = 0

    dataset.loc[dataset['FamilySize'] == 1, 'IsAlone'] = 1

Fill missing ports of embarkation with S, and fill missing fares with the median fare:

#Remove all NULLS in the Embarked column

for dataset in full_data:

    dataset['Embarked'] = dataset['Embarked'].fillna('S')

#Remove all NULLS in the Fare column and create a new feature CategoricalFare

for dataset in full_data:

    dataset['Fare'] = dataset['Fare'].fillna(train['Fare'].median())

train['CategoricalFare'] = pd.qcut(train['Fare'], 4)

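Note: pd.qcut(values, k) cuts the values into k quantile-based bins that hold roughly the same number of observations, and returns the bin each value falls into.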
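To make the note above concrete, here is a minimal sketch of pd.qcut on a handful of made-up fares. The numbers are hypothetical and are not taken from the Titanic data; the point is only that each of the four bins ends up with the same number of rows.

```python
import pandas as pd

# Hypothetical fares, chosen only for illustration
fares = pd.Series([5.0, 7.0, 8.0, 10.0, 15.0, 20.0, 40.0, 100.0])

# Four quantile-based bins: the edges are the quartiles of the data
fare_bins = pd.qcut(fares, 4)

# Count how many fares landed in each bin, in bin order
bin_counts = fare_bins.value_counts().sort_index().tolist()
print(bin_counts)
```

The bin edges printed by `fare_bins.cat.categories` are what the kernel later hard-codes as cut points when it maps Fare to integers.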

Add a binned age variable:

# Create a New feature CategoricalAge

for dataset in full_data:

    age_avg = dataset['Age'].mean()

    age_std = dataset['Age'].std()

    age_null_count = dataset['Age'].isnull().sum()

    # randint expects integer bounds, so truncate mean - std and mean + std

    age_null_random_list = np.random.randint(int(age_avg - age_std), int(age_avg + age_std), size=age_null_count)

    # Use .loc instead of chained indexing so the assignment reaches the DataFrame itself

    dataset.loc[dataset['Age'].isnull(), 'Age'] = age_null_random_list

    dataset['Age'] = dataset['Age'].astype(int)

train['CategoricalAge'] = pd.cut(train['Age'], 5)

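Note: numpy.random.randint(low, high=None, size=None, dtype='l') returns random integers from low (inclusive) to high (exclusive). pd.cut chooses evenly spaced bins from the values themselves, so every bin has the same width, whereas pd.qcut chooses bins from the frequency of the values, so every bin contains roughly the same number of values.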
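The difference between the two binning functions is easiest to see on skewed data. The sketch below uses a small, made-up list of ages (an assumption for illustration only) and also checks the half-open range of randint.

```python
import numpy as np
import pandas as pd

# Hypothetical ages, deliberately skewed toward small values
ages = pd.Series([2, 4, 6, 8, 10, 40, 80])

equal_width = pd.cut(ages, 2)    # two bins of equal width over the range 2..80
equal_count = pd.qcut(ages, 2)   # two bins split at the median

width_counts = equal_width.value_counts().sort_index().tolist()
count_counts = equal_count.value_counts().sort_index().tolist()
print(width_counts, count_counts)

# randint draws from [low, high): 25 itself is never returned
draws = np.random.randint(20, 25, size=1000)
print(draws.min(), draws.max())
```

With pd.cut almost every age falls into the first bin, while pd.qcut keeps the two bins balanced.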

Add a new variable holding the title, that is, the word that precedes the "." in the name:

# Define function to extract titles from passenger names

def get_title(name):

    # Raw string, so that \. reaches the regex engine as an escaped dot

    title_search = re.search(r' ([A-Za-z]+)\.', name)

    # If the title exists, extract and return it.

    if title_search:

        return title_search.group(1)

    return ""

# Create a new feature Title, containing the titles of passenger names

for dataset in full_data:

    dataset['Title'] = dataset['Name'].apply(get_title)

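Note: re.search scans the string for the pattern and returns as soon as it finds the first match; if nothing in the string matches, it returns None. group(1) returns the part matched by the first pair of parentheses.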
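As a quick check of how the pattern behaves, the sketch below runs the same regular expression on a few name strings. The helper name extract_title is a stand-in introduced here for illustration, and the last input is made up to show the no-match case.

```python
import re

def extract_title(name):
    # A space, then a run of letters (captured), then a literal dot
    match = re.search(r' ([A-Za-z]+)\.', name)
    # re.search returns None when nothing matches
    return match.group(1) if match else ""

title_mr = extract_title("Braund, Mr. Owen Harris")
title_miss = extract_title("Heikkinen, Miss. Laina")
title_none = extract_title("No title here")  # made-up input with no dot
print(title_mr, title_miss, repr(title_none))
```

Because only the first match is returned, a name containing several dots still yields the title that appears first.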

Group the uncommon titles under a single label and normalize the spelling variants:

#Group all non-common titles into one single grouping "Rare"

for dataset in full_data:

    dataset['Title'] = dataset['Title'].replace(['Lady', 'Countess','Capt', 'Col','Don', 'Dr', 'Major', 'Rev', 'Sir', 'Jonkheer', 'Dona'], 'Rare')

    dataset['Title'] = dataset['Title'].replace('Mlle', 'Miss')

    dataset['Title'] = dataset['Title'].replace('Ms', 'Miss')

    dataset['Title'] = dataset['Title'].replace('Mme', 'Mrs')

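Based on the groupings above, encode several variables as integers. Series.map applies a mapping (here a dict, although a function also works) to every element and returns a new Series; Fare and Age are binned using the cut points found earlier: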

for dataset in full_data:

    #Mapping Sex

    dataset['Sex'] = dataset['Sex'].map( {'female': 0, 'male': 1} ).astype(int)

    #Mapping titles

    title_mapping = {"Mr": 1, "Miss": 2, "Mrs": 3, "Master": 4, "Rare": 5}

    dataset['Title'] = dataset['Title'].map(title_mapping)

    dataset['Title'] = dataset['Title'].fillna(0)

    #Mapping Embarked

    dataset['Embarked'] = dataset['Embarked'].map( {'S': 0, 'C': 1, 'Q': 2} ).astype(int)

    #Mapping Fare

    dataset.loc[ dataset['Fare'] <= 7.91, 'Fare'] = 0

    dataset.loc[(dataset['Fare'] > 7.91) & (dataset['Fare'] <= 14.454), 'Fare'] = 1

    dataset.loc[(dataset['Fare'] > 14.454) & (dataset['Fare'] <= 31), 'Fare'] = 2

    dataset.loc[ dataset['Fare'] > 31, 'Fare'] = 3

    dataset['Fare'] = dataset['Fare'].astype(int)

    #Mapping Age

    dataset.loc[ dataset['Age'] <= 16, 'Age'] = 0

    dataset.loc[(dataset['Age'] > 16) & (dataset['Age'] <= 32), 'Age'] = 1

    dataset.loc[(dataset['Age'] > 32) & (dataset['Age'] <= 48), 'Age'] = 2

    dataset.loc[(dataset['Age'] > 48) & (dataset['Age'] <= 64), 'Age'] = 3

    dataset.loc[ dataset['Age'] > 64, 'Age'] = 4
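The encoding step above can be tried in isolation. The sketch below shows what Series.map does with a value missing from the dict (it becomes NaN, which is why the kernel follows up with fillna(0)), and shows pd.cut with explicit edges as one possible compact alternative to the chain of .loc assignments. The sample values and the "Unknown" title are hypothetical; the pd.cut variant is my own substitution, not what the kernel does.

```python
import pandas as pd

# Hypothetical titles; "Unknown" is deliberately absent from the mapping
titles = pd.Series(["Mr", "Miss", "Mrs", "Master", "Rare", "Unknown"])
title_mapping = {"Mr": 1, "Miss": 2, "Mrs": 3, "Master": 4, "Rare": 5}

# Unmapped values become NaN, so fill them with 0 before casting to int
title_codes = titles.map(title_mapping).fillna(0).astype(int)
print(title_codes.tolist())

# Same fare cut points as in the kernel, expressed as right-closed bin edges
fares = pd.Series([7.25, 10.0, 20.0, 80.0])  # hypothetical fares
edges = [-float("inf"), 7.91, 14.454, 31, float("inf")]
fare_codes = pd.cut(fares, bins=edges, labels=False)
print(fare_codes.tolist())
```

One practical difference: pd.cut leaves the original column untouched, whereas the .loc chain overwrites Fare in place and therefore depends on the order of the assignments.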

After these steps, some variables are no longer needed and can be dropped:

#Feature selection

drop_elements = ['PassengerId', 'Name', 'Ticket', 'Cabin', 'SibSp']

train = train.drop(drop_elements, axis = 1)

train = train.drop(['CategoricalAge', 'CategoricalFare'], axis = 1)

test = test.drop(drop_elements, axis = 1)

Now look at the first three rows of the training set:

train.head(3)

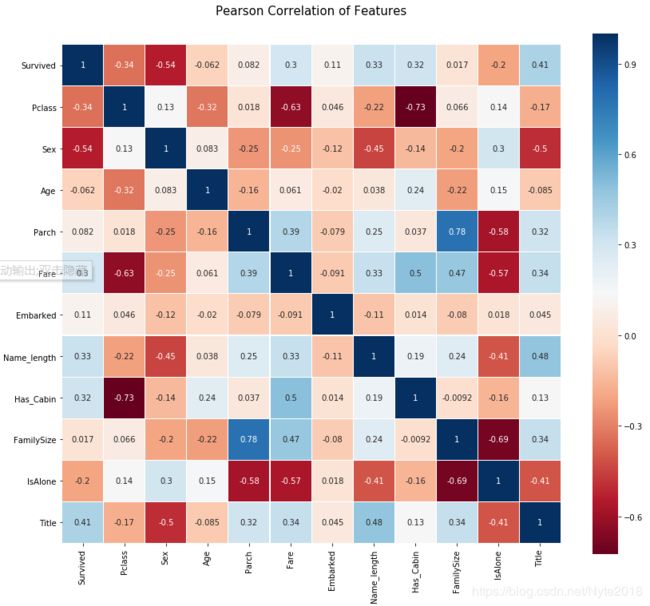

3. Visualization

Compute the pairwise correlations between the variables in the dataset and show them as a heatmap:

colormap = plt.cm.RdBu

plt.figure(figsize=(14,12))

plt.title('Pearson Correlation of Features', y=1.05, size=15)

sns.heatmap(train.astype(float).corr(),linewidths=0.1,vmax=1.0,

square=True, cmap=colormap, linecolor='white', annot=True)

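As the heatmap shows, few variables are strongly correlated with one another. This suggests the features can all be used to train the models, since there is little redundant information. The two most strongly correlated variables here are FamilySize and Parch.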
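The FamilySize and Parch correlation is expected, because FamilySize is built from Parch. The sketch below reproduces that effect on a tiny, made-up table (the numbers are hypothetical); DataFrame.corr computes the Pearson correlation by default, which is the same matrix the heatmap visualizes.

```python
import pandas as pd

# Hypothetical passenger counts, for illustration only
df = pd.DataFrame({
    "SibSp": [1, 0, 0, 3, 1, 0],
    "Parch": [0, 0, 2, 1, 5, 0],
})
# Same construction as in the feature engineering step
df["FamilySize"] = df["SibSp"] + df["Parch"] + 1

corr = df.corr()  # Pearson correlation matrix
family_parch = corr.loc["FamilySize", "Parch"]
print(corr.round(2))
```

A derived feature is correlated with its inputs by construction, so a high value here says little about the passengers themselves.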

Draw a pairplot to see how each pair of variables is distributed:

4. Model Ensembling

First write a SklearnHelper class, which lets us extend the built-in methods common to all sklearn classifiers (such as train, predict and fit):

#Some useful parameters which will come in handy later on

ntrain = train.shape[0]

ntest = test.shape[0]

SEED = 0 #for reproducibility

NFOLDS = 5 #set folds for out-of-fold prediction

kf = KFold(n_splits= NFOLDS, random_state=SEED,shuffle=True)

#Class to extend the Sklearn classifier

class SklearnHelper(object):

    def __init__(self, clf, seed=0, params=None):

        # Copy so the caller's dict is not modified, and tolerate params=None

        params = dict(params or {})

        params['random_state'] = seed

        self.clf = clf(**params)

    def train(self, x_train, y_train):

        self.clf.fit(x_train, y_train)

    def predict(self, x):

        return self.clf.predict(x)

    def fit(self,x,y):

        return self.clf.fit(x,y)

    def feature_importances(self,x,y):

        print(self.clf.fit(x,y).feature_importances_)

#Class to extend XGBoost classifier

Define the out-of-fold prediction function:

def get_oof(clf, x_train, y_train, x_test):

    oof_train = np.zeros((ntrain,))

    oof_test = np.zeros((ntest,))

    oof_test_skf = np.empty((NFOLDS, ntest))

    # KFold from sklearn.model_selection is not iterable; split() yields the index pairs

    for i, (train_index, test_index) in enumerate(kf.split(x_train)):

        x_tr = x_train[train_index]

        y_tr = y_train[train_index]

        x_te = x_train[test_index]

        clf.train(x_tr, y_tr)

        oof_train[test_index] = clf.predict(x_te)

        oof_test_skf[i, :] = clf.predict(x_test)

    oof_test[:] = oof_test_skf.mean(axis=0)

    return oof_train.reshape(-1, 1), oof_test.reshape(-1, 1)
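The out-of-fold idea can be seen on a toy problem: every training row is predicted by a model that never saw that row, and the test rows are predicted once per fold and then averaged. The sketch below is a self-contained illustration under my own assumptions (random synthetic data, a small decision tree as the base model); it is not the kernel's code.

```python
import numpy as np
from sklearn.model_selection import KFold
from sklearn.tree import DecisionTreeClassifier

rng = np.random.RandomState(0)
X = rng.rand(50, 3)                  # hypothetical training features
y = (X[:, 0] > 0.5).astype(int)      # hypothetical binary target
X_new = rng.rand(10, 3)              # hypothetical test features

n_folds = 5
kf = KFold(n_splits=n_folds, shuffle=True, random_state=0)

oof_train = np.zeros(len(X))
oof_test_folds = np.empty((n_folds, len(X_new)))

for i, (fit_idx, holdout_idx) in enumerate(kf.split(X)):
    model = DecisionTreeClassifier(max_depth=2, random_state=0)
    model.fit(X[fit_idx], y[fit_idx])
    # Each training row is predicted only by the model that did not train on it
    oof_train[holdout_idx] = model.predict(X[holdout_idx])
    # Every fold's model also predicts the full test set
    oof_test_folds[i, :] = model.predict(X_new)

# Average the per-fold test predictions into one column
oof_test = oof_test_folds.mean(axis=0)
print(oof_train.shape, oof_test.shape)
```

Because the test column is an average of class labels over folds, it can contain fractions such as 0.6, while the training column contains only 0 and 1.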

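5. Base Models

The following classifiers are used: Random Forest, Extra Trees, AdaBoost, Gradient Boosting and SVM.

About the parameters:

(1) n_jobs: number of cores used during training; -1 means all cores are used.

(2) n_estimators: number of classification trees in the model (the default was 10 in older scikit-learn releases and is 100 since version 0.22).

(3) max_depth: maximum depth of a tree; setting it too high leads to overfitting.

(4) verbose: controls whether text is output during training. A value of 0 suppresses all text, and a value of 3 outputs the tree learning process at every iteration.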

#Put in our parameters for said classifiers

#Random Forest parameters

rf_params = {

'n_jobs': -1,

'n_estimators': 500,

'warm_start': True,

#'max_features': 0.2,

'max_depth': 6,

'min_samples_leaf': 2,

'max_features' : 'sqrt',

'verbose': 0

}

#Extra Trees Parameters

et_params = {

'n_jobs': -1,

'n_estimators':500,

#'max_features': 0.5,

'max_depth': 8,

'min_samples_leaf': 2,

'verbose': 0

}

#AdaBoost parameters

ada_params = {

'n_estimators': 500,

'learning_rate' : 0.75

}

#Gradient Boosting parameters

gb_params = {

'n_estimators': 500,

#'max_features': 0.2,

'max_depth': 5,

'min_samples_leaf': 2,

'verbose': 0

}

#Support Vector Classifier parameters

svc_params = {

'kernel' : 'linear',

'C' : 0.025

}

Create the five model objects with the SklearnHelper class defined earlier:

#Create 5 objects that represent our 5 models

rf = SklearnHelper(clf=RandomForestClassifier, seed=SEED, params=rf_params)

et = SklearnHelper(clf=ExtraTreesClassifier, seed=SEED, params=et_params)

ada = SklearnHelper(clf=AdaBoostClassifier, seed=SEED, params=ada_params)

gb = SklearnHelper(clf=GradientBoostingClassifier, seed=SEED, params=gb_params)

svc = SklearnHelper(clf=SVC, seed=SEED, params=svc_params)

Convert the datasets to NumPy arrays:

#Create Numpy arrays of train, test and target ( Survived) dataframes to feed into our models

y_train = train['Survived'].to_numpy() # Series.ravel() is deprecated in recent pandas

train = train.drop(['Survived'], axis=1)

x_train = train.values # Creates an array of the train data

x_test = test.values # Creates an array of the test data

Now feed the data to the five base classifiers and collect their out-of-fold predictions:

#Create our OOF train and test predictions. These base results will be used as new features

et_oof_train, et_oof_test = get_oof(et, x_train, y_train, x_test) # Extra Trees

rf_oof_train, rf_oof_test = get_oof(rf,x_train, y_train, x_test) # Random Forest

ada_oof_train, ada_oof_test = get_oof(ada, x_train, y_train, x_test) # AdaBoost

gb_oof_train, gb_oof_test = get_oof(gb,x_train, y_train, x_test) # Gradient Boost

svc_oof_train, svc_oof_test = get_oof(svc,x_train, y_train, x_test) # Support Vector Classifier

Print the feature importances of the models:

rf_feature = rf.feature_importances(x_train,y_train)

et_feature = et.feature_importances(x_train, y_train)

ada_feature = ada.feature_importances(x_train, y_train)

gb_feature = gb.feature_importances(x_train,y_train)

[0.10380105 0.20962742 0.03501215 0.02017078 0.04806419 0.02894929

0.12963927 0.04875585 0.07153658 0.01171714 0.29272627]

[0.12005454 0.37744389 0.03198743 0.01684555 0.05412797 0.02901463

0.0455709 0.08438315 0.04447681 0.02188982 0.17420532]

[0.034 0.012 0.02 0.068 0.042 0.01 0.674 0.014 0.05 0.006 0.07 ]

[0.08720304 0.01257659 0.04739033 0.01349999 0.0514999 0.02508658

0.17069105 0.03810338 0.11514459 0.00868216 0.4301224 ]

Since feature_importances only prints the values, store them by hand:

rf_features = [0.10380105, 0.20962742, 0.03501215, 0.02017078, 0.04806419, 0.02894929,

0.12963927, 0.04875585, 0.07153658, 0.01171714, 0.29272627]

et_features = [0.12005454, 0.37744389, 0.03198743, 0.01684555, 0.05412797, 0.02901463,

0.0455709, 0.08438315, 0.04447681, 0.02188982, 0.17420532]

ada_features = [0.034, 0.012, 0.02, 0.068, 0.042, 0.01, 0.674, 0.014, 0.05, 0.006, 0.07]

gb_features = [0.08720304, 0.01257659, 0.04739033, 0.01349999, 0.0514999, 0.02508658,

0.17069105, 0.03810338, 0.11514459, 0.00868216, 0.4301224 ]

Create a dataframe of the feature importances:

cols = train.columns.values

#Create a dataframe with features

feature_dataframe = pd.DataFrame( {'features': cols,

'Random Forest feature importances': rf_features,

'Extra Trees feature importances': et_features,

'AdaBoost feature importances': ada_features,

'Gradient Boost feature importances': gb_features

})

Plot the feature importances as interactive scatter plots:

# Scatter plot

trace = go.Scatter(

y = feature_dataframe['Random Forest feature importances'].values,

x = feature_dataframe['features'].values,

mode='markers',

marker=dict(

sizemode = 'diameter',

sizeref = 1,

size = 25,

# size= feature_dataframe['AdaBoost feature importances'].values,

#color = np.random.randn(500), #set color equal to a variable

color = feature_dataframe['Random Forest feature importances'].values,

colorscale='Portland',

showscale=True

),

text = feature_dataframe['features'].values

)

data = [trace]

layout= go.Layout(

autosize= True,

title= 'Random Forest Feature Importance',

hovermode= 'closest',

# xaxis= dict(

# title= 'Pop',

# ticklen= 5,

# zeroline= False,

# gridwidth= 2,

# ),

yaxis=dict(

title= 'Feature Importance',

ticklen= 5,

gridwidth= 2

),

showlegend= False

)

fig = go.Figure(data=data, layout=layout)

py.iplot(fig,filename='scatter2010')

# Scatter plot

trace = go.Scatter(

y = feature_dataframe['Extra Trees feature importances'].values,

x = feature_dataframe['features'].values,

mode='markers',

marker=dict(

sizemode = 'diameter',

sizeref = 1,

size = 25,

# size= feature_dataframe['AdaBoost feature importances'].values,

#color = np.random.randn(500), #set color equal to a variable

color = feature_dataframe['Extra Trees feature importances'].values,

colorscale='Portland',

showscale=True

),

text = feature_dataframe['features'].values

)

data = [trace]

layout= go.Layout(

autosize= True,

title= 'Extra Trees Feature Importance',

hovermode= 'closest',

# xaxis= dict(

# title= 'Pop',

# ticklen= 5,

# zeroline= False,

# gridwidth= 2,

# ),

yaxis=dict(

title= 'Feature Importance',

ticklen= 5,

gridwidth= 2

),

showlegend= False

)

fig = go.Figure(data=data, layout=layout)

py.iplot(fig,filename='scatter2010')

# Scatter plot

trace = go.Scatter(

y = feature_dataframe['AdaBoost feature importances'].values,

x = feature_dataframe['features'].values,

mode='markers',

marker=dict(

sizemode = 'diameter',

sizeref = 1,

size = 25,

# size= feature_dataframe['AdaBoost feature importances'].values,

#color = np.random.randn(500), #set color equal to a variable

color = feature_dataframe['AdaBoost feature importances'].values,

colorscale='Portland',

showscale=True

),

text = feature_dataframe['features'].values

)

data = [trace]

layout= go.Layout(

autosize= True,

title= 'AdaBoost Feature Importance',

hovermode= 'closest',

# xaxis= dict(

# title= 'Pop',

# ticklen= 5,

# zeroline= False,

# gridwidth= 2,

# ),

yaxis=dict(

title= 'Feature Importance',

ticklen= 5,

gridwidth= 2

),

showlegend= False

)

fig = go.Figure(data=data, layout=layout)

py.iplot(fig,filename='scatter2010')

# Scatter plot

trace = go.Scatter(

y = feature_dataframe['Gradient Boost feature importances'].values,

x = feature_dataframe['features'].values,

mode='markers',

marker=dict(

sizemode = 'diameter',

sizeref = 1,

size = 25,

# size= feature_dataframe['AdaBoost feature importances'].values,

#color = np.random.randn(500), #set color equal to a variable

color = feature_dataframe['Gradient Boost feature importances'].values,

colorscale='Portland',

showscale=True

),

text = feature_dataframe['features'].values

)

data = [trace]

layout= go.Layout(

autosize= True,

title= 'Gradient Boosting Feature Importance',

hovermode= 'closest',

# xaxis= dict(

# title= 'Pop',

# ticklen= 5,

# zeroline= False,

# gridwidth= 2,

# ),

yaxis=dict(

title= 'Feature Importance',

ticklen= 5,

gridwidth= 2

),

showlegend= False

)

fig = go.Figure(data=data, layout=layout)

py.iplot(fig,filename='scatter2010')

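Compute the mean importance of each feature across the models and look at the first three rows:

#Create the new column containing the average of values

feature_dataframe['mean'] = feature_dataframe.mean(axis=1, numeric_only=True) # row-wise mean; numeric_only skips the text column 'features'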

feature_dataframe.head(3)

y = feature_dataframe['mean'].values

x = feature_dataframe['features'].values

data = [go.Bar(

x= x,

y= y,

width = 0.5,

marker=dict(

color = feature_dataframe['mean'].values,

colorscale='Portland',

showscale=True,

reversescale = False

),

opacity=0.6

)]

layout= go.Layout(

autosize= True,

title= 'Barplots of Mean Feature Importance',

hovermode= 'closest',

# xaxis= dict(

# title= 'Pop',

# ticklen= 5,

# zeroline= False,

# gridwidth= 2,

# ),

yaxis=dict(

title= 'Feature Importance',

ticklen= 5,

gridwidth= 2

),

showlegend= False

)

fig = go.Figure(data=data, layout=layout)

py.plot(fig, filename='bar-direct-labels')

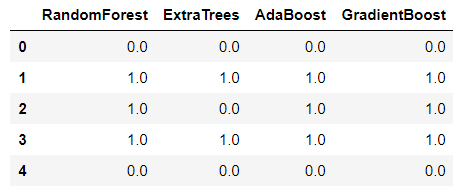

Second-level predictions from the first-level output, where the first-level output serves as new features:

base_predictions_train = pd.DataFrame( {'RandomForest': rf_oof_train.ravel(),

'ExtraTrees': et_oof_train.ravel(),

'AdaBoost': ada_oof_train.ravel(),

'GradientBoost': gb_oof_train.ravel()

})

base_predictions_train.head()

data = [

go.Heatmap(

z= base_predictions_train.astype(float).corr().values ,

x=base_predictions_train.columns.values,

y= base_predictions_train.columns.values,

colorscale='Viridis',

showscale=True,

reversescale = True

)

]

py.plot(data, filename='labelled-heatmap')

Concatenate the first-level predictions column-wise into the new training and test feature matrices:

x_train = np.concatenate(( et_oof_train, rf_oof_train, ada_oof_train, gb_oof_train, svc_oof_train), axis=1)

x_test = np.concatenate(( et_oof_test, rf_oof_test, ada_oof_test, gb_oof_test, svc_oof_test), axis=1)
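What the concatenation produces is simply one column per base model. The sketch below uses two made-up prediction columns (hypothetical values, two models instead of five) to show the resulting shape and how each row now holds the base models' votes for one passenger.

```python
import numpy as np

# Hypothetical out-of-fold predictions from two base models, as column vectors
oof_a_train = np.array([0, 1, 1, 0, 1, 0], dtype=float).reshape(-1, 1)
oof_b_train = np.array([0, 1, 0, 0, 1, 1], dtype=float).reshape(-1, 1)

# axis=1 places the columns side by side: one row per sample, one column per model
stacked_train = np.concatenate((oof_a_train, oof_b_train), axis=1)

print(stacked_train.shape)
print(stacked_train[2])  # the two models disagree on this sample
```

The second-level model then learns from rows like these how much weight to give each base model.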

Fit the second-level model with XGBoost:

gbm = xgb.XGBClassifier(

#learning_rate = 0.02,

n_estimators= 2000,

max_depth= 4,

min_child_weight= 2,

#gamma=1,

gamma=0.9,

subsample=0.8,

colsample_bytree=0.8,

objective= 'binary:logistic',

nthread= -1,

scale_pos_weight=1).fit(x_train, y_train)

predictions = gbm.predict(x_test)

Finally, generate the submission file:

# Generate Submission File

StackingSubmission = pd.DataFrame({ 'PassengerId': PassengerId,

'Survived': predictions })

StackingSubmission.to_csv("StackingSubmission.csv", index=False)