图像语义分割:从头开始训练DeepLab-LargeFOV

DeepLabv1安装及调试

前言:所谓DeepLab-LargeFOV就是DeepLab v1

论文:Semantic Images Segmentation With Deep Convolutional Nets And Fully Connected CRFs

介绍:在DCNN进行分割任务时,有两个瓶颈,一个是下采样所导致的信息丢失,通过带孔卷积的方法解决;另一个是空间不变性所导致的边缘不够准确,通过全连接的CRF解决。(CRF是可以通过底层特征进行分割的一个方法)。

核心工作:空洞卷积 + 如何降低计算量 + CRF作为后处理

1.调试环境

博主是在Ubuntu+docker条件下跑的,具体参数为:

GeForce GTX 1060 6GB

Ubuntu 16.04

Docker version 18.09.1

CUDA Version 8.0.61

NVIDIA Driver Version: 384.145

2.环境安装

1)docker镜像安装

$ docker pull rysenko/deeplab

挂载本地路径运行

$ sudo nvidia-docker run -it -v /home/ubuntu:/Deeplab rysenko/deeplab bash

2)PASCAL VOC2012 & Augmented PASCAL VOC2012下载

3)修改run.py中VOC2012数据集的路径

DATA_ROOT=' /Deeplab/datasets/VOCdevkit/VOC2012'

4)修改python/my_script/tools.py(原链接已失效)

#修改caffemodel的url链接

url = 'http://www.cs.jhu.edu/~alanlab/ccvl/init_models/vgg16_20M.caffemodel'

#修改caffe路径

CAFFE_DIR='/Deeplab/DeepLab-Context/code' #docker_caffe

CAFFE_BIN='.build_release/tools/caffe.bin' #caffe.bin

5)matio安装:

$ pip install wget

$ wget http://sourceforge.net/projects/matio/files/matio/1.5.2/

$ cd matio-1.5.13

$ ./configure

$ make

$ make check

$ make install

$ sudo ldconfig

6)Compile caffe

①修改Makefile.config

#需要把/opt/caffe中的文件拷贝到/DeepLab-Context/code后再编译

$ cp -r /opt/caffe/* /Deeplab/DeepLab-Context/code

$ cd /Deeplab/DeepLab-Context/code

$ cp Makefile.config.example Makefile.config

$ vim Makefile.config

#以下是对Makefile.config的修改

1. INCLUDE_DIRS := $(PYTHON_INCLUDE) /usr/local/include /usr/include/hdf5/serial/

2. LIBRARY_DIRS := $(PYTHON_LIB) /usr/local/lib /usr/lib /usr/lib/x86_64-linux-gnumake

3. # Uncomment the *_50 lines

这里博主把Makefile.config部分贴出来供于参考(其余部分不做修改)

# Makefile.config

# ......

# CUDA architecture setting: going with all of them (up to CUDA 5.5 compatible).

# For the latest architecture, you need to install CUDA >= 6.0 and uncomment

# the *_50 lines below.

CUDA_ARCH := -gencode arch=compute_20,code=sm_20 \

-gencode arch=compute_20,code=sm_21 \

-gencode arch=compute_30,code=sm_30 \

-gencode arch=compute_35,code=sm_35 \

-gencode arch=compute_50,code=sm_50 \

-gencode arch=compute_50,code=compute_50

# ......

# Whatever else you find you need goes here.

INCLUDE_DIRS := $(PYTHON_INCLUDE) /usr/local/include /usr/include/hdf5/serial

LIBRARY_DIRS := $(PYTHON_LIB) /usr/local/lib /usr/lib /usr/lib/x86_64-linux-gnumake

# ......

②对hdf5进行链接

$ cd /usr/lib/x86_64-linux-gnu

$ ln -s libhdf5_serial.so.10.1.0 libhdf5.so

$ ln -s libhdf5_serial_hl.so.10.0.2 libhdf5_hl.so

$ make all -j16

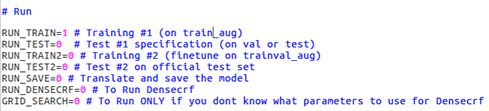

3.Running the code

$ python run.py

#可修改/DeepLab-Context/voc12/config/vgg128_noup/train.prototxt中的batch_size

#以防出现out of memory 的情况,这里我们令batch_size = 4

#根据实际要求修改以下参数

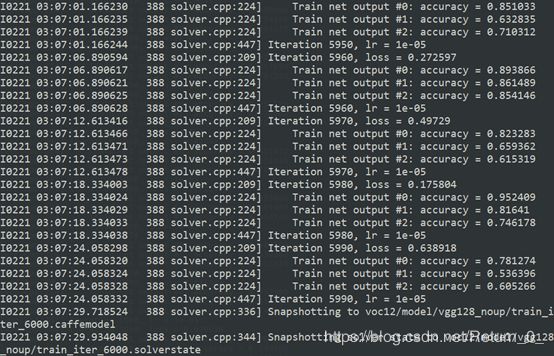

4.运行效果

参考

https://blog.csdn.net/Xmo_jiao/article/details/77897109

https://zhuanlan.zhihu.com/p/34562137

https://blog.csdn.net/gzq0723/article/details/80153849